Python crawler: downloading images from Baidu Tieba

Outline the crawling workflow

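- Page through the forum by hand, observe the URL structure, and build a list of URLs.

- Send the HTTP requests.

- Parse the responses and extract the data we need.

- Store the data.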
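The four steps can be sketched as a small pipeline. This is a hypothetical skeleton, not the author's code: `crawl`, `fetch`, `parse` and `store` are illustrative names, and the step functions are passed in as arguments so the flow can be exercised offline with stand-ins.

```python
from typing import Callable, Iterable, List

def crawl(urls: Iterable[str],
          fetch: Callable[[str], str],
          parse: Callable[[str], List[str]],
          store: Callable[[str], None]) -> int:
    """Run the four crawl steps and return how many items were stored."""
    count = 0
    for url in urls:              # step 1: URLs built beforehand
        page = fetch(url)         # step 2: send the request
        for item in parse(page):  # step 3: extract the data
            store(item)           # step 4: persist it
            count += 1
    return count

if __name__ == "__main__":
    # Offline stand-ins for the real HTTP, parsing and saving functions.
    fake_pages = {"u1": "a,b", "u2": "c"}
    saved = []
    n = crawl(["u1", "u2"], fake_pages.get, lambda s: s.split(","), saved.append)
    print(n, saved)  # 3 ['a', 'b', 'c']
```

In the real crawler, `fetch` and `parse` correspond to the request and XPath steps, and `store` to writing image files.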

1. Observe the URL and build the URL list

Page 1: https://tieba.baidu.com/f?kw=图片&ie=utf-8&pn=0

Page 2: https://tieba.baidu.com/f?kw=图片&ie=utf-8&pn=50

Page 3: https://tieba.baidu.com/f?kw=图片&ie=utf-8&pn=100

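Here `ie=utf-8` specifies UTF-8 as the character set; it does not change which page is returned, so it can be ignored when working out the URL pattern.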
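To see the parameters the browser actually sends, one of the observed URLs can be taken apart with the standard library's `urllib.parse`. This sketch only inspects the URL and makes no request; when the request does go out, the Chinese forum name 图片 travels percent-encoded as UTF-8, which `quote` reproduces.

```python
from urllib.parse import parse_qs, quote, urlsplit

observed = "https://tieba.baidu.com/f?kw=图片&ie=utf-8&pn=50"

parts = urlsplit(observed)
params = parse_qs(parts.query)  # each value comes back as a list of strings
print(parts.path)               # /f
print(params)                   # {'kw': ['图片'], 'ie': ['utf-8'], 'pn': ['50']}
print(quote("图片"))             # %E5%9B%BE%E7%89%87
```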

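So `kw` is the forum (tieba) name and `pn` encodes the page number, except that it grows by 50 per page: page n corresponds to pn = (n - 1) × 50.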
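The page/`pn` relationship can be written as a pair of helpers. `page_to_pn` and `pn_to_page` are illustrative names; the step of 50 is inferred from the three URLs above and would need updating if Tieba changed its page size.

```python
PAGE_SIZE = 50  # step between pages, inferred from pn=0, 50, 100

def page_to_pn(page: int) -> int:
    """Page 1 -> pn=0, page 2 -> pn=50, page 3 -> pn=100, ..."""
    if page < 1:
        raise ValueError("page numbers start at 1")
    return (page - 1) * PAGE_SIZE

def pn_to_page(pn: int) -> int:
    """Inverse mapping: pn=100 -> page 3."""
    return pn // PAGE_SIZE + 1

print([page_to_pn(p) for p in (1, 2, 3)])  # [0, 50, 100]
print(pn_to_page(100))                     # 3
```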

Next, build the URL list:

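```python
from urllib.parse import urlencode

def url_list(tieba_name, start_page, end_page):
    """Build the list-page URLs for pages start_page..end_page (inclusive)."""
    base_url = "https://tieba.baidu.com/f?"
    urllist = []
    for page in range(start_page - 1, end_page):
        pn = page * 50  # page 1 -> pn=0, page 2 -> pn=50, ...
        # urlencode percent-encodes non-ASCII forum names such as 图片
        link = base_url + urlencode({"kw": tieba_name, "ie": "utf-8", "pn": pn})
        urllist.append(link)
    return urllist
```

2. Send the requests and extract the data we need from the responses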

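```python
import requests
from lxml import etree

def get_html(url, path):
    """Request url and return the matches of the XPath expression path."""
    response = requests.get(url, timeout=10)  # timeout so a stalled request cannot hang the crawler
    response.raise_for_status()               # fail loudly on 4xx/5xx instead of parsing an error page
    html = response.text
    content = etree.HTML(html)                # parse (and auto-repair) the HTML
    link_list = content.xpath(path)
    return link_list
```

`etree.HTML()` builds an element tree that can be queried with XPath from the HTML text, automatically repairing malformed markup along the way.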
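A quick offline check of that auto-repair: the made-up fragment below leaves tags unclosed and has no `<html>`/`<body>` wrapper, yet `etree.HTML` still returns a complete tree that XPath can query.

```python
from lxml import etree

# Deliberately malformed: no <html>/<body>, and the last <a> and the <div> are never closed.
broken = "<div><a href='/p/1'>one</a><a href='/p/2'>two"

root = etree.HTML(broken)
print(root.tag)                           # html (wrapper added by the parser)
print(root.xpath("//a/@href"))            # ['/p/1', '/p/2']
print(len(root.xpath("/html/body/div")))  # 1
```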

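`content.xpath('//a[@class="j_th_tit "]/@href')` selects the `href` value of every `<a>` element whose `class` is exactly `j_th_tit ` (note the trailing space), e.g. `/p/6130848813`. Prepending `https://tieba.baidu.com` to this value gives the URL of the thread.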
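Because `@class="..."` compares the whole attribute string, the trailing space matters. The sketch below runs on a made-up snippet that mimics a thread link; it shows the exact match, a more tolerant `contains()` variant, and `urljoin` as an alternative to string concatenation for building the thread URL.

```python
from urllib.parse import urljoin
from lxml import etree

html = '<a class="j_th_tit " href="/p/6130848813">title</a>'
root = etree.HTML(html)

exact = root.xpath('//a[@class="j_th_tit "]/@href')               # ['/p/6130848813']
no_space = root.xpath('//a[@class="j_th_tit"]/@href')             # [] (class differs)
tolerant = root.xpath('//a[contains(@class, "j_th_tit")]/@href')  # ['/p/6130848813']

thread_url = urljoin("https://tieba.baidu.com", exact[0])
print(thread_url)  # https://tieba.baidu.com/p/6130848813
```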

3. Store the data

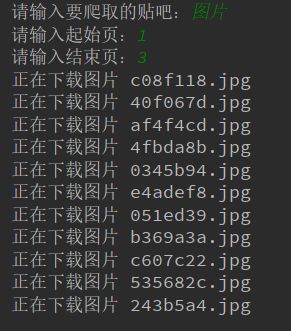

Using each image's link address, download the images one by one and save them locally.

```python
import os
from urllib.parse import urlsplit

import requests

def download_image(url):
    """Download every image posted in the threads listed on one list page."""
    link = get_html(url, '//a[@class="j_th_tit "]/@href')
    path = os.path.join(os.getcwd(), "img")
    os.makedirs(path, exist_ok=True)  # create the img folder if it does not exist
    for link_tiezi in link:
        fulllink = "https://tieba.baidu.com" + link_tiezi
        link_tiezi_image = get_html(fulllink, '//img[@class="BDE_Image"]/@src')
        for link_image in link_tiezi_image:
            img = requests.get(link_image, timeout=10).content
            # use the last segment of the URL path as the file name
            filename = os.path.basename(urlsplit(link_image).path)
            print("Downloading image", filename)
            with open(os.path.join(path, filename), "wb") as f:
                f.write(img)
```
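Saving an image comes down to choosing a file name and writing the response bytes in binary mode. A fixed-length slice such as `url[-11:]` can pick up part of the directory path when the file name is short; taking the last path segment avoids that. The helpers `image_filename` and `save_image` below are hypothetical, and the URL is made up.

```python
import os
import tempfile
from urllib.parse import urlsplit

def image_filename(image_url: str) -> str:
    """Last segment of the URL path; any query string is ignored."""
    return os.path.basename(urlsplit(image_url).path)

def save_image(data: bytes, image_url: str, folder: str) -> str:
    """Write image bytes to folder/<file name> and return the file path."""
    os.makedirs(folder, exist_ok=True)  # no error if the folder already exists
    path = os.path.join(folder, image_filename(image_url))
    with open(path, "wb") as f:         # binary mode, since images are bytes
        f.write(data)
    return path

if __name__ == "__main__":
    url = "https://example.com/a/b/ab.jpg"  # made-up URL with a short file name
    print(url[-11:])                        # /a/b/ab.jpg (slice includes directories)
    print(image_filename(url))              # ab.jpg
    with tempfile.TemporaryDirectory() as tmp:
        saved = save_image(b"\x89PNG", url, os.path.join(tmp, "img"))
        print(os.path.basename(saved))      # ab.jpg
```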

The complete code:

```python
#!/usr/bin/env python3

import os
from urllib.parse import urlencode, urlsplit

import requests
from lxml import etree


def url_list(tieba_name, start_page, end_page):
    """Build the list-page URLs for pages start_page..end_page (inclusive)."""
    base_url = "https://tieba.baidu.com/f?"
    urllist = []
    for page in range(start_page - 1, end_page):
        pn = page * 50  # page 1 -> pn=0, page 2 -> pn=50, ...
        link = base_url + urlencode({"kw": tieba_name, "ie": "utf-8", "pn": pn})
        urllist.append(link)
    return urllist


def get_html(url, path):
    """Request url and return the matches of the XPath expression path."""
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    content = etree.HTML(response.text)
    return content.xpath(path)


def download_image(url):
    """Download every image posted in the threads listed on one list page."""
    link = get_html(url, '//a[@class="j_th_tit "]/@href')
    path = os.path.join(os.getcwd(), "img")
    os.makedirs(path, exist_ok=True)  # create the img folder if it does not exist
    for link_tiezi in link:
        fulllink = "https://tieba.baidu.com" + link_tiezi
        link_tiezi_image = get_html(fulllink, '//img[@class="BDE_Image"]/@src')
        for link_image in link_tiezi_image:
            img = requests.get(link_image, timeout=10).content
            filename = os.path.basename(urlsplit(link_image).path)
            print("Downloading image", filename)
            with open(os.path.join(path, filename), "wb") as f:
                f.write(img)


if __name__ == "__main__":
    tieba_name = input("Enter the tieba (forum) to crawl: ")
    start_page = int(input("Enter the start page: "))
    end_page = int(input("Enter the end page: "))
    for imageurl in url_list(tieba_name, start_page, end_page):
        download_image(imageurl)
```