03 E-commerce Data Warehouse (User Behavior Data Warehouse, ODS/DWD Layers)


Preface: this article records and summarizes what I learned while working through an e-commerce data warehouse project.

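Table of Contents

- E-commerce Data Warehouse (User Behavior Data Warehouse, ODS/DWD Layers)
  - 1. Data Warehouse Layering Concepts
    - 1.1 Why Layer the Warehouse?
    - 1.2 Warehouse Layers
      - 1.2.1 Layer Structure Diagram
      - 1.2.2 Layer Descriptions
    - 1.3 Data Marts vs. Data Warehouses
  - 2. Preparing the Build Environment
    - 2.1 Installing Hive & MySQL
  - 3. Building the ODS Layer
    - 3.1 Creating the `gmall` Database
    - 3.2 ODS Raw Data Layer
      - 3.2.1 Creating the Startup Log Table
      - 3.2.2 Creating the Event Log Table
      - 3.2.3 Single vs. Double Quotes in Shell
      - 3.2.4 ODS Layer Data Loading Script
  - 4. Building the DWD Layer
    - 4.1 Parsing the DWD Startup Table
      - 4.1.1 Creating the Startup Table
      - 4.1.2 Loading Data into the Startup Table
      - 4.1.3 Verifying the Load
      - 4.1.4 DWD Startup Table Loading Script
    - 4.2 Parsing the DWD Event Table
      - 4.2.1 Creating the Base Detail Table
      - 4.2.2 Custom UDF (Parsing the Common Field cm)
      - 4.2.3 Custom UDTF (Parsing Individual Event Fields)
      - 4.2.4 Populating the Event Log Base Detail Table
      - 4.2.5 DWD Layer Parsing Script
    - 4.3 Deriving the DWD Event Tables
      - 4.3.1 Product Click Table
      - 4.3.2 Product Detail Page Table
      - 4.3.3 Product List Page Table
      - 4.3.4 Ad Table
      - 4.3.5 Notification Table
      - 4.3.6 User Foreground Activity Table
      - 4.3.7 User Background Activity Table
      - 4.3.8 Comment Table
      - 4.3.9 Favorites Table
      - 4.3.10 Like Table
      - 4.3.11 Error Log Table
      - 4.3.12 DWD Event Table Loading Script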

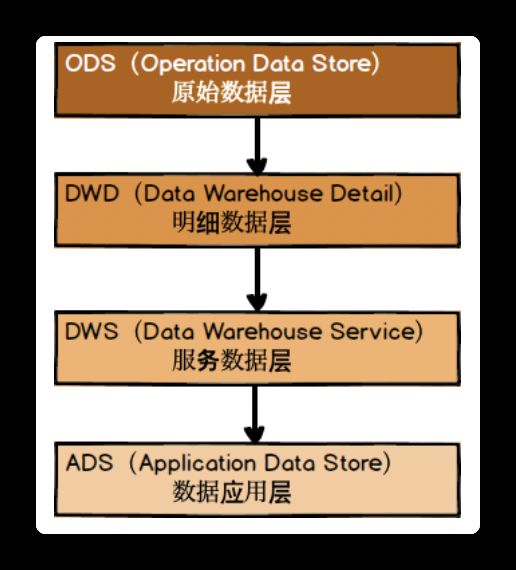

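1. Data Warehouse Layering Concepts

1.1 Why Layer the Warehouse?

- Simplify complex problems: break a complex task into several steps, with each layer handling a single step. Each step stays simple, and problems are easy to locate.

- Reduce duplicated development: a standardized layering with intermediate-layer data greatly reduces repeated computation, because one computed result can be reused many times.

- Isolate raw data: whether the concern is data anomalies or data sensitivity, the real (raw) data is decoupled from the statistical data.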

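1.2 Warehouse Layers

1.2.1 Layer Structure Diagram

1.2.2 Layer Descriptions

- ODS (Operation Data Store), the raw data layer: stores raw data. Raw logs and data are loaded directly and kept as-is, without processing.
- DWD (Data Warehouse Detail), the detail data layer: keeps the same structure and granularity as the raw tables, and cleans the ODS data (removing nulls, dirty data, and values outside valid ranges).
- DWS (Data Warehouse Service), the service data layer: lightly aggregates data on top of DWD.
- ADS (Application Data Store), the application data layer: provides data for the various statistical reports.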

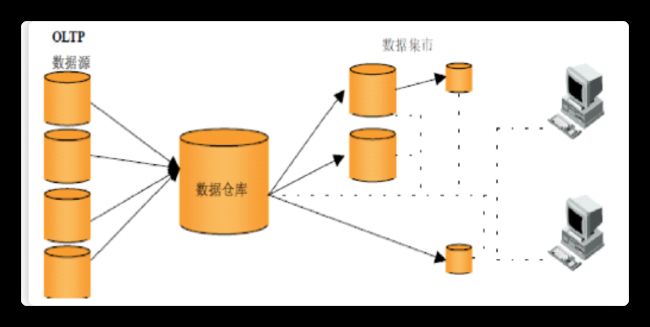
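To make the division of labour between the four layers concrete, here is a toy, in-memory Java sketch. It is only an illustration and not how the warehouse actually runs; there, each layer is a set of Hive tables populated by SQL. The `mid|area` record format, the class name `LayeredPipeline`, and the per-area count are all assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

public class LayeredPipeline {
    // ODS: raw lines kept exactly as received; this sketch assumes the format "mid|area"

    // DWD: drop blank or malformed lines, keeping one cleaned row per record
    static List<String[]> toDwd(List<String> ods) {
        List<String[]> dwd = new ArrayList<>();
        for (String line : ods) {
            if (line == null || line.trim().isEmpty()) {
                continue;
            }
            String[] fields = line.split("\\|");
            if (fields.length != 2 || fields[0].isEmpty() || fields[1].isEmpty()) {
                continue;
            }
            dwd.add(fields);
        }
        return dwd;
    }

    // DWS: light aggregation on top of DWD (here: record count per area)
    static Map<String, Integer> toDws(List<String[]> dwd) {
        Map<String, Integer> counts = new TreeMap<>();
        for (String[] row : dwd) {
            counts.merge(row[1], 1, Integer::sum);
        }
        return counts;
    }

    // ADS: turn the aggregate into report lines
    static List<String> toAds(Map<String, Integer> dws) {
        List<String> report = new ArrayList<>();
        dws.forEach((area, n) -> report.add(area + ": " + n));
        return report;
    }

    public static void main(String[] args) {
        List<String> ods = Arrays.asList("5|MX", "6|MX", "", "7|BR", "broken");
        System.out.println(toAds(toDws(toDwd(ods)))); // prints [BR: 1, MX: 2]
    }
}
```

The DWS result is kept separate from the report so that other ADS outputs could reuse it without re-cleaning the raw lines. This is the reuse argument from 1.1.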

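1.3 Data Marts vs. Data Warehouses

- Data mart: a miniature data warehouse. It usually holds less data, fewer subject areas, and less history, so it is department-level and generally serves only managers within a limited scope.

- Data warehouse: enterprise-level; it provides decision support to every department of the enterprise.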

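2. Preparing the Build Environment

2.1 Installing Hive & MySQL

- Install Hive & MySQL. Bookmarked for now; details will be added in later updates.

- Hive execution engine: Tez. Hive uses MapReduce as its execution engine by default. Tez is an alternative engine that performs better than MR, so switching the engine to Tez is recommended. The switching procedure will be covered in a later update.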

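3. Building the ODS Layer

3.1 Creating the gmall Database

- Data preparation (generate log data for 2020-05-11 and 2020-05-12):

```shell
dt 2020-5-11
lg.sh
dt 2020-5-12
lg.sh
```

- Start Hive:

```shell
bin/hive
```

- Create the gmall database:

```sql
create database gmall;
-- If the database already exists and contains data, force-drop it with:
-- drop database gmall cascade;
```

- Switch to the gmall database:

```sql
use gmall;
```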

3.2 ODS Raw Data Layer

3.2.1 Creating the Startup Log Table

- Raw data format:

```json
{
  "action": "1",
  "ar": "MX",
  "ba": "Sumsung",
  "detail": "",
  "en": "start",
  "entry": "4",
  "extend1": "",
  "g": "[email protected]",
  "hw": "750*1134",
  "l": "es",
  "la": "0.0",
  "ln": "-103.0",
  "loading_time": "2",
  "md": "sumsung-7",
  "mid": "5",
  "nw": "3G",
  "open_ad_type": "1",
  "os": "8.2.7",
  "sr": "W",
  "sv": "V2.2.2",
  "t": "1589116880895",
  "uid": "5",
  "vc": "4",
  "vn": "1.0.5"
}
```

- Create a partitioned table with LZO input and plain-text output. Its single string column holds the raw JSON line, which is parsed later:

```sql
-- Drop the table first if it already exists
drop table if exists ods_start_log;
-- Create an external table; its only column is the raw JSON line as a string
create external table ods_start_log (`line` string)
-- Partition by date
partitioned by (`dt` string)
-- LZO-compressed input, plain-text output (Lzo --> text)
stored as
  inputformat 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'
  outputformat 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
-- Storage location of the data
location '/warehouse/gmall/ods/ods_start_log';
```

- Load data:

```sql
load data inpath '/orgin_data/gmall/log/topic_start/2020-05-11' into table gmall.ods_start_log partition(dt='2020-05-11');
```

- Check that the data loaded:

```sql
select * from ods_start_log limit 2;
```

3.2.2 Creating the Event Log Table

- Raw data format. Each record is a single line: the server timestamp, a `|` separator, then the JSON body. It is pretty-printed here for readability:

```text
1589212831498|{
  "cm": {
    "ln": "-64.8",
    "sv": "V2.0.6",
    "os": "8.1.6",
    "g": "[email protected]",
    "mid": "6",
    "nw": "3G",
    "l": "pt",
    "vc": "6",
    "hw": "1080*1920",
    "ar": "MX",
    "uid": "6",
    "t": "1589203841317",
    "la": "-51.8",
    "md": "Huawei-18",
    "vn": "1.0.6",
    "ba": "Huawei",
    "sr": "Y"
  },
  "ap": "app",
  "et": [
    {
      "ett": "1589114083593",
      "en": "active_background",
      "kv": {
        "active_source": "2"
      }
    }
  ]
}
```

- Create a partitioned table with LZO input and plain-text output, again storing each raw line as one string for later parsing:

```sql
drop table if exists ods_event_log;
create external table ods_event_log (`line` string)
partitioned by (`dt` string)
stored as
  inputformat 'com.hadoop.mapred.DeprecatedLzoTextInputFormat'
  outputformat 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'
location '/warehouse/gmall/ods/ods_event_log';
```

- Load data:

```sql
load data inpath '/orgin_data/gmall/log/topic_event/2020-05-11' into table gmall.ods_event_log partition(dt='2020-05-11');
```

- Check that the data loaded:

```sql
select * from ods_event_log limit 2;
```

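3.2.3 Single vs. Double Quotes in Shell

- Single quotes: variables are not expanded.

- Double quotes: variables are expanded.

- Double quotes nested inside single quotes: variables are not expanded.

- Single quotes nested inside double quotes: variables are expanded.

- Backquotes (backticks): the enclosed command is executed and replaced by its output.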

3.2.4 ODS Layer Data Loading Script

- In /home/zgl/bin on hadoop101, create the script ods_log.sh:

```shell
#!/bin/bash

APP=gmall
hive=/opt/module/hive-1.2.1/bin/hive

# Use the date passed as the first argument; if none is given, use the day before today
# [ -n "$var" ] is true when the variable's value is non-empty
# (the spaces inside [ ] are required: [ is a command and ] is its last argument)
if [ -n "$1" ] ; then
    do_date=$1
else
    do_date=`date -d "-1 day" +%F`
fi

echo "=== Log date: $do_date ==="

sql="
load data inpath '/orgin_data/gmall/log/topic_start/$do_date' into table "$APP".ods_start_log partition(dt='$do_date');

load data inpath '/orgin_data/gmall/log/topic_event/$do_date' into table "$APP".ods_event_log partition(dt='$do_date');
"

$hive -e "$sql"
```

- Make the script executable:

```shell
chmod 777 ods_log.sh
```

- Run the script (loading the data for the 12th):

```shell
ods_log.sh 2020-05-12
```

- Check the loaded data:

```sql
select * from ods_start_log where dt='2020-05-12' limit 2;
select * from ods_event_log where dt='2020-05-12' limit 2;
```
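The script makes two decisions. It defaults the date to yesterday, and it splices that date into both LOAD DATA statements. If the daily load were triggered from a Java scheduler instead of cron, those two steps could be sketched as below. The class and method names are hypothetical. The `Clock` parameter exists only so the default date can be tested. `Clock.systemDefaultZone()` matches the local time zone that `date` uses.

```java
import java.time.Clock;
import java.time.LocalDate;

public class OdsLoadSql {
    // Mirrors: if [ -n "$1" ]; then do_date=$1; else do_date=`date -d "-1 day" +%F`; fi
    static String resolveDate(String arg, Clock clock) {
        if (arg != null && !arg.isEmpty()) {
            return arg;
        }
        // LocalDate.toString() prints ISO-8601 yyyy-MM-dd, the same shape as `date +%F`
        return LocalDate.now(clock).minusDays(1).toString();
    }

    // Rebuilds the two LOAD DATA statements that ods_log.sh passes to `hive -e`
    static String buildSql(String app, String doDate) {
        StringBuilder sb = new StringBuilder();
        for (String topic : new String[] {"start", "event"}) {
            sb.append(String.format(
                "load data inpath '/orgin_data/gmall/log/topic_%s/%s' into table %s.ods_%s_log partition(dt='%s');\n",
                topic, doDate, app, topic, doDate));
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        String doDate = resolveDate(args.length > 0 ? args[0] : null, Clock.systemDefaultZone());
        System.out.print(buildSql("gmall", doDate));
    }
}
```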

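4. Building the DWD Layer

This layer cleans the ODS data. That means removing nulls, dirty data, and out-of-range values, switching from row-oriented to columnar storage, changing the compression format, and so on.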

4.1 Parsing the DWD Startup Table

4.1.1 Creating the Startup Table

```sql
drop table if exists dwd_start_log;
create external table dwd_start_log (
    `mid_id` string,
    `user_id` string,
    `version_code` string,
    `version_name` string,
    `lang` string,
    `source` string,
    `os` string,
    `area` string,
    `model` string,
    `brand` string,
    `sdk_version` string,
    `gmail` string,
    `height_width` string,
    `app_time` string,
    `net_work` string,
    `lng` string,
    `lat` string,
    `entry` string,
    `open_ad_type` string,
    `action` string,
    `loading_time` string,
    `detail` string,
    `extend1` string
)
partitioned by (`dt` string)
location '/warehouse/gmall/dwd/dwd_start_log/';
```

4.1.2 Loading Data into the Startup Table

```sql
insert overwrite table dwd_start_log
partition(dt='2020-05-11')
select
    get_json_object(line, '$.mid') mid_id,
    get_json_object(line, '$.uid') user_id,
    get_json_object(line, '$.vc') version_code,
    get_json_object(line, '$.vn') version_name,
    get_json_object(line, '$.l') lang,
    get_json_object(line, '$.sr') source,
    get_json_object(line, '$.os') os,
    get_json_object(line, '$.ar') area,
    get_json_object(line, '$.md') model,
    get_json_object(line, '$.ba') brand,
    get_json_object(line, '$.sv') sdk_version,
    get_json_object(line, '$.g') gmail,
    get_json_object(line, '$.hw') height_width,
    get_json_object(line, '$.t') app_time,
    get_json_object(line, '$.nw') net_work,
    get_json_object(line, '$.ln') lng,
    get_json_object(line, '$.la') lat,
    get_json_object(line, '$.entry') entry,
    get_json_object(line, '$.open_ad_type') open_ad_type,
    get_json_object(line, '$.action') action,
    get_json_object(line, '$.loading_time') loading_time,
    get_json_object(line, '$.detail') detail,
    get_json_object(line, '$.extend1') extend1
from ods_start_log
where dt='2020-05-11';
```
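`get_json_object(line, '$.mid')` returns the value at that JSON path, or NULL when the key is absent. For the flat start log, that behaviour can be imitated in plain Java as below. This is a regex-based approximation with a hypothetical class name, written for illustration only. It is not how Hive implements the function. It handles only flat objects whose values are strings, and it returns escape sequences undecoded.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class FlatJsonField {
    // Returns the raw value of a top-level string field, or null when the key is absent
    // (mirroring get_json_object returning NULL for a missing key).
    // Only valid for flat objects with string values, such as the start log.
    static String get(String json, String key) {
        Pattern p = Pattern.compile(
            "\"" + Pattern.quote(key) + "\"\\s*:\\s*\"((?:[^\"\\\\]|\\\\.)*)\"");
        Matcher m = p.matcher(json);
        return m.find() ? m.group(1) : null;
    }

    public static void main(String[] args) {
        String line = "{\"action\":\"1\",\"ar\":\"MX\",\"l\":\"es\",\"la\":\"0.0\",\"mid\":\"5\",\"detail\":\"\"}";
        // The quotes around the key in the pattern keep "l" from matching "la"
        System.out.println(get(line, "mid") + " " + get(line, "l") + " " + get(line, "la") + " " + get(line, "uid"));
    }
}
```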

4.1.3 Verifying the Load

```sql
select * from dwd_start_log limit 2;
```

4.1.4 DWD Startup Table Loading Script

- In /home/zgl/bin/ on hadoop101, create the script dwd_start_log.sh:

```shell
#!/bin/bash

APP=gmall
hive=/opt/module/hive-1.2.1/bin/hive

# Use the date passed in; otherwise default to yesterday
if [ -n "$1" ] ; then
    do_date=$1
else
    do_date=`date -d "-1 day" +%F`
fi

sql="
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table "$APP".dwd_start_log
partition(dt='$do_date')
select
    get_json_object(line, '$.mid') mid_id,
    get_json_object(line, '$.uid') user_id,
    get_json_object(line, '$.vc') version_code,
    get_json_object(line, '$.vn') version_name,
    get_json_object(line, '$.l') lang,
    get_json_object(line, '$.sr') source,
    get_json_object(line, '$.os') os,
    get_json_object(line, '$.ar') area,
    get_json_object(line, '$.md') model,
    get_json_object(line, '$.ba') brand,
    get_json_object(line, '$.sv') sdk_version,
    get_json_object(line, '$.g') gmail,
    get_json_object(line, '$.hw') height_width,
    get_json_object(line, '$.t') app_time,
    get_json_object(line, '$.nw') net_work,
    get_json_object(line, '$.ln') lng,
    get_json_object(line, '$.la') lat,
    get_json_object(line, '$.entry') entry,
    get_json_object(line, '$.open_ad_type') open_ad_type,
    get_json_object(line, '$.action') action,
    get_json_object(line, '$.loading_time') loading_time,
    get_json_object(line, '$.detail') detail,
    get_json_object(line, '$.extend1') extend1
from "$APP".ods_start_log
where dt='$do_date';
"

$hive -e "$sql"
```

- Make the script executable:

```shell
chmod 777 dwd_start_log.sh
```

- Run it:

```shell
dwd_start_log.sh 2020-05-12
```

- Check that the data loaded:

```sql
select * from dwd_start_log where dt='2020-05-12' limit 2;
```

4.2 Parsing the DWD Event Table

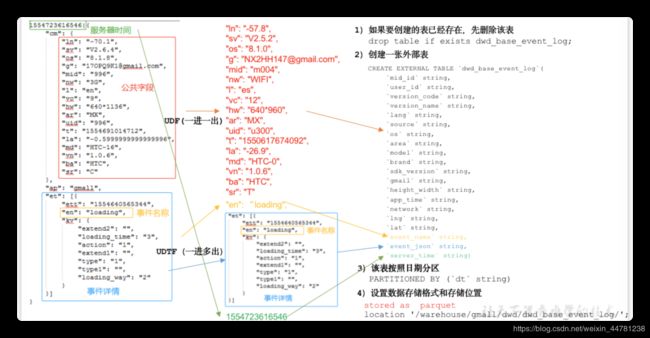

4.2.1 Creating the Base Detail Table

Table design analysis: see the figure above.

- Create the event log base detail table:

```sql
drop table if exists dwd_base_event_log;
create external table dwd_base_event_log (
    `mid_id` string,
    `user_id` string,
    `version_code` string,
    `version_name` string,
    `lang` string,
    `source` string,
    `os` string,
    `area` string,
    `model` string,
    `brand` string,
    `sdk_version` string,
    `gmail` string,
    `height_width` string,
    `app_time` string,
    `network` string,
    `lng` string,
    `lat` string,
    `event_name` string,
    `event_json` string,
    `server_time` string
)
partitioned by (`dt` string)
stored as parquet
location '/warehouse/gmall/dwd/dwd_base_event_log/';
```

As the figure above shows, populating this table requires two custom functions. A UDF splits out the common fields in cm, and a UDTF expands the et event array into one row per event.

4.2.2 Custom UDF (Parsing the Common Field cm)

- Create a Maven project: hive-function.

- Create the package com.guli.udf.

- Add the following to pom.xml:

```xml
<properties>
    <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    <hive.version>1.2.1</hive.version>
</properties>

<dependencies>
    <dependency>
        <groupId>org.apache.hive</groupId>
        <artifactId>hive-exec</artifactId>
        <version>${hive.version}</version>
    </dependency>
</dependencies>

<build>
    <plugins>
        <plugin>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>2.3.2</version>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
            </configuration>
        </plugin>
        <plugin>
            <artifactId>maven-assembly-plugin</artifactId>
            <configuration>
                <descriptorRefs>
                    <descriptorRef>jar-with-dependencies</descriptorRef>
                </descriptorRefs>
            </configuration>
            <executions>
                <execution>
                    <id>make-assembly</id>
                    <phase>package</phase>
                    <goals>
                        <goal>single</goal>
                    </goals>
                </execution>
            </executions>
        </plugin>
    </plugins>
</build>
```

- In the package com.guli.udf, create the class BaseFieldUDF to parse the common fields:

```java
package com.guli.udf;

import org.apache.commons.lang.StringUtils;
import org.apache.hadoop.hive.ql.exec.UDF;
import org.json.JSONException;
import org.json.JSONObject;

public class BaseFieldUDF extends UDF {

    public String evaluate(String line, String jsonKeysString) {
        StringBuilder sb = new StringBuilder();

        // Split jsonKeys into the individual keys
        String[] keys = jsonKeysString.split(",");

        // Validity check: a usable line is "server_time|json" with a non-blank JSON part
        if (StringUtils.isBlank(line)) {
            return "";
        }
        String[] logContents = line.split("\\|");
        if (logContents.length != 2 || StringUtils.isBlank(logContents[1])) {
            return "";
        }

        // Parse the JSON
        try {
            JSONObject jsonObject = new JSONObject(logContents[1]);

            // Get the common fields in cm
            JSONObject base = jsonObject.getJSONObject("cm");

            // Append each requested key's value, or an empty slot if it is missing, tab-separated
            for (String key : keys) {
                String fieldName = key.trim();
                if (base.has(fieldName)) {
                    sb.append(base.getString(fieldName)).append("\t");
                } else {
                    sb.append("\t");
                }
            }

            // Append the event array et as JSON text
            sb.append(jsonObject.getJSONArray("et").toString()).append("\t");

            // Append the server time
            sb.append(logContents[0]).append("\t");
        } catch (JSONException e) {
            e.printStackTrace();
            // Return "" for malformed JSON so the caller's <> '' filter drops the line
            return "";
        }

        return sb.toString();
    }
}
```
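The Hive query in 4.2.4 reads this UDF's result by position. `split(..., '\t')[0]` through `[16]` are the 17 cm keys, `[17]` is the et array, and `[18]` is the server time. That positional contract can be checked without Hive using the stdlib-only sketch below, which reproduces BaseFieldUDF's output layout. It assumes cm has already been parsed into a `Map`, whereas the real UDF uses org.json. The class name is hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

public class BaseFieldLayout {
    // Same output shape as BaseFieldUDF.evaluate: one slot per requested key, then et,
    // then server time, each followed by a tab. A missing key still gets an empty slot,
    // so every field keeps a fixed position.
    static String layout(Map<String, String> cm, String et, String serverTime, String jsonKeys) {
        StringBuilder sb = new StringBuilder();
        for (String key : jsonKeys.split(",")) {
            sb.append(cm.getOrDefault(key.trim(), "")).append('\t');
        }
        sb.append(et).append('\t');
        sb.append(serverTime).append('\t');
        return sb.toString();
    }

    public static void main(String[] args) {
        String keys = "mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la";
        Map<String, String> cm = new HashMap<>();
        cm.put("mid", "6");
        cm.put("la", "-51.8"); // "uid" and the other keys are deliberately left out
        String[] parts = layout(cm, "[]", "1589212831498", keys).split("\t", -1);
        // 17 cm keys occupy indexes 0..16, so et lands at 17 and server time at 18
        System.out.println(parts[0] + " | " + parts[16] + " | " + parts[17] + " | " + parts[18]);
    }
}
```

Because missing keys still produce an empty slot, a log line lacking, say, `uid` does not shift `la` or the event array into the wrong column.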

4.2.3 Custom UDTF (Parsing Individual Event Fields)

- Create the package com.guli.udtf.

- In the package com.guli.udtf, create the class EventJsonUDTF, which expands the multiple events of one log line into separate rows:

```java
package com.guli.udtf;

import org.apache.commons.lang.StringUtils;
import org.apache.hadoop.hive.ql.exec.UDFArgumentException;
import org.apache.hadoop.hive.ql.metadata.HiveException;
import org.apache.hadoop.hive.ql.udf.generic.GenericUDTF;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.ObjectInspectorFactory;
import org.apache.hadoop.hive.serde2.objectinspector.StructObjectInspector;
import org.apache.hadoop.hive.serde2.objectinspector.primitive.PrimitiveObjectInspectorFactory;
import org.json.JSONArray;
import org.json.JSONException;

import java.util.ArrayList;

public class EventJsonUDTF extends GenericUDTF {

    // Declare the names and types of the output columns
    @Override
    public StructObjectInspector initialize(StructObjectInspector argOIs) throws UDFArgumentException {
        ArrayList<String> fieldNames = new ArrayList<>();
        ArrayList<ObjectInspector> fieldOIs = new ArrayList<>();

        fieldNames.add("event_name");
        fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);
        fieldNames.add("event_json");
        fieldOIs.add(PrimitiveObjectInspectorFactory.javaStringObjectInspector);

        return ObjectInspectorFactory.getStandardStructObjectInspector(fieldNames, fieldOIs);
    }

    // One input record in, zero or more output rows out
    @Override
    public void process(Object[] objects) throws HiveException {
        // Null or blank et: forward nothing, which filters the record out
        if (objects[0] == null) {
            return;
        }
        String input = objects[0].toString();
        if (StringUtils.isBlank(input)) {
            return;
        }

        try {
            // Parse the event array
            JSONArray ja = new JSONArray(input);

            // Emit one row per event
            for (int i = 0; i < ja.length(); i++) {
                String[] result = new String[2];
                try {
                    // The event name
                    result[0] = ja.getJSONObject(i).getString("en");
                    // The whole event as JSON text
                    result[1] = ja.getJSONObject(i).toString();
                } catch (JSONException e) {
                    // Skip a malformed event and keep processing the rest
                    continue;
                }
                forward(result);
            }
        } catch (JSONException e) {
            e.printStackTrace();
        }
    }

    // Called when there are no more records to process; used for cleanup or extra output
    @Override
    public void close() throws HiveException {
    }
}
```

- Package the project.

- Upload the jar without dependencies to /opt/module/hive-1.2.1/ on hadoop101.

- Add the jar to Hive's classpath:

```
hive (gmall)> add jar /opt/module/hive-1.2.1/hive-function-1.0-SNAPSHOT.jar;
```

- Create temporary functions bound to the Java classes:

```
hive (gmall)> create temporary function base_analizer as 'com.guli.udf.BaseFieldUDF';
hive (gmall)> create temporary function flat_analizer as 'com.guli.udtf.EventJsonUDTF';
```

The added jar and the temporary functions last only for the current Hive session, which is why the 4.2.5 script repeats these statements.

4.2.4 Populating the Event Log Base Detail Table

- Parse the event log into the base detail table:

```sql
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_base_event_log partition(dt='2020-05-11')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    event_name, event_json, server_time
from (
    select
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[0] as mid_id,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[1] as user_id,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[2] as version_code,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[3] as version_name,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[4] as lang,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[5] as source,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[6] as os,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[7] as area,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[8] as model,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[9] as brand,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[10] as sdk_version,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[11] as gmail,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[12] as height_width,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[13] as app_time,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[14] as network,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[15] as lng,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[16] as lat,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[17] as ops,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[18] as server_time
    from ods_event_log
    where dt='2020-05-11'
      and base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la') <> ''
) sdk_log
lateral view flat_analizer(ops) tmp_k as event_name, event_json;
```

- Test:

```sql
select * from dwd_base_event_log limit 2;
```
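In the query above, the `sdk_log` subquery yields one row per log line. `lateral view flat_analizer(ops)` then turns that into one row per event. Each `(event_name, event_json)` pair the UDTF forwards is joined back to the common columns of the row that produced it. The stdlib-only sketch below shows that row multiplication. The events are pre-parsed and only `mid_id` is kept; both are simplifications, and the class names are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class LateralViewSketch {
    // One parsed element of the et array: its name (en) and its full JSON text
    static final class Event {
        final String name;
        final String json;
        Event(String name, String json) { this.name = name; this.json = json; }
    }

    // One row of the sdk_log subquery, reduced to a single common column plus its events
    static final class LogRow {
        final String midId;
        final List<Event> events;
        LogRow(String midId, List<Event> events) { this.midId = midId; this.events = events; }
    }

    // Each input row is joined with every row the UDTF forwards for it. A row whose UDTF call
    // forwards nothing produces no output (LATERAL VIEW OUTER would keep it with NULLs instead).
    static List<String[]> lateralView(List<LogRow> rows) {
        List<String[]> out = new ArrayList<>();
        for (LogRow row : rows) {
            for (Event e : row.events) {
                out.add(new String[] {row.midId, e.name, e.json});
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<LogRow> rows = Arrays.asList(
            new LogRow("6", Arrays.asList(
                new Event("display", "{\"en\":\"display\"}"),
                new Event("ad", "{\"en\":\"ad\"}"))),
            new LogRow("7", new ArrayList<Event>()));
        for (String[] r : lateralView(rows)) {
            System.out.println(String.join("\t", r));
        }
    }
}
```

Here device 6 contributes two rows. Device 7, with an empty event array, contributes none.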

4.2.5 DWD Layer Parsing Script

- In /home/zgl/bin/ on hadoop101, create the script dwd_base_log.sh:

```shell
#!/bin/bash

# Define variables for easy modification
APP=gmall
hive=/opt/module/hive-1.2.1/bin/hive

# Use the date passed in; otherwise default to yesterday
if [ -n "$1" ]; then
    do_date=$1
else
    do_date=`date -d "-1 day" +%F`
fi

sql="
add jar /opt/module/hive-1.2.1/hive-function-1.0-SNAPSHOT.jar;

create temporary function base_analizer as 'com.guli.udf.BaseFieldUDF';
create temporary function flat_analizer as 'com.guli.udtf.EventJsonUDTF';

set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table "$APP".dwd_base_event_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    event_name, event_json, server_time
from (
    select
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[0] as mid_id,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[1] as user_id,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[2] as version_code,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[3] as version_name,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[4] as lang,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[5] as source,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[6] as os,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[7] as area,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[8] as model,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[9] as brand,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[10] as sdk_version,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[11] as gmail,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[12] as height_width,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[13] as app_time,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[14] as network,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[15] as lng,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[16] as lat,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[17] as ops,
        split(base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la'), '\t')[18] as server_time
    from "$APP".ods_event_log
    where dt='$do_date'
      and base_analizer(line, 'mid,uid,vc,vn,l,sr,os,ar,md,ba,sv,g,hw,t,nw,ln,la') <> ''
) sdk_log
lateral view flat_analizer(ops) tmp_k as event_name, event_json;
"

$hive -e "$sql"
```

- Make the script executable:

```shell
chmod 777 dwd_base_log.sh
```

- Run the script:

```shell
dwd_base_log.sh 2020-05-12
```

- Query the loaded result:

```sql
select * from dwd_base_event_log where dt='2020-05-12' limit 2;
```

4.3 Deriving the DWD Event Tables

4.3.1 Product Click Table

- Event name: display

- Table DDL:

```sql
drop table if exists dwd_display_log;
create external table dwd_display_log (
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `action` string,
    `goodsid` string,
    `place` string,
    `extend1` string,
    `category` string,
    `server_time` string
)
partitioned by (`dt` string)
location '/warehouse/gmall/dwd/dwd_display_log/';
```

- Insert data:

```sql
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_display_log partition(dt='2020-05-11')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.action') action,
    get_json_object(event_json, '$.kv.goodsid') goodsid,
    get_json_object(event_json, '$.kv.place') place,
    get_json_object(event_json, '$.kv.extend1') extend1,
    get_json_object(event_json, '$.kv.category') category,
    server_time
from dwd_base_event_log
where dt='2020-05-11' and event_name='display';
```

- Check whether the insert succeeded:

```sql
select * from dwd_display_log limit 2;
```

4.3.2 Product Detail Page Table

- Event name: newsdetail

- Table DDL:

```sql
drop table if exists dwd_newsdetail_log;
create external table dwd_newsdetail_log (
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `entry` string,
    `action` string,
    `goodsid` string,
    `showtype` string,
    `news_staytime` string,
    `loading_time` string,
    `type1` string,
    `category` string,
    `server_time` string
)
partitioned by (`dt` string)
location '/warehouse/gmall/dwd/dwd_newsdetail_log/';
```

- Insert data:

```sql
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_newsdetail_log partition(dt='2020-05-11')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.entry') entry,
    get_json_object(event_json, '$.kv.action') action,
    get_json_object(event_json, '$.kv.goodsid') goodsid,
    get_json_object(event_json, '$.kv.showtype') showtype,
    get_json_object(event_json, '$.kv.news_staytime') news_staytime,
    get_json_object(event_json, '$.kv.loading_time') loading_time,
    get_json_object(event_json, '$.kv.type1') type1,
    get_json_object(event_json, '$.kv.category') category,
    server_time
from dwd_base_event_log
where dt='2020-05-11' and event_name='newsdetail';
```

- Check whether the insert succeeded:

```sql
select * from dwd_newsdetail_log limit 2;
```

4.3.3 Product List Page Table

- Event name: loading

- Table DDL:

```sql
drop table if exists dwd_loading_log;
create external table dwd_loading_log (
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `action` string,
    `loading_time` string,
    `loading_way` string,
    `extend1` string,
    `extend2` string,
    `type` string,
    `type1` string,
    `server_time` string
)
partitioned by (`dt` string)
location '/warehouse/gmall/dwd/dwd_loading_log/';
```

- Insert data:

```sql
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_loading_log partition(dt='2020-05-11')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.action') action,
    get_json_object(event_json, '$.kv.loading_time') loading_time,
    get_json_object(event_json, '$.kv.loading_way') loading_way,
    get_json_object(event_json, '$.kv.extend1') extend1,
    get_json_object(event_json, '$.kv.extend2') extend2,
    get_json_object(event_json, '$.kv.type') type,
    get_json_object(event_json, '$.kv.type1') type1,
    server_time
from dwd_base_event_log
where dt='2020-05-11' and event_name='loading';
```

- Check whether the insert succeeded:

```sql
select * from dwd_loading_log limit 2;
```

4.3.4 Ad Table

- Event name: ad

- Table DDL:

```sql
drop table if exists dwd_ad_log;
create external table dwd_ad_log (
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `entry` string,
    `action` string,
    `content` string,
    `detail` string,
    `ad_source` string,
    `behavior` string,
    `newstype` string,
    `show_style` string,
    `server_time` string
)
partitioned by (`dt` string)
location '/warehouse/gmall/dwd/dwd_ad_log/';
```

- Insert data:

```sql
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_ad_log partition(dt='2020-05-11')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.entry') entry,
    get_json_object(event_json, '$.kv.action') action,
    get_json_object(event_json, '$.kv.content') content,
    get_json_object(event_json, '$.kv.detail') detail,
    get_json_object(event_json, '$.kv.source') ad_source,
    get_json_object(event_json, '$.kv.behavior') behavior,
    get_json_object(event_json, '$.kv.newstype') newstype,
    get_json_object(event_json, '$.kv.show_style') show_style,
    server_time
from dwd_base_event_log
where dt='2020-05-11' and event_name='ad';
```

- Check whether the insert succeeded:

```sql
select * from dwd_ad_log limit 2;
```

4.3.5 Notification Table

- Event name: notification

- Table DDL:

```sql
drop table if exists dwd_notification_log;
create external table dwd_notification_log (
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `action` string,
    `type` string,
    `ap_time` string,
    `content` string,
    `server_time` string
)
partitioned by (`dt` string)
location '/warehouse/gmall/dwd/dwd_notification_log/';
```

- Insert data:

```sql
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_notification_log partition(dt='2020-05-11')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.action') action,
    get_json_object(event_json, '$.kv.type') type,
    get_json_object(event_json, '$.kv.ap_time') ap_time,
    get_json_object(event_json, '$.kv.content') content,
    server_time
from dwd_base_event_log
where dt='2020-05-11' and event_name='notification';
```

- Check whether the insert succeeded:

```sql
select * from dwd_notification_log limit 2;
```

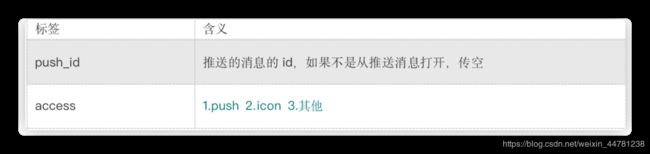

4.3.6 User Foreground Activity Table

- Event name: active_foreground

- Table DDL:

```sql
drop table if exists dwd_active_foreground_log;
create external table dwd_active_foreground_log (
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `push_id` string,
    `access` string,
    `server_time` string
)
partitioned by (`dt` string)
location '/warehouse/gmall/dwd/dwd_active_foreground_log/';
```

- Insert data:

```sql
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_active_foreground_log partition(dt='2020-05-11')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.push_id') push_id,
    get_json_object(event_json, '$.kv.access') access,
    server_time
from dwd_base_event_log
where dt='2020-05-11' and event_name='active_foreground';
```

- Check whether the insert succeeded:

```sql
select * from dwd_active_foreground_log limit 2;
```

4.3.7 User Background Activity Table

- Event name: active_background

- Table DDL. Each table needs its own LOCATION; pointing it at dwd_active_foreground_log/ would make the two tables share partition directories:

```sql
drop table if exists dwd_active_background_log;
create external table dwd_active_background_log (
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `active_source` string,
    `server_time` string
)
partitioned by (`dt` string)
location '/warehouse/gmall/dwd/dwd_active_background_log/';
```

- Insert data:

```sql
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_active_background_log partition(dt='2020-05-11')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.active_source') active_source,
    server_time
from dwd_base_event_log
where dt='2020-05-11' and event_name='active_background';
```

- Check whether the insert succeeded:

```sql
select * from dwd_active_background_log limit 2;
```

4.3.8 Comment Table

- Event name: comment

- Table DDL:

```sql
drop table if exists dwd_comment_log;
create external table dwd_comment_log (
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `comment_id` string,
    `userid` string,
    `p_comment_id` string,
    `content` string,
    `addtime` string,
    `other_id` string,
    `praise_count` string,
    `reply_count` string,
    `server_time` string
)
partitioned by (`dt` string)
location '/warehouse/gmall/dwd/dwd_comment_log/';
```

- Insert data:

```sql
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_comment_log partition(dt='2020-05-11')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.comment_id') comment_id,
    get_json_object(event_json, '$.kv.userid') userid,
    get_json_object(event_json, '$.kv.p_comment_id') p_comment_id,
    get_json_object(event_json, '$.kv.content') content,
    get_json_object(event_json, '$.kv.addtime') addtime,
    get_json_object(event_json, '$.kv.other_id') other_id,
    get_json_object(event_json, '$.kv.praise_count') praise_count,
    get_json_object(event_json, '$.kv.reply_count') reply_count,
    server_time
from dwd_base_event_log
where dt='2020-05-11' and event_name='comment';
```

- Check whether the insert succeeded:

```sql
select * from dwd_comment_log limit 2;
```

4.3.9 Favorites Table

- Event name: favorites

- Table DDL:

```sql
drop table if exists dwd_favorites_log;
create external table dwd_favorites_log (
    `mid_id` string, `user_id` string, `version_code` string, `version_name` string,
    `lang` string, `source` string, `os` string, `area` string, `model` string,
    `brand` string, `sdk_version` string, `gmail` string, `height_width` string,
    `app_time` string, `network` string, `lng` string, `lat` string,
    `id` string,
    `course_id` string,
    `userid` string,
    `add_time` string,
    `server_time` string
)
partitioned by (`dt` string)
location '/warehouse/gmall/dwd/dwd_favorites_log/';
```

- Insert data:

```sql
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table dwd_favorites_log partition(dt='2020-05-11')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model,
    brand, sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.id') id,
    get_json_object(event_json, '$.kv.course_id') course_id,
    get_json_object(event_json, '$.kv.userid') userid,
    get_json_object(event_json, '$.kv.add_time') add_time,
    server_time
from dwd_base_event_log
where dt='2020-05-11' and event_name='favorites';
```

- Check whether the insert succeeded:

```sql
select * from dwd_favorites_log limit 2;
```

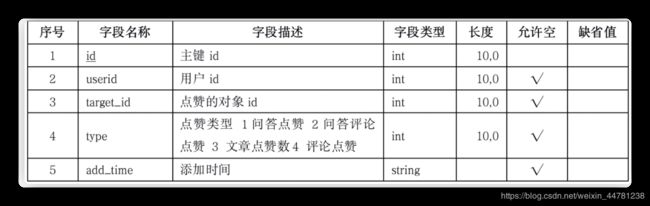

4.3.10 点赞表

-

事件名称:

praise -

建表语句

drop table if exists dwd_praise_log; create external table dwd_praise_log ( `mid_id` string, `user_id` string, `version_code` string, `version_name` string, `lang` string, `source` string, `os` string, `area` string, `model` string, `brand` string, `sdk_version` string, `gmail` string, `height_width` string, `app_time` string, `network` string, `lng` string, `lat` string, `id` string, `userid` string, `target_id` string, `type` string, `add_time` string, `server_time` string ) partitioned by (`dt` string) location '/warehouse/gmall/dwd/dwd_praise_log/'; -

插入数据

set hive.exec.dynamic.partition.mode=nonstrict; insert overwrite table dwd_praise_log partition(dt='2020-05-11') select mid_id, user_id, version_code , version_name , lang , source , os , area , model , brand , sdk_version , gmail , height_width , app_time , network , lng , lat , get_json_object(event_json, '$.kv.id') id , get_json_object(event_json, '$.kv.userid') userid , get_json_object(event_json, '$.kv.target_id') target_id , get_json_object(event_json, '$.kv.type') type , get_json_object(event_json, '$.kv.add_time') add_time , server_time from dwd_base_event_log where dt='2020-05-11' and event_name='praise'; -

Check whether the insert succeeded

select * from dwd_praise_log limit 2;

4.3.11 Error Log Table

-

Event name:

error -

Create-table statement

drop table if exists dwd_error_log;
create external table dwd_error_log (
    `mid_id` string,
    `user_id` string,
    `version_code` string,
    `version_name` string,
    `lang` string,
    `source` string,
    `os` string,
    `area` string,
    `model` string,
    `brand` string,
    `sdk_version` string,
    `gmail` string,
    `height_width` string,
    `app_time` string,
    `network` string,
    `lng` string,
    `lat` string,
    `errorBrief` string,
    `errorDetail` string,
    `server_time` string
)
partitioned by (`dt` string)
location '/warehouse/gmall/dwd/dwd_error_log/';

-

Insert data

set hive.exec.dynamic.partition.mode=nonstrict;
insert overwrite table dwd_error_log partition(dt='2020-05-11')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.errorBrief') errorBrief,
    get_json_object(event_json, '$.kv.errorDetail') errorDetail,
    server_time
from dwd_base_event_log
where dt='2020-05-11' and event_name='error';

-

Check whether the insert succeeded

select * from dwd_error_log limit 2;
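The favorites, praise, and error inserts above have a lot in common:

- All of them select the same 17 common columns.
- They differ only in the table name, the `event_name` filter, and the `kv` fields pulled out with `get_json_object`.

Below is a minimal bash sketch that generates that statement from a field list. `gen_event_insert` and `COMMON_COLS` are hypothetical names. The sketch also assumes each target table is named `dwd_<event>_log` and that every `kv` field keeps its own name as the column name. That holds for these three tables but not for, e.g., the ad table, where `source` becomes `ad_source`.

```shell
#!/usr/bin/env bash
# Hypothetical generator for the per-event DWD INSERT statements.
# Assumes the target table is <db>.dwd_<event>_log and that every kv field
# is stored in a column with the same name.

# The 17 common columns selected by every DWD event insert.
COMMON_COLS="mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand, sdk_version, gmail, height_width, app_time, network, lng, lat"

# Usage: gen_event_insert <db> <event_name> <dt> <kv_field>...
gen_event_insert() {
    local db=$1 event=$2 dt=$3
    shift 3
    local kv_cols="" field
    for field in "$@"; do
        # \$ keeps a literal $ so the JSONPath reads '$.kv.<field>'.
        kv_cols+="get_json_object(event_json, '\$.kv.${field}') ${field}, "
    done
    printf "insert overwrite table %s.dwd_%s_log partition(dt='%s')\n" "$db" "$event" "$dt"
    printf "select %s, %sserver_time\n" "$COMMON_COLS" "$kv_cols"
    printf "from %s.dwd_base_event_log\n" "$db"
    printf "where dt='%s' and event_name='%s';\n" "$dt" "$event"
}
```

For example, `gen_event_insert gmall praise 2020-05-11 id userid target_id type add_time` prints the praise insert with a database prefix. Its output could be passed to `hive -e`.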

4.3.12 DWD Layer Event Table Loading Script

-

On hadoop101, create the script dwd_event_log.sh in the /home/zgl/bin/ directory:

#!/bin/bash

# Database name and Hive client path
APP=gmall
hive=/opt/module/hive-1.2.1/bin/hive

# Use the date passed as the first argument; otherwise default to yesterday.
# "$1" must be quoted: unquoted, an empty argument reduces the test to
# [ -n ], which is always true and would leave do_date empty.
if [ -n "$1" ]; then
    do_date=$1
else
    do_date=`date -d "-1 day" +%F`
fi

# The shell leaves '$.kv...' untouched inside the double quotes,
# since $. is not a variable reference.
sql="
set hive.exec.dynamic.partition.mode=nonstrict;

insert overwrite table "$APP".dwd_display_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.action') action,
    get_json_object(event_json, '$.kv.goodsid') goodsid,
    get_json_object(event_json, '$.kv.place') place,
    get_json_object(event_json, '$.kv.extend1') extend1,
    get_json_object(event_json, '$.kv.category') category,
    server_time
from "$APP".dwd_base_event_log
where dt='$do_date' and event_name='display';

insert overwrite table "$APP".dwd_newsdetail_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.entry') entry,
    get_json_object(event_json, '$.kv.action') action,
    get_json_object(event_json, '$.kv.goodsid') goodsid,
    get_json_object(event_json, '$.kv.showtype') showtype,
    get_json_object(event_json, '$.kv.news_staytime') news_staytime,
    get_json_object(event_json, '$.kv.loading_time') loading_time,
    get_json_object(event_json, '$.kv.type1') type1,
    get_json_object(event_json, '$.kv.category') category,
    server_time
from "$APP".dwd_base_event_log
where dt='$do_date' and event_name='newsdetail';

insert overwrite table "$APP".dwd_loading_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.action') action,
    get_json_object(event_json, '$.kv.loading_time') loading_time,
    get_json_object(event_json, '$.kv.loading_way') loading_way,
    get_json_object(event_json, '$.kv.extend1') extend1,
    get_json_object(event_json, '$.kv.extend2') extend2,
    get_json_object(event_json, '$.kv.type') type,
    get_json_object(event_json, '$.kv.type1') type1,
    server_time
from "$APP".dwd_base_event_log
where dt='$do_date' and event_name='loading';

insert overwrite table "$APP".dwd_ad_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.entry') entry,
    get_json_object(event_json, '$.kv.action') action,
    get_json_object(event_json, '$.kv.content') content,
    get_json_object(event_json, '$.kv.detail') detail,
    get_json_object(event_json, '$.kv.source') ad_source,
    get_json_object(event_json, '$.kv.behavior') behavior,
    get_json_object(event_json, '$.kv.newstype') newstype,
    get_json_object(event_json, '$.kv.show_style') show_style,
    server_time
from "$APP".dwd_base_event_log
where dt='$do_date' and event_name='ad';

insert overwrite table "$APP".dwd_notification_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.action') action,
    get_json_object(event_json, '$.kv.type') type,
    get_json_object(event_json, '$.kv.ap_time') ap_time,
    get_json_object(event_json, '$.kv.content') content,
    server_time
from "$APP".dwd_base_event_log
where dt='$do_date' and event_name='notification';

insert overwrite table "$APP".dwd_active_foreground_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.push_id') push_id,
    get_json_object(event_json, '$.kv.access') access,
    server_time
from "$APP".dwd_base_event_log
where dt='$do_date' and event_name='active_foreground';

insert overwrite table "$APP".dwd_active_background_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.active_source') active_source,
    server_time
from "$APP".dwd_base_event_log
where dt='$do_date' and event_name='active_background';

insert overwrite table "$APP".dwd_comment_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.comment_id') comment_id,
    get_json_object(event_json, '$.kv.userid') userid,
    get_json_object(event_json, '$.kv.p_comment_id') p_comment_id,
    get_json_object(event_json, '$.kv.content') content,
    get_json_object(event_json, '$.kv.addtime') addtime,
    get_json_object(event_json, '$.kv.other_id') other_id,
    get_json_object(event_json, '$.kv.praise_count') praise_count,
    get_json_object(event_json, '$.kv.reply_count') reply_count,
    server_time
from "$APP".dwd_base_event_log
where dt='$do_date' and event_name='comment';

insert overwrite table "$APP".dwd_favorites_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.id') id,
    get_json_object(event_json, '$.kv.course_id') course_id,
    get_json_object(event_json, '$.kv.userid') userid,
    get_json_object(event_json, '$.kv.add_time') add_time,
    server_time
from "$APP".dwd_base_event_log
where dt='$do_date' and event_name='favorites';

insert overwrite table "$APP".dwd_praise_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.id') id,
    get_json_object(event_json, '$.kv.userid') userid,
    get_json_object(event_json, '$.kv.target_id') target_id,
    get_json_object(event_json, '$.kv.type') type,
    get_json_object(event_json, '$.kv.add_time') add_time,
    server_time
from "$APP".dwd_base_event_log
where dt='$do_date' and event_name='praise';

insert overwrite table "$APP".dwd_error_log partition(dt='$do_date')
select
    mid_id, user_id, version_code, version_name, lang, source, os, area, model, brand,
    sdk_version, gmail, height_width, app_time, network, lng, lat,
    get_json_object(event_json, '$.kv.errorBrief') errorBrief,
    get_json_object(event_json, '$.kv.errorDetail') errorDetail,
    server_time
from "$APP".dwd_base_event_log
where dt='$do_date' and event_name='error';
"

$hive -e "$sql"

-

Make the script executable

chmod 777 dwd_event_log.sh -

Run the script

dwd_event_log.sh 2020-05-12
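The script takes its partition date from `$1` and falls back to yesterday. If `$1` is left unquoted in `[ -n $1 ]`, a missing argument turns the test into `[ -n ]`. That one-argument test is true, so `do_date` ends up empty instead of yesterday.

Below is a minimal bash sketch of the date handling, plus a helper for re-running the load over several days:

- `resolve_do_date`, `buggy_branch` and `date_range` are hypothetical names.
- GNU `date` is assumed, as the script's own `date -d "-1 day"` already requires.

```shell
#!/usr/bin/env bash
# Sketch of the date handling used by the daily loading scripts.
# Assumes GNU date (for -d); all function names here are hypothetical.

# Print $1 if it is non-empty, otherwise yesterday as YYYY-MM-DD.
resolve_do_date() {
    # "$1" is quoted, so an empty or missing argument makes -n false.
    if [ -n "$1" ]; then
        echo "$1"
    else
        date -d "-1 day" +%F
    fi
}

# The unquoted form, kept only to show the pitfall: when $1 is empty,
# word splitting drops it, the test becomes [ -n ], and a one-argument
# test is true because the string "-n" itself is non-empty.
buggy_branch() {
    # shellcheck disable=SC2086
    if [ -n $1 ]; then echo "arg-branch"; else echo "default-branch"; fi
}

# Print every date from $1 to $2 inclusive (YYYY-MM-DD), one per line,
# so a daily script can be re-run for a range of partitions.
date_range() {
    local d=$1 end=$2
    # Removing the dashes turns the dates into comparable integers.
    while [ "${d//-/}" -le "${end//-/}" ]; do
        echo "$d"
        # -u avoids daylight-saving surprises when stepping one calendar day.
        d=$(date -u -d "$d + 1 day" +%F)
    done
}
```

For a backfill, a loop like `for d in $(date_range 2020-05-11 2020-05-12); do dwd_event_log.sh "$d"; done` would run the script once per partition. Each run overwrites its own `dt` partition, so repeating a day replaces rather than duplicates its data.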