Introduction

Elastic has officially released the Elasticsearch Operator, which simplifies deploying and upgrading Elasticsearch and Kibana. Combined with fluentd-kubernetes-daemonset, deploying EFK on Kubernetes is now very convenient.

Deploying the Elasticsearch Operator and some required resources

The YAML file is fairly large, so I only link to it here. The main thing I changed in it was the namespace.

kubectl apply -f https://download.elastic.co/downloads/eck/1.1.2/all-in-one.yaml
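The namespace change mentioned above could be scripted roughly as follows. This is only a sketch: it assumes the upstream manifest refers to the operator namespace solely by its default name `elastic-system`, the target namespace `efk` is taken from the manifests later in this post, and `rewrite_namespace` is a hypothetical helper name.

```shell
# Hypothetical helper: replace the operator's default namespace in a manifest
# read from stdin. $1 = target namespace.
# Assumes the namespace only ever appears as the literal "elastic-system".
rewrite_namespace() {
  sed "s/elastic-system/$1/g"
}

# Usage (needs network access; review the result before applying it):
#   curl -sSL https://download.elastic.co/downloads/eck/1.1.2/all-in-one.yaml \
#     | rewrite_namespace efk > all-in-one-efk.yaml
#   kubectl apply -f all-in-one-efk.yaml
```

Comparing the rewritten file with the original before applying it is a cheap way to confirm that only namespace fields changed.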

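Deploying the Elasticsearch cluster

First create a StorageClass and a few PersistentVolumes. I use local PVs here, and because I deploy three data nodes I create three PVs. The password of the default elastic user is generated automatically. You can find it in a Secret whose name ends in elastic-user; the value only needs to be base64-decoded.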

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: local-storage-es-test
    provisioner: kubernetes.io/no-provisioner
    volumeBindingMode: WaitForFirstConsumer
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: es-test-pv-01
      # PersistentVolumes are cluster-scoped, so this namespace has no effect
      namespace: efk
    spec:
      capacity:
        storage: 100G
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: local-storage-es-test
      local:
        path: /data/elasticsearch-test
      nodeAffinity:
        required:
          nodeSelectorTerms:
          - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - k8s-node-01
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: es-test-pv-02
      namespace: efk
    spec:
      capacity:
        storage: 100G
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: local-storage-es-test
      local:
        path: /data/elasticsearch-test
      nodeAffinity:
        required:
          nodeSelectorTerms:
          - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - k8s-node-02
    ---
    apiVersion: v1
    kind: PersistentVolume
    metadata:
      name: es-test-pv-03
      namespace: efk
    spec:
      capacity:
        storage: 100G
      accessModes:
      - ReadWriteOnce
      persistentVolumeReclaimPolicy: Retain
      storageClassName: local-storage-es-test
      local:
        path: /data/elasticsearch-test
      nodeAffinity:
        required:
          nodeSelectorTerms:
          - matchExpressions:
            - key: kubernetes.io/hostname
              operator: In
              values:
              - k8s-node-03

Then create the Elasticsearch cluster. I added comments in the file for the parts that are harder to understand.

    apiVersion: elasticsearch.k8s.elastic.co/v1
    kind: Elasticsearch
    metadata:
      name: cluster-test
      namespace: efk
    spec:
      version: 7.3.0
      http:
        tls:
          selfSignedCertificate:
            ## turn off the default TLS
            disabled: true
      nodeSets:
      ## name of the master node set
      - name: master
        count: 3
        podTemplate:
          spec:
            volumes:
            - name: elasticsearch-data
              emptyDir: {}
            initContainers:
            - name: sysctl
              securityContext:
                privileged: true
              command: ['sh', '-c', 'sysctl -w vm.max_map_count=262144']
            containers:
            - name: elasticsearch
              readinessProbe:
                exec:
                  command:
                  - bash
                  - -c
                  - /mnt/elastic-internal/scripts/readiness-probe-script.sh
                failureThreshold: 3
                initialDelaySeconds: 10
                periodSeconds: 12
                successThreshold: 1
                timeoutSeconds: 12
              env:
              ## JVM heap size
              - name: ES_JAVA_OPTS
                value: -Xms1g -Xmx1g
              - name: READINESS_PROBE_TIMEOUT
                value: "10"
              resources:
                requests:
                  cpu: 100m
                limits:
                  cpu: 1000m
        config:
          ## whether this is a master node; see the documentation on node roles,
          ## a single node can be both a master and a data node
          node.master: "true"
          node.data: "false"
          node.ingest: "false"
      - name: data
        count: 3
        volumeClaimTemplates:
        - metadata:
            name: elasticsearch-data
          spec:
            accessModes:
            - ReadWriteOnce
            resources:
              requests:
                storage: 100G
            ## the StorageClass I defined above
            storageClassName: local-storage-es-test
        podTemplate:
          spec:
            initContainers:
            - name: sysctl
              securityContext:
                privileged: true
              command: ['sh', '-c', 'sysctl -w vm.max_map_count=262144']
            - name: increase-fd-ulimit
              securityContext:
                privileged: true
              command: ["sh", "-c", "ulimit -n 65536"]
            containers:
            - name: elasticsearch
              readinessProbe:
                exec:
                  command:
                  - bash
                  - -c
                  - /mnt/elastic-internal/scripts/readiness-probe-script.sh
                failureThreshold: 3
                initialDelaySeconds: 10
                periodSeconds: 12
                successThreshold: 1
                timeoutSeconds: 12
              env:
              - name: ES_JAVA_OPTS
                value: -Xms1g -Xmx1g
              - name: READINESS_PROBE_TIMEOUT
                value: "10"
              resources:
                requests:
                  cpu: 100m
                limits:
                  cpu: 1000m
        config:
          node.master: "false"
          node.data: "true"
          node.ingest: "true"
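Once the manifest is applied, the cluster state and the generated elastic password can be checked from the command line. The helpers below are a minimal sketch rather than part of my original setup: the function names are made up, and they assume the operator's usual conventions, namely that pods carry the label `elasticsearch.k8s.elastic.co/cluster-name` and that the password Secret is called `<cluster name>-es-elastic-user` with the password stored under the key `elastic`.

```shell
# Show the health and phase reported by the operator, then the cluster's pods.
# $1 = Elasticsearch resource name, $2 = namespace.
es_status() {
  kubectl get elasticsearch "$1" -n "$2"
  kubectl get pods -n "$2" \
    --selector="elasticsearch.k8s.elastic.co/cluster-name=$1"
}

# Print the auto-generated password of the built-in "elastic" user.
# Secret values are base64-encoded; the go-template decodes the value.
# $1 = Elasticsearch resource name, $2 = namespace.
es_password() {
  kubectl get secret "$1-es-elastic-user" -n "$2" \
    -o go-template='{{.data.elastic | base64decode}}'
}

# Usage against a running cluster:
#   es_status cluster-test efk
#   es_password cluster-test efk
```

If the data pods stay in Pending, the PersistentVolumes and their node affinity are the first thing to check, since the StorageClass binds volumes only when a pod is scheduled.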

Deploying Kibana and the Ingress

The main thing I configured here is the Kibana base path, that is, the URL prefix under which Kibana is served.

    apiVersion: kibana.k8s.elastic.co/v1
    kind: Kibana
    metadata:
      name: kibana-test
      namespace: efk
    spec:
      version: 7.3.0
      http:
        tls:
          selfSignedCertificate:
            disabled: true
      count: 1
      elasticsearchRef:
        name: cluster-test
      podTemplate:
        spec:
          containers:
          - name: kibana
            resources:
              limits:
                cpu: 1000m
              requests:
                cpu: 100m
            env:
            - name: SERVER_BASEPATH
              value: "/kibana-test"
            - name: SERVER_REWRITEBASEPATH
              value: 'true'
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /kibana-test/login
                port: 5601
                scheme: HTTP
              initialDelaySeconds: 10
              periodSeconds: 10
              successThreshold: 1
              timeoutSeconds: 5
    ---
    apiVersion: extensions/v1beta1
    kind: Ingress
    metadata:
      name: ingress-kibana-test
      namespace: efk
      annotations:
        # use the shared ingress-nginx
        kubernetes.io/ingress.class: "nginx"
    spec:
      rules:
      - host: ks.***.cn
        http:
          paths:
          - path: /kibana-test
            backend:
              serviceName: kibana-test-kb-http
              servicePort: 5601
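Before relying on the Ingress, the base path can be tested directly against the Kibana Service. This is a small sketch with a hypothetical helper name; it assumes the operator exposes Kibana through a Service named `<kibana name>-kb-http` on port 5601, which matches the backend used in the Ingress above.

```shell
# Forward the Kibana HTTP Service to a local port.
# $1 = Kibana resource name, $2 = namespace, $3 = local port (default 5601).
kibana_port_forward() {
  kubectl port-forward "service/$1-kb-http" "${3:-5601}:5601" -n "$2"
}

# Usage:
#   kibana_port_forward kibana-test efk
#   then open http://localhost:5601/kibana-test in a browser
```

Because SERVER_REWRITEBASEPATH is enabled, Kibana expects the /kibana-test prefix even when it is reached through the port-forward.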

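Deploying Fluentd

Here is the part of the image's source that uses kubernetes_metadata. It is a Fluentd filter, and only a few of its options are exposed through environment variables by default; see the plugin documentation for the full list. You can skip this detail if you like.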

    <filter kubernetes.**>
      @type kubernetes_metadata
      @id filter_kube_metadata
      kubernetes_url "#{ENV['FLUENT_FILTER_KUBERNETES_URL'] || 'https://' + ENV.fetch('KUBERNETES_SERVICE_HOST') + ':' + ENV.fetch('KUBERNETES_SERVICE_PORT') + '/api'}"
      verify_ssl "#{ENV['KUBERNETES_VERIFY_SSL'] || true}"
      ca_file "#{ENV['KUBERNETES_CA_FILE']}"
      skip_labels "#{ENV['FLUENT_KUBERNETES_METADATA_SKIP_LABELS'] || 'false'}"
      skip_container_metadata "#{ENV['FLUENT_KUBERNETES_METADATA_SKIP_CONTAINER_METADATA'] || 'false'}"
      skip_master_url "#{ENV['FLUENT_KUBERNETES_METADATA_SKIP_MASTER_URL'] || 'false'}"
      skip_namespace_metadata "#{ENV['FLUENT_KUBERNETES_METADATA_SKIP_NAMESPACE_METADATA'] || 'false'}"
    </filter>

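Here is the part of the source that defines the tail input for Kubernetes container logs. The default path is /var/log/containers/*.log, so pay attention to the volumes of the Fluentd pod and make sure the log files are actually reachable from inside the pod.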

    <source>
      @type tail
      @id in_tail_container_logs
      path /var/log/containers/*.log
      pos_file /var/log/fluentd-containers.log.pos
      tag "#{ENV['FLUENT_CONTAINER_TAIL_TAG'] || 'kubernetes.*'}"
      exclude_path "#{ENV['FLUENT_CONTAINER_TAIL_EXCLUDE_PATH'] || use_default}"
      read_from_head true
      <parse>
        @type "#{ENV['FLUENT_CONTAINER_TAIL_PARSER_TYPE'] || 'json'}"
        time_format %Y-%m-%dT%H:%M:%S.%NZ
      </parse>
    </source>
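Whether the files matched by that path are really readable depends on where the symlinks end up. The helper below is a rough sketch with a made-up name that prints, for every log link in a directory, the file it resolves to and flags links whose target is missing. It assumes a `readlink` that supports `-f`, as on typical Linux systems.

```shell
# List each *.log entry in a directory with the real file it resolves to.
# Links whose target cannot be reached are reported as BROKEN.
# $1 = directory to inspect (default /var/log/containers).
show_log_targets() {
  for link in "${1:-/var/log/containers}"/*.log; do
    if [ -e "$link" ]; then
      printf 'ok      %s -> %s\n' "$link" "$(readlink -f "$link")"
    elif [ -L "$link" ]; then
      printf 'BROKEN  %s -> %s\n' "$link" "$(readlink "$link")"
    fi
  done
}

# Usage: run it once on the node and once in a shell inside the Fluentd pod.
#   show_log_targets /var/log/containers
```

Any directory that appears as a final target on the node has to be mounted into the pod at the same path, otherwise the same links show up as BROKEN inside the container.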

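This is the Fluentd DaemonSet configuration. My Docker root directory is under /data/docker, and the files under /var/log/ are only symlinks, so I deliberately added an extra volume; the point is that Fluentd must be able to reach the real files. For the Elasticsearch username and password, I recommend creating your own user, because the password of the default elastic user is randomly generated. (When I created my cluster it was not yet possible to set a custom initial password. I did not check again while writing this article, because this point does not matter much to me.)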

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: fluentd
      namespace: efk
      labels:
        app: fluentd
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRole
    metadata:
      name: fluentd
      labels:
        app: fluentd
    rules:
    - apiGroups:
      - ""
      resources:
      - pods
      - namespaces
      verbs:
      - get
      - list
      - watch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: fluentd
    roleRef:
      kind: ClusterRole
      name: fluentd
      apiGroup: rbac.authorization.k8s.io
    subjects:
    - kind: ServiceAccount
      name: fluentd
      namespace: efk
    ---
    apiVersion: apps/v1
    kind: DaemonSet
    metadata:
      name: fluentd-test
      namespace: efk
      labels:
        app: fluentd-test
    spec:
      selector:
        matchLabels:
          app: fluentd-test
      template:
        metadata:
          labels:
            app: fluentd-test
        spec:
          serviceAccount: fluentd
          serviceAccountName: fluentd
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule
          containers:
          - name: fluentd
            image: fluent/fluentd-kubernetes-daemonset:v1.11.0-debian-elasticsearch7-1.0
            env:
            - name: FLUENT_ELASTICSEARCH_HOST
              value: "cluster-test-es-http.efk.svc.cluster.local"
            - name: FLUENT_ELASTICSEARCH_USER
              value: "admin"
            - name: FLUENT_ELASTICSEARCH_PASSWORD
              value: "123"
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
            - name: FLUENT_ELASTICSEARCH_SCHEME
              value: "http"
            - name: FLUENTD_SYSTEMD_CONF
              value: disable
            - name: FLUENT_KUBERNETES_METADATA_SKIP_CONTAINER_METADATA
              value: 'true'
            - name: FLUENT_KUBERNETES_METADATA_SKIP_MASTER_URL
              value: 'true'
            - name: FLUENT_KUBERNETES_METADATA_SKIP_NAMESPACE_METADATA
              value: 'true'
            - name: FLUENT_KUBERNETES_METADATA_SKIP_LABELS
              value: 'true'
            - name: FLUENT_ELASTICSEARCH_INCLUDE_TIMESTAMP
              value: 'true'
            - name: K8S_NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
            resources:
              limits:
                memory: 512Mi
              requests:
                cpu: 100m
                memory: 200Mi
            volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/log/containers
              readOnly: true
            - name: varlibdockercontainers-kube-path
              mountPath: /var/log/pods
              readOnly: true
            - name: varlibdockercontainers-real-path
              mountPath: /data/docker/containers
              readOnly: true
          terminationGracePeriodSeconds: 30
          volumes:
          - name: varlog
            hostPath:
              path: /var/log
          - name: varlibdockercontainers
            hostPath:
              path: /var/log/containers
          - name: varlibdockercontainers-kube-path
            hostPath:
              path: /var/log/pods
          - name: varlibdockercontainers-real-path
            hostPath:
              path: /data/docker/containers
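The user referenced in the DaemonSet has to exist before Fluentd can write anything. The sketch below shows one possible way to create it through the Elasticsearch security API and then check the rollout. It is not the exact procedure I used. Both function names are hypothetical, the `superuser` role is only a placeholder for a narrower role, and the Elasticsearch HTTP endpoint is assumed to be reachable, for example via `kubectl port-forward service/cluster-test-es-http 9200 -n efk`.

```shell
# Create (or update) a native-realm user via POST /_security/user/<name>.
# $1 = base URL, $2 = password of "elastic", $3 = new user, $4 = new password.
# Note: passing passwords as arguments exposes them in the process list.
create_es_user() {
  curl -sS -u "elastic:$2" -X POST "$1/_security/user/$3" \
    -H 'Content-Type: application/json' \
    -d "{\"password\": \"$4\", \"roles\": [\"superuser\"]}"
}

# Wait for the DaemonSet rollout, then show recent Fluentd output.
# $1 = DaemonSet name, $2 = namespace, $3 = pod label selector.
fluentd_check() {
  kubectl rollout status "daemonset/$1" -n "$2" &&
    kubectl logs -n "$2" --selector="$3" --tail=20
}

# Usage:
#   create_es_user http://localhost:9200 "<elastic password>" admin "<new password>"
#   fluentd_check fluentd-test efk app=fluentd-test
```

Elasticsearch rejects passwords shorter than six characters, so a real password needs to be longer than the placeholder value shown in the manifest.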

Viewing the logs

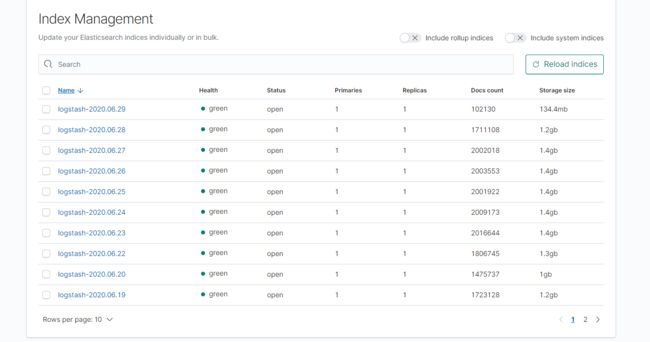

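Open Kibana and list all indices; you will see a screen like the one shown here.

The index name and related settings can be changed. Here is the relevant source: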

    <match **>
      @type elasticsearch
      @id out_es
      @log_level info
      include_tag_key true
      host "#{ENV['FLUENT_ELASTICSEARCH_HOST']}"
      port "#{ENV['FLUENT_ELASTICSEARCH_PORT']}"
      path "#{ENV['FLUENT_ELASTICSEARCH_PATH']}"
      scheme "#{ENV['FLUENT_ELASTICSEARCH_SCHEME'] || 'http'}"
      ssl_verify "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERIFY'] || 'true'}"
      ssl_version "#{ENV['FLUENT_ELASTICSEARCH_SSL_VERSION'] || 'TLSv1_2'}"
      user "#{ENV['FLUENT_ELASTICSEARCH_USER'] || use_default}"
      password "#{ENV['FLUENT_ELASTICSEARCH_PASSWORD'] || use_default}"
      reload_connections "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_CONNECTIONS'] || 'false'}"
      reconnect_on_error "#{ENV['FLUENT_ELASTICSEARCH_RECONNECT_ON_ERROR'] || 'true'}"
      reload_on_failure "#{ENV['FLUENT_ELASTICSEARCH_RELOAD_ON_FAILURE'] || 'true'}"
      log_es_400_reason "#{ENV['FLUENT_ELASTICSEARCH_LOG_ES_400_REASON'] || 'false'}"
      logstash_prefix "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_PREFIX'] || 'logstash'}"
      logstash_dateformat "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_DATEFORMAT'] || '%Y.%m.%d'}"
      logstash_format "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_FORMAT'] || 'true'}"
      index_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_INDEX_NAME'] || 'logstash'}"
      type_name "#{ENV['FLUENT_ELASTICSEARCH_LOGSTASH_TYPE_NAME'] || 'fluentd'}"
      include_timestamp "#{ENV['FLUENT_ELASTICSEARCH_INCLUDE_TIMESTAMP'] || 'false'}"
      template_name "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_NAME'] || use_nil}"
      template_file "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_FILE'] || use_nil}"
      template_overwrite "#{ENV['FLUENT_ELASTICSEARCH_TEMPLATE_OVERWRITE'] || use_default}"
      sniffer_class_name "#{ENV['FLUENT_SNIFFER_CLASS_NAME'] || 'Fluent::Plugin::ElasticsearchSimpleSniffer'}"
      request_timeout "#{ENV['FLUENT_ELASTICSEARCH_REQUEST_TIMEOUT'] || '5s'}"
      <buffer>
        flush_thread_count "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_THREAD_COUNT'] || '8'}"
        flush_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_FLUSH_INTERVAL'] || '5s'}"
        chunk_limit_size "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_CHUNK_LIMIT_SIZE'] || '2M'}"
        queue_limit_length "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_QUEUE_LIMIT_LENGTH'] || '32'}"
        retry_max_interval "#{ENV['FLUENT_ELASTICSEARCH_BUFFER_RETRY_MAX_INTERVAL'] || '30'}"
        retry_forever true
      </buffer>
    </match>

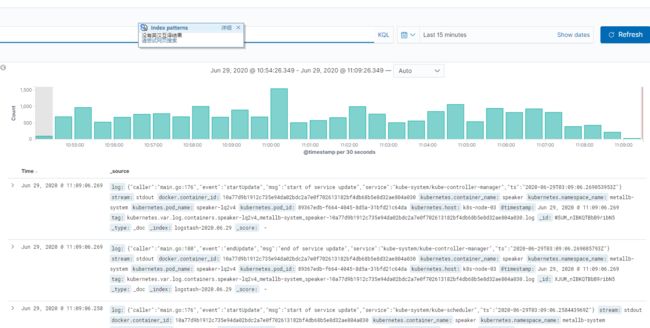
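With logstash_format enabled, the index name is built from logstash_prefix and a date formatted with logstash_dateformat. The helper below sketches that naming rule so you know which index to look for. The function name is made up, and it assumes the plugin's defaults of a `-` separator and UTC dates.

```shell
# Print the index name expected for today's events:
# <prefix>-<current UTC date formatted with the given strftime pattern>.
# $1 = prefix (default "logstash"), $2 = date format (default %Y.%m.%d).
todays_index() {
  printf '%s-%s\n' "${1:-logstash}" "$(date -u +"${2:-%Y.%m.%d}")"
}

# Usage:
#   todays_index            # default prefix and date format
#   todays_index k8s-test   # hypothetical custom prefix
```

Setting FLUENT_ELASTICSEARCH_LOGSTASH_PREFIX in the DaemonSet env changes the prefix, and the matching Kibana index pattern is then the prefix followed by `-*`.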

Create the index patterns.

After that, you can browse the logs.