[Machine Learning Algorithms] The Logistic Regression Model, Its Pros and Cons, and Implementing LR with the Spark ML Library

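Contents

1. Logistic Regression Model: Log Loss Function

1.1 Model Definition

1.2 Loss Function

1.3 Solving for the Parameters with Gradient Descent

2. Estimating the Logistic Regression Parameters by Maximum Likelihood

3. Pros and Cons of the Logistic Regression Model

4. Implementing Logistic Regression with the Spark ML Library

5. Discrete Features as Model Input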

1. Logistic Regression Model: Log Loss Function

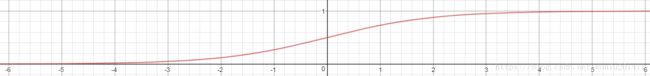

1.1 Model Definition
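A standard way to write the model, using the notation of the Coursera Machine Learning course in the references (the symbols are assumed here, not taken from the original figures):

$$h_\theta(x) = g(\theta^T x) = \frac{1}{1 + e^{-\theta^T x}}, \qquad g(z) = \frac{1}{1 + e^{-z}}$$

Here $x = (x_0, x_1, \dots, x_n)^T$ with $x_0 = 1$, so $\theta_0$ plays the role of the intercept, and $h_\theta(x)$ is interpreted as $P(y = 1 \mid x; \theta)$. The model predicts $y = 1$ when $h_\theta(x) \ge 0.5$, i.e. when $\theta^T x \ge 0$, which is why its decision boundary is linear.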

1.2 Loss Function
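In the same assumed notation, with labels $y^{(i)} \in \{0, 1\}$ and $m$ training samples, the log loss (cross-entropy) is:

$$J(\theta) = -\frac{1}{m}\sum_{i=1}^{m}\Bigl[y^{(i)}\log h_\theta(x^{(i)}) + \bigl(1 - y^{(i)}\bigr)\log\bigl(1 - h_\theta(x^{(i)})\bigr)\Bigr]$$

A single sample costs $-\log h_\theta(x)$ when $y = 1$ and $-\log(1 - h_\theta(x))$ when $y = 0$, so confident wrong predictions are penalized heavily. Unlike the squared error applied to the sigmoid output, $J(\theta)$ is convex in $\theta$, so gradient descent cannot get stuck in a non-global local minimum.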

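1.3 Solving for the Parameters with Gradient Descent

Repeat the following update until convergence, updating every $\theta_j$ ($j = 0, 1, \dots, n$) simultaneously:

$$\theta_j := \theta_j - \alpha\,\frac{1}{m}\sum_{i=1}^{m}\left(h_\theta(x^{(i)}) - y^{(i)}\right)x_j^{(i)}$$

where $\alpha$ is the learning rate and the term multiplied by $\alpha$ is the partial derivative of the log loss $J(\theta)$ with respect to $\theta_j$.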
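The update rule above can be sketched in plain Python as full-batch gradient descent. This is an illustration only, not how Spark trains the model (Spark's `LogisticRegression` uses quasi-Newton optimizers such as L-BFGS); the toy data, learning rate, and stopping tolerance are made up for the example.

```python
import math

def sigmoid(z):
    # Numerically stable logistic function g(z) = 1 / (1 + e^(-z))
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)

def predict_proba(theta, x):
    # h_theta(x) = g(theta^T x); x[0] is the constant 1 for the intercept
    return sigmoid(sum(t * v for t, v in zip(theta, x)))

def log_loss(theta, X, y):
    # J(theta) = -(1/m) * sum[y*log(h) + (1 - y)*log(1 - h)]
    eps = 1e-12  # guards against log(0)
    total = 0.0
    for xi, yi in zip(X, y):
        h = predict_proba(theta, xi)
        total += yi * math.log(h + eps) + (1 - yi) * math.log(1 - h + eps)
    return -total / len(X)

def gradient_descent(X, y, alpha=0.1, max_iter=1000, tol=1e-8):
    m, n = len(X), len(X[0])
    theta = [0.0] * n
    prev = log_loss(theta, X, y)
    for _ in range(max_iter):
        # Full-batch gradient: (1/m) * sum_i (h(x_i) - y_i) * x_ij
        errors = [predict_proba(theta, xi) - yi for xi, yi in zip(X, y)]
        grad = [sum(e * xi[j] for e, xi in zip(errors, X)) / m for j in range(n)]
        # Simultaneous update of all theta_j
        theta = [t - alpha * g for t, g in zip(theta, grad)]
        cur = log_loss(theta, X, y)
        if abs(prev - cur) < tol:  # "until convergence": J has stopped changing
            break
        prev = cur
    return theta

# Toy data: first column is the bias feature x_0 = 1, second is one real feature
X = [[1.0, 0.5], [1.0, 1.0], [1.0, 1.5], [1.0, 3.0], [1.0, 3.5], [1.0, 4.0]]
y = [0, 0, 0, 1, 1, 1]
theta = gradient_descent(X, y, alpha=0.5, max_iter=5000)
print(theta, [round(predict_proba(theta, xi)) for xi in X])
```

Stopping on a tiny change in $J(\theta)$ is one simple reading of "until convergence"; a cap on iterations is kept because on perfectly separable data like this toy set the weights keep growing slowly.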

2. Estimating the Logistic Regression Parameters by Maximum Likelihood

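The probabilities of the event ($y = 1$) and of its complement are:

$$P(y = 1 \mid x; \theta) = h_\theta(x) = \frac{1}{1 + e^{-\theta^T x}}, \qquad P(y = 0 \mid x; \theta) = 1 - h_\theta(x)$$

which can be written compactly as $P(y \mid x; \theta) = h_\theta(x)^{y}\bigl(1 - h_\theta(x)\bigr)^{1 - y}$.

Assuming the $m$ training samples are independent, their likelihood and log-likelihood are:

$$L(\theta) = \prod_{i=1}^{m} h_\theta(x^{(i)})^{y^{(i)}}\bigl(1 - h_\theta(x^{(i)})\bigr)^{1 - y^{(i)}}$$

$$l(\theta) = \log L(\theta) = \sum_{i=1}^{m}\Bigl[y^{(i)}\log h_\theta(x^{(i)}) + \bigl(1 - y^{(i)}\bigr)\log\bigl(1 - h_\theta(x^{(i)})\bigr)\Bigr]$$

so maximizing $l(\theta)$ is the same as minimizing the log loss of Section 1, $J(\theta) = -\frac{1}{m}l(\theta)$.

Optimize the likelihood by gradient ascent on $l(\theta)$ (equivalently, gradient descent on $-l(\theta)$):

$$\theta_j := \theta_j + \alpha\sum_{i=1}^{m}\left(y^{(i)} - h_\theta(x^{(i)})\right)x_j^{(i)}$$

This is the same update as the gradient descent of Section 1.3, with the factor $\frac{1}{m}$ absorbed into the learning rate $\alpha$.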
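To check the gradient formula $\partial l / \partial \theta_j = \sum_i \bigl(y^{(i)} - h_\theta(x^{(i)})\bigr)x_j^{(i)}$ numerically, the plain-Python sketch below compares it with central finite differences of $l(\theta)$ and then takes one gradient-ascent step. The toy data and starting $\theta$ are invented for illustration.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def log_likelihood(theta, X, y):
    # l(theta) = sum_i [y_i*log(h_i) + (1 - y_i)*log(1 - h_i)]
    total = 0.0
    for xi, yi in zip(X, y):
        h = sigmoid(sum(t * v for t, v in zip(theta, xi)))
        total += yi * math.log(h) + (1 - yi) * math.log(1 - h)
    return total

def log_likelihood_grad(theta, X, y):
    # Analytic gradient: dl/dtheta_j = sum_i (y_i - h_i) * x_ij
    grad = [0.0] * len(theta)
    for xi, yi in zip(X, y):
        h = sigmoid(sum(t * v for t, v in zip(theta, xi)))
        for j, v in enumerate(xi):
            grad[j] += (yi - h) * v
    return grad

def numeric_grad(f, theta, eps=1e-6):
    # Central finite differences, used only to check the analytic formula
    grad = []
    for j in range(len(theta)):
        plus, minus = list(theta), list(theta)
        plus[j] += eps
        minus[j] -= eps
        grad.append((f(plus) - f(minus)) / (2 * eps))
    return grad

# Invented toy data (x_0 = 1 is the intercept feature) and starting point
X = [[1.0, 0.2, -1.0], [1.0, 1.5, 0.3], [1.0, -0.7, 2.0], [1.0, 0.9, 0.9]]
y = [0, 1, 1, 0]
theta = [0.1, -0.4, 0.25]

analytic = log_likelihood_grad(theta, X, y)
numeric = numeric_grad(lambda t: log_likelihood(t, X, y), theta)
print(max(abs(a - b) for a, b in zip(analytic, numeric)))

# One gradient-ascent step (note the plus sign) should increase l(theta)
alpha = 0.1
theta_next = [t + alpha * g for t, g in zip(theta, analytic)]
print(log_likelihood(theta_next, X, y) > log_likelihood(theta, X, y))
```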

3. Pros and Cons of the Logistic Regression Model

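Pros:

- The results are easy to understand: each feature's coefficient is its weight in the model and can be read as how much that feature contributes to predicting the target. More precisely, $\theta_j$ is the change in the log-odds of $y = 1$ per unit increase in $x_j$, so coefficients are only directly comparable when the features are on similar scales.

- Fast and efficient, and easy to implement in online (production) systems.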
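The interpretability point can be made concrete with odds: since $h_\theta(x) / (1 - h_\theta(x)) = e^{\theta^T x}$, raising $x_j$ by one unit (all other features fixed) multiplies the odds of $y = 1$ by $e^{\theta_j}$. A minimal check in plain Python, using a hypothetical one-feature model (the intercept and coefficient values are made up):

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def odds(p):
    # Odds of the positive class: P(y=1) / P(y=0)
    return p / (1.0 - p)

# Hypothetical fitted model with one feature: intercept -1.0, coefficient 0.7
theta0, theta1 = -1.0, 0.7

p_at_2 = sigmoid(theta0 + theta1 * 2.0)
p_at_3 = sigmoid(theta0 + theta1 * 3.0)

# Increasing x by one unit multiplies the odds by exp(theta1)
odds_ratio = odds(p_at_3) / odds(p_at_2)
print(round(odds_ratio, 6), round(math.exp(theta1), 6))
```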

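Cons:

- Every class of the target variable needs enough samples before the model can be trained reliably.

- Collinearity among the features must be detected and removed; it makes the coefficients unstable and hard to interpret.

- Outliers can distort the model heavily and should be removed.

- The model cannot handle missing values itself, so they must be handled during preprocessing.

- It is a linear classifier (its decision boundary $\theta^T x = 0$ is a hyperplane), so it underfits easily and its classification accuracy is often limited.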
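The missing-value point is usually handled with a preprocessing step before training. A minimal plain-Python sketch, assuming missing entries are stored as `None` and filled with the column mean (one common choice among several; the helper names are illustrative). In Spark, `pyspark.ml.feature.Imputer` offers mean or median imputation as a pipeline stage.

```python
def column_means(rows):
    # Mean of each column, ignoring missing entries (None).
    # Assumes every column has at least one observed value.
    n_cols = len(rows[0])
    means = []
    for j in range(n_cols):
        present = [r[j] for r in rows if r[j] is not None]
        means.append(sum(present) / len(present))
    return means

def impute_mean(rows):
    # Replace every None with its column mean so LR can consume the rows
    means = column_means(rows)
    return [[means[j] if v is None else v for j, v in enumerate(r)] for r in rows]

rows = [[1.0, None], [3.0, 4.0], [None, 8.0]]
print(impute_mean(rows))  # → [[1.0, 6.0], [3.0, 4.0], [2.0, 8.0]]
```

The means are computed on the observed values only; in a real pipeline they should come from the training set and be reused on the test set.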

4. Implementing Logistic Regression with the Spark ML Library

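1. Read the data and split it into training and test sets

```python
# `spark` is an existing SparkSession (predefined in the pyspark shell)
births = spark.read.csv("births_transformed.csv", header=True, inferSchema=True)
# No seed is set, so the split (and the metrics below) vary between runs
births_train, births_test = births.randomSplit([0.7, 0.3])
```

2. Build the pipeline: feature transformation and the LR model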

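```python
import pyspark.ml.feature as ft
import pyspark.ml.classification as cl
from pyspark.ml import Pipeline

# One-hot encode the categorical column BIRTH_PLACE into a sparse vector
encoder = ft.OneHotEncoder(inputCol='BIRTH_PLACE', outputCol='BIRTH_PLACE_VEC')

# Assemble the numeric columns (everything after the label and BIRTH_PLACE)
# plus the encoded vector into a single 'features' column
featuresCreator = ft.VectorAssembler(
    inputCols=births.columns[2:] + [encoder.getOutputCol()],
    outputCol='features')

# L2-regularized logistic regression (elasticNetParam defaults to 0)
logistic = cl.LogisticRegression(maxIter=10, regParam=0.01,
                                 labelCol='INFANT_ALIVE_AT_REPORT')

pipeline = Pipeline(stages=[encoder, featuresCreator, logistic])
model = pipeline.fit(births_train)
test_model = model.transform(births_test)
```

Here BIRTH_PLACE is a categorical variable that is one-hot encoded into a vector, and INFANT_ALIVE_AT_REPORT is the label. The first row of the transformed test set looks like this: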

```python
test_model.take(1)
```

```
[Row(INFANT_ALIVE_AT_REPORT=0, BIRTH_PLACE=1, MOTHER_AGE_YEARS=13, FATHER_COMBINED_AGE=99, CIG_BEFORE=0, CIG_1_TRI=0, CIG_2_TRI=0, CIG_3_TRI=0, MOTHER_HEIGHT_IN=62, MOTHER_PRE_WEIGHT=218, MOTHER_DELIVERY_WEIGHT=240, MOTHER_WEIGHT_GAIN=22, DIABETES_PRE=0, DIABETES_GEST=0, HYP_TENS_PRE=0, HYP_TENS_GEST=0, PREV_BIRTH_PRETERM=0, BIRTH_PLACE_VEC=SparseVector(9, {1: 1.0}), features=SparseVector(24, {0: 13.0, 1: 99.0, 6: 62.0, 7: 218.0, 8: 240.0, 9: 22.0, 16: 1.0}), rawPrediction=DenseVector([0.9171, -0.9171]), probability=DenseVector([0.7145, 0.2855]), prediction=0.0)]
```

`probability` holds the predicted probabilities of the two classes (label 0 and label 1), and `prediction` is the predicted label.
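How the fields in that row relate: for binary logistic regression, Spark stores the margin $m = \theta^T x$ (including the intercept) as `rawPrediction` $= [-m, m]$, sets `probability` $= [1 - g(m),\ g(m)]$ with $g$ the sigmoid, and predicts 1.0 when $g(m)$ exceeds the default threshold 0.5. The sketch below recomputes the probability from the printed `rawPrediction`; the numbers are copied from the output above and are already rounded, so agreement is only to about four decimals.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

# rawPrediction printed for the first test row: [-margin, margin]
raw_prediction = [0.9171, -0.9171]
margin = raw_prediction[1]

p1 = sigmoid(margin)                    # P(INFANT_ALIVE_AT_REPORT = 1)
probability = [1.0 - p1, p1]
prediction = 1.0 if p1 > 0.5 else 0.0   # default threshold 0.5

print([round(p, 4) for p in probability], prediction)
```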

3. Model evaluation
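`areaUnderROC`, the first metric reported by the evaluator, can be read as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, with ties counting one half. The pure-Python sketch below computes it that way on made-up scores; it illustrates the metric only, since Spark itself integrates the ROC curve rather than comparing pairs.

```python
def auc_pairwise(scores, labels):
    # Fraction of (positive, negative) pairs ranked correctly; ties count 0.5.
    # O(P * N) comparisons: fine for a toy example, not for large data.
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pos) * len(neg))

scores = [0.10, 0.40, 0.35, 0.80]   # e.g. P(y=1) taken from a probability column
labels = [0, 0, 1, 1]
print(auc_pairwise(scores, labels))  # → 0.75
```

Only the ranking of the scores matters here, which is why the probability vector and the raw margins give the same AUC.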

```python
import pyspark.ml.evaluation as ev

# Score each test row by its probability vector (element 1, P(y=1), is used)
evaluator = ev.BinaryClassificationEvaluator(rawPredictionCol='probability',
                                             labelCol='INFANT_ALIVE_AT_REPORT')

print(evaluator.evaluate(test_model, {evaluator.metricName: 'areaUnderROC'}))
print(evaluator.evaluate(test_model, {evaluator.metricName: 'areaUnderPR'}))
```

The results are:

```
0.7368260192094396
0.7100164930103934
```

5. Discrete Features as Model Input

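In industry it is more common to feed LR a very large number of discrete features. Using discrete features as model input has the following advantages:

1. LR is a linear model. Discretizing a single continuous variable turns it into N indicator features, each with its own weight. This effectively adds non-linearity to the model, increasing its expressive power and improving the fit.

2. Discretized features are highly robust to outliers: an extreme value only switches on the indicator of the last bucket instead of being multiplied by a weight at its full magnitude.

3. LR's core computation is a vector dot product. Discretized (one-hot) features are sparse, so only the non-zero entries need to be multiplied, which speeds up computation.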
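Points 1 and 2 can be illustrated with a small bucketing sketch in plain Python: a continuous value is mapped to one of N buckets and then one-hot encoded, so each bucket gets its own weight in LR, and an extreme value only switches on the last bucket. The bucket boundaries for `MOTHER_AGE_YEARS` are hypothetical. In Spark the corresponding transformers are `pyspark.ml.feature.Bucketizer` (fixed splits) and `QuantileDiscretizer` (quantile-based splits); unlike the clamping chosen for this sketch, `Bucketizer` expects the splits to cover every value, e.g. with `-inf`/`inf` endpoints.

```python
import bisect

def bucketize(value, splits):
    # Index i of the bucket [splits[i], splits[i+1]) that contains value.
    # Values beyond the outer splits are clamped into the first/last bucket,
    # so an outlier such as 999 just lands in the top bucket.
    idx = bisect.bisect_right(splits, value) - 1
    return min(max(idx, 0), len(splits) - 2)

def one_hot(index, size):
    # N indicator features: exactly one is 1, so each bucket gets its own weight
    vec = [0] * size
    vec[index] = 1
    return vec

# Hypothetical bucket boundaries for MOTHER_AGE_YEARS: 4 buckets
age_splits = [0, 20, 30, 40, 120]
n_buckets = len(age_splits) - 1

for age in [13, 25, 38, 999]:
    print(age, one_hot(bucketize(age, age_splits), n_buckets))
```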

References:

1. Data download:

http://www.tomdrabas.com/data/LearningPySpark/births_train.csv.gz

2. Coursera: Machine Learning course

https://www.coursera.org/learn/machine-learning/resources/Zi29t

3. 《数据挖掘与数据化运营实战》 (Data Mining and Data-Driven Operations in Practice)

4. https://testerhome.com/topics/11064/show_wechat
