TensorFlow 2.0 Study Notes (1): Getting Started

Contents

1 A simple handwritten digit recognition program

2 A slightly more complex handwritten digit recognition program

1 A simple handwritten digit recognition program

```python
import tensorflow as tf
import numpy as np

# Load and prepare the MNIST dataset online (downloaded on first use):
# mnist = tf.keras.datasets.mnist
# (x_train, y_train), (x_test, y_test) = mnist.load_data()

def load_mnist(path):
    """Load a locally downloaded copy of the MNIST dataset (mnist.npz)."""
    with np.load(path) as f:
        x_train, y_train = f['x_train'], f['y_train']
        x_test, y_test = f['x_test'], f['y_test']
    return (x_train, y_train), (x_test, y_test)

(x_train, y_train), (x_test, y_test) = load_mnist("mnist.npz")
x_train, x_test = x_train / 255.0, x_test / 255.0  # Scale pixel values from integers in [0, 255] to floats in [0, 1]
```
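The two preprocessing steps (the division by 255.0 above and the `Flatten` layer at the start of the model) can be traced on a toy input. A minimal pure-Python sketch, assuming a hypothetical 2x3 grayscale image in place of a real 28x28 MNIST image; `normalize` and `flatten` are illustrative helpers, not TensorFlow functions:

```python
def normalize(image):
    """Scale integer pixel values in [0, 255] to floats in [0, 1], like x / 255.0."""
    return [[pixel / 255.0 for pixel in row] for row in image]

def flatten(image):
    """Concatenate the rows into one vector (row-major order), like tf.keras.layers.Flatten."""
    return [pixel for row in image for pixel in row]

# Hypothetical 2x3 grayscale image (a real MNIST image is 28x28, i.e. 784 values)
image = [[0, 51, 255],
         [102, 204, 0]]

vector = flatten(normalize(image))
print(len(vector))  # 6 here; 28 * 28 = 784 for MNIST
print(vector)       # → [0.0, 0.2, 1.0, 0.4, 0.8, 0.0]
```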

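```python
# Stack the layers in a tf.keras.Sequential container; by default each layer's output is the next layer's input
model = tf.keras.models.Sequential([
    tf.keras.layers.Flatten(input_shape=(28, 28)),  # Flatten each 28x28 image into a 784-element vector
    tf.keras.layers.Dense(128, activation='relu'),  # Dense = fully connected layer: 128 output units, ReLU activation
    tf.keras.layers.Dropout(0.2),  # During training, randomly sets 20% of the input units to 0 at each update, which helps prevent overfitting
    tf.keras.layers.Dense(10, activation='softmax')  # Output layer: softmax gives one probability per digit class 0-9
])
```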
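What the `Dense` and `Dropout` layers compute can be sketched in plain Python. This assumes a hypothetical tiny network (3 inputs, 4 hidden units, 2 classes) with made-up weights; `dense`, `relu`, `softmax` and `dropout` are illustrative helpers. The dropout helper follows the inverted-dropout scheme that Keras documents for `Dropout`: kept units are scaled by 1 / (1 - rate) during training, and the layer passes inputs through unchanged at inference time.

```python
import math
import random

def dense(x, weights, bias, activation=None):
    """Fully connected layer: y_j = activation(sum_i x_i * W[i][j] + b_j)."""
    out = [sum(x[i] * weights[i][j] for i in range(len(x))) + bias[j]
           for j in range(len(bias))]
    return activation(out) if activation else out

def relu(v):
    return [max(0.0, a) for a in v]

def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in v]
    total = sum(exps)
    return [e / total for e in exps]

def dropout(v, rate, training, rng=random):
    """Inverted dropout: in training, zero each unit with probability `rate`
    and scale survivors by 1 / (1 - rate); at inference, return the input unchanged."""
    if not training:
        return list(v)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in v]

# Hypothetical tiny network: 3 inputs -> 4 hidden units -> 2 classes
x = [0.5, -1.0, 2.0]
w1 = [[0.1, -0.2, 0.3, 0.0],
      [0.4, 0.1, -0.5, 0.2],
      [0.0, 0.3, 0.1, -0.1]]
b1 = [0.0, 0.1, 0.0, 0.0]
w2 = [[0.2, -0.3], [0.5, 0.1], [-0.4, 0.2], [0.3, 0.3]]
b2 = [0.0, 0.0]

hidden = dense(x, w1, b1, relu)
hidden = dropout(hidden, rate=0.2, training=False)  # inference: nothing is dropped
probs = dense(hidden, w2, b2, softmax)
print(hidden)
print(probs)  # two class probabilities that sum to 1
```

Scaling the surviving units during training keeps each unit's expected value unchanged, which is why no rescaling is needed when the trained model is evaluated.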

```python
# Choose an optimizer and a loss function for training:
model.compile(optimizer='adam',
              loss='sparse_categorical_crossentropy',
              metrics=['accuracy'])
```
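The `sparse_categorical_crossentropy` loss and the `accuracy` metric chosen in `compile` can be sketched for a single batch. The probabilities and labels below are hypothetical; "sparse" means the labels are integer class indices (as in `y_train`), not one-hot vectors. The sketch omits the small epsilon clipping Keras applies to avoid log(0).

```python
import math

def sparse_categorical_crossentropy(label, probs):
    """Loss for one sample: -log(probability assigned to the true class).
    `label` is an integer class index, not a one-hot vector (hence "sparse")."""
    return -math.log(probs[label])

def sparse_categorical_accuracy(labels, batch_probs):
    """Fraction of samples whose highest-probability class equals the label."""
    hits = sum(1 for y, p in zip(labels, batch_probs) if p.index(max(p)) == y)
    return hits / len(labels)

# Hypothetical softmax outputs for 3 samples over 3 classes
batch_probs = [[0.7, 0.2, 0.1],
               [0.1, 0.8, 0.1],
               [0.3, 0.3, 0.4]]
labels = [0, 1, 0]

losses = [sparse_categorical_crossentropy(y, p) for y, p in zip(labels, batch_probs)]
mean_loss = sum(losses) / len(losses)  # the per-sample losses are averaged over the batch
print(round(mean_loss, 4))
print(sparse_categorical_accuracy(labels, batch_probs))  # 2 of 3 samples correct
```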

# 训练并验证模型

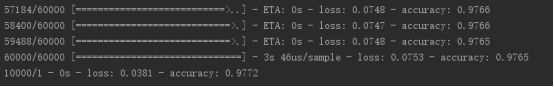

model.fit(x_train, y_train, epochs=5)

model.evaluate(x_test, y_test, verbose=2) # Verbosity mode. 0 = silent, 1 = progress bar, 2 = loss & acc

model.save_weights('weight/my_weights', save_format='tf') # 保存模型输出:

2 A slightly more complex handwritten digit recognition program

```python
import tensorflow as tf
from tensorflow.keras.layers import Dense, Flatten, Conv2D
from tensorflow.keras import Model
import numpy as np

# Online version (downloads MNIST on first use):
# mnist = tf.keras.datasets.mnist
# (x_train, y_train), (x_test, y_test) = mnist.load_data()

def load_mnist(path):
    """Load a locally downloaded copy of the MNIST dataset (mnist.npz)."""
    with np.load(path) as f:
        x_train, y_train = f['x_train'], f['y_train']
        x_test, y_test = f['x_test'], f['y_test']
    return (x_train, y_train), (x_test, y_test)

(x_train, y_train), (x_test, y_test) = load_mnist("mnist.npz")
x_train, x_test = x_train / 255.0, x_test / 255.0  # Scale pixel values to [0, 1]

# Add a channels dimension: (N, 28, 28) -> (N, 28, 28, 1), the channels-last layout Conv2D expects
x_train = x_train[..., tf.newaxis]
x_test = x_test[..., tf.newaxis]
```

```python
# Use tf.data to shuffle the dataset and split it into batches:
train_ds = tf.data.Dataset.from_tensor_slices((x_train, y_train)).shuffle(10000).batch(32)
test_ds = tf.data.Dataset.from_tensor_slices((x_test, y_test)).batch(32)
```
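`shuffle(10000)` does not shuffle all 60000 training images at once: tf.data keeps a buffer of 10000 elements, emits a randomly chosen one and refills the buffer from the input. A minimal pure-Python sketch of that buffered shuffle followed by batching, assuming a 10-element stand-in dataset; `shuffle` and `batch` here are hypothetical helpers that mimic, not reproduce, `Dataset.shuffle` and `Dataset.batch` (with its default `drop_remainder=False`):

```python
import random

def shuffle(elements, buffer_size, rng=random):
    """Buffered shuffle: hold up to `buffer_size` elements, emit a random one,
    then refill from the input stream."""
    buffer = []
    for item in elements:
        buffer.append(item)
        if len(buffer) == buffer_size:
            idx = rng.randrange(len(buffer))
            buffer[idx], buffer[-1] = buffer[-1], buffer[idx]  # move the pick to the end
            yield buffer.pop()
    rng.shuffle(buffer)  # input exhausted: drain the remaining buffer in random order
    yield from buffer

def batch(elements, batch_size):
    """Group consecutive elements; the final batch may be smaller."""
    current = []
    for item in elements:
        current.append(item)
        if len(current) == batch_size:
            yield current
            current = []
    if current:
        yield current

batches = list(batch(shuffle(range(10), buffer_size=4, rng=random.Random(42)), batch_size=3))
print([len(b) for b in batches])  # → [3, 3, 3, 1]
print(batches)
```

With the real data, `train_ds` yields 60000 / 32 = 1875 batches per epoch and `test_ds` yields 313 batches, the last holding 10000 - 312 * 32 = 16 images. Because the buffer is smaller than the dataset, an element near the end of the data can never be emitted near the start of an epoch; a larger buffer mixes more thoroughly at the cost of memory.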

```python
class MyModel(Model):
    def __init__(self):
        super(MyModel, self).__init__()
        self.conv1 = Conv2D(32, 3, activation='relu')  # 32 filters, 3x3 kernel
        self.flatten = Flatten()
        self.d1 = Dense(128, activation='relu')
        self.d2 = Dense(10, activation='softmax')  # one probability per digit class

    def call(self, x):
        # Forward pass: convolution -> flatten -> hidden Dense -> output Dense
        x = self.conv1(x)
        x = self.flatten(x)
        x = self.d1(x)
        return self.d2(x)

model = MyModel()
```
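The shapes flowing through `MyModel` follow from `Conv2D`'s defaults (`padding='valid'`, stride 1): a 3x3 kernel shrinks each 28x28 image to 26x26, with one feature map per filter. A minimal pure-Python sketch, assuming a hypothetical 4x4 image and a hand-picked vertical-edge kernel; `conv2d_valid` and `count_params` are illustrative helpers. Like TensorFlow's `Conv2D`, the convolution is computed as a cross-correlation (the kernel is not flipped).

```python
def conv2d_valid(image, kernel, bias=0.0):
    """Single-channel 2D convolution as Conv2D computes it (cross-correlation,
    'valid' padding, stride 1): the output is (H - kh + 1) x (W - kw + 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(image[r + i][c + j] * kernel[i][j]
                 for i in range(kh) for j in range(kw)) + bias
             for c in range(out_w)]
            for r in range(out_h)]

def count_params(input_shape=(28, 28, 1), filters=32, k=3, hidden=128, classes=10):
    """Conv output shape, flattened size and per-layer parameter counts (weights + biases)."""
    h, w, c = input_shape
    conv_out = (h - k + 1, w - k + 1, filters)       # (26, 26, 32)
    conv_params = k * k * c * filters + filters      # one 3x3x1 kernel + bias per filter
    flat = conv_out[0] * conv_out[1] * conv_out[2]   # inputs to d1
    d1_params = flat * hidden + hidden
    d2_params = hidden * classes + classes
    return conv_out, flat, conv_params, d1_params, d2_params

# Hypothetical 4x4 image with a vertical edge before the last column
image = [[0, 0, 0, 1],
         [0, 0, 0, 1],
         [0, 0, 0, 1],
         [0, 0, 0, 1]]
kernel = [[-1, 0, 1],
          [-1, 0, 1],
          [-1, 0, 1]]
print(conv2d_valid(image, kernel))  # → [[0.0, 3.0], [0.0, 3.0]]
print(count_params())
```

By this count the model has 2,770,634 trainable parameters, 2,769,024 of them in `d1`, because flattening the 26x26x32 feature maps produces 21632 inputs. These totals should match what `model.summary()` reports once the model has been built (for example, after it has been called on a batch).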

```python
# Choose an optimizer and a loss function for training:
loss_object = tf.keras.losses.SparseCategoricalCrossentropy()
optimizer = tf.keras.optimizers.Adam()

# Choose metrics to track the model's loss and accuracy
train_loss = tf.keras.metrics.Mean(name='train_loss')
train_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='train_accuracy')
test_loss = tf.keras.metrics.Mean(name='test_loss')
test_accuracy = tf.keras.metrics.SparseCategoricalAccuracy(name='test_accuracy')
```
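Each metric object accumulates values across calls until it is reset, which is why the training loop calls `reset_states()` at the start of every epoch. A minimal sketch of that behaviour in plain Python; `StreamingMean` and `StreamingSparseAccuracy` are hypothetical classes that imitate `tf.keras.metrics.Mean` and `SparseCategoricalAccuracy`, not their actual implementation, and the batch losses are made up:

```python
class StreamingMean:
    """Running average: calling it adds a value, result() reads the average,
    reset_states() clears the accumulated state."""

    def __init__(self):
        self.reset_states()

    def reset_states(self):
        self.total, self.count = 0.0, 0

    def __call__(self, value):
        self.total += value
        self.count += 1

    def result(self):
        return self.total / self.count if self.count else 0.0


class StreamingSparseAccuracy(StreamingMean):
    """Running accuracy: each sample adds 1.0 if argmax(probs) == label, else 0.0."""

    def __call__(self, labels, batch_probs):
        for y, p in zip(labels, batch_probs):
            super().__call__(1.0 if p.index(max(p)) == y else 0.0)


loss_metric = StreamingMean()
acc_metric = StreamingSparseAccuracy()
for batch_loss in [0.9, 0.6, 0.3]:  # hypothetical per-batch losses within one epoch
    loss_metric(batch_loss)
acc_metric([0, 1], [[0.9, 0.1], [0.8, 0.2]])  # first prediction right, second wrong
epoch_loss, epoch_acc = loss_metric.result(), acc_metric.result()
print(epoch_loss, epoch_acc)
loss_metric.reset_states()  # what the loop does at the start of each epoch
print(loss_metric.result())
```

Since `train_loss(loss)` receives one batch-averaged loss per call, the reported epoch loss is the mean of the per-batch losses.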

```python
# Train the model with tf.GradientTape:
@tf.function
def train_step(images, labels):
    with tf.GradientTape() as tape:
        predictions = model(images)  # forward pass, recorded by the tape
        loss = loss_object(labels, predictions)
    gradients = tape.gradient(loss, model.trainable_variables)  # d(loss)/d(weight) for every weight
    optimizer.apply_gradients(zip(gradients, model.trainable_variables))
    train_loss(loss)
    train_accuracy(labels, predictions)
```
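`tape.gradient` returns d(loss)/d(weight) for every trainable variable, and `apply_gradients` moves each weight against its gradient. To show one such step end to end without TensorFlow, here is a sketch on a hypothetical linear softmax classifier (not `MyModel`), with the gradient derived by hand from the identity d(loss)/d(logit_k) = p_k - [k == label] for softmax followed by cross-entropy. It uses plain SGD with a made-up learning rate instead of the Adam optimizer used above.

```python
import math

def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    exps = [math.exp(a - m) for a in z]
    s = sum(exps)
    return [e / s for e in exps]

def loss_and_grads(w, b, x, label):
    """Forward pass of a tiny linear softmax classifier plus hand-derived gradients."""
    n_classes = len(b)
    logits = [sum(x[i] * w[i][k] for i in range(len(x))) + b[k] for k in range(n_classes)]
    p = softmax(logits)
    loss = -math.log(p[label])
    dlogits = [p[k] - (1.0 if k == label else 0.0) for k in range(n_classes)]
    grad_w = [[x[i] * dlogits[k] for k in range(n_classes)] for i in range(len(x))]
    grad_b = dlogits
    return loss, grad_w, grad_b

def sgd_step(w, b, grad_w, grad_b, lr=0.5):
    """Plain SGD update: weight <- weight - lr * gradient."""
    new_w = [[w[i][k] - lr * grad_w[i][k] for k in range(len(b))] for i in range(len(w))]
    new_b = [b[k] - lr * grad_b[k] for k in range(len(b))]
    return new_w, new_b

# Hypothetical data: one 2-feature sample of class 1, 3 classes, weights start at zero
x, label = [1.0, 2.0], 1
w = [[0.0] * 3 for _ in range(2)]
b = [0.0] * 3

loss_before, gw, gb = loss_and_grads(w, b, x, label)
w, b = sgd_step(w, b, gw, gb)
loss_after, _, _ = loss_and_grads(w, b, x, label)
print(loss_before, loss_after)  # the loss drops after one step
```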

```python
# Test the model (forward pass only, no weight updates):
@tf.function
def test_step(images, labels):
    predictions = model(images)
    t_loss = loss_object(labels, predictions)
    test_loss(t_loss)
    test_accuracy(labels, predictions)

EPOCHS = 5

for epoch in range(EPOCHS):
    # Reset the metrics at the start of each epoch
    train_loss.reset_states()
    train_accuracy.reset_states()
    test_loss.reset_states()
    test_accuracy.reset_states()

    for images, labels in train_ds:
        train_step(images, labels)

    for test_images, test_labels in test_ds:
        test_step(test_images, test_labels)

    template = 'Epoch {}, Loss: {}, Accuracy: {}, Test Loss: {}, Test Accuracy: {}'
    print(template.format(epoch + 1,
                          train_loss.result(),
                          train_accuracy.result() * 100,
                          test_loss.result(),
                          test_accuracy.result() * 100))
```

Reference: https://tensorflow.google.cn/tutorials/quickstart/beginner