A Simple Application of Decision Trees

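A decision tree is a simple but widely used classifier. Once a tree has been built from training data, it can classify unseen data efficiently. Decision trees have two main advantages: 1) the model is readable and descriptive, which helps manual analysis; 2) it is efficient: the tree is built once and reused many times, and each prediction needs at most as many comparisons as the tree is deep. However, a decision tree predicts poorly on data that are not represented in the training set.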
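To make the depth bound concrete, here is a minimal sketch that walks a hand-written tree over the weather attributes used in this post. The tree is written by hand for illustration and is not one learned by scikit-learn; `TREE`, `tree_depth`, and `predict` are hypothetical names that do not appear in the original code.

```python
# Hand-written tree (an illustrative assumption, not learned from data).
# Internal nodes are (attribute, {value: subtree}); leaves are label strings.
TREE = ("Outlook", {
    "sunny": ("Humidity", {"high": "no", "normal": "yes"}),
    "overcast": "yes",
    "rain": ("Wind", {"false": "yes", "true": "no"}),
})


def tree_depth(node):
    """Return the number of attribute tests on the longest root-to-leaf path."""
    if isinstance(node, str):
        return 0
    _, branches = node
    return 1 + max(tree_depth(child) for child in branches.values())


def predict(node, sample):
    """Walk from the root to a leaf; return (label, number of tests performed)."""
    tests = 0
    while not isinstance(node, str):
        attribute, branches = node
        node = branches[sample[attribute]]
        tests += 1
    return node, tests


sample = {"Outlook": "rain", "Temperature": "mild", "Humidity": "high", "Wind": "true"}
label, tests = predict(TREE, sample)
print(label, tests, tree_depth(TREE))
```

The number of tests for any sample never exceeds `tree_depth(TREE)`. In this toy version, an attribute value that has no branch (for example a made-up `"foggy"` outlook) raises a `KeyError`, which is one simple way to see why inputs unlike the training data are a problem.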

from sklearn.model_selection import train_test_split

dataset = [
    ["Outlook", "Temperature", "Humidity", "Wind", "Play?"],
    ["sunny", "hot", "high", "false", "no"],
    ["sunny", "hot", "high", "true", "no"],
    ["overcast", "hot", "high", "false", "yes"],
    ["rain", "mild", "high", "false", "yes"],
    ["rain", "cool", "normal", "false", "yes"],
    ["rain", "cool", "normal", "true", "no"],
    ["overcast", "cool", "normal", "true", "yes"],
    ["sunny", "mild", "high", "false", "no"],
    ["sunny", "cool", "normal", "false", "yes"],
    ["rain", "mild", "normal", "false", "yes"],
    ["sunny", "mild", "normal", "true", "yes"],
    ["overcast", "mild", "high", "true", "yes"],
    ["overcast", "hot", "normal", "false", "yes"],
    ["rain", "mild", "high", "true", "no"],
]

'''
Encoding the data:
Column 1: sunny -> 0, overcast -> 1, rain -> 2
Column 2: hot -> 0, mild -> 1, cool -> 2
Column 3: high -> 0, normal -> 1
Column 4: false -> 0, true -> 1
Column 5: no -> 0, yes -> 1 (the value to predict)
'''

def dealdata():
    # data_name maps (column index, encoded value) back to the original string
    data_name = {}
    # data holds the encoded rows
    data = []
    # Skip only the header row so that every sample is encoded
    for row in dataset[1:]:
        tmp = []
        for i, item in enumerate(row):
            if item in ("sunny", "hot", "high", "false", "no"):
                code = 0
            elif item in ("overcast", "mild", "normal", "true", "yes"):
                code = 1
            elif item in ("rain", "cool"):
                code = 2
            else:
                raise ValueError("unexpected value: %r" % (item,))
            tmp.append(code)
            data_name[(i, code)] = item
        data.append(tmp)
    return data, data_name

# Build a readable rule for each test sample
# (reads the module-level data_name returned by dealdata())
def rules_format(xc_predict, xc_test, xd_test):
    title = dataset[0]
    rules = []
    for i, row in enumerate(xd_test):
        rule = "If "
        item = row.tolist()
        for j, d in enumerate(item):
            # Look up the original string for column j and encoded value d
            rule += title[j]
            rule += " = "
            rule += data_name[(j, d)]
            if j != len(item) - 1:
                rule += " and "
        rule += " then "
        rule += title[-1]
        rule += " = "
        if xc_predict[i]:
            rule += "yes."
        else:
            rule += "no."
        # Compare the prediction with the true label
        if xc_predict[i] == xc_test[i]:
            rule += " predict correct!"
        else:
            rule += " predict error!"
        rules.append(rule)
    return rules

import numpy as np

from sklearn.tree import DecisionTreeClassifier

if __name__ == "__main__":

data,data_name = dealdata()

#产生决策树

mode = DecisionTreeClassifier(random_state=14)

x_d = np.zeros((15,4),dtype="int")

x_c = np.zeros((15,),dtype="bool")

for i,row in enumerate(data):

x_d[i] = row[:-1]

x_c[i] = row[-1] == 1

#划分训练集和测试集

xd_train,xd_test,xc_train,xc_test=train_test_split(x_d,x_c)

#决策树训练

mode.fit(xd_train,xc_train)

#决策树预测

xc_predict = mode.predict(xd_test)

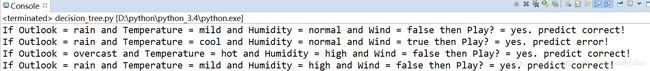

rules = rules_format(xc_predict,xc_test,xd_test)

for rule in rules:

print(rule)
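The encoding in `dealdata` works because no value name is shared between columns. As a possible alternative, the column-by-column mapping described in the comment block can be written as one lookup table per column. This is only a sketch: `ENCODING`, `encode_row`, and `decode_row` are hypothetical names that are not part of the original script.

```python
# One lookup table per column, in the same order as the dataset header.
ENCODING = [
    {"sunny": 0, "overcast": 1, "rain": 2},  # Outlook
    {"hot": 0, "mild": 1, "cool": 2},        # Temperature
    {"high": 0, "normal": 1},                # Humidity
    {"false": 0, "true": 1},                 # Wind
    {"no": 0, "yes": 1},                     # Play? (label)
]

# Reverse tables for turning encoded values back into the original strings.
DECODING = [{code: name for name, code in table.items()} for table in ENCODING]


def encode_row(row):
    """Encode one row of strings into integers, column by column."""
    return [table[value] for table, value in zip(ENCODING, row)]


def decode_row(codes):
    """Turn a row of integer codes back into the original strings."""
    return [table[code] for table, code in zip(DECODING, codes)]


row = ["rain", "cool", "normal", "true", "no"]
codes = encode_row(row)
print(codes)
print(decode_row(codes))
```

With explicit tables, an unknown value fails immediately with a `KeyError` naming the offending string, and the reverse tables play the role that `data_name` plays in the script above.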