MR预处理数据导入Hive表,再将Hive表数据导出后与HBase添加映射表关系并导入HBase表,根据HBaseAPI查询数据

本题是一个综合练习题目总共包括以下部分:

1.数据的预处理阶段

2.数据的入库操作阶段

3.数据的分析阶段

4.数据保存到数据库阶段

5.数据的查询显示阶段

给出数据格式表和数据示例,请先阅读数据说明,再做相应题目。

video.txt

user.txt

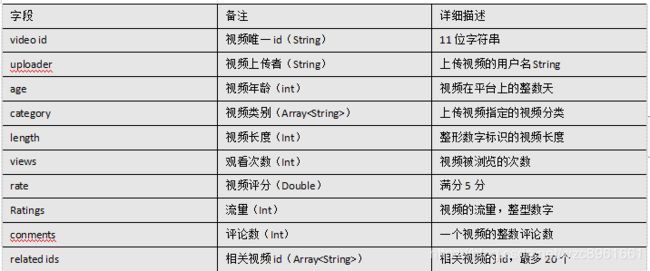

数据说明:

表1-1 视频表

表1-2 用户表

原始数据示例:

qR8WRLrO2aQ:mienge:406:People & Blogs:599:2788:5:1:0:4UUEKhr6vfA:zvDPXgPiiWI:TxP1eXHJQ2Q:k5Kb1K0zVxU:hLP_mJIMNFg:tzNRSSTGF4o:BrUGfqJANn8:OVIc-mNxqHc:gdxtKvNiYXc:bHZRZ-1A-qk:GUJdU6uHyzU:eyZOjktUb5M:Dv15_9gnM2A:lMQydgG1N2k:U0gZppW_-2Y:dUVU6xpMc6Y:ApA6VEYI8zQ:a3_boc9Z_Pc:N1z4tYob0hM:2UJkU2neoBs

预处理之后的数据示例:

qR8WRLrO2aQ:mienge:406:People & Blogs:599:2788:5:1:0:4UUEKhr6vfA,zvDPXgPiiWI,TxP1eXHJQ2Q,k5Kb1K0zVxU,hLP_mJIMNFg,tzNRSSTGF4o,BrUGfqJANn8,OVIc-mNxqHc,gdxtKvNiYXc,bHZRZ-1A-qk,GUJdU6uHyzU,eyZOjktUb5M,Dv15_9gnM2A,lMQydgG1N2k,U0gZppW_-2Y,dUVU6xpMc6Y,ApA6VEYI8zQ,a3_boc9Z_Pc,N1z4tYob0hM,2UJkU2neoBs

1、对原始数据进行预处理,格式为上面给出的预处理之后的示例数据。

通过观察原始数据形式,可以发现,每个字段之间使用“:”分割,视频可以有多个视频类别,类别之间&符号分割,且分割的两边有空格字符,同时相关视频也是可以有多个,多个相关视频也是用“:”进行分割。为了分析数据时方便,我们首先进行数据重组清洗操作。

即:将每条数据的类别用“,”分割,同时去掉两边空格,多个“相关视频id”也使用“,”进行分割

2、把预处理之后的数据进行入库到hive中

2.1创建数据库和表

创建数据库名字为:video

创建原始数据表:

视频表:video_ori 用户表:video_user_ori

创建ORC格式的表:

视频表:video_orc 用户表:video_user_orc

给出创建原始表语句

创建video_ori视频表:

create table video_ori(

videoId string,

uploader string,

age int,

category array,

length int,

views int,

rate float,

ratings int,

comments int,

relatedId array)

row format delimited

fields terminated by “:”

collection items terminated by “,”

stored as textfile;

创建video_user_ori用户表:

create table video_user_ori(

uploader string,

videos int,

friends int)

row format delimited

fields terminated by “,”

stored as textfile;

请写出ORC格式的建表语句:

创建video_orc表

create table video_orc(

videoId string,

uploader string,

age int,

category array,

length int,

views int,

rate float,

ratings int,

comments int,

relatedId array)

row format delimited

fields terminated by “:”

collection items terminated by “,”

stored as orc;

创建video_user_orc表:

create table video_user_orc(

uploader string,

videos int,

friends int)

row format delimited

fields terminated by “,”

stored as orc;

2.2分别导入预处理之后的视频数据到原始表video_ori和导入原始用户表的数据到video_user_ori中

请写出导入语句:

video_ori:

Load data inpath ‘/VideoData/video.txt’ into table test01. video_ori;

video_user_ori:

Load data local inpath ‘/opt/user.txt’ into table test01. video_user_ori;

2.3从原始表查询数据并插入对应的ORC表中

请写出插入语句:

video_orc:

Insert overwrite table video_orc select * from video_ori;

video_user_orc:

Insert overwrite table video_user_orc select * from video_user_ori;

3、对入库之后的数据进行hivesql查询操作

3.1从视频表中统计出视频评分为5分的视频信息,把查询结果保存到/export/rate.txt

请写出sql语句:

Hive –e “select * from test01.video_orc where rate=5;” > /export/rate.txt

3.2从视频表中统计出评论数大于100条的视频信息,把查询结果保存到/export/comments.txt

请写出sql语句:

Hive –e “select * from test01.video_orc where comments>100;” > /export/comments.txt

4、把hive分析出的数据保存到hbase中

4.1创建hive对应的数据库外部表

请写出创建rate外部表的语句:

create external table rate(

videoId string,

uploader string,

age int,

category array,

length int,

views int,

rate float,

ratings int,

comments int,

relatedId array)

row format delimited

fields terminated by “\t”

collection items terminated by “,”

stored as textfile;

请写出创建comments外部表的语句:

create external table comments(

videoId string,

uploader string,

age int,

category array,

length int,

views int,

rate float,

ratings int,

comments int,

relatedId array)

row format delimited

fields terminated by “\t”

collection items terminated by “,”

stored as textfile;

4.2加载第3步的结果数据到外部表中

请写出加载语句到rate表:

Load data local inpath ‘/export/rate.txt’ into table rate;

请写出加载语句到comments表:

Load data local inpath ‘/export/comments.txt’ into table comments;

4.3创建hive管理表与HBase进行映射

给出此步骤的语句

Hive中的rate,comments两个表分别对应hbase中的hbase_rate,hbase_comments两个表

创建hbase_rate表并进行映射:

create table hbase_rate(videoid string,uploader string,age int,category array,length int,views int,rate float,ratings int,comments int,relatedid array) stored by ‘org.apache.hadoop.hive.hbase.HBaseStorageHandler’ with serdeproperties(“hbase.columns.mapping” =“info:uploader,info:age,info:category,info:length,info:views,info:rate,info:ratings,info:comments,info:relatedid”) tblproperties(“hbase.table.name” = “hbase_rate”);

创建hbase_comments表并进行映射:

create table hbase_comments(videoid string,uploader string,age int,category array,length int,views int,rate float,ratings int,comments int,relatedid array) stored by ‘org.apache.hadoop.hive.hbase.HBaseStorageHandler’ with serdeproperties(“hbase.columns.mapping” =“info:uploader,info:age,info:category,info:length,info:views,info:rate,info:ratings,info:comments,info:relatedid”) tblproperties(“hbase.table.name” = “hbase_comments”);

4.4请写出通过insert overwrite select,插入hbase_rate表的语句

insert overwrite table hbase_rate select * from rate;

请写出通过insert overwrite select,插入hbase_comments表的语句

insert overwrite table hbase_comments select * from comments;

5.通过hbaseapi进行查询操作

5.1请使用hbaseapi 对hbase_rate表,按照通过startRowKey=1和endRowKey=100进行扫描查询出结果。

5.2请使用hbaseapi对hbase_comments表,只查询comments列的值。

5.3请使用hbaseapi 对hbase_user表,查询包含5的列值

5.4请使用hbaseapi对hbase_video表,只查询age列,并且大于700的值(使用列过滤器和列值过滤器)

5.5使用hbaseAPI创建一个命名空间hbase_result,在该命名空间下创建一张 habse_result表,列族为 info

预处理数据代码

package com.czxy.demo01;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataOutputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.NullWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.TextInputFormat;

import org.apache.hadoop.mapreduce.lib.output.TextOutputFormat;

import java.io.IOException;

import java.net.URI;

public class VideoDataSplit {

private static Configuration conf=new Configuration();

/**

* Map阶段

* 即:将每条数据的类别用“,”分割,同时去掉两边空格,多个“相关视频id”也使用“,”进行分割

*/

public static class VideoSplitMap extends Mapper {

@Override

protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {

String line = value.toString().trim().replace(" ", "");

if (line.split(":").length > 9) {

if (line.contains("&")) {

line = line.replace("&", ",");

}

String[] split = line.split(":");

StringBuffer sb = new StringBuffer();

for (int i = 0; i {

@Override

protected void reduce(NullWritable key, Iterable values, Context context) throws IOException, InterruptedException {

String str="";

for (Text value : values) {

str+=value.toString().substring(0, value.toString().length()-1)+"\r\n";

}

byte[] bytes = str.getBytes();

FileSystem fs=FileSystem.get(URI.create("hdfs://node01:8020"),conf);

FSDataOutputStream outputStream = fs.create(new Path("/VideoData/video.txt"));

outputStream.write(bytes,0,bytes.length);

outputStream.close();

}

}

/**

* 驱动

*

* @param args

*/

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {

Configuration _conf = new Configuration();

//Job job = new Job(_conf, "DeviceDataSplit");

Job job = new Job(_conf, "VideoDataSplit");

job.setJarByClass(VideoDataSplit.class);

//设置map输出类型

job.setMapperClass(VideoSplitMap.class);

job.setMapOutputKeyClass(NullWritable.class);

job.setMapOutputValueClass(Text.class);

//设置reduce输出类型

job.setReducerClass(VideoSplitReduce.class);

job.setOutputKeyClass(NullWritable.class);

job.setOutputValueClass(Text.class);

//设置输入

job.setInputFormatClass(TextInputFormat.class);

TextInputFormat.setInputPaths(job, new Path("D:\\传智专修学院课程\\2019年10月——2020年01月第三学期_大数据\\习题总结\\09-期末复习\\练习题原数据\\video.txt"));

//TextInputFormat.setInputPaths(job, new Path(args[0]));

//job.setNumReduceTasks(2);

//设置输出

job.setOutputFormatClass(TextOutputFormat.class);

TextOutputFormat.setOutputPath(job, new Path("D:\\传智专修学院课程\\2019年10月——2020年01月第三学期_大数据\\习题总结\\09-期末复习\\练习题原数据\\output"));

//TextOutputFormat.setOutputPath(job, new Path(args[1]));

System.exit(job.waitForCompletion(true) ? 0 : 1);

}

}

HBaseAPI代码

package com.czxy.demo01;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.hbase.*;

import org.apache.hadoop.hbase.client.*;

import org.apache.hadoop.hbase.filter.CompareFilter;

import org.apache.hadoop.hbase.filter.SingleColumnValueFilter;

import org.apache.hadoop.hbase.filter.SubstringComparator;

import org.apache.hadoop.hbase.filter.ValueFilter;

import org.apache.hadoop.hbase.util.Bytes;

import org.junit.jupiter.api.Test;

import java.io.IOException;

public class API {

static Configuration configuration=new Configuration();

/**

* 5.5使用hbaseAPI创建一个命名空间hbase_result,在该命名空间下创建一张 habse_result表,列族为 info

* @throws IOException

*/

@Test

public void createTable() throws IOException {

Configuration configuration = HBaseConfiguration.create();

configuration.set("hbase.zookeeper.quorum","node01:2181,node02:2181,node03:2181");

Connection connection = ConnectionFactory.createConnection(configuration);

Admin admin = connection.getAdmin();

HTableDescriptor hbase_result = new HTableDescriptor(TableName.valueOf("hbase_result"));

HColumnDescriptor info = new HColumnDescriptor("info");

hbase_result.addFamily(info);

admin.createTable(hbase_result);

admin.close();

connection.close();

}

/**

* 5.4请使用hbaseapi对hbase_video表,只查询age列,并且大于700的值(使用列过滤器和列值过滤器)

* @throws IOException

*/

@Test

public void singleFilter() throws IOException {

Configuration configuration = HBaseConfiguration.create();

configuration.set("hbase.zookeeper.quorum","node01:2181,node02:2181,node03:2181");

Connection connection = ConnectionFactory.createConnection(configuration);

Table hbase_rate = connection.getTable(TableName.valueOf("hbase_rate"));

Scan scan = new Scan();

SingleColumnValueFilter singleColumnValueFilter = new SingleColumnValueFilter("info".getBytes(),"age".getBytes(),CompareFilter.CompareOp.GREATER,"700".getBytes());

scan.setFilter(singleColumnValueFilter);

ResultScanner scanner = hbase_rate.getScanner(scan);

for (Result result : scanner) {

System.out.println("rowKey:"+Bytes.toString(result.getRow()));

System.out.println(Bytes.toString(result.getValue("info".getBytes(),"age".getBytes())));

}

hbase_rate.close();

connection.close();

}

/**

* 5.3请使用hbaseapi 对hbase_user表,查询包含5的列值

* @throws IOException

*/

@Test

public void valueFilter() throws IOException {

Configuration configuration = HBaseConfiguration.create();

configuration.set("hbase.zookeeper.quorum","node01:2181,node02:2181,node03:2181");

Connection connection = ConnectionFactory.createConnection(configuration);

Table hbase_rate = connection.getTable(TableName.valueOf("hbase_rate"));

Scan scan = new Scan();

ValueFilter valueFilter = new ValueFilter(CompareFilter.CompareOp.EQUAL, new SubstringComparator("5"));

scan.setFilter(valueFilter);

ResultScanner scanner = hbase_rate.getScanner(scan);

for (Result result : scanner) {

System.out.println(Bytes.toString(result.getRow()));

Cell[] cells = result.rawCells();

for (Cell cell : cells) {

System.out.println(Bytes.toString(CellUtil.cloneValue(cell)));

}

}

hbase_rate.close();

connection.close();

}

/**

* 5.2请使用hbaseapi对hbase_comments表,只查询comments列的值。

* @throws IOException

*/

@Test

public void HBase_comments() throws IOException {

configuration.set("hbase.zookeeper.quorum", "node01:2181,node02:2181,node03:2181");

Connection connection = ConnectionFactory.createConnection(configuration);

Table hbase_comments = connection.getTable(TableName.valueOf("hbase_comments"));

Scan scan = new Scan();

ResultScanner scanner = hbase_comments.getScanner(scan);

for (Result result : scanner) {

System.out.println(Bytes.toString(result.getValue("info".getBytes(),"comments".getBytes())));

}

hbase_comments.close();

connection.close();

}

/**

* 5.1请使用hbaseapi 对hbase_rate表,按照通过startRowKey=1和endRowKey=100进行扫描查询出结果。

* @throws IOException

*/

@Test

public void HBase_rate() throws IOException {

configuration.set("hbase.zookeeper.quorum", "node01:2181,node02:2181,node03:2181");

Connection connection = ConnectionFactory.createConnection(configuration);

Table hbase_rate = connection.getTable(TableName.valueOf("hbase_rate"));

Scan scan = new Scan();

scan.setStartRow("1".getBytes());

scan.setStopRow("100".getBytes());

ResultScanner scanner = hbase_rate.getScanner(scan);

for (Result result : scanner) {

System.out.println(Bytes.toString(result.getValue("info".getBytes(),"videoid".getBytes())));

}

hbase_rate.close();

connection.close();

}

}