DeepSpeech2 Code: Model Construction

Model Construction

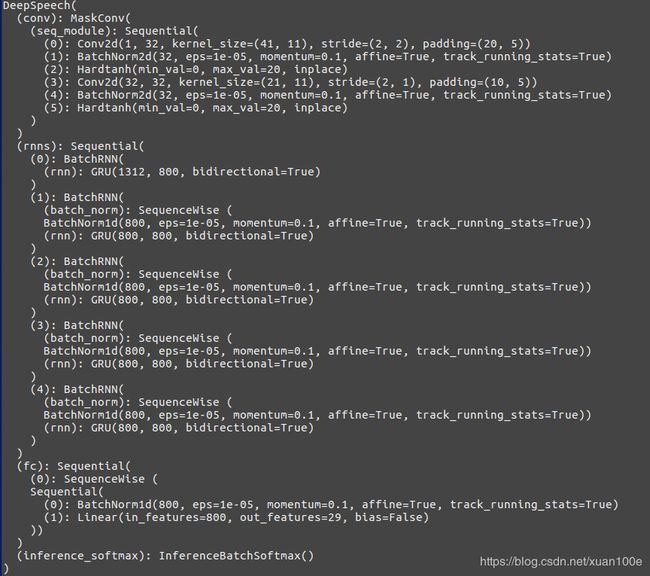

The figure above shows the overall architecture of the model.

The model consists mainly of the following parts:

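DeepSpeech model

- MaskConv: the convolutional stack, with padded time steps zeroed out
- BatchRNN: the stacked recurrent layers, with optional batch normalization
- fc: batch normalization followed by a linear layer that maps to the output classes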

The model is instantiated as follows:

    model = DeepSpeech(rnn_hidden_size=args.hidden_size,
                       nb_layers=args.hidden_layers,
                       labels=labels,
                       rnn_type=supported_rnns[rnn_type],
                       audio_conf=audio_conf,
                       bidirectional=args.bidirectional,
                       mixed_precision=args.mixed_precision)

1. MaskConv

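inputs: batch_size * 1 * mel * length (32 * 1 * 161 * n)

lengths: the true length of each sequence in the batch (32 values)

output: batch_size * 32 * 41 * n', where n' = floor((n - 1) / 2) + 1, because the first convolution uses a stride of 2 along the time axis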
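The shapes above follow from the standard convolution output-size formula. The following is a minimal sketch in plain Python, assuming the kernel, stride, and padding values of the two `Conv2d` layers shown later in this section. `conv_out_size`, `LAYERS`, and `conv_stack_shape` are hypothetical names used for illustration, not part of the repository.

```python
def conv_out_size(size, kernel, stride, padding, dilation=1):
    """Output length of one convolution along a single axis."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


# (kernel, stride, padding) per layer, each given as (frequency axis, time axis).
LAYERS = [
    ((41, 11), (2, 2), (20, 5)),
    ((21, 11), (2, 1), (10, 5)),
]


def conv_stack_shape(freq, time):
    """Propagate a (frequency, time) size through both conv layers."""
    for kernel, stride, padding in LAYERS:
        freq = conv_out_size(freq, kernel[0], stride[0], padding[0])
        time = conv_out_size(time, kernel[1], stride[1], padding[1])
    return freq, time


print(conv_stack_shape(161, 200))  # (41, 100)
```

The frequency axis shrinks from 161 to 81 and then to 41, while the time axis is halved only once, by the first layer.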

    import torch
    import torch.nn as nn


    class MaskConv(nn.Module):
        def __init__(self, seq_module):
            """
            Adds padding to the output of the module based on the given lengths. This is to ensure that the
            results of the model do not change when batch sizes change during inference.
            Input needs to be in the shape of (BxCxDxT)
            :param seq_module: The sequential module containing the conv stack.
            """
            super(MaskConv, self).__init__()
            self.seq_module = seq_module

        def forward(self, x, lengths):
            """
            :param x: The input of size BxCxDxT
            :param lengths: The actual length of each sequence in the batch
            :return: Masked output from the module
            """
            for module in self.seq_module:
                x = module(x)
                # Boolean mask on the same device as x (uint8 masks are deprecated for masked_fill).
                mask = torch.zeros(x.size(), dtype=torch.bool, device=x.device)
                for i, length in enumerate(lengths):
                    length = length.item()
                    if (mask[i].size(2) - length) > 0:
                        # Mark every time step past the true length of sequence i.
                        mask[i].narrow(2, length, mask[i].size(2) - length).fill_(True)
                # Zero the padded positions after every layer in the stack.
                x = x.masked_fill(mask, 0)
            return x, lengths
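The masking step can be illustrated without PyTorch. This is a minimal sketch on nested lists, assuming a single feature per time step; `mask_padding` is a hypothetical helper that mirrors the idea of zeroing every time step at or beyond a sequence's true length, not the repository's implementation.

```python
def mask_padding(batch, lengths):
    """Zero every time step at or beyond each sequence's true length.

    batch:   list of B sequences, each a list of T values (already padded to T).
    lengths: list of B true lengths.
    """
    masked = []
    for seq, length in zip(batch, lengths):
        masked.append([v if t < length else 0.0 for t, v in enumerate(seq)])
    return masked


batch = [[1.0, 2.0, 3.0, 4.0],
         [5.0, 6.0, 7.0, 8.0]]
print(mask_padding(batch, [4, 2]))
# [[1.0, 2.0, 3.0, 4.0], [5.0, 6.0, 0.0, 0.0]]
```

Because the padded positions are always reset to zero, the values computed for a sequence do not depend on how much padding the rest of the batch forces onto it.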

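The convolutional stack wrapped by MaskConv is defined in the model's constructor:

    self.conv = MaskConv(nn.Sequential(
        # 161 -> 81 along the frequency axis; the time axis is halved
        nn.Conv2d(1, 32, kernel_size=(41, 11), stride=(2, 2), padding=(20, 5)),
        nn.BatchNorm2d(32),
        nn.Hardtanh(0, 20, inplace=True),
        # 81 -> 41 along the frequency axis; the time axis is unchanged
        nn.Conv2d(32, 32, kernel_size=(21, 11), stride=(2, 1), padding=(10, 5)),
        nn.BatchNorm2d(32),
        nn.Hardtanh(0, 20, inplace=True)
    ))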

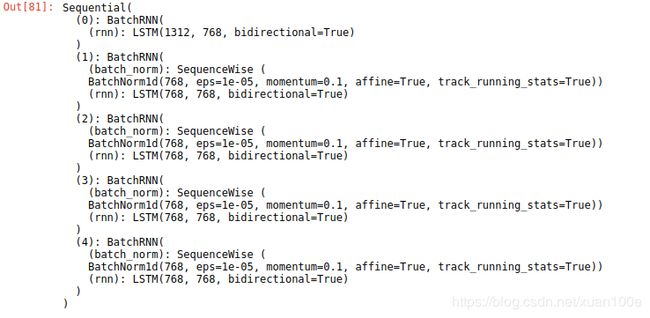
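Each convolution is followed by `nn.Hardtanh(0, 20)`, which acts as a ReLU clipped at 20. A minimal scalar sketch of that activation, where `hardtanh` is a hypothetical stand-in for the PyTorch module:

```python
def hardtanh(x, min_val=0.0, max_val=20.0):
    """Clamp x to the range [min_val, max_val]."""
    return max(min_val, min(max_val, x))


print([hardtanh(v) for v in (-3.0, 7.5, 42.0)])  # [0.0, 7.5, 20.0]
```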

2. BatchRNN

    class BatchRNN(nn.Module):
        def __init__(self, input_size, hidden_size, rnn_type=nn.LSTM, bidirectional=False, batch_norm=True):
            super(BatchRNN, self).__init__()
            self.input_size = input_size
            self.hidden_size = hidden_size
            self.bidirectional = bidirectional
            self.batch_norm = SequenceWise(nn.BatchNorm1d(input_size)) if batch_norm else None
            self.rnn = rnn_type(input_size=input_size, hidden_size=hidden_size,
                                bidirectional=bidirectional, bias=True)
            self.num_directions = 2 if bidirectional else 1

        def flatten_parameters(self):
            self.rnn.flatten_parameters()

        def forward(self, x, output_lengths):
            if self.batch_norm is not None:
                x = self.batch_norm(x)
            # Pack so the RNN skips padded steps; by default the lengths must be sorted in decreasing order.
            x = nn.utils.rnn.pack_padded_sequence(x, output_lengths)
            x, h = self.rnn(x)
            x, _ = nn.utils.rnn.pad_packed_sequence(x)
            if self.bidirectional:
                x = x.view(x.size(0), x.size(1), 2, -1).sum(2).view(x.size(0), x.size(1), -1)  # (TxNxH*2) -> (TxNxH) by sum
            return x
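The last step of `forward` merges the two directions of a bidirectional RNN by summing them instead of keeping them concatenated. A minimal sketch for a single output vector, assuming the forward half comes first and the backward half second, as in PyTorch's concatenated output; `sum_directions` is a hypothetical helper:

```python
def sum_directions(vec, hidden_size):
    """Add the forward half and the backward half of a 2*H output vector."""
    assert len(vec) == 2 * hidden_size
    forward, backward = vec[:hidden_size], vec[hidden_size:]
    return [f + b for f, b in zip(forward, backward)]


print(sum_directions([1, 2, 3, 4], hidden_size=2))  # [4, 6]
```

Summing keeps the output width at H, so every layer emits the same feature size regardless of whether it is bidirectional.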

BatchRNN relies on the SequenceWise wrapper to apply batch normalization:

    class SequenceWise(nn.Module):
        def __init__(self, module):
            """
            Collapses input of dim T*N*H to (T*N)*H, and applies to a module.
            Allows handling of variable sequence lengths and minibatch sizes.
            :param module: Module to apply input to.
            """
            super(SequenceWise, self).__init__()
            self.module = module

        def forward(self, x):
            t, n = x.size(0), x.size(1)
            x = x.view(t * n, -1)
            x = self.module(x)
            x = x.view(t, n, -1)
            return x

        def __repr__(self):
            tmpstr = self.__class__.__name__ + ' (\n'
            tmpstr += self.module.__repr__()
            tmpstr += ')'
            return tmpstr
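The collapse-apply-restore pattern of SequenceWise can be sketched on nested lists. This is an illustration under the assumption that the wrapped function maps a flat batch of rows to a flat batch of rows; `sequence_wise` and `double_rows` are hypothetical names.

```python
def sequence_wise(x, fn):
    """Apply fn to a T x N x H nested list by flattening it to (T*N) x H first."""
    t, n = len(x), len(x[0])
    flat = [row for step in x for row in step]          # (T*N) x H
    out = fn(flat)                                      # (T*N) x H'
    return [out[i * n:(i + 1) * n] for i in range(t)]   # T x N x H'


def double_rows(rows):
    """Stand-in for a module that works on a 2-D batch of feature rows."""
    return [[2 * v for v in row] for row in rows]


x = [[[1, 2], [3, 4], [5, 6]],
     [[7, 8], [9, 10], [11, 12]]]   # T=2, N=3, H=2
y = sequence_wise(x, double_rows)
print(y[1][2])  # [22, 24]
```

Flattening the time and batch axes lets a module that expects 2-D input, such as `nn.BatchNorm1d` or `nn.Linear`, treat every time step of every sequence as one sample.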

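The recurrent layers are then stacked in the model's constructor:

    # Requires: from collections import OrderedDict
    rnns = []
    # The first layer reads the conv features directly and skips batch normalization.
    rnn = BatchRNN(input_size=rnn_input_size, hidden_size=rnn_hidden_size, rnn_type=rnn_type,
                   bidirectional=bidirectional, batch_norm=False)
    rnns.append(('0', rnn))
    # The remaining layers map hidden_size -> hidden_size, with batch normalization on by default.
    for x in range(nb_layers - 1):
        rnn = BatchRNN(input_size=rnn_hidden_size, hidden_size=rnn_hidden_size, rnn_type=rnn_type,
                       bidirectional=bidirectional)
        rnns.append(('%d' % (x + 1), rnn))
    self.rnns = nn.Sequential(OrderedDict(rnns))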
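The layer configuration produced by this loop can be listed without building any modules. The sketch below assumes that the 32 channels and 41 frequency bins of the conv output are flattened into a single feature axis of size 32 * 41, and uses a hidden size of 800 purely as an example value; `rnn_layer_sizes` is a hypothetical helper.

```python
def rnn_layer_sizes(rnn_input_size, rnn_hidden_size, nb_layers):
    """Return (name, input_size, hidden_size, batch_norm) for each stacked layer."""
    specs = [("0", rnn_input_size, rnn_hidden_size, False)]
    for x in range(nb_layers - 1):
        specs.append((str(x + 1), rnn_hidden_size, rnn_hidden_size, True))
    return specs


# Assumption: conv output channels (32) and frequency bins (41) form one feature axis.
for spec in rnn_layer_sizes(rnn_input_size=32 * 41, rnn_hidden_size=800, nb_layers=3):
    print(spec)
# ('0', 1312, 800, False)
# ('1', 800, 800, True)
# ('2', 800, 800, True)
```

Later layers can take `rnn_hidden_size` as their input size even in the bidirectional case, because BatchRNN sums the two directions back to a width of H.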

3. FC

    fully_connected = nn.Sequential(
        nn.BatchNorm1d(rnn_hidden_size),
        nn.Linear(rnn_hidden_size, num_classes, bias=False)
    )
    self.fc = nn.Sequential(
        SequenceWise(fully_connected),
    )

At the end of the forward pass, the fully connected block is applied and the output is transposed:

    x = self.fc(x)
    x = x.transpose(0, 1)
    # identity in training mode, softmax in eval mode
    x = self.inference_softmax(x)
    return x, output_lengths
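The behavior described in the comment, identity in training mode and softmax in eval mode, can be sketched for a single vector of class scores. This is an illustration only: the `training` flag here is an explicit argument, whereas a PyTorch module would read its own `self.training` attribute, and the function names are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def inference_softmax(scores, training):
    """Return raw scores while training, normalized probabilities otherwise."""
    return list(scores) if training else softmax(scores)


print(inference_softmax([1.0, 1.0], training=True))   # [1.0, 1.0]
print(inference_softmax([1.0, 1.0], training=False))  # [0.5, 0.5]
```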