[elk] Installing ELK (elasticsearch, logstash, kibana) on CentOS 6.4

elasticsearch

Install elasticsearch and the head plugin

Install elasticsearch 6.3.2 from the binary tarball

Download elasticsearch-6.3.2.tar.gz to /usr/local

    cd /usr/local
    wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.3.2.tar.gz
    tar -zxvf elasticsearch-6.3.2.tar.gz
    rm -rf /usr/local/elasticsearch-6.3.2.tar.gz
    # Create the log directory
    mkdir -p /usr/local/elasticsearch-6.3.2/log

    # Write the elasticsearch.yml configuration file
    cat > /usr/local/elasticsearch-6.3.2/config/elasticsearch.yml <<EOF
    # cluster.name must be identical on every node of the cluster
    cluster.name: elasticsearch
    # node.name must differ between nodes
    node.name: node-8
    # path to data
    path.data: /usr/local/elasticsearch-6.3.2/data
    # path to log
    path.logs: /usr/local/elasticsearch-6.3.2/log
    # Keeps memory out of swap; requires a plugin; this setting no longer exists after 5.0
    #bootstrap.mlockall: true
    node.master: true
    # Host to listen on
    network.host: 0.0.0.0
    # Cluster settings
    discovery.zen.ping.unicast.hosts: ["10.29.112.172", "10.29.113.121"]
    # Ports to listen on
    http.port: 9200
    transport.tcp.port: 9300
    bootstrap.memory_lock: false
    bootstrap.system_call_filter: false
    # Lets monitoring plugins fetch data over HTTP
    http.cors.enabled: true
    http.cors.allow-origin: "*"
    # Evict the cache so that ES server memory does not keep growing
    # When cached data plus the data the current query needs to cache reaches
    # the circuit breaker limit, a "Data too large" error is returned
    indices.fielddata.cache.size: 60%
    EOF

For discovery.zen.ping.unicast.hosts, for example ["10.6.11.176", "10.6.11.177"], IP addresses are recommended, so that a DNS resolution problem cannot cause an ES failure.

Install ES on the other machine in the same way.

node.master: true

If both nodes set node.master: true, the ES instance that starts first becomes the master.
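Since the second node reuses the same settings and only node.name has to differ, the per-node part of the file can be generated. The following is a minimal sketch rather than the author's method: `render_es_yml` is a hypothetical helper name, and it prints only the settings that matter for forming the cluster.

```shell
#!/bin/sh
# render_es_yml is a hypothetical helper (not part of elasticsearch).
# Usage: render_es_yml NODE_NAME HOST [HOST...]
# It prints a minimal elasticsearch.yml fragment to stdout.
render_es_yml() {
    node_name=$1   # must be unique per node
    shift
    # Remaining arguments are the unicast hosts; build: "a", "b"
    hosts=""
    for h in "$@"; do
        if [ -n "$hosts" ]; then
            hosts="$hosts, "
        fi
        hosts="$hosts\"$h\""
    done
    cat <<EOF
cluster.name: elasticsearch
node.name: $node_name
node.master: true
network.host: 0.0.0.0
discovery.zen.ping.unicast.hosts: [$hosts]
EOF
}
```

For example, `render_es_yml node-9 10.6.11.176 10.6.11.177` prints a fragment for a second node; the name node-9 is only an illustration.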

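Modify the /etc/security/limits.conf parameters. ES installed with yum runs as an ordinary user by default, so the limits must be changed; a yum install sets up the permissions by default and creates the user and group.

    # Modify /etc/sysctl.conf (append, so that existing settings are kept)
    cat >> /etc/sysctl.conf <<EOF
    vm.max_map_count=655360
    EOF
    sysctl -p

    # Modify /etc/security/limits.d/90-nproc.conf
    cat > /etc/security/limits.d/90-nproc.conf <<EOF
    * soft nproc 2048
    root soft nproc unlimited
    EOF

If needed, the value can be raised further for the elastic user; normally it is enough. Run ulimit -a and check the open files value; if it is too small, raise it. 65536 is recommended.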
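To confirm that the kernel and ulimit settings actually took effect, the current values can be compared with the required minimums. This is a sketch under stated assumptions: `check_min` is a hypothetical helper, and the thresholds in the usage comments are the values from this article (65536 open files, 2048 processes) plus 262144 for vm.max_map_count, which is the minimum elasticsearch's bootstrap check asks for.

```shell
#!/bin/bash
# check_min is a hypothetical helper: it compares a current value with a
# required minimum and reports the result.
# Usage: check_min NAME ACTUAL REQUIRED
check_min() {
    name=$1; actual=$2; required=$3
    # "unlimited" always satisfies the requirement
    if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$required" ]; then
        echo "OK $name=$actual (need >= $required)"
    else
        echo "LOW $name=$actual (need >= $required)"
        return 1
    fi
}

# Usage on the target host, run as the elastic user (left commented out so
# that sourcing this file has no side effects):
# check_min vm.max_map_count "$(sysctl -n vm.max_map_count)" 262144
# check_min open_files "$(ulimit -n)" 65536
# check_min max_user_processes "$(ulimit -u)" 2048
```

The function returns a non-zero status for a value that is too low, so it can also gate a start script.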

Create the elastic user and start ES as that user

    useradd elastic
    chown -R elastic:elastic /usr/local/elasticsearch-6.3.2
    su - elastic -c "sh /usr/local/elasticsearch-6.3.2/bin/elasticsearch -d"
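Because `-d` starts ES in the background, it helps to check that the node is actually answering before moving on. The sketch below polls the cluster health endpoint with curl; `es_url` and `wait_for_es` are hypothetical helper names, and port 9200 is taken from the http.port setting above.

```shell
#!/bin/bash
# es_url is a hypothetical helper that builds an Elasticsearch REST URL.
# Usage: es_url HOST PATH
es_url() {
    host=$1; path=$2
    printf 'http://%s:9200/%s\n' "$host" "$path"
}

# wait_for_es polls _cluster/health until it answers or the attempts run out.
# Usage: wait_for_es HOST [ATTEMPTS]
wait_for_es() {
    host=$1; attempts=${2:-30}
    i=0
    while [ "$i" -lt "$attempts" ]; do
        # -s: silent, -f: treat HTTP errors as failures
        if curl -sf "$(es_url "$host" '_cluster/health')" >/dev/null; then
            return 0
        fi
        i=$((i + 1))
        sleep 2
    done
    return 1
}

# Usage (left commented out so the file can be sourced without network access):
# wait_for_es 10.6.11.176 && curl -s "$(es_url 10.6.11.176 '_cat/nodes?v')"
```

In the `_cat/nodes?v` output the elected master is marked with `*` in the master column, which is a quick way to see which of the two nodes became master.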

Install head

Installing head requires grunt, so install node first.

    # Extract and install
    cd /tmp
    mkdir -p /usr/local/node
    tar xvf node-v8.10.0-linux-x64.tar
    mv node-v8.10.0-linux-x64 /usr/local/node/

Add the node path to the environment variables:

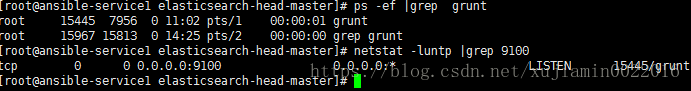

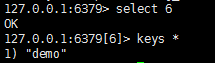

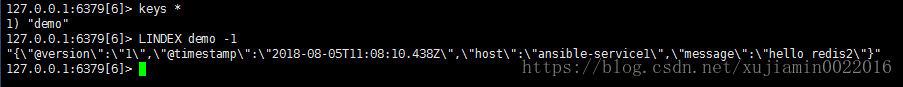

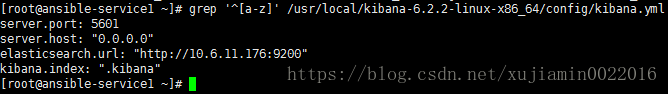

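    # The quoted 'EOF' keeps $NODE_PATH and $PATH unexpanded until the file is sourced
    cat >>/etc/profile.d/node.sh <<'EOF'
    export NODE_PATH=/usr/local/node/node-v8.10.0-linux-x64
    export PATH=$NODE_PATH/bin:$PATH
    EOF

    # Apply /etc/profile and add symlinks
    cd /etc/profile.d/
    . /etc/profile
    . /etc/bashrc
    ln -s /usr/local/node/node-v8.10.0-linux-x64/bin/node /usr/bin
    ln -s /usr/local/node/node-v8.10.0-linux-x64/bin/npm /usr/bin
    ln -s /usr/local/node/node-v8.10.0-linux-x64/bin/grunt /usr/bin

    # Check the node version
    node -v

    # Install grunt
    npm -g config set user root
    npm install -g grunt-cli

    # Install head
    cd /usr/local/elasticsearch-6.3.2/
    wget https://github.com/mobz/elasticsearch-head/archive/master.zip
    unzip master.zip
    cd elasticsearch-head-master/
    npm install

In _site/app.js, change localhost to the IP of the server where head is installed.

Then, inside the elasticsearch-head-master directory, run:

    grunt server >/dev/null &

Check whether head has started: if port 9100 is listening, it started successfully.

Visit 10.6.11.176:9100 and the interface shown below appears. The ★ star marks the master node.

If head reports that it cannot connect, check whether the firewall allows port 9200, or check that the configuration file contains the following settings:

    # Lets monitoring plugins fetch data over HTTP
    http.cors.enabled: true
    http.cors.allow-origin: "*"

logstash

The interval is 15s.

Avoid localhost; replace localhost with the IP.

To have each error log entry emitted as a single line, the conf file needs to be changed.

system

Add syslog to logstash.

First open up access in /etc/rsyslog.conf.

Test in debug mode first.

Once the test succeeds, add if conditions to logstash's all.conf so that the outputs are not hard to tell apart.

Add the if conditions.

nginx

Add the nginx access log to logstash.

To make nginx write access.log in JSON format, nginx.conf needs to be modified:

    log_format log_json '{ "@timestamp": "$time_local", '
                        '"remote_addr": "$remote_addr", '
                        '"referer": "$http_referer", '
                        '"request": "$request", '
                        '"status": $status, '
                        '"bytes": $body_bytes_sent, '
                        '"agent": "$http_user_agent", '
                        '"x_forwarded": "$http_x_forwarded_for", '
                        '"up_addr": "$upstream_addr",'
                        '"up_host": "$upstream_http_host",'
                        '"up_resp_time": "$upstream_response_time",'
                        '"request_time": "$request_time"'
                        ' }';

Test with debug first.

tcp logs

Debug with rubydebug.

You can also use:

    echo "test" > /dev/tcp/10.6.11.176/6666

mysql

Start logstash:

    /usr/local/logstash-6.3.2/bin/logstash -f /usr/local/logstash-6.3.2/config/all.conf

I could not find an http.log here, so I improvised an http log that follows the format on the official site and wrote it to access_log.

Connect to redis and run info: db6 has been created and contains one key.

    select 6
    keys *
    LINDEX demo -1   # view the last element of the list

Read from redis and write to ES (test-redis.conf).

First check how many entries it holds.

Add the following to redis-in.conf.

To read data from local files and write it to redis, replace the output roughly as follows (shipper.conf):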
    input {
      syslog {
        type => "system-syslog"
        host => "10.6.11.176"
        port => "514"
      }
      file {
        path => "/var/log/messages"
        type => "system"
        start_position => "beginning"
      }
      file {
        path => "/usr/local/elasticsearch-6.3.2/logs/elasticsearch.log"
        type => "es-log"
        start_position => "beginning"
        codec => multiline {
          pattern => "^\["
          negate => true
          what => "previous"
        }
      }
      file {
        path => "/var/log/nginx/access_json.log"
        codec => json
        start_position => "beginning"
        type => "nginx-log"
      }
      file {
        path => "/tmp/slow.log"
        type => "mysql-slow-log"
        start_position => "beginning"
        codec => multiline {
          pattern => "^# User@Host:"
          negate => true
          what => "previous"
        }
      }
    }
    output {
      if [type] == "system-syslog" {
        redis {
          host => "10.6.11.176"
          port => "6379"
          password => "Hangzhou@123"
          db => "6"
          data_type => "list"
          key => "system-syslog"
        }
      }
      if [type] == "system" {
        redis {
          host => "10.6.11.176"
          port => "6379"
          password => "Hangzhou@123"
          db => "6"
          data_type => "list"
          key => "system"
        }
      }
      if [type] == "nginx-log" {
        redis {
          host => "10.6.11.176"
          port => "6379"
          password => "Hangzhou@123"
          db => "6"
          data_type => "list"
          key => "nginx-log"
        }
      }
    }

Read from redis and write to ES:

    /usr/local/logstash-6.3.2/bin/logstash -f /usr/local/logstash-6.3.2/config/redis-out.conf

    # Read from redis, write to ES
    input {
      redis {
        type => "system"
        host => "10.6.11.176"
        port => "6379"
        password => "Hangzhou@123"
        db => "6"
        data_type => "list"
        key => "system"
      }
      redis {
        type => "nginx-log"
        host => "10.6.11.176"
        port => "6379"
        password => "Hangzhou@123"
        db => "6"
        data_type => "list"
        key => "nginx-log"
      }
      redis {
        type => "system-syslog"
        host => "10.6.11.176"
        port => "6379"
        password => "Hangzhou@123"
        db => "6"
        data_type => "list"
        key => "system-syslog"
      }
    }
    output {
      if [type] == "system-syslog" {
        elasticsearch {
          hosts => ["10.6.11.176:9200"]
          index => "system-syslog-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "system" {
        elasticsearch {
          hosts => ["10.6.11.176:9200"]
          index => "system-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "nginx-log" {
        elasticsearch {
          hosts => ["10.6.11.176:9200"]
          index => "nginx-log-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "mysql-slow-log" {
        elasticsearch {
          hosts => ["10.6.11.176:9200"]
          index => "mysql-slow-log-%{+YYYY.MM.dd}"
        }
      }
    }

Because the nginx-log key holds only a few entries, they are read in at once and the key then disappears.

Logs do arrive in kibana.

The remaining two keys hold too many entries and are slow to drain, so for this test I simply deleted the key:

    del system

    cat 1.log |tee -a > 1.log

Read from the local redis and write to a remote redis:

    /usr/local/logstash-6.3.2/bin/logstash -f redis-in.conf

    # Write from the local redis to the remote redis
    input {
      redis {
        host => "10.6.11.176"
        port => "6379"
        password => "Hangzhou@123"
        db => "6"
        data_type => "list"
        key => "demo"
      }
    }
    output {
      redis {
        host => "10.6.11.177"
        port => "6379"
        password => "Hangzhou@123"
        db => "6"
        data_type => "list"
        key => "demo"
      }
    }

Before running.

After running.

Running it reports an error: the ES version is too low, far below the kibana version.

A warning that the kibana and ES versions do not match does not affect use.

First download the kibana package, then extract it:

    tar -xzf kibana-6.2.2-linux-x86_64.tar.gz -C /usr/local/

Add the following configuration to kibana.yml.

Put nginx with auth authentication in front of kibana.

Typically each ES node runs one kibana connected to the local ES, with nginx load balancing across them.

To show nginx 404 entries in kibana, enter status:404.

The display here is not very good, possibly because of this log.

Access status statistics.

Top 5 client IPs.

Create a dashboard.

This concludes the introduction to installing and configuring ELK.

logstash

grok

Using redis to decouple the logstash message queue

Install kibana

![Installing ELK on CentOS 6.4, image 1](http://img.e-com-net.com/image/info8/50763fb51c2d4bca8d80f0128ddfbcee.png)

![Installing ELK on CentOS 6.4, image 2](http://img.e-com-net.com/image/info8/8e2430b2ed22407784cc5c00fd507cb9.png)

![Installing ELK on CentOS 6.4, image 3](http://img.e-com-net.com/image/info8/cf73c1e9f1d34239b78aa60d3c96ecc5.png)

![Installing ELK on CentOS 6.4, image 4](http://img.e-com-net.com/image/info8/9a3c0a011ae44636a4a585d739ab78b9.jpg)

![Installing ELK on CentOS 6.4, image 5](http://img.e-com-net.com/image/info8/aa81a7be8ce04882ba8bb9950d3e2181.png)

![Installing ELK on CentOS 6.4, image 6](http://img.e-com-net.com/image/info8/91b1ef5fe5824f0b8f5909f1ad5a3970.jpg)

![Installing ELK on CentOS 6.4, image 7](http://img.e-com-net.com/image/info8/981c71fbcd0946bc896f676f63d9cb25.png)

![Installing ELK on CentOS 6.4, image 8](http://img.e-com-net.com/image/info8/93d817ea3b304900942aa448dae7759b.jpg)

![Installing ELK on CentOS 6.4, image 9](http://img.e-com-net.com/image/info8/2ffacb2328504e0990cd0c38c8299ea5.png)

![Installing ELK on CentOS 6.4, image 10](http://img.e-com-net.com/image/info8/d969d22fe28a488db67d772c0fd97a6d.jpg)

![Installing ELK on CentOS 6.4, image 11](http://img.e-com-net.com/image/info8/6c6de8b0f7424c3fa8c19cba82cdd90c.png)

![Installing ELK on CentOS 6.4, image 12](http://img.e-com-net.com/image/info8/a928e0222e924a0c87643c56ecf6752f.png)

![Installing ELK on CentOS 6.4, image 13](http://img.e-com-net.com/image/info8/20652da7bd93404ab35ef37cabcb7d2c.jpg)

![Installing ELK on CentOS 6.4, image 14](http://img.e-com-net.com/image/info8/a65e4f7eed8848ebacdd8bdab11f679a.png)

![Installing ELK on CentOS 6.4, image 15](http://img.e-com-net.com/image/info8/d0a5967dedcc494d9da31636480ae781.jpg)

![Installing ELK on CentOS 6.4, image 16](http://img.e-com-net.com/image/info8/70fc5ede1c814c9aa116eab7f22e482f.png)

![Installing ELK on CentOS 6.4, image 17](http://img.e-com-net.com/image/info8/eb17422fc9324200ba2945d13cefc72d.png)

![Installing ELK on CentOS 6.4, image 18](http://img.e-com-net.com/image/info8/c1ddce6b832e4dc28c2cf5456d7e8e16.png)

![Installing ELK on CentOS 6.4, image 19](http://img.e-com-net.com/image/info8/aba0316a637541e8b331a497036be319.jpg)

![Installing ELK on CentOS 6.4, image 20](http://img.e-com-net.com/image/info8/46e653d72af848f699b99819fd00ec16.png)

![Installing ELK on CentOS 6.4, image 21](http://img.e-com-net.com/image/info8/ab93319790464896a2362b9ba8814a9b.jpg)

![Installing ELK on CentOS 6.4, image 22](http://img.e-com-net.com/image/info8/3d25a62bc9154904a0b81a822f3ac02d.png)

![Installing ELK on CentOS 6.4, image 23](http://img.e-com-net.com/image/info8/d6c14c0cf31b4aecbfb0b99413566b67.png)

![Installing ELK on CentOS 6.4, image 24](http://img.e-com-net.com/image/info8/a7728b62e64e4c749ec4a04575f8b4cc.png)

![Installing ELK on CentOS 6.4, image 25](http://img.e-com-net.com/image/info8/870bac095d3e40f68ee91ca70bc7f73d.png)

![Installing ELK on CentOS 6.4, image 26](http://img.e-com-net.com/image/info8/ea4e259245bd4c1298a7bb9b26761704.png)

![Installing ELK on CentOS 6.4, image 27](http://img.e-com-net.com/image/info8/aa9ffe3a5102465895d50b7267a66a8b.png)

![Installing ELK on CentOS 6.4, image 28](http://img.e-com-net.com/image/info8/b233b8376595447e9c447451ccbe189c.png)

![Installing ELK on CentOS 6.4, image 29](http://img.e-com-net.com/image/info8/0933bfb6fedb4efb8e135ebb10dac375.jpg)

![Installing ELK on CentOS 6.4, image 30](http://img.e-com-net.com/image/info8/b90f01c95264491997628ee1a638efef.jpg)

![Installing ELK on CentOS 6.4, image 31](http://img.e-com-net.com/image/info8/3281306d79cc415da8377a745fb3537d.png)

![Installing ELK on CentOS 6.4, image 32](http://img.e-com-net.com/image/info8/d90e9b5fbf0d49e79616f9a799370670.png)

![Installing ELK on CentOS 6.4, image 33](http://img.e-com-net.com/image/info8/faec24613330441b9a709bdc6c9fe84a.jpg)

![Installing ELK on CentOS 6.4, image 34](http://img.e-com-net.com/image/info8/982f136865b2440fbe2a1afb790f1017.png)

![Installing ELK on CentOS 6.4, image 35](http://img.e-com-net.com/image/info8/d45696e140c7405da705ff1643e5dad1.png)

![Installing ELK on CentOS 6.4, image 36](http://img.e-com-net.com/image/info8/816c71e9379343279c524aa45c7a96a4.jpg)

![Installing ELK on CentOS 6.4, image 37](http://img.e-com-net.com/image/info8/26f1a13538934cf7aa2128f2ab0a3a03.jpg)

![Installing ELK on CentOS 6.4, image 38](http://img.e-com-net.com/image/info8/399cd12143db40df842b7d88fc481af4.jpg)

![Installing ELK on CentOS 6.4, image 39](http://img.e-com-net.com/image/info8/dcb0fccd99cf4dc4812fdab95d5c80f0.jpg)

![Installing ELK on CentOS 6.4, image 40](http://img.e-com-net.com/image/info8/62659184ef054c69a46b90f8201de75c.jpg)

![Installing ELK on CentOS 6.4, image 41](http://img.e-com-net.com/image/info8/23767653682648928699e7bc6cba544e.jpg)

![Installing ELK on CentOS 6.4, image 42](http://img.e-com-net.com/image/info8/4c087deef04b481889ac60c5ad63dfca.jpg)

![Installing ELK on CentOS 6.4, image 43](http://img.e-com-net.com/image/info8/c3c43bc7a69c4ac4996e582c15664d5c.jpg)

![Installing ELK on CentOS 6.4, image 44](http://img.e-com-net.com/image/info8/8b2ab00b4abb4df28b2866e18618cbea.jpg)

![Installing ELK on CentOS 6.4, image 45](http://img.e-com-net.com/image/info8/36ca1456cd484fc38221085d797e32e6.jpg)

![Installing ELK on CentOS 6.4, image 46](http://img.e-com-net.com/image/info8/dcc3f2dd910b473cb46d01063e0ac179.jpg)

![Installing ELK on CentOS 6.4, image 47](http://img.e-com-net.com/image/info8/ccce40178da04c6dae6f70600b70a33b.jpg)

![Installing ELK on CentOS 6.4, image 48](http://img.e-com-net.com/image/info8/72aa91493e134a2993102db3ccdabb76.jpg)

![Installing ELK on CentOS 6.4, image 49](http://img.e-com-net.com/image/info8/d6859cc69d02425bade57200f091dfe3.jpg)