15. Android Multimedia Framework Explained - Render Flow Analysis

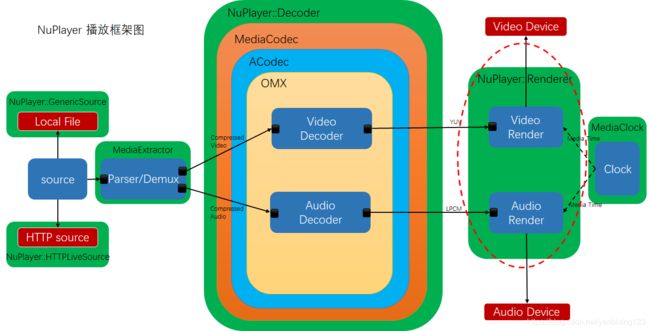

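The figure above shows where the Renderer sits in the NuPlayer framework.

The Renderer uses the timestamp of each incoming frame to decide whether that frame should be rendered, and it keeps audio and video in sync. The code that actually drives hardware rendering, however, lives in MediaCodec and ACodec.

The Renderer sits right after NuPlayerDecoder. Their interaction begins in NuPlayer::Decoder::handleAnOutputBuffer(), which hands each decoded buffer to the Renderer through this call:

    mRenderer->queueBuffer(mIsAudio, buffer, reply);

Note that reply is an AMessage that NuPlayerDecoder passes to the Renderer so that the Renderer can later send information back to NuPlayerDecoder. NuPlayer::Decoder::handleAnOutputBuffer() only creates this reply message; it does not post it:

    sp<AMessage> reply = new AMessage(kWhatRenderBuffer, this);
    reply->setSize("buffer-ix", index);
    reply->setInt32("generation", mBufferGeneration);

The analysis below starts from queueBuffer():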

    void NuPlayer::Renderer::queueBuffer(
            bool audio,
            const sp<ABuffer> &buffer,
            const sp<AMessage> &notifyConsumed) {
        sp<AMessage> msg = new AMessage(kWhatQueueBuffer, this);
        msg->setInt32("queueGeneration", getQueueGeneration(audio));
        msg->setInt32("audio", static_cast<int32_t>(audio));
        msg->setBuffer("buffer", buffer);
        msg->setMessage("notifyConsumed", notifyConsumed);
        msg->post();
    }

This posts a kWhatQueueBuffer message. Note the renaming along the way: the reply argument supplied by the decoder is called notifyConsumed inside NuPlayerRenderer.
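The reply handoff can be pictured with a small stand-alone sketch. It does not use the real AMessage or ALooper classes: `Reply`, `MiniDecoder` and `MiniRenderer` are hypothetical stand-ins, and a `std::function` plays the role of posting the message back to the decoder. The point it illustrates is only that the decoder creates the reply, while the renderer decides when it is posted and what it carries.

```cpp
#include <cstddef>
#include <deque>
#include <functional>
#include <utility>
#include <vector>

// Hypothetical stand-in for the AMessage reply: it remembers which output
// buffer it refers to and how to deliver itself back to the decoder.
struct Reply {
    std::size_t bufferIndex = 0;
    bool render = false;
    std::function<void(const Reply&)> deliver;  // plays the role of post()
    void post() const { if (deliver) deliver(*this); }
};

// Hypothetical stand-in for NuPlayer::Decoder.
struct MiniDecoder {
    std::vector<std::size_t> rendered;  // buffers released with render set
    std::vector<std::size_t> dropped;   // buffers released without rendering

    // Builds the reply but does not post it; the renderer decides when.
    Reply makeReply(std::size_t index) {
        Reply r;
        r.bufferIndex = index;
        r.deliver = [this](const Reply& done) {
            (done.render ? rendered : dropped).push_back(done.bufferIndex);
        };
        return r;
    }
};

// Hypothetical stand-in for NuPlayer::Renderer.
struct MiniRenderer {
    std::deque<Reply> queue;  // each entry keeps its reply, like mNotifyConsumed

    void queueBuffer(Reply notifyConsumed) {
        queue.push_back(std::move(notifyConsumed));
    }

    // Marks the oldest entry and only now posts the reply to the decoder.
    void drainOne(bool tooLate) {
        if (queue.empty()) return;
        Reply r = std::move(queue.front());
        queue.pop_front();
        r.render = !tooLate;
        r.post();
    }
};
```

In this sketch the decoder must outlive every reply it created, because the callback captures `this`; the real framework avoids that concern by routing messages through handler IDs and loopers.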

Execution continues in NuPlayer::Renderer::onQueueBuffer():

    void NuPlayer::Renderer::onQueueBuffer(const sp<AMessage> &msg) {
        int32_t audio;
        CHECK(msg->findInt32("audio", &audio));

        if (dropBufferIfStale(audio, msg)) {
            return;
        }

        if (audio) {
            mHasAudio = true;
            mAudioEOS = false;
        } else {
            mHasVideo = true;
        }

        if (mHasVideo) {
            if (mVideoScheduler == NULL) {
                mVideoScheduler = new VideoFrameScheduler();
                // Initialize the VideoFrameScheduler, which is used for vsync.
                mVideoScheduler->init();
            }
        }

        sp<ABuffer> buffer;
        CHECK(msg->findBuffer("buffer", &buffer));

        sp<AMessage> notifyConsumed;
        // The reply created in the Decoder; the Renderer uses it to talk back to NuPlayerDecoder.
        CHECK(msg->findMessage("notifyConsumed", &notifyConsumed));

        QueueEntry entry;  // element type of the audio/video queues kept by the Renderer
        entry.mBuffer = buffer;
        entry.mNotifyConsumed = notifyConsumed;
        entry.mOffset = 0;
        entry.mFinalResult = OK;
        entry.mBufferOrdinal = ++mTotalBuffersQueued;

        if (audio) {
            Mutex::Autolock autoLock(mLock);
            mAudioQueue.push_back(entry);
            postDrainAudioQueue_l();  // audio path
        } else {
            mVideoQueue.push_back(entry);
            postDrainVideoQueue();  // video path; called again after every drain, analyzed below
        }

        Mutex::Autolock autoLock(mLock);
        if (!mSyncQueues || mAudioQueue.empty() || mVideoQueue.empty()) {
            return;
        }

        sp<ABuffer> firstAudioBuffer = (*mAudioQueue.begin()).mBuffer;
        sp<ABuffer> firstVideoBuffer = (*mVideoQueue.begin()).mBuffer;

        if (firstAudioBuffer == NULL || firstVideoBuffer == NULL) {
            // EOS signalled on either queue.
            syncQueuesDone_l();
            return;
        }

        int64_t firstAudioTimeUs;
        int64_t firstVideoTimeUs;
        CHECK(firstAudioBuffer->meta()
                ->findInt64("timeUs", &firstAudioTimeUs));
        CHECK(firstVideoBuffer->meta()
                ->findInt64("timeUs", &firstVideoTimeUs));

        int64_t diff = firstVideoTimeUs - firstAudioTimeUs;

        ALOGV("queueDiff = %.2f secs", diff / 1E6);

        if (diff > 100000ll) {
            // Audio data starts More than 0.1 secs before video.
            // Drop some audio.
            // That is, when audio leads video by more than 0.1 s, the leading audio buffer is dropped.
            (*mAudioQueue.begin()).mNotifyConsumed->post();
            mAudioQueue.erase(mAudioQueue.begin());
            return;
        }

        syncQueuesDone_l();
    }

NuPlayerRenderer maintains two lists: an audio queue and a video queue. Each incoming buffer is appended to the queue that matches its type. Taking video as the example, postDrainVideoQueue() is then called to schedule the drain.

Note also that the reply passed in from NuPlayerDecoder is stored in the entry as entry.mNotifyConsumed, so it travels through the queue together with the buffer.
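The start-up alignment at the end of onQueueBuffer() can be sketched in isolation. The helper below is hypothetical and works on bare timestamps rather than QueueEntry objects. It also loops until the queues are aligned, whereas the listing drops at most one audio buffer per call and relies on later calls to continue; the net effect is intended to be the same.

```cpp
#include <cstddef>
#include <cstdint>
#include <deque>

// Threshold taken from the listing: audio that starts more than 0.1 s
// before the first video frame is dropped.
constexpr int64_t kMaxAudioLeadUs = 100000;

// Hypothetical helper: drops leading audio timestamps until the first audio
// buffer is within kMaxAudioLeadUs of the first video frame.
// Returns the number of audio buffers dropped.
std::size_t dropLeadingAudio(std::deque<int64_t>& audioTimesUs,
                             int64_t firstVideoTimeUs) {
    std::size_t dropped = 0;
    while (!audioTimesUs.empty() &&
           firstVideoTimeUs - audioTimesUs.front() > kMaxAudioLeadUs) {
        audioTimesUs.pop_front();  // the real code also posts mNotifyConsumed here
        ++dropped;
    }
    return dropped;
}
```

For example, with audio buffers at 0, 50, 100, 150 and 200 ms and a first video frame at 200 ms, the first two audio buffers are dropped and the buffer at 100 ms becomes the head of the queue. With the queues aligned, the walkthrough moves on to postDrainVideoQueue(), which schedules the drain of the video queue.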

    void NuPlayer::Renderer::postDrainVideoQueue() {
        if (mDrainVideoQueuePending
                || getSyncQueues()
                || (mPaused && mVideoSampleReceived)) {
            return;
        }

        if (mVideoQueue.empty()) {
            return;
        }

        QueueEntry &entry = *mVideoQueue.begin();  // reference the first entry in the video queue

        // Handling this message is what actually processes the video buffer and its display.
        sp<AMessage> msg = new AMessage(kWhatDrainVideoQueue, this);
        msg->setInt32("drainGeneration", getDrainGeneration(false /* audio */));

        if (entry.mBuffer == NULL) {
            // EOS doesn't carry a timestamp.
            msg->post();
            mDrainVideoQueuePending = true;
            return;
        }

        int64_t delayUs;
        int64_t nowUs = ALooper::GetNowUs();
        int64_t realTimeUs;
        if (mFlags & FLAG_REAL_TIME) {
            int64_t mediaTimeUs;
            CHECK(entry.mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));
            realTimeUs = mediaTimeUs;
        } else {
            int64_t mediaTimeUs;
            CHECK(entry.mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

            {
                Mutex::Autolock autoLock(mLock);
                if (mAnchorTimeMediaUs < 0) {  // no sync anchor set yet: display immediately
                    mMediaClock->updateAnchor(mediaTimeUs, nowUs, mediaTimeUs);
                    mAnchorTimeMediaUs = mediaTimeUs;
                    realTimeUs = nowUs;
                } else {
                    realTimeUs = getRealTimeUs(mediaTimeUs, nowUs);
                }
            }
            if (!mHasAudio || mAudioEOS) {
                // smooth out videos >= 10fps
                mMediaClock->updateMaxTimeMedia(mediaTimeUs + 100000);
            }

            // Heuristics to handle situation when media time changed without a
            // discontinuity. If we have not drained an audio buffer that was
            // received after this buffer, repost in 10 msec. Otherwise repost
            // in 500 msec.
            delayUs = realTimeUs - nowUs;
            if (delayUs > 500000) {
                int64_t postDelayUs = 500000;
                if (mHasAudio && (mLastAudioBufferDrained - entry.mBufferOrdinal) <= 0) {
                    postDelayUs = 10000;
                }
                msg->setWhat(kWhatPostDrainVideoQueue);
                msg->post(postDelayUs);
                mVideoScheduler->restart();
                ALOGI("possible video time jump of %dms, retrying in %dms",
                        (int)(delayUs / 1000), (int)(postDelayUs / 1000));
                mDrainVideoQueuePending = true;
                return;
            }
        }

        realTimeUs = mVideoScheduler->schedule(realTimeUs * 1000) / 1000;
        int64_t twoVsyncsUs = 2 * (mVideoScheduler->getVsyncPeriod() / 1000);

        delayUs = realTimeUs - nowUs;

        ALOGW_IF(delayUs > 500000, "unusually high delayUs: %" PRId64, delayUs);
        // post 2 display refreshes before rendering is due
        msg->post(delayUs > twoVsyncsUs ? delayUs - twoVsyncsUs : 0);

        mDrainVideoQueuePending = true;
    }

The function checks several delay conditions, but its main job is to post the kWhatDrainVideoQueue message.
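The two timing decisions in postDrainVideoQueue() reduce to a little arithmetic, sketched below. The function names are hypothetical, and the sketch deliberately ignores the adjustment that mVideoScheduler->schedule() applies to realTimeUs, so it only approximates the real behaviour.

```cpp
#include <cstdint>

// Hypothetical helper mirroring the last lines of postDrainVideoQueue():
// the drain message is posted two display refreshes before the frame is due,
// or immediately if less time than that remains.
int64_t computePostDelayUs(int64_t realTimeUs, int64_t nowUs,
                           int64_t vsyncPeriodNs) {
    const int64_t twoVsyncsUs = 2 * (vsyncPeriodNs / 1000);
    const int64_t delayUs = realTimeUs - nowUs;
    return delayUs > twoVsyncsUs ? delayUs - twoVsyncsUs : 0;
}

// Hypothetical helper mirroring the time-jump heuristic: when the frame is
// due more than 0.5 s from now, the check is retried later instead.
// Returns the retry delay in microseconds, or -1 when no retry is needed.
int64_t timeJumpRetryDelayUs(int64_t delayUs, bool hasAudio,
                             int32_t lastAudioBufferDrained,
                             int32_t bufferOrdinal) {
    if (delayUs <= 500000) return -1;
    // Audio queued after this video buffer has not been drained yet:
    // check again soon, because the clock anchor may be about to move.
    if (hasAudio && (lastAudioBufferDrained - bufferOrdinal) <= 0) return 10000;
    return 500000;
}
```

With a 60 Hz display (a vsync period of 16,666,667 ns) and a frame due in 100 ms, the message would be posted after 100000 - 33332 = 66668 us, which leaves roughly two refresh intervals for the buffer to reach the display pipeline.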

That message is handled in NuPlayer::Renderer::onMessageReceived():

    case kWhatDrainVideoQueue:
    {
        int32_t generation;
        CHECK(msg->findInt32("drainGeneration", &generation));
        if (generation != getDrainGeneration(false /* audio */)) {
            break;
        }

        mDrainVideoQueuePending = false;

        onDrainVideoQueue();

        postDrainVideoQueue();
        break;
    }

The handler first calls onDrainVideoQueue() to process the current frame and then calls postDrainVideoQueue() again, which is what keeps the drain loop running.
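The drainGeneration comparison at the top of the handler is a common way to invalidate messages that are already in flight. The sketch below illustrates the idea with hypothetical types. It assumes that the generation counter is incremented by events such as a flush; that step is not part of the listings in this article.

```cpp
#include <cstdint>
#include <queue>

// Hypothetical message carrying the generation it was posted under.
struct DrainMessage {
    int32_t drainGeneration;
};

// Hypothetical miniature of the drain loop's stale-message filtering.
class MiniDrainLoop {
public:
    // Stamps each message with the generation that is current when it is posted.
    void postDrain() { mPending.push(DrainMessage{mGeneration}); }

    // Assumed behaviour: a flush bumps the generation, so messages that
    // were posted earlier no longer match and are ignored on delivery.
    void flush() { ++mGeneration; }

    // Delivers every pending message and returns how many were acted upon.
    int deliverAll() {
        int handled = 0;
        while (!mPending.empty()) {
            const DrainMessage msg = mPending.front();
            mPending.pop();
            if (msg.drainGeneration != mGeneration) continue;  // stale: skip
            ++handled;
        }
        return handled;
    }

private:
    int32_t mGeneration = 0;
    std::queue<DrainMessage> mPending;
};
```

The appeal of this design is that nothing has to search the looper's queue and cancel messages: outdated ones simply fail the comparison when they arrive.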

So the actual handling of each frame happens in onDrainVideoQueue():

    void NuPlayer::Renderer::onDrainVideoQueue() {
        if (mVideoQueue.empty()) {
            return;
        }

        QueueEntry *entry = &*mVideoQueue.begin();  // first entry in the video queue

        if (entry->mBuffer == NULL) {
            // EOS
            notifyEOS(false /* audio */, entry->mFinalResult);
            mVideoQueue.erase(mVideoQueue.begin());
            entry = NULL;
            setVideoLateByUs(0);
            return;
        }
        // Everything above handles EOS.

        int64_t nowUs = -1;
        int64_t realTimeUs;
        if (mFlags & FLAG_REAL_TIME) {
            CHECK(entry->mBuffer->meta()->findInt64("timeUs", &realTimeUs));
        } else {
            int64_t mediaTimeUs;
            // The timestamp carried by the track.
            CHECK(entry->mBuffer->meta()->findInt64("timeUs", &mediaTimeUs));

            nowUs = ALooper::GetNowUs();
            // Map the media time to the real (system) time at which the frame is due.
            realTimeUs = getRealTimeUs(mediaTimeUs, nowUs);
        }

        bool tooLate = false;

        if (!mPaused) {
            if (nowUs == -1) {
                nowUs = ALooper::GetNowUs();
            }
            setVideoLateByUs(nowUs - realTimeUs);
            tooLate = (mVideoLateByUs > 40000);  // more than 40000 us (40 ms) late: do not render

            if (tooLate) {
                ALOGV("video late by %lld us (%.2f secs)",
                        (long long)mVideoLateByUs, mVideoLateByUs / 1E6);
            } else {
                int64_t mediaUs = 0;
                mMediaClock->getMediaTime(realTimeUs, &mediaUs);
                ALOGV("rendering video at media time %.2f secs",
                        (mFlags & FLAG_REAL_TIME ? realTimeUs :
                        mediaUs) / 1E6);
            }
        } else {
            setVideoLateByUs(0);
            if (!mVideoSampleReceived && !mHasAudio) {
                // This will ensure that the first frame after a flush won't be used as anchor
                // when renderer is in paused state, because resume can happen any time after seek.
                Mutex::Autolock autoLock(mLock);
                clearAnchorTime_l();
            }
        }

        // entry->mNotifyConsumed is the reply passed in from NuPlayerDecoder;
        // the values computed above are attached to it here.
        entry->mNotifyConsumed->setInt64("timestampNs", realTimeUs * 1000ll);
        // render is set to 0 when the frame is more than 40000 us late.
        entry->mNotifyConsumed->setInt32("render", !tooLate);
        // Post the reply, created in the decoder as new AMessage(kWhatRenderBuffer, this),
        // back to NuPlayerDecoder.
        entry->mNotifyConsumed->post();
        mVideoQueue.erase(mVideoQueue.begin());
        entry = NULL;

        mVideoSampleReceived = true;

        if (!mPaused) {
            if (!mVideoRenderingStarted) {
                mVideoRenderingStarted = true;
                notifyVideoRenderingStart();
            }
            Mutex::Autolock autoLock(mLock);
            notifyIfMediaRenderingStarted_l();
        }
        // The block above notifies NuPlayer that rendering has started.
    }

That completes the Renderer's part of the flow. It turns out that the Renderer only synchronizes audio and video and decides whether a video frame should be dropped. It performs no actual rendering; it merely marks each frame as to be rendered or not:

    entry->mNotifyConsumed->setInt32("render", !tooLate);  // 0 when the frame is more than 40000 us late

The real rendering is carried out by the hardware, and it is driven through ACodec.
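The decision the Renderer attaches to the reply can be condensed into a few lines. The struct and function names below are hypothetical; the 40000 us threshold, the paused case and the conversion to nanoseconds follow the onDrainVideoQueue() listing.

```cpp
#include <cstdint>

// Threshold from the listing: frames more than 40 ms late are not rendered.
constexpr int64_t kTooLateUs = 40000;

// Hypothetical summary of what the Renderer writes into the reply message.
struct RenderDecision {
    bool render;          // value of the "render" field
    int64_t timestampNs;  // value of the "timestampNs" field
    int64_t lateByUs;     // how late the frame is (0 while paused)
};

// Hypothetical helper: realTimeUs is the system time at which the frame
// is due, nowUs is the current system time.
RenderDecision decideRender(int64_t nowUs, int64_t realTimeUs, bool paused) {
    // While paused, lateness is reported as 0 and the frame is never "too late".
    const int64_t lateByUs = paused ? 0 : nowUs - realTimeUs;
    const bool tooLate = lateByUs > kTooLateUs;
    return RenderDecision{!tooLate, realTimeUs * 1000, lateByUs};
}
```

A frame that is exactly 40000 us late is still rendered, because the comparison is strictly greater-than. Note also that the timestamp is attached even when render is false; it is simply ignored on the release-only path.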

To continue following the render path, recall the reply created in NuPlayer::Decoder::handleAnOutputBuffer(). Posting it delivers a kWhatRenderBuffer message to the decoder, which handles it in NuPlayer::Decoder::onMessageReceived():

    case kWhatRenderBuffer:
    {
        if (!isStaleReply(msg)) {
            onRenderBuffer(msg);
        }
        break;
    }

    void NuPlayer::Decoder::onRenderBuffer(const sp<AMessage> &msg) {
        status_t err;
        int32_t render;
        size_t bufferIx;
        int32_t eos;
        CHECK(msg->findSize("buffer-ix", &bufferIx));  // look up buffer-ix

        if (!mIsAudio) {
            int64_t timeUs;
            sp<ABuffer> buffer = mOutputBuffers[bufferIx];
            buffer->meta()->findInt64("timeUs", &timeUs);

            if (mCCDecoder != NULL && mCCDecoder->isSelected()) {
                mCCDecoder->display(timeUs);  // show closed captions
            }
        }

        // The flag set by NuPlayerRenderer decides whether the buffer is rendered.
        if (msg->findInt32("render", &render) && render) {
            int64_t timestampNs;
            CHECK(msg->findInt64("timestampNs", &timestampNs));
            // Hand the buffer to MediaCodec for rendering, then release it.
            err = mCodec->renderOutputBufferAndRelease(bufferIx, timestampNs);
        } else {
            mNumOutputFramesDropped += !mIsAudio;
            // Do not render; just release the buffer.
            err = mCodec->releaseOutputBuffer(bufferIx);
        }
        if (err != OK) {
            ALOGE("failed to release output buffer for %s (err=%d)",
                    mComponentName.c_str(), err);
            handleError(err);
        }
        if (msg->findInt32("eos", &eos) && eos
                && isDiscontinuityPending()) {
            finishHandleDiscontinuity(true /* flushOnTimeChange */);
        }
    }

Both functions are implemented in MediaCodec.cpp:

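    status_t MediaCodec::renderOutputBufferAndRelease(size_t index) {
        sp<AMessage> msg = new AMessage(kWhatReleaseOutputBuffer, this);
        msg->setSize("index", index);
        msg->setInt32("render", true);

        sp<AMessage> response;
        return PostAndAwaitResponse(msg, &response);
    }

    status_t MediaCodec::releaseOutputBuffer(size_t index) {
        sp<AMessage> msg = new AMessage(kWhatReleaseOutputBuffer, this);
        msg->setSize("index", index);

        sp<AMessage> response;
        return PostAndAwaitResponse(msg, &response);
    }

The only difference between them is whether the render flag is set on the message for the given buffer index. Note that onRenderBuffer() calls renderOutputBufferAndRelease() with a second timestampNs argument, whereas the listing above shows the one-argument form. The two-argument call presumably also stores timestampNs in the message, since onReleaseOutputBuffer() looks that key up.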
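How a single message type can serve both calls is easiest to see with a toy message. In the sketch below a `std::map` stands in for AMessage, and all function and struct names are hypothetical. The resolution rules follow the onReleaseOutputBuffer() listing further down: a missing render key counts as 0, an empty buffer is never rendered, and a missing timestampNs falls back to the buffer's media time.

```cpp
#include <cstddef>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical stand-in for AMessage: named integer fields only.
using Message = std::map<std::string, int64_t>;

// Counterpart of renderOutputBufferAndRelease(index): sets the render flag.
Message makeRenderAndReleaseMsg(std::size_t index) {
    Message msg;
    msg["index"] = static_cast<int64_t>(index);
    msg["render"] = 1;
    return msg;
}

// Counterpart of releaseOutputBuffer(index): no "render" key at all.
Message makeReleaseMsg(std::size_t index) {
    Message msg;
    msg["index"] = static_cast<int64_t>(index);
    return msg;
}

struct ReleaseOutcome {
    bool render;
    int64_t renderTimeNs;
};

// Interprets the message the way the receiving side does.
ReleaseOutcome resolveRelease(const Message& msg, int64_t mediaTimeUs,
                              std::size_t dataSize) {
    const auto renderIt = msg.find("render");
    const bool render =
        renderIt != msg.end() && renderIt->second != 0 && dataSize != 0;
    if (!render) return ReleaseOutcome{false, 0};
    // No explicit render time requested: use the buffer's media timestamp.
    const auto tsIt = msg.find("timestampNs");
    const int64_t renderTimeNs =
        tsIt != msg.end() ? tsIt->second : mediaTimeUs * 1000;
    return ReleaseOutcome{true, renderTimeNs};
}
```

Both calls post kWhatReleaseOutputBuffer, so the next stop is the handler for that message in MediaCodec::onMessageReceived().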

    void MediaCodec::onMessageReceived(const sp<AMessage> &msg) {
        case kWhatReleaseOutputBuffer:
        {
            sp<AReplyToken> replyID;
            CHECK(msg->senderAwaitsResponse(&replyID));

            if (!isExecuting()) {
                PostReplyWithError(replyID, INVALID_OPERATION);
                break;
            } else if (mFlags & kFlagStickyError) {
                PostReplyWithError(replyID, getStickyError());
                break;
            }

            status_t err = onReleaseOutputBuffer(msg);

            PostReplyWithError(replyID, err);
            break;
        }

At this point the focus shifts to MediaCodec::onReleaseOutputBuffer():

    status_t MediaCodec::onReleaseOutputBuffer(const sp<AMessage> &msg) {
        size_t index;
        CHECK(msg->findSize("index", &index));

        int32_t render;
        if (!msg->findInt32("render", &render)) {
            render = 0;
        }

        if (!isExecuting()) {
            return -EINVAL;
        }

        if (index >= mPortBuffers[kPortIndexOutput].size()) {
            return -ERANGE;
        }

        BufferInfo *info = &mPortBuffers[kPortIndexOutput].editItemAt(index);

        if (info->mNotify == NULL || !info->mOwnedByClient) {
            return -EACCES;
        }

        // synchronization boundary for getBufferAndFormat
        {
            Mutex::Autolock al(mBufferLock);
            info->mOwnedByClient = false;
        }

        if (render && info->mData != NULL && info->mData->size() != 0) {  // was rendering requested?
            info->mNotify->setInt32("render", true);

            int64_t mediaTimeUs = -1;
            info->mData->meta()->findInt64("timeUs", &mediaTimeUs);

            int64_t renderTimeNs = 0;
            if (!msg->findInt64("timestampNs", &renderTimeNs)) {
                // use media timestamp if client did not request a specific render timestamp
                ALOGV("using buffer PTS of %lld", (long long)mediaTimeUs);
                renderTimeNs = mediaTimeUs * 1000;
            }
            info->mNotify->setInt64("timestampNs", renderTimeNs);  // the timestampNs supplied by the Renderer

            if (mSoftRenderer != NULL) {  // render in software when a soft renderer is present
                std::list<FrameRenderTracker::Info> doneFrames = mSoftRenderer->render(
                        info->mData->data(), info->mData->size(),
                        mediaTimeUs, renderTimeNs, NULL, info->mFormat);

                // if we are running, notify rendered frames
                if (!doneFrames.empty() && mState == STARTED && mOnFrameRenderedNotification != NULL) {
                    sp<AMessage> notify = mOnFrameRenderedNotification->dup();
                    sp<AMessage> data = new AMessage;
                    if (CreateFramesRenderedMessage(doneFrames, data)) {
                        notify->setMessage("data", data);
                        notify->post();
                    }
                }
            }
        }

        info->mNotify->post();
        info->mNotify = NULL;

        return OK;
    }

Here info->mNotify comes from mPortBuffers[kPortIndexOutput]; it is the reply that ACodec handed to MediaCodec. The next article therefore looks at the relationship between MediaCodec and ACodec.