PCL学习:刚性物体的位姿估计

代码说明:

1. 在存在遮挡情况的场景中估计某个刚性物体的位姿;

2. 采用类SampleConsensusPrerejective,实现基于采样一致性的位姿估计,其高效之处在于利用类CorrespondenceRejectorPoly提前去除了错误位姿的假设;

SampleConsensusPrerejective的关键成员函数:

| void | setSourceFeatures (const FeatureCloudConstPtr &features) |

| 设置一个指向源点云的特征描述对象的 boost 库的共享指针 features. | |

| void | setTargetFeatures (const FeatureCloudConstPtr &features) |

| 设置一个指向目标点云的特征描述对象的 boost 库的共享指针 features. | |

| void | setNumberOfSamples (int nr_samples) |

| 设置每次迭代中使用的样本数量,为了正常估计至少需要3对。 | |

| void | setCorrespondenceRandomness (int k) |

| 设置计算协方差时选择附近多少个点为邻居 :设置的点的数量 k 越高,协方差计算越准确但也会相应降低计算速度 | |

| void | setSimilarityThreshold (float similarity_threshold) |

| 设置多边形相似阈值,对于目标物体和场景上的对应点,根据他们在各自的空间里之间的欧式距离是不变得这一几何特性,使用类CorrespondenceRejectorPoly去消除错误对应点;这个值越接近1 ,该算法通过减少迭代次数而变得月快速;但是,当噪声存在时,这也增加了排查正确对应点对的风险。 | |

| void | setInlierFraction (float inlier_fraction) |

| 设置内点比例:对于噪声大、有遮挡的场景,不需要位姿估计的结果使得所有点都配准,如果在setInputSource点云中,内点数目占总点云的比例高于指定的内点比例nlier_fraction,即可认为变换假设是有效的。 | |

| void | setCorrespondenceRandomness (float distance) |

| 判断内点阈值:此处的阈值用来判断用假设变换矩阵变换setInputSource输入源点云后,于setInputTarget输入的目标点云最近点的距离小于所设阈值,则认为该点为内点; |

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

// Types

typedef pcl::PointNormal PointNT;

typedef pcl::PointCloud PointCloudT;

typedef pcl::FPFHSignature33 FeatureT; //FPFH特征描述子

typedef pcl::FPFHEstimation FeatureEstimationT; //FPFH特征估计类

typedef pcl::PointCloud FeatureCloudT;

typedef pcl::visualization::PointCloudColorHandlerCustom ColorHandlerT; //自定义颜色句柄

// Align a rigid object to a scene with clutter and occlusions

int

main (int argc, char **argv)

{

// Point clouds

PointCloudT::Ptr object (new PointCloudT);

PointCloudT::Ptr object_aligned (new PointCloudT);

PointCloudT::Ptr scene (new PointCloudT);

FeatureCloudT::Ptr object_features (new FeatureCloudT);

FeatureCloudT::Ptr scene_features (new FeatureCloudT);

// Get input object and scene

if (argc != 3)

{

pcl::console::print_error ("Syntax is: %s object.pcd scene.pcd\n", argv[0]);

return (1);

}

// 加载目标物体和场景点云

pcl::console::print_highlight ("Loading point clouds...\n");

if (pcl::io::loadPCDFile (argv[1], *object) < 0 ||

pcl::io::loadPCDFile (argv[2], *scene) < 0)

{

pcl::console::print_error ("Error loading object/scene file!\n");

return (1);

}

// 下采样:使用0.005提速分辨率对目标物体和场景点云进行空间下采样

pcl::console::print_highlight ("Downsampling...\n");

pcl::VoxelGrid grid;

const float leaf = 0.005f;

grid.setLeafSize (leaf, leaf, leaf);

grid.setInputCloud (object);

grid.filter (*object);

grid.setInputCloud (scene);

grid.filter (*scene);

// 估计场景法线

pcl::console::print_highlight ("Estimating scene normals...\n");

pcl::NormalEstimation nest;

//pcl::NormalEstimationOMP nest;

nest.setRadiusSearch (0.01);

nest.setInputCloud (scene);

nest.compute (*scene);

// 特征估计

pcl::console::print_highlight ("Estimating features...\n");

FeatureEstimationT fest;

fest.setRadiusSearch (0.025);//该搜索半径决定FPFH特征描述的范围,一般设置为分辨率10倍以上

fest.setInputCloud (object);

fest.setInputNormals (object);

fest.compute (*object_features);

fest.setInputCloud (scene);

fest.setInputNormals (scene);

fest.compute (*scene_features);

// 实施配准

pcl::console::print_highlight ("Starting alignment...\n");

pcl::SampleConsensusPrerejective align;//基于采样一致性的位姿估计

align.setInputSource (object);

align.setSourceFeatures (object_features);

align.setInputTarget (scene);

align.setTargetFeatures (scene_features);

align.setMaximumIterations (50000); // 采样一致性迭代次数

align.setNumberOfSamples (3); // 创建假设所需的样本数,为了正常估计至少需要3点

align.setCorrespondenceRandomness (5); // 使用的临近特征点的数目

align.setSimilarityThreshold (0.9f); // 多边形边长度相似度阈值

align.setMaxCorrespondenceDistance (2.5f * 0.005); // 判断是否为内点的距离阈值

align.setInlierFraction (0.25f); //接受位姿假设所需的内点比例

{

pcl::ScopeTime t("Alignment");

align.align (*object_aligned);

}

if (align.hasConverged ())

{

// Print results

printf ("\n");

Eigen::Matrix4f transformation = align.getFinalTransformation ();

pcl::console::print_info (" | %6.3f %6.3f %6.3f | \n", transformation (0,0), transformation (0,1), transformation (0,2));

pcl::console::print_info ("R = | %6.3f %6.3f %6.3f | \n", transformation (1,0), transformation (1,1), transformation (1,2));

pcl::console::print_info (" | %6.3f %6.3f %6.3f | \n", transformation (2,0), transformation (2,1), transformation (2,2));

pcl::console::print_info ("\n");

pcl::console::print_info ("t = < %0.3f, %0.3f, %0.3f >\n", transformation (0,3), transformation (1,3), transformation (2,3));

pcl::console::print_info ("\n");

pcl::console::print_info ("Inliers: %i/%i\n", align.getInliers ().size (), object->size ());

// Show alignment

pcl::visualization::PCLVisualizer visu("点云库PCL学习教程第二版-鲁棒位姿估计");

int v1(0),v2(0);

visu.createViewPort(0,0,0.5,1,v1);

visu.createViewPort(0.5,0,1,1,v2);

visu.setBackgroundColor(255,255,255,v1);

visu.addPointCloud (scene, ColorHandlerT (scene, 0.0, 255.0, 0.0), "scene",v1);

visu.addPointCloud (object_aligned, ColorHandlerT (object_aligned, 0.0, 0.0, 255.0), "object_aligned",v1);

visu.addPointCloud(object,ColorHandlerT (object, 0.0, 255.0, 0.0), "object_before_aligned",v2);

visu.addPointCloud(scene,ColorHandlerT (scene, 0.0, 0.0, 255.0), "scene_v2",v2);

visu.setPointCloudRenderingProperties(pcl::visualization::PCL_VISUALIZER_POINT_SIZE,3,"scene");

visu.setPointCloudRenderingProperties(pcl::visualization::PCL_VISUALIZER_POINT_SIZE,3,"object_aligned");

visu.setPointCloudRenderingProperties(pcl::visualization::PCL_VISUALIZER_POINT_SIZE,3,"object_before_aligned");

visu.setPointCloudRenderingProperties(pcl::visualization::PCL_VISUALIZER_POINT_SIZE,3,"scene_v2");

visu.spin ();

}

else

{

pcl::console::print_error ("Alignment failed!\n");

return (1);

}

return (0);

} cmd执行命令:

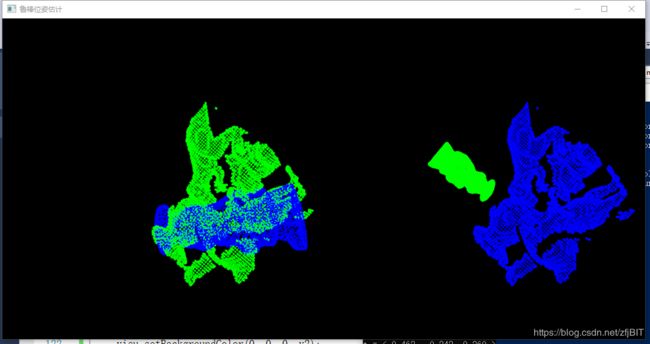

.\alignment_prerejective.exe ..\..\source\chef.pcd ..\..\source\rs1.pcd显示结果:右视图为原始点云,左视图为配准结果;蓝色为场景点云,绿色为目标点云;