[Full-Stack Road] Trying Out Seata 1.1.0 Distributed Transactions with Spring Boot

Open-source project: https://gitee.com/shuogesha/shop2020

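This time Seata is installed with Docker, so get a Docker environment ready first. If you need MySQL persistence or Nacos, prepare the corresponding configuration as well; that is not covered in detail here. Let's get started: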

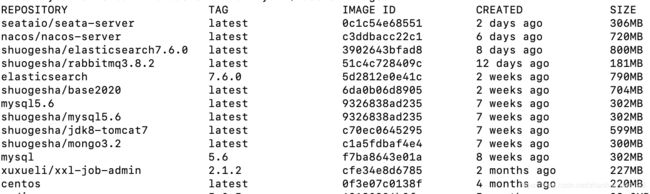

docker pull seataio/seata-server

When the pull finishes, run docker images to check that the image is there.

Then try starting the server:

docker run --name seata-server -p 8091:8091 -d seataio/seata-server:latest

Then run docker ps -a to check whether the container is running. If it did not start, look into what went wrong.
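docker ps -a only tells you that the container is up. As a rough extra check, you can try a plain TCP connect to the published port 8091. The sketch below assumes the server is published on 127.0.0.1:8091 as in the command above; the class and method names are made up for illustration. A successful connect only shows that something accepts connections on that port, so treat it as a hint rather than proof that Seata is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

final class SeataPortCheck {

    /** Returns true if a TCP connection to host:port succeeds within timeoutMillis. */
    static boolean isReachable(String host, int port, int timeoutMillis) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMillis);
            return true;
        } catch (IOException e) {
            // Connection refused, timeout, unknown host, and so on.
            return false;
        }
    }

    public static void main(String[] args) {
        // 127.0.0.1:8091 is an assumption taken from the -p 8091:8091 mapping.
        System.out.println("seata-server reachable: " + isReachable("127.0.0.1", 8091, 2000));
    }
}
```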

To see the default configuration, open a shell in the container with docker exec -it <container id> bash and look inside:

root@8f911515cc45:/seata-server# cd resources/

root@8f911515cc45:/seata-server/resources# ls

META-INF README-zh.md README.md file.conf file.conf.example io logback.xml registry.conf

root@8f911515cc45:/seata-server/resources# pwd

/seata-server/resources

root@8f911515cc45:/seata-server/resources#

You can of course use a custom configuration; see the Docker Hub page and tutorials for details.

Container shell and viewing logs

$ docker exec -it seata-server sh

$ tail -f /root/logs/seata/seata-server.log

Using a custom configuration file

The default configuration files are under /seata-server/resources; it is recommended to put custom configuration files in a different directory. When using a custom configuration, the environment variable SEATA_CONFIG_NAME is required, and its value must start with file:, for example file:/root/seata-config/registry:

$ docker run --name seata-server \
  -p 8091:8091 \
  -e SEATA_CONFIG_NAME=file:/root/seata-config/registry \
  -v /PATH/TO/CONFIG_FILE:/root/seata-config \
  seataio/seata-server

The actual configuration files can be copied out of the container. registry.conf:

registry {

# file 、nacos 、eureka、redis、zk、consul、etcd3、sofa

type = "file"

nacos {

serverAddr = "localhost"

namespace = ""

cluster = "default"

}

eureka {

serviceUrl = "http://localhost:8761/eureka"

application = "default"

weight = "1"

}

redis {

serverAddr = "localhost:6379"

db = "0"

}

zk {

cluster = "default"

serverAddr = "127.0.0.1:2181"

session.timeout = 6000

connect.timeout = 2000

}

consul {

cluster = "default"

serverAddr = "127.0.0.1:8500"

}

etcd3 {

cluster = "default"

serverAddr = "http://localhost:2379"

}

sofa {

serverAddr = "127.0.0.1:9603"

application = "default"

region = "DEFAULT_ZONE"

datacenter = "DefaultDataCenter"

cluster = "default"

group = "SEATA_GROUP"

addressWaitTime = "3000"

}

file {

name = "file.conf"

}

}

config {

# file、nacos 、apollo、zk、consul、etcd3

type = "file"

nacos {

serverAddr = "localhost"

namespace = ""

group = "SEATA_GROUP"

}

consul {

serverAddr = "127.0.0.1:8500"

}

apollo {

app.id = "seata-server"

apollo.meta = "http://192.168.1.204:8801"

namespace = "application"

}

zk {

serverAddr = "127.0.0.1:2181"

session.timeout = 6000

connect.timeout = 2000

}

etcd3 {

serverAddr = "http://localhost:2379"

}

file {

name = "file.conf"

}

}

file.conf

## transaction log store, only used in seata-server

store {

## store mode: file、db

mode = "db"

## file store property

file {

## store location dir

dir = "sessionStore"

# branch session size , if exceeded first try compress lockkey, still exceeded throws exceptions

maxBranchSessionSize = 16384

# globe session size , if exceeded throws exceptions

maxGlobalSessionSize = 512

# file buffer size , if exceeded allocate new buffer

fileWriteBufferCacheSize = 16384

# when recover batch read size

sessionReloadReadSize = 100

# async, sync

flushDiskMode = async

}

## database store property

db {

## the implement of javax.sql.DataSource, such as DruidDataSource(druid)/BasicDataSource(dbcp) etc.

datasource = "dbcp"

## mysql/oracle/h2/oceanbase etc.

dbType = "mysql"

driverClassName = "com.mysql.jdbc.Driver"

url = "jdbc:mysql://mysql5.6:3306/base2020"

user = "root"

password = "yi"

minConn = 1

maxConn = 10

globalTable = "global_table"

branchTable = "branch_table"

lockTable = "lock_table"

queryLimit = 100

}

}

My monolithic Spring Boot app stores the transaction log directly in MySQL. Once the MySQL container is running, run:

docker run --name seata-server -p 8091:8091 --link mysql5.6:mysql5.6 -e SEATA_CONFIG_NAME=file:/root/seata-config/registry -v /Users/zhaohaiyuan/Downloads/docker/seata/config:/root/seata-config -v /Users/zhaohaiyuan/Downloads/docker/seata/log:/root/logs -d seataio/seata-server

That completes the preparation. Now for the main part.

Add the dependency to pom.xml:

<dependency>
  <groupId>io.seata</groupId>
  <artifactId>seata-spring-boot-starter</artifactId>
  <version>1.1.0</version>
</dependency>

Configure the yml:

#====================================Seata Config===============================================
seata:
  enabled: true
  application-id: base2020
  tx-service-group: my_test_tx_group
  #enable-auto-data-source-proxy: true
  #use-jdk-proxy: false
  client:
    rm:
      async-commit-buffer-limit: 1000
      report-retry-count: 5
      table-meta-check-enable: false
      report-success-enable: false
      lock:
        retry-interval: 10
        retry-times: 30
        retry-policy-branch-rollback-on-conflict: true
    tm:
      commit-retry-count: 5
      rollback-retry-count: 5
    undo:
      data-validation: true
      log-serialization: jackson
      log-table: undo_log
    log:
      exceptionRate: 100
  service:
    vgroup-mapping:
      my_test_tx_group: default
    grouplist:
      default: 127.0.0.1:8091
    #enable-degrade: false
    #disable-global-transaction: false
  transport:
    shutdown:
      wait: 3
    thread-factory:
      boss-thread-prefix: NettyBoss
      worker-thread-prefix: NettyServerNIOWorker
      server-executor-thread-prefix: NettyServerBizHandler
      share-boss-worker: false
      client-selector-thread-prefix: NettyClientSelector
      client-selector-thread-size: 1
      client-worker-thread-prefix: NettyClientWorkerThread
      worker-thread-size: default
      boss-thread-size: 1
    type: TCP
    server: NIO
    heartbeat: true
    serialization: seata
    compressor: none
    enable-client-batch-send-request: true
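The service part of this config is easiest to read as a two-step lookup: tx-service-group names the transaction group, vgroup-mapping maps that group to a cluster name (default here), and grouplist maps the cluster name to the server address. The sketch below only illustrates that reading with two plain maps; it is not Seata's own code, and the class and method names are hypothetical.

```java
import java.util.HashMap;
import java.util.Map;

final class VgroupLookupSketch {

    /** Resolves a transaction group to a server address via vgroup-mapping, then grouplist. */
    static String resolveServerAddress(String txServiceGroup,
                                       Map<String, String> vgroupMapping,
                                       Map<String, String> grouplist) {
        String cluster = vgroupMapping.get(txServiceGroup);
        if (cluster == null) {
            throw new IllegalStateException("no vgroup-mapping entry for " + txServiceGroup);
        }
        String address = grouplist.get(cluster);
        if (address == null) {
            throw new IllegalStateException("no grouplist entry for cluster " + cluster);
        }
        return address;
    }

    public static void main(String[] args) {
        // Values copied from the yml above.
        Map<String, String> vgroupMapping = new HashMap<>();
        vgroupMapping.put("my_test_tx_group", "default");
        Map<String, String> grouplist = new HashMap<>();
        grouplist.put("default", "127.0.0.1:8091");

        System.out.println(resolveServerAddress("my_test_tx_group", vgroupMapping, grouplist));
    }
}
```

If the group name in tx-service-group has no matching key under vgroup-mapping, the first lookup fails, which is worth checking first when the client cannot find the server.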

Next, trigger an exception in the business code.

Add @GlobalTransactional(timeoutMills = 300000, name = "base2020-seata-example") to the method:

@GlobalTransactional(timeoutMills = 300000, name = "base2020-seata-example")
public void save(Notice bean) {
    dao.saveEntity(bean);
    // Thrown on purpose to check that the insert above is rolled back.
    throw new RuntimeException("notice branch exception");
}
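Conceptually, the annotation wraps the method in a begin / commit-or-rollback template: begin a global transaction, run the business code, roll back and rethrow if it throws, otherwise commit. The sketch below is a simplified model of that flow in plain Java, not Seata's implementation. The Coordinator interface and RecordingCoordinator class are hypothetical stand-ins invented for this example.

```java
import java.util.ArrayList;
import java.util.List;

final class GlobalTxTemplateSketch {

    /** Hypothetical stand-in for a transaction coordinator client; not a Seata type. */
    interface Coordinator {
        void begin(String name, int timeoutMillis);
        void commit();
        void rollback();
    }

    /** Runs the business logic; commits on success, rolls back and rethrows on failure. */
    static void execute(Coordinator tc, String name, int timeoutMillis, Runnable business) {
        tc.begin(name, timeoutMillis);
        try {
            business.run();
        } catch (RuntimeException e) {
            tc.rollback(); // undo the work done inside the transaction
            throw e;       // the caller still sees the original exception
        }
        tc.commit();
    }

    /** Test double that records which calls were made, in order. */
    static final class RecordingCoordinator implements Coordinator {
        final List<String> calls = new ArrayList<>();
        public void begin(String name, int timeoutMillis) { calls.add("begin:" + name); }
        public void commit() { calls.add("commit"); }
        public void rollback() { calls.add("rollback"); }
    }

    public static void main(String[] args) {
        RecordingCoordinator tc = new RecordingCoordinator();
        try {
            execute(tc, "base2020-seata-example", 300000, () -> {
                throw new RuntimeException("notice branch exception");
            });
        } catch (RuntimeException e) {
            System.out.println("caller saw: " + e.getMessage());
        }
        System.out.println(tc.calls); // begin followed by rollback, no commit
    }
}
```

This matches what the test in this post expects: the exception still reaches the caller, and the server log should show a rollback rather than a commit.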

OK, submit some data to test.

Check the application log:

2020-02-23 12:32:19.197 INFO 88622 --- [lector_TMROLE_1] i.s.c.r.netty.AbstractRpcRemotingClient : channel inactive: [id: 0x8d619b6a, L:/127.0.0.1:62129 ! R:/127.0.0.1:8091]

2020-02-23 12:32:19.197 INFO 88622 --- [lector_TMROLE_1] i.s.c.r.netty.NettyClientChannelManager : return to pool, rm channel:[id: 0x8d619b6a, L:/127.0.0.1:62129 ! R:/127.0.0.1:8091]

2020-02-23 12:32:19.197 INFO 88622 --- [lector_TMROLE_1] i.s.core.rpc.netty.NettyPoolableFactory : channel valid false,channel:[id: 0x8d619b6a, L:/127.0.0.1:62129 ! R:/127.0.0.1:8091]

2020-02-23 12:32:19.197 INFO 88622 --- [lector_TMROLE_1] i.s.core.rpc.netty.NettyPoolableFactory : will destroy channel:[id: 0x8d619b6a, L:/127.0.0.1:62129 ! R:/127.0.0.1:8091]

2020-02-23 12:32:19.197 INFO 88622 --- [lector_TMROLE_1] i.s.core.rpc.netty.AbstractRpcRemoting : ChannelHandlerContext(AbstractRpcRemotingClient$ClientHandler#0, [id: 0x8d619b6a, L:/127.0.0.1:62129 ! R:/127.0.0.1:8091]) will closed

2020-02-23 12:32:19.197 INFO 88622 --- [lector_TMROLE_1] i.s.core.rpc.netty.AbstractRpcRemoting : ChannelHandlerContext(AbstractRpcRemotingClient$ClientHandler#0, [id: 0x8d619b6a, L:/127.0.0.1:62129 ! R:/127.0.0.1:8091]) will closed

Then look at the logs inside the Docker container:

2020-02-23T04:28:29.566588000Z 2020-02-23 04:28:29.564 INFO [batchLoggerPrint_1]io.seata.core.rpc.DefaultServerMessageListenerImpl.run:206 -SeataMergeMessage xid=172.17.0.3:8091:2036131054,branchType=AT,resourceId=jdbc:mysql://localhost:3306/base2020,lockKey=e_notice:14

2020-02-23T04:28:29.566674100Z ,clientIp:172.17.0.1,vgroup:my_test_tx_group

2020-02-23T04:28:29.568356300Z 2020-02-23 04:28:29.566 INFO [ServerHandlerThread_1_500]io.seata.server.coordinator.AbstractCore.lambda$branchRegister$0:86 -Successfully register branch xid = 172.17.0.3:8091:2036131054, branchId = 2036131055

2020-02-23T04:28:29.579875600Z 2020-02-23 04:28:29.576 INFO [batchLoggerPrint_1]io.seata.core.rpc.DefaultServerMessageListenerImpl.run:206 -SeataMergeMessage xid=172.17.0.3:8091:2036131054,extraData=null

2020-02-23T04:28:29.579965800Z ,clientIp:172.17.0.1,vgroup:my_test_tx_group

2020-02-23T04:28:29.586873900Z 2020-02-23 04:28:29.585 INFO [ServerHandlerThread_1_500]io.seata.server.coordinator.DefaultCore.doGlobalRollback:288 -Successfully rollback branch xid=172.17.0.3:8091:2036131054 branchId=2036131055

2020-02-23T04:28:29.588391500Z 2020-02-23 04:28:29.587 INFO [ServerHandlerThread_1_500]io.seata.server.coordinator.DefaultCore.doGlobalRollback:334 -Successfully rollback global, xid = 172.17.0.3:8091:2036131054

2020-02-23T04:31:10.464211400Z 2020-02-23 04:31:10.446 INFO [batchLoggerPrint_1]io.seata.core.rpc.DefaultServerMessageListenerImpl.run:206 -SeataMergeMessage timeout=300000,transactionName=base2020-seata-example

2020-02-23T04:31:10.464797600Z ,clientIp:172.17.0.1,vgroup:my_test_tx_group

2020-02-23T04:31:10.469364600Z 2020-02-23 04:31:10.446 INFO [ServerHandlerThread_1_500]io.seata.server.coordinator.DefaultCore.begin:134 -Successfully begin global transaction xid = 172.17.0.3:8091:2036131056

2020-02-23T04:31:10.488129500Z 2020-02-23 04:31:10.482 INFO [batchLoggerPrint_1]io.seata.core.rpc.DefaultServerMessageListenerImpl.run:206 -SeataMergeMessage xid=172.17.0.3:8091:2036131056,branchType=AT,resourceId=jdbc:mysql://localhost:3306/base2020,lockKey=e_notice:15

2020-02-23T04:31:10.488758500Z ,clientIp:172.17.0.1,vgroup:my_test_tx_group

2020-02-23T04:31:10.494256600Z 2020-02-23 04:31:10.483 INFO [ServerHandlerThread_1_500]io.seata.server.coordinator.AbstractCore.lambda$branchRegister$0:86 -Successfully register branch xid = 172.17.0.3:8091:2036131056, branchId = 2036131057

2020-02-23T04:31:10.509472500Z 2020-02-23 04:31:10.507 INFO [batchLoggerPrint_1]io.seata.core.rpc.DefaultServerMessageListenerImpl.run:206 -SeataMergeMessage xid=172.17.0.3:8091:2036131056,extraData=null

2020-02-23T04:31:10.509562300Z ,clientIp:172.17.0.1,vgroup:my_test_tx_group

2020-02-23T04:31:10.525126800Z 2020-02-23 04:31:10.521 INFO [ServerHandlerThread_1_500]io.seata.server.coordinator.DefaultCore.doGlobalRollback:288 -Successfully rollback branch xid=172.17.0.3:8091:2036131056 branchId=2036131057

2020-02-23T04:31:10.528866400Z 2020-02-23 04:31:10.525 INFO [ServerHandlerThread_1_500]io.seata.server.coordinator.DefaultCore.doGlobalRollback:334 -Successfully rollback global, xid = 172.17.0.3:8091:2036131056

2020-02-23T04:32:19.210041200Z 2020-02-23 04:32:19.203 INFO [NettyServerNIOWorker_1_8]io.seata.core.rpc.netty.AbstractRpcRemotingServer.handleDisconnect:254 -172.17.0.1:50482 to server channel inactive.

2020-02-23T04:32:19.211533000Z 2020-02-23 04:32:19.210 INFO [NettyServerNIOWorker_1_8]io.seata.core.rpc.netty.AbstractRpcRemotingServer.handleDisconnect:259 -remove channel:[id: 0xd3afcf25, L:0.0.0.0/0.0.0.0:8091 ! R:/172.17.0.1:50482]context:RpcContext{applicationId='base2020', transactionServiceGroup='my_test_tx_group', clientId='base2020:172.17.0.1:50482', channel=[id: 0xd3afcf25, L:0.0.0.0/0.0.0.0:8091 ! R:/172.17.0.1:50482], resourceSets=null}

2020-02-23T04:32:20.202774200Z 2020-02-23 04:32:20.199 INFO [NettyServerNIOWorker_1_8]io.seata.core.rpc.netty.AbstractRpcRemotingServer.handleDisconnect:254 -172.17.0.1:50484 to server channel inactive.

2020-02-23T04:32:20.204923300Z 2020-02-23 04:32:20.203 INFO [NettyServerNIOWorker_1_8]io.seata.core.rpc.netty.AbstractRpcRemotingServer.handleDisconnect:259 -remove channel:[id: 0x76cd8fb6, L:/172.17.0.3:8091 ! R:/172.17.0.1:50484]context:RpcContext{applicationId='base2020', transactionServiceGroup='my_test_tx_group', clientId='base2020:172.17.0.1:50484', channel=[id: 0x76cd8fb6, L:/172.17.0.3:8091 ! R:/172.17.0.1:50484], resourceSets=[]}
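The xid values in the server log, such as 172.17.0.3:8091:2036131054, look like server ip, server port, and a transaction id joined by colons. Assuming that layout (inferred from the log lines above), a small helper can split an xid when you are grepping logs. The class name and fields are made up for this sketch.

```java
final class XidParts {
    final String host;
    final int port;
    final long transactionId;

    private XidParts(String host, int port, long transactionId) {
        this.host = host;
        this.port = port;
        this.transactionId = transactionId;
    }

    /** Splits "host:port:transactionId" on its last two colons. */
    static XidParts parse(String xid) {
        int last = xid.lastIndexOf(':');
        int middle = xid.lastIndexOf(':', last - 1);
        if (middle < 0) {
            throw new IllegalArgumentException("unexpected xid format: " + xid);
        }
        String host = xid.substring(0, middle);
        int port = Integer.parseInt(xid.substring(middle + 1, last));
        long transactionId = Long.parseLong(xid.substring(last + 1));
        return new XidParts(host, port, transactionId);
    }

    public static void main(String[] args) {
        // xid taken from the rollback lines in the server log.
        XidParts parts = parse("172.17.0.3:8091:2036131054");
        System.out.println(parts.host + " " + parts.port + " " + parts.transactionId);
    }
}
```

In the log above the host part is 172.17.0.3, the address the server container sees for itself, and the port matches the 8091 that the client connects to.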