python

I. Python on Windows

To develop or run Python code locally on Windows, you first need to install a Python development environment there. You can then use it to test Python code and learn the language.

Steps

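1. First install JDK 1.8 and configure its environment variables

JDK 1.8 download link

2. Install Anaconda and configure its environment variables

Anaconda download link

3. Install Spark 1.6.1 and configure its environment variables

Spark 1.6.1 download link

4. Install Hadoop 2.6.0 and configure its environment variables

Hadoop 2.6.0 download link

5. Install PyCharm

PyCharm (Python IDE) download link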

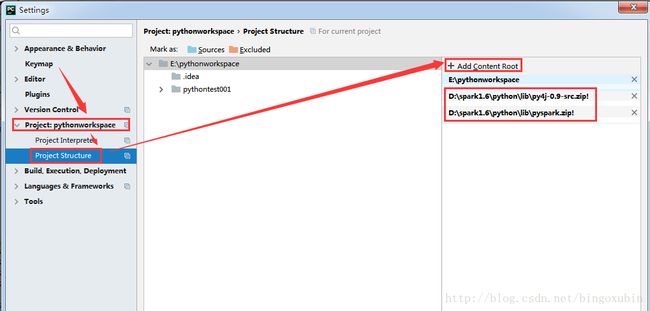
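Steps 1 to 4 each end with "configure the environment variables", but the article does not name them. The sketch below assumes the conventional names JAVA_HOME, SPARK_HOME and HADOOP_HOME (an assumption; adjust the list to your setup) and reports any that are unset or point to a directory that does not exist. The function name check_env is hypothetical.

```python
import os

# Assumed variable names; the article only says "configure the environment variables"
REQUIRED_VARS = ["JAVA_HOME", "SPARK_HOME", "HADOOP_HOME"]


def check_env(names=REQUIRED_VARS, env=None):
    """Return {variable: reason} for every variable that is unset or points nowhere."""
    env = os.environ if env is None else env
    problems = {}
    for name in names:
        value = env.get(name)
        if not value:
            problems[name] = "not set"
        elif not os.path.isdir(value):
            problems[name] = "directory does not exist: " + value
    return problems


if __name__ == "__main__":
    issues = check_env()
    if issues:
        for name, reason in issues.items():
            print(name + ": " + reason)
    else:
        print("All environment variables look fine.")
```

Run it from the Anaconda Python after opening a new command prompt, since already-open prompts do not see newly set variables.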

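Once everything is installed, start PyCharm, open File > Settings from the menu at the top left, and configure it as shown in the figure. The main step is adding Spark's two dependency packages.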
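The text does not name the two packages. In a standard Spark binary distribution, Python code needs the pyspark sources in %SPARK_HOME%\python and the bundled Py4J library, shipped as a py4j-*-src.zip file in %SPARK_HOME%\python\lib; the sketch below assumes that layout. As an alternative to the PyCharm setting that also works outside the IDE, it prepends both to sys.path at runtime. The function names spark_python_paths and add_spark_to_path are hypothetical.

```python
import glob
import os
import sys


def spark_python_paths(spark_home):
    """Return the directory and zip(s) that make pyspark importable (standard layout assumed)."""
    python_dir = os.path.join(spark_home, "python")
    # Py4J ships as a source zip, e.g. python/lib/py4j-<version>-src.zip
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    return [python_dir] + py4j_zips


def add_spark_to_path(spark_home=None):
    """Prepend Spark's Python libraries to sys.path; spark_home defaults to $SPARK_HOME."""
    spark_home = spark_home or os.environ["SPARK_HOME"]
    # Insert in reverse so the final order matches spark_python_paths()
    for path in reversed(spark_python_paths(spark_home)):
        if path not in sys.path:
            sys.path.insert(0, path)


if __name__ == "__main__":
    add_spark_to_path()
    from pyspark import SparkContext  # should import without any IDE settings
    print("pyspark found:", SparkContext)
```

Python can import packages directly from a zip file on sys.path, which is why the Py4J zip does not need to be extracted.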

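II. Python on Linux

To run Python code on Linux you need a Python environment. CentOS usually ships with one: CentOS 6 includes Python 2.6 and CentOS 7 includes Python 2.7. If you want Python 3, however, you have to install it yourself.

Steps

1. The Python bundled with CentOS 7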

# python #run python to see the bundled version

# which python #show where the bundled python is located

# cd /usr/bin/

# ls -al python* #list the python files and links

As the listing shows, running python actually just follows a symbolic link to python2. To switch to Python 3, you only need to repoint the python link in /usr/bin/ at python3.
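The `ls -al python*` listing shows the link chain by eye. The same chain can be followed programmatically with os.readlink, one hop at a time; the helper name link_chain is hypothetical. On CentOS 7 the chain for /usr/bin/python should match the one described above (python, then python2, then python2.7).

```python
import os


def link_chain(path):
    """Follow a chain of symbolic links one hop at a time and return every path visited."""
    chain = [path]
    seen = {os.path.abspath(path)}
    while os.path.islink(path):
        target = os.readlink(path)
        # Relative targets such as "python2" are relative to the link's own directory
        if not os.path.isabs(target):
            target = os.path.join(os.path.dirname(path), target)
        path = os.path.normpath(target)
        if os.path.abspath(path) in seen:  # stop on a link loop
            break
        seen.add(os.path.abspath(path))
        chain.append(path)
    return chain


if __name__ == "__main__":
    print(" -> ".join(link_chain("/usr/bin/python")))
```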

2. Install the dependencies that building Python 3.5 may need

# yum install openssl-devel bzip2-devel expat-devel gdbm-devel readline-devel sqlite-devel

3. Download Python

# cd /opt/

# wget "https://www.python.org/ftp/python/3.5.0/Python-3.5.0.tgz"

4. Extract the downloaded archive

# tar -zxvf Python-3.5.0.tgz

5. Configure and compile

# sudo mkdir /usr/local/python3

# sudo Python-3.5.0/configure --prefix=/usr/local/python3

# sudo make

# sudo make install
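The -devel packages from step 2 matter because the matching standard-library extension modules are only compiled when those headers are present; if one is missing, the build usually still finishes and the module is simply absent. A quick check is to run the script below with /usr/local/python3/bin/python3. The package-to-module mapping is this sketch's own reading of what each library provides, and missing_modules is a hypothetical name.

```python
import importlib

# Standard-library modules that depend on the -devel packages from step 2
MODULES = {
    "ssl": "openssl-devel",
    "bz2": "bzip2-devel",
    "xml.parsers.expat": "expat-devel",
    "dbm.gnu": "gdbm-devel",
    "readline": "readline-devel",
    "sqlite3": "sqlite-devel",
}


def missing_modules(modules=MODULES):
    """Return {module: package} for every module that fails to import."""
    missing = {}
    for module, package in modules.items():
        try:
            importlib.import_module(module)
        except ImportError:
            missing[module] = package
    return missing


if __name__ == "__main__":
    for module, package in sorted(missing_modules().items()):
        print("missing %s: install %s and rebuild" % (module, package))
    print("check finished")
```

If anything is reported, install the package with yum and repeat the configure, make and make install steps.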

6. Back up the old python link and link in the new version

As described in step 1, in /usr/bin python points to python2, and python2 points to python2.7.

# cd /usr/bin/

# sudo mv python python.bak

# sudo ln -s /usr/local/python3/bin/python3 /usr/bin/python
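Step 6 can also be scripted. The sketch below performs the same two operations as the mv and ln -s commands, and refuses to run if a backup already exists so an earlier backup is never overwritten. The function relink and its parameters are hypothetical; run it with the new python3, as root when bin_dir is /usr/bin.

```python
import os


def relink(bin_dir, new_target, name="python", backup_suffix=".bak"):
    """Rename bin_dir/name to name + backup_suffix, then create name -> new_target."""
    link = os.path.join(bin_dir, name)
    backup = link + backup_suffix
    if os.path.lexists(backup):
        raise FileExistsError(backup + " already exists; refusing to overwrite it")
    os.rename(link, backup)       # like: mv python python.bak (renames the link itself)
    os.symlink(new_target, link)  # like: ln -s /usr/local/python3/bin/python3 /usr/bin/python
    return link, backup


if __name__ == "__main__":
    relink("/usr/bin", "/usr/local/python3/bin/python3")
```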

7. Edit the yum launcher script

# vim /usr/bin/yum

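Change the first line of the file from #!/usr/bin/python to #!/usr/bin/python2.7. Because /usr/bin/python now points to Python 3, this keeps yum, which is written for Python 2, running under Python 2.7.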
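The edit in step 7 only touches the first line of /usr/bin/yum. A scripted version might look like the following; set_shebang is a hypothetical helper, and as with vim you need root to write to /usr/bin/yum. It rewrites the file in place and returns the old first line, so note that value if you want to undo the change.

```python
def set_shebang(path, interpreter):
    """Replace a script's '#!' first line with '#!' + interpreter; return the old line."""
    with open(path) as f:
        lines = f.readlines()
    if not lines or not lines[0].startswith("#!"):
        raise ValueError(path + " does not start with a shebang line")
    old = lines[0].rstrip("\n")
    lines[0] = "#!" + interpreter + "\n"
    # Opening with "w" truncates the existing file, so its permissions are kept
    with open(path, "w") as f:
        f.writelines(lines)
    return old


if __name__ == "__main__":
    previous = set_shebang("/usr/bin/yum", "/usr/bin/python2.7")
    print("replaced", previous)
```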

Finally

Run python and you should see version 3.5.0.

Run python2 and you should see version 2.7.5.
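To verify both interpreters from a script instead of by hand, run each with --version. Python 2 prints its version to stderr while Python 3.4 and later print it to stdout, so the sketch merges the two streams. interpreter_version is a hypothetical helper name.

```python
import subprocess


def interpreter_version(executable):
    """Run '<executable> --version' and return its output, e.g. 'Python 3.5.0'."""
    out = subprocess.check_output([executable, "--version"], stderr=subprocess.STDOUT)
    return out.decode().strip()


if __name__ == "__main__":
    for exe in ("python", "python2"):
        print(exe, "->", interpreter_version(exe))
```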

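III. Python demo

Python offers a huge collection of ready-made algorithms and tools that are very convenient to use, covering fields such as medicine, biology, mathematics, computer science and artificial intelligence. We don't need all of it, only the parts relevant to our task, and using them keeps code concise and development easy.

Scenario

Take the novel 天龙八部 (Demi-Gods and Semi-Devils), count the words that appear in it, and draw a word cloud from those words.

Download link for 天龙八部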

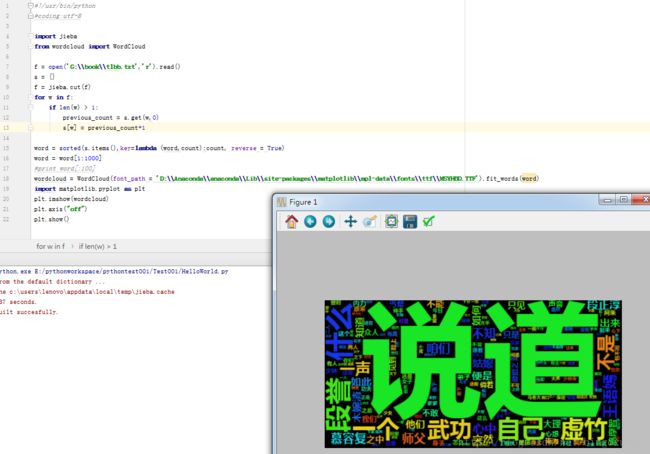

Test code

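import jieba
from wordcloud import WordCloud
import matplotlib.pyplot as plt

# Read the whole novel (on Python 3, pass encoding=... to open() if the file is not in the system default encoding)
with open('G:\\book\\tlbb.txt', 'r') as fh:
    text = fh.read()

# Segment the text with jieba and count every word longer than one character
counts = {}
for w in jieba.cut(text):
    if len(w) > 1:
        counts[w] = counts.get(w, 0) + 1

# Sort (word, count) pairs by count, highest first; key=item[1] works on Python 2 and 3
word = sorted(counts.items(), key=lambda item: item[1], reverse=True)
# Skip the single most frequent word and keep the next 999
word = word[1:1000]
# print(word[:100])

# font_path must point to a font with Chinese glyphs; recent wordcloud versions expect a dict in fit_words()
wordcloud = WordCloud(font_path='D:\\Anaconda\\anaconda\\Lib\\site-packages\\matplotlib\\mpl-data\\fonts\\ttf\\MSYHBD.TTF').fit_words(dict(word))

plt.imshow(wordcloud)
plt.axis("off")
plt.show()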
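The counting loop and the sort in the test code can also be written with collections.Counter from the standard library, whose most_common() returns (word, count) pairs sorted by count. The sketch takes an already segmented token list (for example list(jieba.cut(text))) so it runs without jieba; top_words and the toy tokens are illustrative only.

```python
from collections import Counter


def top_words(tokens, skip=1, limit=1000):
    """Count tokens longer than one character; return (word, count) pairs by frequency.

    skip/limit mirror word[1:1000] in the demo: keep positions skip..limit-1.
    """
    counts = Counter(t for t in tokens if len(t) > 1)
    return counts.most_common(limit)[skip:]


# Toy token list, not data from the novel
tokens = ["小说", "词云", "小说", "的", "统计", "小说", "统计"]
print(top_words(tokens, skip=0))  # [('小说', 3), ('统计', 2), ('词云', 1)]
```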

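Result

Note: filtering out meaningless words and drawing the word cloud in the shape of a picture, to make it prettier, are left for you to explore!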
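For the first suggestion, one simple approach is to drop words found in a stopword list before calling fit_words(). The sketch assumes a hypothetical UTF-8 file with one stopword per line; no such file comes with the article. For the second suggestion, WordCloud also accepts a mask argument, a NumPy image array whose pure-white areas are left empty, which is the usual way to draw picture-shaped clouds.

```python
import io


def load_stopwords(path):
    """Read one stopword per line from a UTF-8 text file (hypothetical file)."""
    with io.open(path, encoding="utf-8") as f:
        return set(line.strip() for line in f if line.strip())


def remove_stopwords(pairs, stopwords):
    """Keep only the (word, count) pairs whose word is not a stopword."""
    return [(w, c) for w, c in pairs if w not in stopwords]


if __name__ == "__main__":
    pairs = [("说道", 10), ("小说", 7), ("一个", 5)]
    print(remove_stopwords(pairs, {"说道", "一个"}))
```

In the demo this would go right before the WordCloud call, e.g. word = remove_stopwords(word, load_stopwords('stopwords.txt')).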

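IV. Python on Spark

Spark is an extremely powerful computing engine written in Scala. It computes in memory and provides convenient tools for graph computation, stream processing, machine learning and interactive queries. We can also drive Spark from Python code and let Spark do the computation for us, which can make processing large data sets much faster.

Procedure

If you set up the Python development environment following the Windows instructions in Part I, the Spark environment is in fact already deployed, so you can start writing Spark programs in Python right away.

Code example: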

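from pyspark import SparkContext

# Run Spark locally, with the application name "Simple App"
sc = SparkContext("local", "Simple App")

# Two "documents", each a list of words
doc = sc.parallelize([['a', 'b', 'c'], ['b', 'd', 'd']])

# Vocabulary: every distinct word across all documents, mapped to an index
words = doc.flatMap(lambda d: d).distinct().collect()
word_dict = {w: i for i, w in enumerate(words)}

# Broadcast the vocabulary so each executor gets one read-only copy
word_dict_b = sc.broadcast(word_dict)

def wordCountPerDoc(d):
    """Count the words of one document, keyed by their vocabulary index."""
    counts = {}
    wd = word_dict_b.value
    for w in d:
        idx = wd[w]
        counts[idx] = counts.get(idx, 0) + 1
    return counts

print(doc.map(wordCountPerDoc).collect())
print("successful!")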
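To see what the Spark job computes, here is the same per-document count in plain Python, without Spark. It is only a local illustration: the vocabulary is sorted here, whereas in the Spark version each word's index depends on the order in which distinct().collect() happens to return the words. That is why the Spark output that follows maps b to 2 and d to 3, while this sketch maps b to 1. build_vocab and count_per_doc are hypothetical names.

```python
from collections import Counter


def build_vocab(docs):
    """Map each distinct word to an index (sorted, so the result is deterministic)."""
    return {w: i for i, w in enumerate(sorted({w for d in docs for w in d}))}


def count_per_doc(docs, vocab):
    """For each document, count word occurrences keyed by vocabulary index."""
    return [dict(Counter(vocab[w] for w in d)) for d in docs]


docs = [['a', 'b', 'c'], ['b', 'd', 'd']]
vocab = build_vocab(docs)
print(count_per_doc(docs, vocab))  # [{0: 1, 1: 1, 2: 1}, {1: 1, 3: 2}]
```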

Output:

D:\Anaconda\anaconda\python.exe E:/pythonworkspace/pythontest001/Test001/test002.py

Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

17/11/21 15:00:18 INFO SparkContext: Running Spark version 1.6.1

17/11/21 15:00:21 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

17/11/21 15:00:21 INFO SecurityManager: Changing view acls to: lenovo

17/11/21 15:00:21 INFO SecurityManager: Changing modify acls to: lenovo

17/11/21 15:00:21 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(lenovo); users with modify permissions: Set(lenovo)

17/11/21 15:00:25 INFO Utils: Successfully started service 'sparkDriver' on port 60670.

17/11/21 15:00:25 INFO Slf4jLogger: Slf4jLogger started

17/11/21 15:00:25 INFO Remoting: Starting remoting

17/11/21 15:00:26 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriverActorSystem@192.168.114.67:60684]

17/11/21 15:00:26 INFO Utils: Successfully started service 'sparkDriverActorSystem' on port 60684.

17/11/21 15:00:26 INFO SparkEnv: Registering MapOutputTracker

17/11/21 15:00:26 INFO SparkEnv: Registering BlockManagerMaster

17/11/21 15:00:26 INFO DiskBlockManager: Created local directory at C:\Users\lenovo\AppData\Local\Temp\blockmgr-a0245427-988c-4b5a-8653-ee9e228de6ba

17/11/21 15:00:26 INFO MemoryStore: MemoryStore started with capacity 511.1 MB

17/11/21 15:00:26 INFO SparkEnv: Registering OutputCommitCoordinator

17/11/21 15:00:26 INFO Utils: Successfully started service 'SparkUI' on port 4040.

17/11/21 15:00:26 INFO SparkUI: Started SparkUI at http://192.168.114.67:4040

17/11/21 15:00:27 INFO Executor: Starting executor ID driver on host localhost

17/11/21 15:00:27 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 60691.

17/11/21 15:00:27 INFO NettyBlockTransferService: Server created on 60691

17/11/21 15:00:27 INFO BlockManagerMaster: Trying to register BlockManager

17/11/21 15:00:27 INFO BlockManagerMasterEndpoint: Registering block manager localhost:60691 with 511.1 MB RAM, BlockManagerId(driver, localhost, 60691)

17/11/21 15:00:27 INFO BlockManagerMaster: Registered BlockManager

17/11/21 15:00:28 INFO SparkContext: Starting job: collect at E:/pythonworkspace/pythontest001/Test001/test002.py:5

17/11/21 15:00:28 INFO DAGScheduler: Registering RDD 2 (distinct at E:/pythonworkspace/pythontest001/Test001/test002.py:5)

17/11/21 15:00:28 INFO DAGScheduler: Got job 0 (collect at E:/pythonworkspace/pythontest001/Test001/test002.py:5) with 1 output partitions

17/11/21 15:00:28 INFO DAGScheduler: Final stage: ResultStage 1 (collect at E:/pythonworkspace/pythontest001/Test001/test002.py:5)

17/11/21 15:00:28 INFO DAGScheduler: Parents of final stage: List(ShuffleMapStage 0)

17/11/21 15:00:28 INFO DAGScheduler: Missing parents: List(ShuffleMapStage 0)

17/11/21 15:00:28 INFO DAGScheduler: Submitting ShuffleMapStage 0 (PairwiseRDD[2] at distinct at E:/pythonworkspace/pythontest001/Test001/test002.py:5), which has no missing parents

17/11/21 15:00:28 INFO MemoryStore: Block broadcast_0 stored as values in memory (estimated size 6.6 KB, free 6.6 KB)

17/11/21 15:00:28 INFO MemoryStore: Block broadcast_0_piece0 stored as bytes in memory (estimated size 4.3 KB, free 11.0 KB)

17/11/21 15:00:28 INFO BlockManagerInfo: Added broadcast_0_piece0 in memory on localhost:60691 (size: 4.3 KB, free: 511.1 MB)

17/11/21 15:00:28 INFO SparkContext: Created broadcast 0 from broadcast at DAGScheduler.scala:1006

17/11/21 15:00:28 INFO DAGScheduler: Submitting 1 missing tasks from ShuffleMapStage 0 (PairwiseRDD[2] at distinct at E:/pythonworkspace/pythontest001/Test001/test002.py:5)

17/11/21 15:00:28 INFO TaskSchedulerImpl: Adding task set 0.0 with 1 tasks

17/11/21 15:00:28 INFO TaskSetManager: Starting task 0.0 in stage 0.0 (TID 0, localhost, partition 0,PROCESS_LOCAL, 2099 bytes)

17/11/21 15:00:28 INFO Executor: Running task 0.0 in stage 0.0 (TID 0)

17/11/21 15:00:30 INFO PythonRunner: Times: total = 1240, boot = 1221, init = 19, finish = 0

17/11/21 15:00:30 INFO Executor: Finished task 0.0 in stage 0.0 (TID 0). 1222 bytes result sent to driver

17/11/21 15:00:30 INFO TaskSetManager: Finished task 0.0 in stage 0.0 (TID 0) in 1433 ms on localhost (1/1)

17/11/21 15:00:30 INFO TaskSchedulerImpl: Removed TaskSet 0.0, whose tasks have all completed, from pool

17/11/21 15:00:30 INFO DAGScheduler: ShuffleMapStage 0 (distinct at E:/pythonworkspace/pythontest001/Test001/test002.py:5) finished in 1.465 s

17/11/21 15:00:30 INFO DAGScheduler: looking for newly runnable stages

17/11/21 15:00:30 INFO DAGScheduler: running: Set()

17/11/21 15:00:30 INFO DAGScheduler: waiting: Set(ResultStage 1)

17/11/21 15:00:30 INFO DAGScheduler: failed: Set()

17/11/21 15:00:30 INFO DAGScheduler: Submitting ResultStage 1 (PythonRDD[5] at collect at E:/pythonworkspace/pythontest001/Test001/test002.py:5), which has no missing parents

17/11/21 15:00:30 INFO MemoryStore: Block broadcast_1 stored as values in memory (estimated size 5.5 KB, free 16.5 KB)

17/11/21 15:00:30 INFO MemoryStore: Block broadcast_1_piece0 stored as bytes in memory (estimated size 3.4 KB, free 19.8 KB)

17/11/21 15:00:30 INFO BlockManagerInfo: Added broadcast_1_piece0 in memory on localhost:60691 (size: 3.4 KB, free: 511.1 MB)

17/11/21 15:00:30 INFO SparkContext: Created broadcast 1 from broadcast at DAGScheduler.scala:1006

17/11/21 15:00:30 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 1 (PythonRDD[5] at collect at E:/pythonworkspace/pythontest001/Test001/test002.py:5)

17/11/21 15:00:30 INFO TaskSchedulerImpl: Adding task set 1.0 with 1 tasks

17/11/21 15:00:30 INFO TaskSetManager: Starting task 0.0 in stage 1.0 (TID 1, localhost, partition 0,NODE_LOCAL, 1894 bytes)

17/11/21 15:00:30 INFO Executor: Running task 0.0 in stage 1.0 (TID 1)

17/11/21 15:00:30 INFO ShuffleBlockFetcherIterator: Getting 1 non-empty blocks out of 1 blocks

17/11/21 15:00:30 INFO ShuffleBlockFetcherIterator: Started 0 remote fetches in 9 ms

17/11/21 15:00:31 INFO PythonRunner: Times: total = 1289, boot = 1280, init = 9, finish = 0

17/11/21 15:00:31 INFO Executor: Finished task 0.0 in stage 1.0 (TID 1). 1290 bytes result sent to driver

17/11/21 15:00:31 INFO DAGScheduler: ResultStage 1 (collect at E:/pythonworkspace/pythontest001/Test001/test002.py:5) finished in 1.377 s

17/11/21 15:00:31 INFO TaskSetManager: Finished task 0.0 in stage 1.0 (TID 1) in 1375 ms on localhost (1/1)

17/11/21 15:00:31 INFO TaskSchedulerImpl: Removed TaskSet 1.0, whose tasks have all completed, from pool

17/11/21 15:00:31 INFO DAGScheduler: Job 0 finished: collect at E:/pythonworkspace/pythontest001/Test001/test002.py:5, took 3.307445 s

17/11/21 15:00:31 INFO MemoryStore: Block broadcast_2 stored as values in memory (estimated size 352.0 B, free 20.2 KB)

17/11/21 15:00:31 INFO MemoryStore: Block broadcast_2_piece0 stored as bytes in memory (estimated size 115.0 B, free 20.3 KB)

17/11/21 15:00:31 INFO BlockManagerInfo: Added broadcast_2_piece0 in memory on localhost:60691 (size: 115.0 B, free: 511.1 MB)

17/11/21 15:00:31 INFO SparkContext: Created broadcast 2 from broadcast at PythonRDD.scala:430

17/11/21 15:00:31 INFO SparkContext: Starting job: collect at E:/pythonworkspace/pythontest001/Test001/test002.py:18

17/11/21 15:00:31 INFO DAGScheduler: Got job 1 (collect at E:/pythonworkspace/pythontest001/Test001/test002.py:18) with 1 output partitions

17/11/21 15:00:31 INFO DAGScheduler: Final stage: ResultStage 2 (collect at E:/pythonworkspace/pythontest001/Test001/test002.py:18)

17/11/21 15:00:31 INFO DAGScheduler: Parents of final stage: List()

17/11/21 15:00:31 INFO DAGScheduler: Missing parents: List()

17/11/21 15:00:31 INFO DAGScheduler: Submitting ResultStage 2 (PythonRDD[6] at collect at E:/pythonworkspace/pythontest001/Test001/test002.py:18), which has no missing parents

17/11/21 15:00:31 INFO MemoryStore: Block broadcast_3 stored as values in memory (estimated size 4.3 KB, free 24.5 KB)

17/11/21 15:00:31 INFO MemoryStore: Block broadcast_3_piece0 stored as bytes in memory (estimated size 2.8 KB, free 27.3 KB)

17/11/21 15:00:31 INFO BlockManagerInfo: Added broadcast_3_piece0 in memory on localhost:60691 (size: 2.8 KB, free: 511.1 MB)

17/11/21 15:00:31 INFO SparkContext: Created broadcast 3 from broadcast at DAGScheduler.scala:1006

17/11/21 15:00:31 INFO DAGScheduler: Submitting 1 missing tasks from ResultStage 2 (PythonRDD[6] at collect at E:/pythonworkspace/pythontest001/Test001/test002.py:18)

17/11/21 15:00:31 INFO TaskSchedulerImpl: Adding task set 2.0 with 1 tasks

17/11/21 15:00:31 INFO TaskSetManager: Starting task 0.0 in stage 2.0 (TID 2, localhost, partition 0,PROCESS_LOCAL, 2110 bytes)

17/11/21 15:00:31 INFO Executor: Running task 0.0 in stage 2.0 (TID 2)

17/11/21 15:00:33 INFO PythonRunner: Times: total = 1199, boot = 1195, init = 3, finish = 1

17/11/21 15:00:33 INFO Executor: Finished task 0.0 in stage 2.0 (TID 2). 1040 bytes result sent to driver

17/11/21 15:00:33 INFO TaskSetManager: Finished task 0.0 in stage 2.0 (TID 2) in 1235 ms on localhost (1/1)

17/11/21 15:00:33 INFO TaskSchedulerImpl: Removed TaskSet 2.0, whose tasks have all completed, from pool

17/11/21 15:00:33 INFO DAGScheduler: ResultStage 2 (collect at E:/pythonworkspace/pythontest001/Test001/test002.py:18) finished in 1.237 s

17/11/21 15:00:33 INFO DAGScheduler: Job 1 finished: collect at E:/pythonworkspace/pythontest001/Test001/test002.py:18, took 1.267822 s

[{0: 1, 1: 1, 2: 1}, {2: 1, 3: 2}]

successful!

17/11/21 15:00:33 INFO SparkContext: Invoking stop() from shutdown hook

Process finished with exit code 0