First, create a simple Java project.

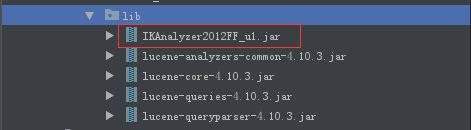

Create a `lib` directory and add the jar files to it.

IKAnalyzer is a third-party Chinese word segmenter (analyzer).

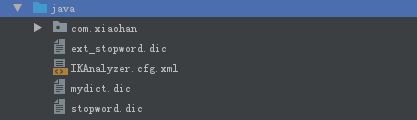

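To use the IK analyzer you also need to put a few files in the root directory (the root of the classpath).

`IKAnalyzer.cfg.xml`:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE properties SYSTEM "http://java.sun.com/dtd/properties.dtd">
<properties>
    <comment>IK Analyzer extension configuration</comment>
    <!-- extension dictionary -->
    <entry key="ext_dict">mydict.dic;</entry>
    <!-- extension stopword dictionary -->
    <entry key="ext_stopwords">stopword.dic;</entry>
</properties>
```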
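A minimal sketch of how such a file can be read, assuming `IKAnalyzer.cfg.xml` follows the usual IK layout: a `java.util.Properties` XML file whose `ext_dict` and `ext_stopwords` entries hold `;`-separated dictionary file names. It uses only the JDK's `Properties.loadFromXML`; `IkConfigDemo` and `dictPaths` are hypothetical names, not IK's own loader.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Properties;

class IkConfigDemo {
    // Assumed content of IKAnalyzer.cfg.xml (Properties XML with ext_dict / ext_stopwords keys)
    static final String CONFIG_XML =
        "<?xml version=\"1.0\" encoding=\"UTF-8\"?>\n"
        + "<!DOCTYPE properties SYSTEM \"http://java.sun.com/dtd/properties.dtd\">\n"
        + "<properties>\n"
        + "  <comment>IK Analyzer extension configuration</comment>\n"
        + "  <entry key=\"ext_dict\">mydict.dic;</entry>\n"
        + "  <entry key=\"ext_stopwords\">stopword.dic;</entry>\n"
        + "</properties>\n";

    // Parse the XML with the JDK's built-in Properties XML loader
    static Properties load(String xml) {
        Properties props = new Properties();
        try {
            props.loadFromXML(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    // Split a ';'-separated value such as "mydict.dic;" into file names, skipping empty parts
    static List<String> dictPaths(Properties props, String key) {
        List<String> paths = new ArrayList<>();
        for (String part : props.getProperty(key, "").split(";")) {
            String trimmed = part.trim();
            if (!trimmed.isEmpty()) {
                paths.add(trimmed);
            }
        }
        return paths;
    }

    public static void main(String[] args) {
        Properties props = load(CONFIG_XML);
        System.out.println(dictPaths(props, "ext_dict"));      // [mydict.dic]
        System.out.println(dictPaths(props, "ext_stopwords")); // [stopword.dic]
    }
}
```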

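`mydict.dic` (custom extension dictionary, one word per line: 不是坏人 "not a bad guy", 奥巴马 Obama, 普京 Putin, 斯诺登 Snowden):

```
不是坏人
奥巴马
普京
斯诺登
```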
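Why a custom dictionary matters: a dictionary-based segmenter only keeps a word like 奥巴马 together if the dictionary contains it. IK's real algorithm is more elaborate; the sketch below swaps in plain forward maximum matching, a simpler well-known dictionary technique, just to show the effect. `ForwardMaxMatch` and the tiny dictionaries are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class ForwardMaxMatch {
    // Repeatedly take the longest dictionary word starting at the current position.
    // Characters not covered by any dictionary word become single-character tokens.
    // Works on UTF-16 chars, which is fine for common CJK characters.
    static List<String> segment(String text, Set<String> dict, int maxWordLen) {
        List<String> tokens = new ArrayList<>();
        int pos = 0;
        while (pos < text.length()) {
            int end = Math.min(text.length(), pos + maxWordLen);
            String match = null;
            // Try the longest candidate first, shrinking one character at a time
            for (int e = end; e > pos; e--) {
                String candidate = text.substring(pos, e);
                if (dict.contains(candidate)) {
                    match = candidate;
                    break;
                }
            }
            if (match == null) {
                match = text.substring(pos, pos + 1); // fall back to a single character
            }
            tokens.add(match);
            pos += match.length();
        }
        return tokens;
    }

    public static void main(String[] args) {
        Set<String> base = new HashSet<>(Arrays.asList("普京"));
        Set<String> withCustom = new HashSet<>(base);
        withCustom.add("奥巴马"); // entry taken from mydict.dic
        String text = "奥巴马和普京";
        System.out.println(segment(text, base, 4));       // [奥, 巴, 马, 和, 普京]
        System.out.println(segment(text, withCustom, 4)); // [奥巴马, 和, 普京]
    }
}
```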

`stopword.dic` (stopwords, one word per line):

```
a
an
and
are
as
```
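Stopwords are dropped from the token stream before indexing, so they can never be matched by a query. A minimal sketch of that filtering step, assuming the tokens have already been produced; comparing case-insensitively is an assumption of this sketch, and `StopwordFilterDemo` is a hypothetical name, not an IK class.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.List;
import java.util.Locale;
import java.util.Set;

class StopwordFilterDemo {
    // Same words as stopword.dic
    static final Set<String> STOPWORDS =
        new HashSet<>(Arrays.asList("a", "an", "and", "are", "as"));

    // Keep only tokens that are not stopwords (compared case-insensitively)
    static List<String> filter(List<String> tokens) {
        List<String> kept = new ArrayList<>();
        for (String token : tokens) {
            if (!STOPWORDS.contains(token.toLowerCase(Locale.ROOT))) {
                kept.add(token);
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        List<String> tokens = Arrays.asList("Lucene", "and", "IK", "are", "tools");
        System.out.println(filter(tokens)); // [Lucene, IK, tools]
    }
}
```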

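Create a directory to hold the index.

The basic environment is now set up.

Create a new class and start with a few shared static helper methods: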

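```java
import java.io.File;
import java.io.IOException;

import org.apache.lucene.analysis.Analyzer;
import org.apache.lucene.index.IndexWriter;
import org.apache.lucene.index.IndexWriterConfig;
import org.apache.lucene.store.FSDirectory;
import org.apache.lucene.util.Version;
import org.wltea.analyzer.lucene.IKAnalyzer;

// Index directory
public static String indexDir = "D:\\software\\idea-workspace\\lucene\\src\\main\\resources\\index_dir";

// Create the analyzer (IK Chinese segmenter)
private static Analyzer getAnalyzer() {
    return new IKAnalyzer();
}

// Open the on-disk index directory
private static FSDirectory getFSDirectory() throws IOException {
    FSDirectory directory = FSDirectory.open(new File(indexDir));
    return directory;
}

// Create an IndexWriter that analyzes text with the IK analyzer
private static IndexWriter getIndexWriter() throws IOException {
    IndexWriterConfig conf = new IndexWriterConfig(Version.LATEST, getAnalyzer());
    IndexWriter writer = new IndexWriter(getFSDirectory(), conf);
    return writer;
}
```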

Next, some simple create, read, update and delete operations.

Creating an index:

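```java
import org.apache.lucene.document.Document;
import org.apache.lucene.document.Field;
import org.apache.lucene.document.IntField;
import org.apache.lucene.document.StoredField;
import org.apache.lucene.document.StringField;
import org.apache.lucene.document.TextField;

public static void createIndex() throws IOException {
    IndexWriter writer = getIndexWriter();
    try {
        Document doc = new Document();
        doc.add(new StringField("id", "1", Field.Store.YES));              // not tokenized, indexed, stored
        doc.add(new TextField("name", "张三", Field.Store.YES));            // tokenized, indexed, stored
        doc.add(new IntField("age", 23, Field.Store.YES));                 // not tokenized, indexed (numeric), stored
        doc.add(new StoredField("icon", "http://icon/****"));              // not tokenized, not indexed, stored
        doc.add(new TextField("introduction", "个人介绍", Field.Store.NO)); // tokenized, indexed, not stored
        writer.addDocument(doc);
    } finally {
        writer.close(); // close() also commits the added document
    }
}
```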

```java
// Call it from main
public static void main(String[] args) throws IOException {
    createIndex();
}
```

After running it, the index directory contains a number of index files.
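The "tokenized / not tokenized" comments in createIndex decide which terms a field puts into the index, and therefore what an exact-term query can hit. A toy model with plain collections, not how Lucene stores data: `FieldIndexingDemo` is hypothetical, and a lowercase-and-split-on-whitespace rule stands in for a real analyzer such as IKAnalyzer.

```java
import java.util.HashSet;
import java.util.Locale;
import java.util.Set;

class FieldIndexingDemo {
    // Terms a field contributes to the index.
    // tokenized = false: the whole value is a single term (like StringField)
    // tokenized = true: split on whitespace and lowercase (stand-in analyzer, like TextField)
    static Set<String> indexedTerms(String value, boolean tokenized) {
        Set<String> terms = new HashSet<>();
        if (!tokenized) {
            terms.add(value);
        } else {
            for (String token : value.split("\\s+")) {
                if (!token.isEmpty()) {
                    terms.add(token.toLowerCase(Locale.ROOT));
                }
            }
        }
        return terms;
    }

    // Exact term lookup, as a TermQuery does: the query term itself is not analyzed
    static boolean termMatches(String value, boolean tokenized, String queryTerm) {
        return indexedTerms(value, tokenized).contains(queryTerm);
    }

    public static void main(String[] args) {
        String city = "New York";
        System.out.println(termMatches(city, false, "New York")); // true
        System.out.println(termMatches(city, false, "york"));     // false
        System.out.println(termMatches(city, true, "york"));      // true
        System.out.println(termMatches(city, true, "New York"));  // false
    }
}
```

This is why a TermQuery on the `id` StringField with the exact value `"1"` finds the document.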

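Querying documents with a term query:

```java
import org.apache.lucene.index.DirectoryReader;
import org.apache.lucene.index.IndexReader;
import org.apache.lucene.index.Term;
import org.apache.lucene.search.IndexSearcher;
import org.apache.lucene.search.Query;
import org.apache.lucene.search.ScoreDoc;
import org.apache.lucene.search.TermQuery;
import org.apache.lucene.search.TopDocs;

// Find documents whose "id" field contains exactly the given term
public static void query(String id) throws IOException {
    IndexReader reader = DirectoryReader.open(getFSDirectory());
    try {
        IndexSearcher searcher = new IndexSearcher(reader);
        Query query = new TermQuery(new Term("id", id));
        // 10 = return at most the top 10 hits
        TopDocs topDocs = searcher.search(query, 10);
        System.out.println(">>>总记录数" + topDocs.totalHits); // 总记录数 = "total hits"
        ScoreDoc[] docs = topDocs.scoreDocs;
        for (ScoreDoc scoreDoc : docs) {
            int docId = scoreDoc.doc;           // Lucene's internal doc id, unrelated to the stored "id" field
            Document doc = searcher.doc(docId); // load the document's stored fields
            System.out.println(doc.get("id"));
            System.out.println(doc.get("name"));
            System.out.println(doc.get("age"));
            System.out.println(doc.get("icon"));
            System.out.println(doc.get("introduction"));
        }
    } finally {
        reader.close();
    }
}
```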
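`searcher.search(query, 10)` returns at most 10 `ScoreDoc`s, while `totalHits` counts every matching document. A toy illustration of that split: `TopNDemo`, `Hit` and the scores are made up, and it sorts the full list for simplicity where Lucene keeps a bounded priority queue of the best hits.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

class TopNDemo {
    // A matching document: internal doc id plus its relevance score
    static final class Hit {
        final int doc;
        final float score;
        Hit(int doc, float score) { this.doc = doc; this.score = score; }
    }

    // Mirrors the two parts of TopDocs used in query()
    static final class Result {
        final int totalHits;     // number of documents that matched
        final List<Hit> topHits; // at most n best-scoring hits
        Result(int totalHits, List<Hit> topHits) { this.totalHits = totalHits; this.topHits = topHits; }
    }

    static Result search(List<Hit> matches, int n) {
        List<Hit> sorted = new ArrayList<>(matches);
        // Highest score first; ties broken by smaller doc id
        sorted.sort(Comparator.comparingDouble((Hit h) -> h.score).reversed()
                .thenComparingInt(h -> h.doc));
        List<Hit> top = new ArrayList<>(sorted.subList(0, Math.min(n, sorted.size())));
        return new Result(matches.size(), top);
    }

    public static void main(String[] args) {
        List<Hit> matches = new ArrayList<>();
        for (int doc = 0; doc < 25; doc++) {
            matches.add(new Hit(doc, 1.0f / (doc + 1))); // made-up scores
        }
        Result r = search(matches, 10);
        System.out.println(r.totalHits);      // 25
        System.out.println(r.topHits.size()); // 10
    }
}
```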

Run `main`:

```java
public static void main(String[] args) throws IOException {
    // createIndex();
    query("1");
}
```

The output is below. `introduction` prints `null` because that Field was created with `Field.Store.NO`, i.e. not stored:

```
>>>总记录数1
1
张三
23
http://icon/****
null
```
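"Indexed" and "stored" are independent switches: a `Field.Store.NO` field can still be searched, but its original value is not kept, so there is nothing for `doc.get` to return. A toy model, assuming a HashMap plays the role of stored fields and a HashSet of `field:term` strings plays the index; tokenization is ignored and `StoredVsIndexedDemo` is hypothetical.

```java
import java.util.HashMap;
import java.util.HashSet;
import java.util.Map;
import java.util.Set;

class StoredVsIndexedDemo {
    final Map<String, String> storedFields = new HashMap<>(); // what doc.get(...) can return
    final Set<String> indexedTerms = new HashSet<>();          // what a query can match ("field:term")

    // Add one field; indexed and stored are decided separately, as with Field.Store
    void addField(String name, String value, boolean indexed, boolean stored) {
        if (indexed) {
            indexedTerms.add(name + ":" + value);
        }
        if (stored) {
            storedFields.put(name, value);
        }
    }

    // Like Document.get: null for fields that were not stored
    String get(String name) {
        return storedFields.get(name);
    }

    boolean matches(String name, String term) {
        return indexedTerms.contains(name + ":" + term);
    }

    public static void main(String[] args) {
        StoredVsIndexedDemo doc = new StoredVsIndexedDemo();
        doc.addField("id", "1", true, true);                       // StringField, Store.YES
        doc.addField("icon", "http://icon/****", false, true);     // StoredField
        doc.addField("introduction", "个人介绍", true, false);      // TextField, Store.NO
        System.out.println(doc.matches("introduction", "个人介绍")); // true: searchable
        System.out.println(doc.get("introduction"));               // null: not stored
        System.out.println(doc.get("icon"));                       // http://icon/****
    }
}
```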

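Deleting by term

This is really deletion by a query condition: every document that contains the given term is deleted.

```java
public static void delete(String id) throws IOException {
    IndexWriter indexWriter = getIndexWriter();
    try {
        indexWriter.deleteDocuments(new Term("id", id));
        // indexWriter.deleteAll(); // deletes all documents
    } finally {
        indexWriter.close(); // the deletion is only committed when the writer is closed
    }
}
```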
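Two points from the delete code in one toy model: `deleteDocuments(Term)` removes every document containing that term, and a reader opened from the directory (as `query` does) only sees the deletion after the writer commits, which `close()` does. `BufferedDeleteDemo` is hypothetical; real Lucene marks documents as deleted and reclaims the space later during segment merges.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class BufferedDeleteDemo {
    // Committed documents: internal doc id -> value of the "id" field
    final Map<Integer, String> committed = new LinkedHashMap<>();
    // Delete-by-term requests waiting for commit
    final List<String> pendingDeletes = new ArrayList<>();
    int nextDocId = 0;

    void addAndCommit(String idValue) {
        committed.put(nextDocId++, idValue);
    }

    // Like deleteDocuments(new Term("id", value)): only buffered here
    void deleteByTerm(String idValue) {
        pendingDeletes.add(idValue);
    }

    // Like commit()/close(): apply buffered deletes to every matching document
    void commit() {
        for (String idValue : pendingDeletes) {
            committed.values().removeIf(v -> v.equals(idValue));
        }
        pendingDeletes.clear();
    }

    // What a newly opened reader would count for this id
    int count(String idValue) {
        int n = 0;
        for (String v : committed.values()) {
            if (v.equals(idValue)) n++;
        }
        return n;
    }

    public static void main(String[] args) {
        BufferedDeleteDemo w = new BufferedDeleteDemo();
        w.addAndCommit("1");
        w.addAndCommit("1"); // a second document with the same id value
        w.addAndCommit("2");
        w.deleteByTerm("1");
        System.out.println(w.count("1")); // 2: not committed yet
        w.commit();
        System.out.println(w.count("1")); // 0: both matching documents are gone
        System.out.println(w.count("2")); // 1
    }
}
```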

`main`:

```java
public static void main(String[] args) throws IOException {
    // createIndex();
    delete("1");
    query("1");
}
```

Updating the index:

```java
public static void updateIndex(String id, String name) throws IOException {
    IndexWriter writer = getIndexWriter();
    try {
        Document doc = new Document();
        doc.add(new StringField("id", id, Field.Store.YES));   // not tokenized, indexed, stored
        doc.add(new TextField("name", name, Field.Store.YES)); // tokenized, indexed, stored
        // Replace every document whose "id" field contains this term with the new document
        writer.updateDocument(new Term("id", id), doc);
    } finally {
        writer.close();
    }
}
```

`main`:

```java
public static void main(String[] args) throws IOException {
    createIndex();
    // delete("1");
    query("1");
    updateIndex("1", "李四");
    query("1");
}
```

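As the output shows, an update is really a delete followed by an add: the new document only contains `id` and `name`, so `age`, `icon` and `introduction` are all `null` afterwards.

```
>>>总记录数1
1
张三
23
http://icon/****
null
>>>总记录数1
1
李四
null
null
null
```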
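A toy sketch of why the other fields vanish: `updateDocument(term, doc)` behaves like deleting every document containing the term and then adding the new document (Lucene makes the pair atomic for readers). The old fields are not merged in, and the new document gets a new internal doc id, which is another reason `scoreDoc.doc` should not be treated as a stable key. `UpdateDemo` is hypothetical and documents are plain maps.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;

class UpdateDemo {
    // internal doc id -> stored fields of that document
    final Map<Integer, Map<String, String>> docs = new LinkedHashMap<>();
    int nextDocId = 0;

    int add(Map<String, String> fields) {
        int docId = nextDocId++;
        docs.put(docId, new HashMap<>(fields));
        return docId;
    }

    // Like updateDocument(new Term(field, value), newDoc): delete every match, then add
    int update(String field, String value, Map<String, String> newDoc) {
        docs.values().removeIf(d -> value.equals(d.get(field)));
        return add(newDoc);
    }

    public static void main(String[] args) {
        UpdateDemo index = new UpdateDemo();
        Map<String, String> original = new HashMap<>();
        original.put("id", "1");
        original.put("name", "张三");
        original.put("age", "23");
        int oldDocId = index.add(original);

        Map<String, String> replacement = new HashMap<>();
        replacement.put("id", "1");
        replacement.put("name", "李四");
        int newDocId = index.update("id", "1", replacement);

        Map<String, String> current = index.docs.get(newDocId);
        System.out.println(current.get("name"));          // 李四
        System.out.println(current.get("age"));           // null: not carried over
        System.out.println(oldDocId + " -> " + newDocId); // 0 -> 1: internal doc id changed
        System.out.println(index.docs.size());            // 1
    }
}
```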

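Querying with a BooleanQuery and a custom sort. I haven't fully figured this one out yet, so I'm just writing it down for now:

```java
import java.util.ArrayList;
import java.util.List;

import org.apache.lucene.queryparser.classic.MultiFieldQueryParser;
import org.apache.lucene.queryparser.classic.ParseException;
import org.apache.lucene.search.BooleanClause;
import org.apache.lucene.search.BooleanQuery;
import org.apache.lucene.search.NumericRangeQuery;
import org.apache.lucene.search.Sort;
import org.apache.lucene.search.SortField;

public static void booleanQuery(String queryString) throws IOException {
    IndexReader reader = DirectoryReader.open(getFSDirectory());
    IndexSearcher searcher = new IndexSearcher(reader);
    try {
        BooleanQuery bq = new BooleanQuery();
        Query q;
        // queryString may match "name" OR "introduction" (both SHOULD)
        q = MultiFieldQueryParser.parse(queryString, new String[]{"name", "introduction"},
                new BooleanClause.Occur[]{BooleanClause.Occur.SHOULD,
                        BooleanClause.Occur.SHOULD}, getAnalyzer());
        bq.add(q, BooleanClause.Occur.MUST); // AND
        // age range: age >= 1 && age <= 30
        q = NumericRangeQuery.newIntRange("age", 1, 30, true, true);
        bq.add(q, BooleanClause.Occur.MUST); // AND

        Sort sort = new Sort();
        // Typed list: with a raw List, toArray(...) returns Object[] and setSort(...) does not compile
        List<SortField> sortFieldList = new ArrayList<>();
        // sort by id ascending
        sortFieldList.add(new SortField("id", SortField.Type.STRING, false));
        // then by age descending
        sortFieldList.add(new SortField("age", SortField.Type.INT, true));
        sort.setSort(sortFieldList.toArray(new SortField[sortFieldList.size()]));

        // 10 = return at most the top 10 hits
        TopDocs topDocs = searcher.search(bq, 10, sort);
        System.out.println(">>>总记录数" + topDocs.totalHits); // 总记录数 = "total hits"
        ScoreDoc[] docs = topDocs.scoreDocs;
        for (ScoreDoc scoreDoc : docs) {
            int docId = scoreDoc.doc;           // internal doc id, unrelated to the stored "id" field
            Document doc = searcher.doc(docId); // load the document's stored fields
            System.out.println(doc.get("id"));
            System.out.println(doc.get("name"));
            System.out.println(doc.get("age"));
            System.out.println(doc.get("icon"));
            System.out.println(doc.get("introduction"));
        }
    } catch (ParseException e) {
        e.printStackTrace();
    } finally {
        reader.close();
    }
}
```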
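What booleanQuery asks for, written out in plain Java: a document must match the text in `name` OR `introduction` (the two SHOULD clauses inside the first MUST clause) AND have 1 <= age <= 30; results are ordered by `id` ascending as a string, then by `age` descending. A toy sketch: `BooleanQuerySortDemo`, `Person`, the `contains`-based text match and the data are hypothetical, and relevance scoring is not modeled. Because a STRING sort compares text, `"10"` sorts before `"2"`.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

class BooleanQuerySortDemo {
    static final class Person {
        final String id;
        final String name;
        final String introduction;
        final int age;
        Person(String id, String name, String introduction, int age) {
            this.id = id; this.name = name; this.introduction = introduction; this.age = age;
        }
    }

    static List<String> search(List<Person> people, String text, int minAge, int maxAge) {
        List<Person> hits = new ArrayList<>();
        for (Person p : people) {
            // MUST #1: text matches name OR introduction (two SHOULD clauses)
            boolean textMatch = p.name.contains(text) || p.introduction.contains(text);
            // MUST #2: inclusive range, like newIntRange("age", 1, 30, true, true)
            boolean ageMatch = p.age >= minAge && p.age <= maxAge;
            if (textMatch && ageMatch) {
                hits.add(p);
            }
        }
        // id ascending as a string (Type.STRING, reverse=false), then age descending (Type.INT, reverse=true)
        hits.sort(Comparator.comparing((Person p) -> p.id)
                .thenComparing(Comparator.comparingInt((Person p) -> p.age).reversed()));
        List<String> result = new ArrayList<>();
        for (Person p : hits) {
            result.add(p.id + "/" + p.age);
        }
        return result;
    }

    public static void main(String[] args) {
        List<Person> people = new ArrayList<>();
        people.add(new Person("2", "张三", "Java developer", 23));
        people.add(new Person("10", "李四", "colleague of 张三", 30));
        people.add(new Person("3", "张三", "", 45)); // outside the age range
        people.add(new Person("2", "王五", "knows 张三", 28));
        System.out.println(search(people, "张三", 1, 30)); // [10/30, 2/28, 2/23]
    }
}
```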

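Recording custom scoring here as well:

```java
import org.apache.lucene.index.AtomicReaderContext;
import org.apache.lucene.queries.CustomScoreProvider;
import org.apache.lucene.queries.CustomScoreQuery;
import org.apache.lucene.search.FieldCache;

/**
 * Custom scoring implementation.
 * Declared static so it can be created from the static methods above.
 */
private static class MyScoreProvider extends CustomScoreProvider {
    private AtomicReaderContext context;

    public MyScoreProvider(AtomicReaderContext context) {
        super(context);
        this.context = context;
    }

    /**
     * Override the scoring method.
     */
    @Override
    public float customScore(int doc, float subQueryScore, float valSrcScore) throws IOException {
        // Load the indexed "age" values of this segment from the FieldCache
        FieldCache.Ints ageInts = FieldCache.DEFAULT.getInts(context.reader(), "age", false);
        int age = ageInts.get(doc);
        // Field values like age can be used to score documents, which in turn controls the ranking.
        // doc is Lucene's internal doc id (relative to the current segment).
        float score = subQueryScore * valSrcScore; // score
        // apply weighting here
        // ....
        /*
         * This is where you decide how to combine with the original score:
         * add, subtract, multiply or divide, whatever you need.
         */
        return score;
    }
}

/**
 * Override CustomScoreQuery.getCustomScoreProvider
 * so that it uses the custom provider.
 */
private static class MyCustomScoreQuery extends CustomScoreQuery {
    public MyCustomScoreQuery(Query subQuery) {
        super(subQuery);
    }

    @Override
    protected CustomScoreProvider getCustomScoreProvider(AtomicReaderContext context) throws IOException {
        /** register the custom scoring implementation **/
        return new MyScoreProvider(context);
    }
}
```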
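`customScore` receives the sub-query's relevance score plus a value-source score and returns whatever number should rank the document. As far as I know, for Lucene 4.x a CustomScoreQuery built without a value-source query (like MyCustomScoreQuery) passes `valSrcScore` as 1. The sketch models one possible combination in plain Java; the 1.5x boost for age <= 30 is a made-up rule, not something the code above does, and `CustomScoreDemo` is hypothetical.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

class CustomScoreDemo {
    static final class ScoredDoc {
        final int doc;
        final float score;
        ScoredDoc(int doc, float score) { this.doc = doc; this.score = score; }
        @Override public String toString() { return "doc " + doc + " score " + score; }
    }

    // Same combination as MyScoreProvider: subQueryScore * valSrcScore
    static float defaultScore(float subQueryScore, float valSrcScore) {
        return subQueryScore * valSrcScore;
    }

    // Hypothetical weighting rule: documents with age <= 30 get a 1.5x boost
    static float customScore(float subQueryScore, float valSrcScore, int age) {
        float score = defaultScore(subQueryScore, valSrcScore);
        return age <= 30 ? score * 1.5f : score;
    }

    // Rank docs by custom score, highest first (valSrcScore taken as 1)
    static List<ScoredDoc> rank(float[] subQueryScores, int[] ages) {
        List<ScoredDoc> ranked = new ArrayList<>();
        for (int doc = 0; doc < subQueryScores.length; doc++) {
            ranked.add(new ScoredDoc(doc, customScore(subQueryScores[doc], 1.0f, ages[doc])));
        }
        ranked.sort(Comparator.comparingDouble((ScoredDoc d) -> d.score).reversed());
        return ranked;
    }

    public static void main(String[] args) {
        // doc 0: better text match but age 45; doc 1: weaker match but age 23
        System.out.println(rank(new float[] {2.0f, 1.5f}, new int[] {45, 23}));
        // [doc 1 score 2.25, doc 0 score 2.0]
    }
}
```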

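Then change the search call as below. Don't pass a `sort` argument here: an explicit Sort takes priority over the score, so the custom scoring would have no effect on the order.

```java
TopDocs docs = searcher.search(new MyCustomScoreQuery(query), 10);
```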
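A toy comparison of the two orderings: without a Sort, hits come back by score; with a Sort on a field, the field values decide the order and the (custom) score is ignored, unless the Sort itself includes the score, e.g. via `SortField.FIELD_SCORE`. `SortVsScoreDemo` and its data are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

class SortVsScoreDemo {
    static final class Hit {
        final String id;
        final float customScore;
        Hit(String id, float customScore) { this.id = id; this.customScore = customScore; }
    }

    // No Sort: order by score, highest first (like search(query, n))
    static List<String> byScore(List<Hit> hits) {
        List<Hit> copy = new ArrayList<>(hits);
        copy.sort(Comparator.comparingDouble((Hit h) -> h.customScore).reversed());
        return ids(copy);
    }

    // Sort on "id": order by the field, score ignored (like search(query, n, sort))
    static List<String> bySortField(List<Hit> hits) {
        List<Hit> copy = new ArrayList<>(hits);
        copy.sort(Comparator.comparing((Hit h) -> h.id));
        return ids(copy);
    }

    static List<String> ids(List<Hit> hits) {
        List<String> out = new ArrayList<>();
        for (Hit h : hits) {
            out.add(h.id);
        }
        return out;
    }

    public static void main(String[] args) {
        List<Hit> hits = Arrays.asList(new Hit("1", 0.5f), new Hit("2", 2.25f), new Hit("3", 1.0f));
        System.out.println(byScore(hits));     // [2, 3, 1]
        System.out.println(bySortField(hits)); // [1, 2, 3]
    }
}
```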