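# Loan Approval Prediction: Code Implementation

For a bank, the core of loan approval is risk control, so reviewing an application mainly means judging how likely the applicant is to default. Default risk is usually assessed from factors such as the applicant's income, employment stability, loan amount and repayment term. Starting from the applicant's attributes, this project examines how each variable bears on that assessment and predicts whether the bank will approve the application. It uses classic machine-learning algorithms to classify loan applicants, which can help financial institutions control credit risk more effectively.

The data comes from the Analytics Vidhya practice competition: https://datahack.analyticsvidhya.com/contest/practice-problem-loan-prediction-iii/

## Loading and previewing the data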

```python
import pandas as pd

# Load the data (adjust the path to wherever loan_records.csv is stored)
file = 'E:/python_ai/all_homework/loan-prediction-homework/loan_records.csv'
loan_df = pd.read_csv(file)

# Preview the data
print('The dataset has {} rows and {} columns'.format(loan_df.shape[0], loan_df.shape[1]))
print(loan_df.head(5))
```

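The loan data contains the following features:

- Loan_ID: sample identifier
- Gender: applicant's gender (Male / Female)
- Married: whether the applicant is married (Y/N)
- Dependents: number of dependents (the value 3+ means three or more)
- Education: education level (Graduate / Not Graduate)
- Self_Employed: whether the applicant is self-employed (Y/N)
- ApplicantIncome: applicant's income
- CoapplicantIncome: co-applicant's income
- LoanAmount: loan amount (in thousands)
- Loan_Amount_Term: loan term (in months)
- Credit_History: whether the credit history meets the bank's requirements (0/1)
- Property_Area: area of residence (Urban / Semi Urban / Rural)

Target column:

- Loan_Status: whether the loan was approved (Y/N)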

## Summary statistics

```python
# Summary statistics of the numeric columns
loan_df.describe()
```

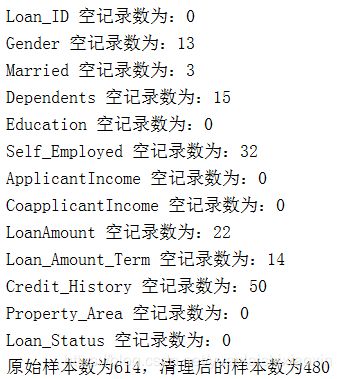

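In the `count` row, LoanAmount, Loan_Amount_Term and Credit_History have fewer non-null values than the dataset has rows. These columns therefore contain missing values that must be handled. By default, `describe()` summarizes only numeric columns, so missing values in categorical columns do not appear here. The per-column null counts in the cleaning code below cover every column.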
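This minimal sketch uses a toy frame with hypothetical values. It shows why per-column null counts are needed in addition to `describe()`: `describe()` reports only the numeric column, while `isnull().sum()` also counts the missing categorical value.

```python
import numpy as np
import pandas as pd

# Toy frame (hypothetical values): one numeric and one categorical column,
# each with a single missing entry
df = pd.DataFrame({
    "LoanAmount": [120.0, np.nan, 95.0, 150.0],
    "Gender": ["Male", "Female", None, "Male"],
})

summary = df.describe()       # numeric columns only by default
missing = df.isnull().sum()   # every column, including object columns

print(summary.loc["count"])   # LoanAmount: 3 non-null out of 4 rows
print(missing)                # LoanAmount 1, Gender 1
```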

## Data cleaning

### Duplicate records

```python
# A repeated Loan_ID indicates a duplicate sample
if loan_df['Loan_ID'].duplicated().any():
    print('The dataset contains duplicate samples')
else:
    print('The dataset contains no duplicate samples')
```
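This short illustration uses hypothetical Loan_IDs to show how `duplicated()` behaves. With the default `keep='first'`, only the second and later occurrences are flagged. `drop_duplicates(subset=...)` would remove exactly those rows if the check above found any.

```python
import pandas as pd

# Hypothetical records: LP002 appears twice
df = pd.DataFrame({"Loan_ID": ["LP001", "LP002", "LP002", "LP003"],
                   "LoanAmount": [100, 120, 120, 90]})

dup_mask = df["Loan_ID"].duplicated()            # only the later copy is True
print(df[dup_mask])

deduped = df.drop_duplicates(subset="Loan_ID")   # keeps the first occurrence
print(deduped)
```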

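```python
cols = loan_df.columns.tolist()
print(loan_df.columns)  # all column names
print(loan_df.values)   # all values as a NumPy array

# Count the missing values in each column
for col in cols:
    empty_count = loan_df[col].isnull().sum()
    print('{} missing values: {}'.format(col, empty_count))

# Drop every sample that contains at least one missing value.
# .copy() makes clean_loan_df an independent DataFrame, so later .loc
# assignments on it do not trigger SettingWithCopyWarning.
clean_loan_df = loan_df.dropna().copy()
print('Original samples: {}, samples after cleaning: {}'.format(loan_df.shape[0],
                                                               clean_loan_df.shape[0]))
```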

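### Special values

The numeric column Dependents contains the string value 3+, which is converted to 3. If clean_loan_df was not created as an explicit copy, pandas may emit a SettingWithCopyWarning at this step. The warning can safely be ignored here.

```python
# Replace '3+' with '3', then cast the column to int so it is genuinely numeric
# (read_csv loads Dependents as strings because of the '3+' entries)
clean_loan_df['Dependents'] = clean_loan_df['Dependents'].replace('3+', '3').astype(int)
```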
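Because of the `3+` entries, `read_csv` loads Dependents as strings. Replacing `3+` alone therefore still leaves a text column. This minimal sketch uses hypothetical values to replace and cast in one step:

```python
import pandas as pd

# Hypothetical Dependents values as read from the CSV: all strings, including "3+"
dependents = pd.Series(["0", "1", "3+", "2", "3+"])

# Map "3+" to "3", then cast the whole column to an integer dtype
dependents_num = dependents.replace("3+", "3").astype(int)
print(dependents_num.tolist())  # [0, 1, 3, 2, 3]
```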

### Extracting features and labels

```python
# Specify the feature columns by data type
# 1. Numeric features
num_cols = ['Dependents', 'ApplicantIncome', 'CoapplicantIncome', 'LoanAmount', 'Loan_Amount_Term']
# 2. Ordinal features
ord_cols = ['Education', 'Credit_History']
# 3. Categorical features
cat_cols = ['Gender', 'Married', 'Self_Employed', 'Property_Area']

feat_cols = num_cols + ord_cols + cat_cols

# Feature data
feat_df = clean_loan_df[feat_cols]

# Convert label Y to 1 and N to 0. map() yields an integer column; assigning
# ints into the object column with .loc would keep dtype object, which
# scikit-learn classifiers reject as an unknown label type.
labels = clean_loan_df['Loan_Status'].map({'Y': 1, 'N': 0})

# Alternative: LabelEncoder sorts the class names, so it also maps N -> 0, Y -> 1
# from sklearn.preprocessing import LabelEncoder
# labels = LabelEncoder().fit_transform(clean_loan_df['Loan_Status'])

print(clean_loan_df.head(3))
print(labels)
```
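An explicit `{'Y': 1, 'N': 0}` mapping and scikit-learn's `LabelEncoder` produce the same codes, because `LabelEncoder` sorts the class names alphabetically (N before Y). Keeping the labels in an integer dtype matters. scikit-learn treats an object-dtype column holding Python ints as an unknown target type and may refuse to fit. This small check uses hypothetical labels:

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

status = pd.Series(["Y", "N", "Y", "Y", "N"])  # hypothetical labels

mapped = status.map({"Y": 1, "N": 0})  # explicit mapping, integer dtype
enc = LabelEncoder()
encoded = enc.fit_transform(status)    # classes sorted: ['N', 'Y'] -> 0, 1

print(enc.classes_)     # ['N' 'Y']
print(mapped.tolist())  # [1, 0, 1, 1, 0]
print(encoded.tolist()) # [1, 0, 1, 1, 0]
```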

```python
from sklearn.model_selection import train_test_split

# Hold out 1/4 of the samples as the test set
X_train, X_test, y_train, y_test = train_test_split(feat_df, labels, random_state=10, test_size=1/4)
print('Training set: {} records, test set: {} records'.format(X_train.shape[0], X_test.shape[0]))
```
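The split above is purely random. With imbalanced classes, passing `stratify=labels` to `train_test_split` keeps the approval rate the same in both parts. This is an optional variation, not what the code above does. The sketch uses hypothetical 70/30 labels:

```python
import numpy as np
from sklearn.model_selection import train_test_split

# Hypothetical imbalanced labels: 70 approvals (1) and 30 rejections (0)
y = np.array([1] * 70 + [0] * 30)
X = np.arange(100).reshape(-1, 1)

# stratify=y preserves the 70/30 class ratio in both splits (up to rounding)
X_tr, X_te, y_tr, y_te = train_test_split(
    X, y, test_size=1/4, random_state=10, stratify=y)

print(y_tr.mean(), y_te.mean())  # both close to 0.7
```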


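## Feature engineering

Feature engineering converts the ordinal and categorical data into numbers. It also rescales numeric features with wide ranges into [0, 1], which makes them easier to model.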
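Min-max normalization rescales each numeric feature $x$ column by column. It uses the minimum and maximum learned from the training set:

$$
x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}
$$

The same $x_{\min}$ and $x_{\max}$ are reused unchanged when transforming the test set.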

### Ordinal features

```python
# Ordinal features: map Education to 1 (Graduate) / 0 (Not Graduate).
# Work on explicit copies so pandas does not warn about modifying a slice.
edu_map = {'Graduate': 1, 'Not Graduate': 0}
X_train = X_train.copy()
X_test = X_test.copy()
X_train['Education'] = X_train['Education'].map(edu_map)  # training set
X_test['Education'] = X_test['Education'].map(edu_map)    # test set

# Collect the processed ordinal features
train_ord_feats = X_train[ord_cols].values
test_ord_feats = X_test[ord_cols].values
```
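scikit-learn's `OrdinalEncoder` can express the same Graduate/Not Graduate mapping with an explicit category order, which avoids hand-written assignments. This sketch, on hypothetical rows, is an alternative to the code above rather than what the original uses:

```python
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

train = pd.DataFrame({"Education": ["Graduate", "Not Graduate", "Graduate"]})
test = pd.DataFrame({"Education": ["Not Graduate", "Graduate"]})

# The position in the category list becomes the code:
# "Not Graduate" -> 0, "Graduate" -> 1
enc = OrdinalEncoder(categories=[["Not Graduate", "Graduate"]])
train_codes = enc.fit_transform(train)  # fitted on the training set only
test_codes = enc.transform(test)

print(train_codes.ravel())  # [1. 0. 1.]
print(test_codes.ravel())   # [0. 1.]
```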

### Categorical features

```python
# Categorical features: one-hot encode each column
import numpy as np
from sklearn.preprocessing import OneHotEncoder

def encode_cat_feats(train_df, test_df, col_name):
    """
    One-hot encode a single categorical column.
    The encoder is fitted on the training set only and reused on the test set.
    """
    # OneHotEncoder accepts string categories directly, so no LabelEncoder step
    # is needed. handle_unknown='ignore' encodes a category unseen in training
    # as an all-zero row instead of raising an error.
    onehot_enc = OneHotEncoder(handle_unknown='ignore')
    # The encoder returns a sparse matrix by default; .toarray() makes it dense
    proc_train_cat_feat = onehot_enc.fit_transform(train_df[[col_name]]).toarray()
    proc_test_cat_feat = onehot_enc.transform(test_df[[col_name]]).toarray()
    return proc_train_cat_feat, proc_test_cat_feat

# Encode every categorical feature, then stack the results column-wise
train_parts, test_parts = [], []
for cat_col in cat_cols:
    enc_train_cat_feat, enc_test_cat_feat = encode_cat_feats(X_train, X_test, cat_col)
    train_parts.append(enc_train_cat_feat)
    test_parts.append(enc_test_cat_feat)

enc_train_cat_feats = np.hstack(train_parts)
enc_test_cat_feats = np.hstack(test_parts)
```
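This sketch on hypothetical Property_Area values shows one-hot encoding with scikit-learn's `OneHotEncoder`. With `handle_unknown='ignore'`, a test-set category that never appeared in training is encoded as an all-zero row instead of raising an error. Here that category is the made-up value "Suburb".

```python
import pandas as pd
from sklearn.preprocessing import OneHotEncoder

train = pd.DataFrame({"Property_Area": ["Urban", "Rural", "Semiurban", "Urban"]})
test = pd.DataFrame({"Property_Area": ["Rural", "Suburb"]})  # "Suburb" is hypothetical and unseen

enc = OneHotEncoder(handle_unknown="ignore")
train_oh = enc.fit_transform(train).toarray()  # sparse by default, converted to dense
test_oh = enc.transform(test).toarray()

print(enc.categories_)  # categories are sorted: Rural, Semiurban, Urban
print(test_oh)          # the unseen "Suburb" row is all zeros
```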

### Normalizing numeric features

```python
from sklearn.preprocessing import MinMaxScaler

# Rescale every numeric feature to [0, 1]. MinMaxScaler works column by column:
# it learns each column's min and max on the training set only, and the test set
# is transformed with those same training statistics.
minmax_enc = MinMaxScaler()
enc_train_num_feats = minmax_enc.fit_transform(X_train[num_cols].astype(float))
enc_test_num_feats = minmax_enc.transform(X_test[num_cols].astype(float))
```
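This sketch uses hypothetical LoanAmount values to show how `MinMaxScaler` treats the test set. The scaler reuses the training minimum and maximum, so test values outside the training range land outside [0, 1]. Passing `clip=True` (available since scikit-learn 0.24) bounds them instead.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical LoanAmount values (in thousands)
train = np.array([[100.0], [150.0], [200.0]])
test = np.array([[125.0], [250.0]])

scaler = MinMaxScaler()
train_scaled = scaler.fit_transform(train)  # learns min=100, max=200
test_scaled = scaler.transform(test)        # (x - 100) / (200 - 100)

# clip=True caps transformed values to the [0, 1] feature range
clipped = MinMaxScaler(clip=True).fit(train).transform(test)

print(train_scaled.ravel())  # [0.  0.5 1. ]
print(test_scaled.ravel())   # [0.25 1.5 ]
print(clipped.ravel())       # [0.25 1.  ]
```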

### Combining the three feature groups

```python
import numpy as np

# Concatenate ordinal, one-hot categorical and scaled numeric features column-wise
all_proc_train_feats = np.concatenate((train_ord_feats, enc_train_cat_feats, enc_train_num_feats), axis=1)
all_proc_test_feats = np.concatenate((test_ord_feats, enc_test_cat_feats, enc_test_num_feats), axis=1)

print(all_proc_test_feats[0:5])
print('Number of features after processing:', all_proc_train_feats.shape[1])
```

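The models compared are listed below, with the hyperparameter tuned for each:

- kNN: k-nearest neighbors; parameter `n_neighbors`
- LR: logistic regression; the grid search below varies `solver` (`C`, the inverse regularization strength, is the other usual choice)
- SVM: support vector machine; parameters `kernel` and `C`
- DT: decision tree; parameter `max_depth`
- Stacking: stacking ensemble of kNN, SVM and DT, with LR as the meta-classifier
- AdaBoost: AdaBoost; parameter `n_estimators`
- GBDT: gradient-boosted decision trees; parameter `learning_rate`
- RF: random forest; parameter `n_estimators`

The training code below runs only the first four models (kNN, LR, SVM, DT).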
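The list above includes four ensemble models that the training code does not run. This sketch shows how they could be plugged into the same `GridSearchCV` pattern. The parameter grids are illustrative guesses, and the data is synthetic from `make_classification` rather than the processed loan features.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import (AdaBoostClassifier, GradientBoostingClassifier,
                              RandomForestClassifier, StackingClassifier)
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for the processed loan features (NOT the real data)
X, y = make_classification(n_samples=300, n_features=10, random_state=0)

# Hypothetical grids, mirroring the parameters named in the model list
ensemble_param_dict = {
    "Stacking": (StackingClassifier(
        estimators=[("knn", KNeighborsClassifier()),
                    ("svm", SVC()),
                    ("dt", DecisionTreeClassifier(random_state=0))],
        final_estimator=LogisticRegression()),          # LR as meta-classifier
        {"final_estimator__C": [0.1, 1, 10]}),
    "AdaBoost": (AdaBoostClassifier(random_state=0), {"n_estimators": [50, 100]}),
    "GBDT": (GradientBoostingClassifier(random_state=0), {"learning_rate": [0.01, 0.1, 1]}),
    "RF": (RandomForestClassifier(random_state=0), {"n_estimators": [50, 100]}),
}

scores = {}
for name, (model, grid) in ensemble_param_dict.items():
    search = GridSearchCV(model, grid, scoring="accuracy", cv=5)
    search.fit(X, y)
    scores[name] = search.best_score_  # mean cross-validated accuracy of the best candidate
    print(name, search.best_params_, round(search.best_score_, 3))
```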

```python
import time

import pandas as pd
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

def train_test_model(X_train, y_train, X_test, y_test, model_name, model, param_range):
    """Tune a model with 5-fold cross-validated grid search and evaluate it."""
    print('Training {}...'.format(model_name))
    # Hyperparameters are selected by F1 score
    clf = GridSearchCV(model, param_range, scoring='f1', refit=True, cv=5, verbose=0)

    # Time the whole grid search (all candidates x 5 folds + final refit)
    start = time.time()
    clf.fit(X_train, y_train)
    duration = time.time() - start
    print('Training time: {:.4f}s'.format(duration))

    # clf.score() would return the F1 score used for tuning,
    # so compute accuracy explicitly
    train_score = accuracy_score(y_train, clf.predict(X_train))
    print('Training accuracy: {:.3f}%'.format(train_score * 100))
    test_score = accuracy_score(y_test, clf.predict(X_test))
    print('Test accuracy: {:.3f}%'.format(test_score * 100))
    return clf, test_score, duration

# Candidate models and their hyperparameter grids
model_name_param_dict = {
    'kNN': (KNeighborsClassifier(), {'n_neighbors': [1, 5, 15]}),
    'DT': (DecisionTreeClassifier(), {'max_depth': [10, 50, 100]}),
    # max_iter raised so the iterative solvers (sag, saga, ...) have room to converge
    'LR': (LogisticRegression(max_iter=1000),
           {'solver': ['newton-cg', 'lbfgs', 'liblinear', 'sag', 'saga']}),
    'SVC': (SVC(), {'kernel': ['linear', 'rbf'], 'C': [0.01, 0.1, 1, 10, 100]}),
}

# Train each model and collect its test accuracy and training time
rows = []
for model_name, (model, param_range) in model_name_param_dict.items():
    _, best_acc, duration = train_test_model(all_proc_train_feats, y_train,
                                             all_proc_test_feats, y_test,
                                             model_name, model, param_range)
    rows.append({'Model': model_name, 'Accuracy (%)': best_acc * 100, 'Time (s)': duration})

# DataFrame for comparing results (float columns, so it plots directly)
results_df = pd.DataFrame(rows).set_index('Model')
print(results_df)
```

```python
import matplotlib.pyplot as plt

# Side-by-side bar charts: test accuracy (left) and training time (right)
plt.figure(figsize=(10, 4))
ax1 = plt.subplot(1, 2, 1)
results_df.plot(y=['Accuracy (%)'], kind='bar', ylim=[60, 100], ax=ax1, title='Accuracy(%)', legend=False)
ax2 = plt.subplot(1, 2, 2)
results_df.plot(y=['Time (s)'], kind='bar', ax=ax2, title='Time(s)', legend=False)
plt.tight_layout()
plt.show()
```

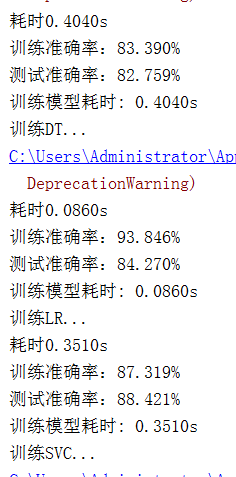

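In this comparison, logistic regression achieved the highest accuracy. SVC also reached high accuracy and had the shortest training time in this run. Keep two caveats in mind when reading the timings. First, they cover the whole grid search, and the grid size differs from model to model. Second, they come from a small dataset. Kernel SVM training time grows faster than linearly with the number of samples, so SVC is not automatically the best choice for much larger datasets.

Given data for a new applicant, the trained model can then predict whether the bank will approve the loan application.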
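To predict for a new applicant, the new row must go through exactly the same preprocessing that was fitted on the training data. One way to guarantee that is to wrap the steps in a scikit-learn `Pipeline` with a `ColumnTransformer`. This is a sketch of that approach, not the original code. The tiny training frame and the applicant are hypothetical, and only a subset of the columns is used.

```python
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import MinMaxScaler, OneHotEncoder

# Tiny hypothetical training frame; in practice use the cleaned loan data
train = pd.DataFrame({
    "ApplicantIncome": [5000, 3000, 4000, 2500, 6000, 1500],
    "LoanAmount": [120, 100, 130, 90, 200, 110],
    "Credit_History": [1.0, 0.0, 1.0, 0.0, 1.0, 0.0],
    "Property_Area": ["Urban", "Rural", "Semiurban", "Rural", "Urban", "Semiurban"],
})
labels = [1, 0, 1, 0, 1, 0]

# Each column group gets the same kind of treatment as in the article
preprocess = ColumnTransformer([
    ("num", MinMaxScaler(), ["ApplicantIncome", "LoanAmount"]),
    ("ord", "passthrough", ["Credit_History"]),
    ("cat", OneHotEncoder(handle_unknown="ignore"), ["Property_Area"]),
])
model = Pipeline([("prep", preprocess), ("clf", LogisticRegression())])
model.fit(train, labels)

# The new applicant is transformed with the statistics learned during fit()
new_applicant = pd.DataFrame([{
    "ApplicantIncome": 4500, "LoanAmount": 125,
    "Credit_History": 1.0, "Property_Area": "Urban",
}])
pred = model.predict(new_applicant)[0]
print("Approved" if pred == 1 else "Rejected")
```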