视觉slam十四讲--VO框架基础学习笔记:构建vo框架,并运行TUM数据集程序

视觉里程计学习:构建vo框架,并运行程序

- 建立config文件夹和其下的文件

- 建立include/myslam文件夹及其下的文件

- 创建data文件夹添加TUM数据集

- 建立src文件夹及其下的文件

- 建立test文件夹及其下的文件

- g2o安装编译后的cmake_modules文件夹

- 代码运行(命令行运行和kdevelop运行)

- 遇到的问题

首先是创建一个project 1文件夹,进入该文件夹后,创建bin文件夹和lib文件夹,并在当前目录下创建一个CMakeLists.txt文件,内容如下:

# 声明要求的 cmake 最低版本

cmake_minimum_required( VERSION 2.8 )

# 声明一个 cmake 工程

project( myslam )

# 添加一个可执行程序

# 语法:add_executable( 程序名 源代码文件 )

set( CMAKE_CXX_COMPILER "g++" )

set( CMAKE_BUILD_TYPE "Release" )

set( CMAKE_CXX_FLAGS "-std=c++11 -march=native -O3" )

list( APPEND CMAKE_MODULE_PATH ${PROJECT_SOURCE_DIR}/cmake_modules )

set( EXECUTABLE_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/bin )

set( LIBRARY_OUTPUT_PATH ${PROJECT_SOURCE_DIR}/lib )

############### dependencies ######################

# Eigen

include_directories( "/usr/include/eigen3" )

# OpenCV

find_package( OpenCV REQUIRED )

include_directories( ${OpenCV_INCLUDE_DIRS} )

# Sophus

find_package( Sophus REQUIRED )

include_directories( ${Sophus_INCLUDE_DIRS} )

# G2O

find_package( G2O REQUIRED )

include_directories( ${G2O_INCLUDE_DIRS} )

set( THIRD_PARTY_LIBS

${OpenCV_LIBS}

${Sophus_LIBRARIES}

g2o_core g2o_stuff g2o_types_sba

)

############### source and test ######################

include_directories( ${PROJECT_SOURCE_DIR}/include )

add_subdirectory( src )

add_subdirectory( test )

建立config文件夹和其下的文件

在project 1文件夹下创建config文件夹后,在其文件夹下创建default.yaml文件,内容如下:

%YAML:1.0

# data

# the tum dataset directory, change it to yours!

dataset_dir: /home/li/slam/myslam/project 1/data/rgbd_dataset_freiburg1_xyz

# camera intrinsics

# fr1

camera.fx: 517.3

camera.fy: 516.5

camera.cx: 325.1

camera.cy: 249.7

camera.depth_scale: 5000

# VO paras

number_of_features: 500

scale_factor: 1.2

level_pyramid: 8

match_ratio: 2.0

max_num_lost: 10

min_inliers: 10

keyframe_rotation: 0.1

keyframe_translation: 0.1

**注意:**文件前面不能有空行或注释,而dataset_dir要修改为自己的TUM数据集所在的位置。

建立include/myslam文件夹及其下的文件

在project 1文件夹下创建include/myslam文件夹,然后在其下创建各类的头文件:

创建camera.h,内容如下:

#ifndef CAMERA_H

#define CAMERA_H

#include "myslam/common_include.h"

namespace myslam

{

// Pinhole RGB-D camera model

class Camera

{

public:

typedef std::shared_ptr Ptr;

float fx_, fy_, cx_, cy_, depth_scale_; // Camera intrinsics

Camera();

Camera ( float fx, float fy, float cx, float cy, float depth_scale=0 ) :

fx_ ( fx ), fy_ ( fy ), cx_ ( cx ), cy_ ( cy ), depth_scale_ ( depth_scale )

{}

// coordinate transform: world, camera, pixel

Vector3d world2camera( const Vector3d& p_w, const SE3& T_c_w );

Vector3d camera2world( const Vector3d& p_c, const SE3& T_c_w );

Vector2d camera2pixel( const Vector3d& p_c );

Vector3d pixel2camera( const Vector2d& p_p, double depth=1 );

Vector3d pixel2world ( const Vector2d& p_p, const SE3& T_c_w, double depth=1 );

Vector2d world2pixel ( const Vector3d& p_w, const SE3& T_c_w );

};

}

#endif // CAMERA_H

创建common_include.h文件,内容如下:

#ifndef COMMON_INCLUDE_H

#define COMMON_INCLUDE_H

// define the commonly included file to avoid a long include list

// for Eigen

#include

#include

using Eigen::Vector2d;

using Eigen::Vector3d;

// for Sophus

#include

#include

using Sophus::SE3;

using Sophus::SO3;

// for cv

#include

using cv::Mat;

// std

#include

#include

#include

#include

#include

#include

#include

#include

创建config.h文件,内容为:

#ifndef CONFIG_H

#define CONFIG_H

#include "myslam/common_include.h"

namespace myslam

{

class Config

{

private:

static std::shared_ptr config_;

cv::FileStorage file_;

Config () {} // private constructor makes a singleton

public:

~Config(); // close the file when deconstructing

// set a new config file

static void setParameterFile( const std::string& filename );

// access the parameter values

template< typename T >

static T get( const std::string& key )

{

return T( Config::config_->file_[key] );

}

};

}

#endif // CONFIG_H

创建frame.h文件,内容为:

#ifndef FRAME_H

#define FRAME_H

#include "myslam/common_include.h"

#include "myslam/camera.h"

namespace myslam

{

class MapPoint;

class Frame

{

public:

typedef std::shared_ptr Ptr;

unsigned long id_; // id of this frame

double time_stamp_; // when it is recorded

SE3 T_c_w_; // transform from world to camera

Camera::Ptr camera_; // Pinhole RGB-D Camera model

Mat color_, depth_; // color and depth image

public: // data members

Frame();

Frame( long id, double time_stamp=0, SE3 T_c_w=SE3(), Camera::Ptr camera=nullptr, Mat color=Mat(),

Mat depth=Mat() );

~Frame();

// factory function

static Frame::Ptr createFrame();

// find the depth in depth map

double findDepth( const cv::KeyPoint& kp );

// Get Camera Center

Vector3d getCamCenter() const;

// check if a point is in this frame

bool isInFrame( const Vector3d& pt_world );

};

}

#endif // FRAME_H

创建map.h文件,内容如下:

#ifndef MAP_H

#define MAP_H

#include "myslam/common_include.h"

#include "myslam/frame.h"

#include "myslam/mappoint.h"

namespace myslam

{

class Map

{

public:

typedef shared_ptr创建mappoint.h文件,内容如下:

#ifndef MAPPOINT_H

#define MAPPOINT_H

namespace myslam

{

class Frame;

class MapPoint

{

public:

typedef shared_ptr Ptr;

unsigned long id_; // ID

Vector3d pos_; // Position in world

Vector3d norm_; // Normal of viewing direction

Mat descriptor_; // Descriptor for matching

int observed_times_; // being observed by feature matching algo.

int correct_times_; // being an inliner in pose estimation

MapPoint();

MapPoint( long id, Vector3d position, Vector3d norm );

// factory function

static MapPoint::Ptr createMapPoint();

};

}

#endif // MAPPOINT_H

创建visual_odometry.h文件,内容如下:

#ifndef VISUALODOMETRY_H

#define VISUALODOMETRY_H

#include "myslam/common_include.h"

#include "myslam/map.h"

#include

namespace myslam

{

class VisualOdometry

{

public:

typedef shared_ptr Ptr;

enum VOState {

INITIALIZING=-1,

OK=0,

LOST

};

VOState state_; // current VO status

Map::Ptr map_; // map with all frames and map points

Frame::Ptr ref_; // reference frame

Frame::Ptr curr_; // current frame

cv::Ptr orb_; // orb detector and computer

vector pts_3d_ref_; // 3d points in reference frame

vector keypoints_curr_; // keypoints in current frame

Mat descriptors_curr_; // descriptor in current frame

Mat descriptors_ref_; // descriptor in reference frame

vector feature_matches_;

SE3 T_c_r_estimated_; // the estimated pose of current frame

int num_inliers_; // number of inlier features in icp

int num_lost_; // number of lost times

// parameters

int num_of_features_; // number of features

double scale_factor_; // scale in image pyramid

int level_pyramid_; // number of pyramid levels

float match_ratio_; // ratio for selecting good matches

int max_num_lost_; // max number of continuous lost times

int min_inliers_; // minimum inliers

double key_frame_min_rot; // minimal rotation of two key-frames

double key_frame_min_trans; // minimal translation of two key-frames

public: // functions

VisualOdometry();

~VisualOdometry();

bool addFrame( Frame::Ptr frame ); // add a new frame

protected:

// inner operation

void extractKeyPoints();

void computeDescriptors();

void featureMatching();

void poseEstimationPnP();

void setRef3DPoints();

void addKeyFrame();

bool checkEstimatedPose();

bool checkKeyFrame();

};

}

#endif // VISUALODOMETRY_H

创建data文件夹添加TUM数据集

在project 1文件夹下创建data文件夹

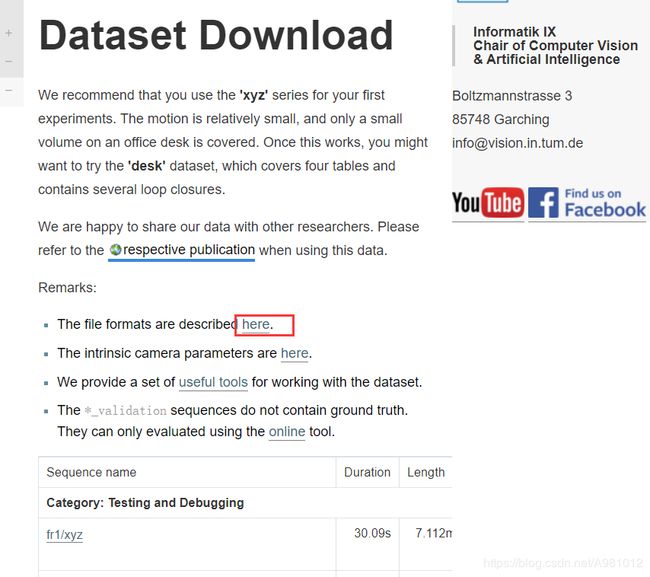

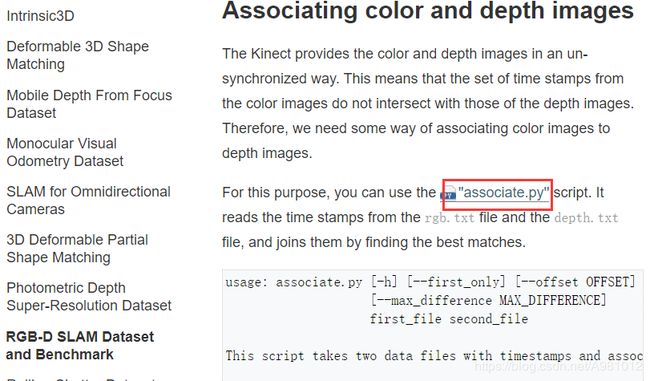

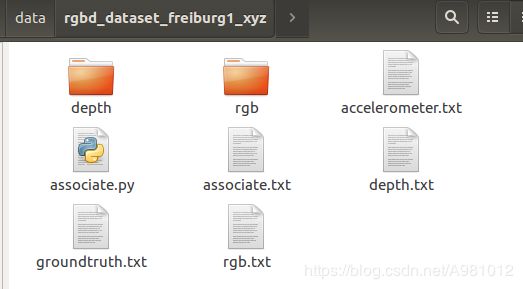

从 https://vision.in.tum.de/data/datasets/rgbd-dataset/download.下载fr1/xyz,得到一个简单的数据集,将下载的数据集放到当前创建的data文件夹里,然后在如下图所示的地方下载associate.py文件

然后进入数据集的文件夹,associate.py下载到该目录下,然后使用命令对时间

python associate.py rgb.txt depth.txt > associate.txt

命令会根据输入两个文件中的采集时间进行配对,最后输出到一个文件 associate.txt。输出文件含有被配对的两个图像的时间、文件名信息,可以作为后续处理的来源。

建立src文件夹及其下的文件

在project 1文件夹下创建src文件夹并在该文件夹下创建文件

创建camera.cpp文件,内容如下:

#include "myslam/camera.h"

#include "myslam/config.h"

namespace myslam

{

Camera::Camera()

{

fx_ = Config::get("camera.fx");

fy_ = Config::get("camera.fy");

cx_ = Config::get("camera.cx");

cy_ = Config::get("camera.cy");

depth_scale_ = Config::get("camera.depth_scale");

}

Vector3d Camera::world2camera ( const Vector3d& p_w, const SE3& T_c_w )

{

return T_c_w*p_w;

}

Vector3d Camera::camera2world ( const Vector3d& p_c, const SE3& T_c_w )

{

return T_c_w.inverse() *p_c;

}

Vector2d Camera::camera2pixel ( const Vector3d& p_c )

{

return Vector2d (

fx_ * p_c ( 0,0 ) / p_c ( 2,0 ) + cx_,

fy_ * p_c ( 1,0 ) / p_c ( 2,0 ) + cy_

);

}

Vector3d Camera::pixel2camera ( const Vector2d& p_p, double depth )

{

return Vector3d (

( p_p ( 0,0 )-cx_ ) *depth/fx_,

( p_p ( 1,0 )-cy_ ) *depth/fy_,

depth

);

}

Vector2d Camera::world2pixel ( const Vector3d& p_w, const SE3& T_c_w )

{

return camera2pixel ( world2camera ( p_w, T_c_w ) );

}

Vector3d Camera::pixel2world ( const Vector2d& p_p, const SE3& T_c_w, double depth )

{

return camera2world ( pixel2camera ( p_p, depth ), T_c_w );

}

}

创建config.cpp文件,内容如下:

#include "myslam/config.h"

namespace myslam

{

void Config::setParameterFile( const std::string& filename )

{

if ( config_ == nullptr )

config_ = shared_ptr(new Config);

config_->file_ = cv::FileStorage( filename.c_str(), cv::FileStorage::READ );

if ( config_->file_.isOpened() == false )

{

std::cerr<<"parameter file "<file_.release();

return;

}

}

Config::~Config()

{

if ( file_.isOpened() )

file_.release();

}

shared_ptr Config::config_ = nullptr;

}

创建frame.cpp文件,内容如下:

#include "myslam/frame.h"

namespace myslam

{

Frame::Frame()

: id_(-1), time_stamp_(-1), camera_(nullptr)

{

}

Frame::Frame(long id, double time_stamp, SE3 T_c_w, Camera::Ptr camera, Mat color, Mat depth)

: id_(id), time_stamp_(time_stamp), T_c_w_(T_c_w), camera_(camera), color_(color), depth_(depth)

{

}

Frame::~Frame()

{

}

// 创建 Frame

Frame::Ptr Frame::createFrame()

{

static long factory_id = 0;

return Frame::Ptr(new Frame(factory_id++));

}

// 寻找给定点对应的深度

double Frame::findDepth(const cv::KeyPoint& kp)

{

int x = cvRound(kp.pt.x);

int y = cvRound(kp.pt.y);

ushort d = depth_.ptr(y)[x];

if (d != 0)

{

return double(d) / camera_->depth_scale_;

}

else

{

// 检查附近的地点

int dx[4] = { -1,0,1,0 };

int dy[4] = { 0,-1,0,1 };

for (int i = 0; i < 4; i++)

{

d = depth_.ptr(y + dy[i])[x + dx[i]];

if (d != 0)

{

return double(d) / camera_->depth_scale_;

}

}

}

return -1.0;

}

// 获取相机光心

Vector3d Frame::getCamCenter() const

{

return T_c_w_.inverse().translation();

}

// 判断某个点是否在视野内

bool Frame::isInFrame(const Vector3d& pt_world)

{

Vector3d p_cam = camera_->world2camera(pt_world, T_c_w_);

if (p_cam(2, 0) < 0)

return false;

Vector2d pixel = camera_->world2pixel(pt_world, T_c_w_);

return pixel(0, 0) > 0 && pixel(1, 0) > 0

&& pixel(0, 0) < color_.cols

&& pixel(1, 0) < color_.rows;

}

}

创建map.cpp文件,内容如下:

#include "myslam/map.h"

namespace myslam

{

void Map::insertKeyFrame(Frame::Ptr frame)

{

cout << "Key frame size = " << keyframes_.size() << endl;

if (keyframes_.find(frame->id_) == keyframes_.end())

{

keyframes_.insert(make_pair(frame->id_, frame));

}

else

{

keyframes_[frame->id_] = frame;

}

}

void Map::insertMapPoint(MapPoint::Ptr map_point)

{

if (map_points_.find(map_point->id_) == map_points_.end())

{

map_points_.insert(make_pair(map_point->id_, map_point));

}

else

{

map_points_[map_point->id_] = map_point;

}

}

}

创建mappoint.cpp文件,内容如下:

#include "myslam/common_include.h"

#include "myslam/mappoint.h"

namespace myslam

{

MapPoint::MapPoint()

: id_(-1), pos_(Vector3d(0, 0, 0)), norm_(Vector3d(0, 0, 0)), observed_times_(0), correct_times_(0)

{

}

MapPoint::MapPoint(long id, Vector3d position, Vector3d norm)

: id_(id), pos_(position), norm_(norm), observed_times_(0), correct_times_(0)

{

}

// 建立MapPoint

MapPoint::Ptr MapPoint::createMapPoint()

{

static long factory_id = 0;

return MapPoint::Ptr(

new MapPoint(factory_id++, Vector3d(0, 0, 0), Vector3d(0, 0, 0))

);

}

}

创建visual_odometry.cpp文件,内容如下:

#include

#include

#include

#include

#include

#include "myslam/config.h"

#include "myslam/visual_odometry.h"

namespace myslam

{

VisualOdometry::VisualOdometry() :

state_ ( INITIALIZING ), ref_ ( nullptr ), curr_ ( nullptr ), map_ ( new Map ), num_lost_ ( 0 ), num_inliers_ ( 0 )

{

num_of_features_ = Config::get ( "number_of_features" );

scale_factor_ = Config::get ( "scale_factor" );

level_pyramid_ = Config::get ( "level_pyramid" );

match_ratio_ = Config::get ( "match_ratio" );

max_num_lost_ = Config::get ( "max_num_lost" );

min_inliers_ = Config::get ( "min_inliers" );

key_frame_min_rot = Config::get ( "keyframe_rotation" );

key_frame_min_trans = Config::get ( "keyframe_translation" );

orb_ = cv::ORB::create ( num_of_features_, scale_factor_, level_pyramid_ );

}

VisualOdometry::~VisualOdometry()

{

}

bool VisualOdometry::addFrame ( Frame::Ptr frame )

{

switch ( state_ )

{

case INITIALIZING:

{

state_ = OK;

curr_ = ref_ = frame;

map_->insertKeyFrame ( frame );

// extract features from first frame

extractKeyPoints();

computeDescriptors();

// compute the 3d position of features in ref frame

setRef3DPoints();

break;

}

case OK:

{

curr_ = frame;

extractKeyPoints();

computeDescriptors();

featureMatching();

poseEstimationPnP();

if ( checkEstimatedPose() == true ) // a good estimation

{

curr_->T_c_w_ = T_c_r_estimated_ * ref_->T_c_w_; // T_c_w = T_c_r*T_r_w

ref_ = curr_;

setRef3DPoints();

num_lost_ = 0;

if ( checkKeyFrame() == true ) // is a key-frame

{

addKeyFrame();

}

}

else // bad estimation due to various reasons

{

num_lost_++;

if ( num_lost_ > max_num_lost_ )

{

state_ = LOST;

}

return false;

}

break;

}

case LOST:

{

cout<<"vo has lost."<detect ( curr_->color_, keypoints_curr_ );

}

void VisualOdometry::computeDescriptors()

{

orb_->compute ( curr_->color_, keypoints_curr_, descriptors_curr_ );

}

void VisualOdometry::featureMatching()

{

// match desp_ref and desp_curr, use OpenCV's brute force match

vector matches;

cv::BFMatcher matcher ( cv::NORM_HAMMING );

matcher.match ( descriptors_ref_, descriptors_curr_, matches );

// select the best matches

float min_dis = std::min_element (

matches.begin(), matches.end(),

[] ( const cv::DMatch& m1, const cv::DMatch& m2 )

{

return m1.distance < m2.distance;

} )->distance;

feature_matches_.clear();

for ( cv::DMatch& m : matches )

{

if ( m.distance < max ( min_dis*match_ratio_, 30.0 ) )

{

feature_matches_.push_back(m);

}

}

cout<<"good matches: "<findDepth(keypoints_curr_[i]);

if ( d > 0)

{

Vector3d p_cam = ref_->camera_->pixel2camera(

Vector2d(keypoints_curr_[i].pt.x, keypoints_curr_[i].pt.y), d

);

pts_3d_ref_.push_back( cv::Point3f( p_cam(0,0), p_cam(1,0), p_cam(2,0) ));

descriptors_ref_.push_back(descriptors_curr_.row(i));

}

}

}

void VisualOdometry::poseEstimationPnP()

{

// construct the 3d 2d observations

vector pts3d;

vector pts2d;

for ( cv::DMatch m:feature_matches_ )

{

pts3d.push_back( pts_3d_ref_[m.queryIdx] );

pts2d.push_back( keypoints_curr_[m.trainIdx].pt );

}

Mat K = ( cv::Mat_(3,3)<<

ref_->camera_->fx_, 0, ref_->camera_->cx_,

0, ref_->camera_->fy_, ref_->camera_->cy_,

0,0,1

);

Mat rvec, tvec, inliers;

cv::solvePnPRansac( pts3d, pts2d, K, Mat(), rvec, tvec, false, 100, 4.0, 0.99, inliers );

num_inliers_ = inliers.rows;

cout<<"pnp inliers: "<(0,0), rvec.at(1,0), rvec.at(2,0)),

Vector3d( tvec.at(0,0), tvec.at(1,0), tvec.at(2,0))

);

}

bool VisualOdometry::checkEstimatedPose()

{

// check if the estimated pose is good

if ( num_inliers_ < min_inliers_ )

{

cout<<"reject because inlier is too small: "< 5.0 )

{

cout<<"reject because motion is too large: "<();

Vector3d rot = d.tail<3>();

if ( rot.norm() >key_frame_min_rot || trans.norm() >key_frame_min_trans )

return true;

return false;

}

void VisualOdometry::addKeyFrame()

{

cout<<"adding a key-frame"<insertKeyFrame ( curr_ );

}

}

创建CMakeLists.txt文件,内容如下:

add_library( myslam SHARED

frame.cpp

mappoint.cpp

map.cpp

camera.cpp

config.cpp

visual_odometry.cpp

)

target_link_libraries( myslam

${THIRD_PARTY_LIBS}

)

建立test文件夹及其下的文件

在project 1文件夹下创建test文件夹,在该文件夹下创建CMakeLists.txt文件,内容如下:

add_executable( run_vo run_vo.cpp )

target_link_libraries( run_vo myslam )

创建run_vo.cpp,这是一个测试的程序,内容如下:

#include

#include

#include

#include

#include

#include "myslam/config.h"

#include "myslam/visual_odometry.h"

int main ( int argc, char** argv )

{

if ( argc != 2 )

{

cout<<"usage: run_vo parameter_file"< ( "dataset_dir" );

cout<<"dataset: "< rgb_files, depth_files;

vector rgb_times, depth_times;

while ( !fin.eof() )

{

string rgb_time, rgb_file, depth_time, depth_file;

fin>>rgb_time>>rgb_file>>depth_time>>depth_file;

rgb_times.push_back ( atof ( rgb_time.c_str() ) );

depth_times.push_back ( atof ( depth_time.c_str() ) );

rgb_files.push_back ( dataset_dir+"/"+rgb_file );

depth_files.push_back ( dataset_dir+"/"+depth_file );

if ( fin.good() == false )

break;

}

myslam::Camera::Ptr camera ( new myslam::Camera );

// visualization

cv::viz::Viz3d vis("Visual Odometry");

cv::viz::WCoordinateSystem world_coor(1.0), camera_coor(0.5);

cv::Point3d cam_pos( 0, -1.0, -1.0 ), cam_focal_point(0,0,0), cam_y_dir(0,1,0);

cv::Affine3d cam_pose = cv::viz::makeCameraPose( cam_pos, cam_focal_point, cam_y_dir );

vis.setViewerPose( cam_pose );

world_coor.setRenderingProperty(cv::viz::LINE_WIDTH, 2.0);

camera_coor.setRenderingProperty(cv::viz::LINE_WIDTH, 1.0);

vis.showWidget( "World", world_coor );

vis.showWidget( "Camera", camera_coor );

cout<<"read total "<camera_ = camera;

pFrame->color_ = color;

pFrame->depth_ = depth;

pFrame->time_stamp_ = rgb_times[i];

boost::timer timer;

vo->addFrame ( pFrame );

cout<<"VO costs time: "<state_ == myslam::VisualOdometry::LOST )

break;

SE3 Tcw = pFrame->T_c_w_.inverse();

// show the map and the camera pose

cv::Affine3d M(

cv::Affine3d::Mat3(

Tcw.rotation_matrix()(0,0), Tcw.rotation_matrix()(0,1), Tcw.rotation_matrix()(0,2),

Tcw.rotation_matrix()(1,0), Tcw.rotation_matrix()(1,1), Tcw.rotation_matrix()(1,2),

Tcw.rotation_matrix()(2,0), Tcw.rotation_matrix()(2,1), Tcw.rotation_matrix()(2,2)

),

cv::Affine3d::Vec3(

Tcw.translation()(0,0), Tcw.translation()(1,0), Tcw.translation()(2,0)

)

);

cv::imshow("image", color );

cv::waitKey(1);

vis.setWidgetPose( "Camera", M);

vis.spinOnce(1, false);

}

return 0;

}

g2o安装编译后的cmake_modules文件夹

将g2o安装编译后的cmake_modules复制到project 1文件夹下

所有的文件夹:

代码运行(命令行运行和kdevelop运行)

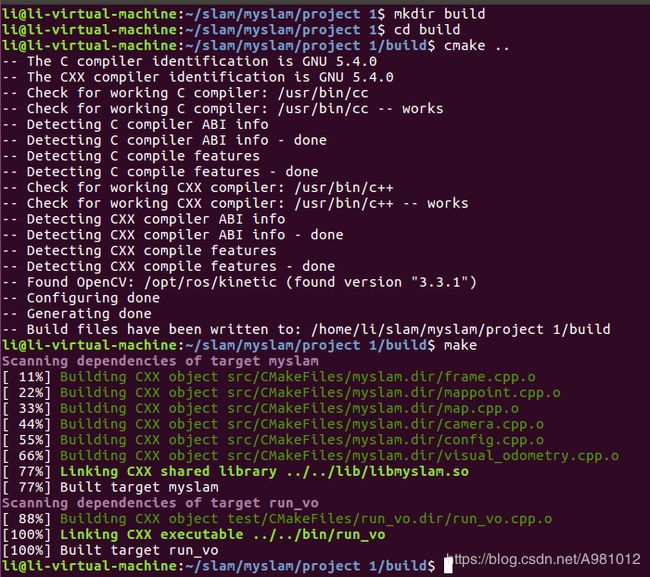

在project 1文件夹目录下,右键,在终端中打开,输入下面的命令:

mkdir build

cd build

cmake ..

make

cd ..

bin/run_vo config/default.yaml

运行结果为:

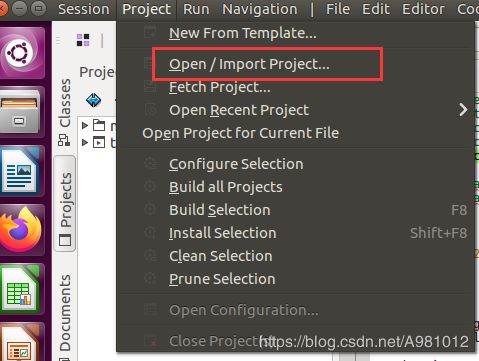

在kdevelop中运行,就是打开kdevelop,然后选择project选项下的open/Import Project

找到之前创建的project 1文件夹,点击finish,文件夹就添加到kdevelop中,

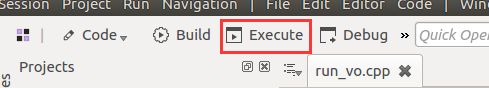

之后点击test文件夹下的run_vo.cpp文件,在代码框显示出来后就点击project选项下的Build Selection,编译这个文件

然后点击Run选项下的Configure Launches,点击Add New,后面操作如下所示,改成自己的路径,点击ok

点击run选项下的Current Launch Configuration,选择自己新建的那个Launch configuration

再点击Execute 就可以看到程序运行,结果显示了

遇到的问题

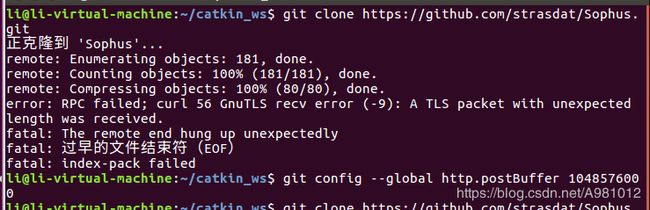

从提示中可以看到是我们没有过安装sophus或者是没安装好,反正就是找不到sophus,所以我们需要对sophus进行安装。

https://blog.csdn.net/u011092188/article/details/77833022.

sophus编译完成:

如有错误请指正!

参考代码:https://github.com/gaoxiang12/slambook/tree/master/project/0.2.

参考书籍:视觉slam十四讲-高翔.pdf