Configuring FPN_Tensorflow

The code I used is https://github.com/DetectionTeamUCAS/FPN_Tensorflow

Environment required to run this code:

- Python 3.5 (I recommend installing Anaconda and then creating a Python 3.5 virtual environment)

- CUDA 9.0 + cuDNN 7.3 (recommended combination; I have personally tested it and it runs on both Win10 and Ubuntu 16.04)
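To confirm that the active environment actually matches the versions above, a small check script can help. This is a minimal sketch, assuming `nvcc` is on the PATH and that `nvcc --version` prints its usual "release X.Y" line; `parse_nvcc_release` and `check_environment` are hypothetical helper names, and the cuDNN version is not checked.

```python
import re
import shutil
import subprocess
import sys


def parse_nvcc_release(version_text):
    """Extract the CUDA release (e.g. '9.0') from `nvcc --version` output."""
    match = re.search(r"release (\d+\.\d+)", version_text)
    return match.group(1) if match else None


def check_environment(expected_python=(3, 5), expected_cuda="9.0"):
    """Return a list of problems; an empty list means the versions match."""
    problems = []
    if sys.version_info[:2] != expected_python:
        problems.append("Python %d.%d found, expected %d.%d"
                        % (sys.version_info[0], sys.version_info[1],
                           expected_python[0], expected_python[1]))
    nvcc = shutil.which("nvcc")  # finds nvcc.exe on Windows as well
    if nvcc is None:
        problems.append("nvcc not found on PATH")
    else:
        out = subprocess.check_output([nvcc, "--version"]).decode("utf-8", "replace")
        release = parse_nvcc_release(out)
        if release != expected_cuda:
            problems.append("CUDA %s found, expected %s" % (release, expected_cuda))
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

Run it inside the activated Python 3.5 environment; no output means both the Python and CUDA versions match.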

Installing on Ubuntu 16.04

Installation on Ubuntu 16.04 is very simple: just follow the steps on the repository page.

Installing on Win10

1. Install CUDA 9.0

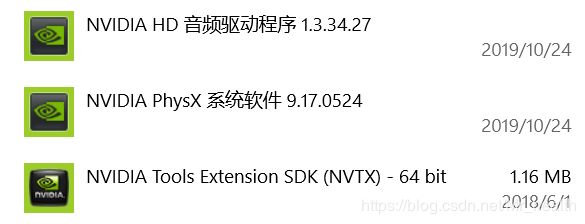

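On Win10 the main pitfall is installing CUDA 9.0. My Win10 machine already had CUDA 8.0, so before the new installation I removed everything related to CUDA 8.0 (through Windows' built-in Add or Remove Programs), keeping only the three items shown in the screenshot above. I also removed Visual Studio (VS) Integration. I don't know whether that is why the Visual Studio (VS) Integration step kept failing when I installed CUDA 9.0, but if I could do it over, I would definitely leave it alone.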

When installing CUDA 9.0, I recommend choosing Custom and checking every component.

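Possible problem: Visual Studio (VS) Integration fails to install, and as a result none of the components after it can be installed.

Solution (fixing the CUDA installation error):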

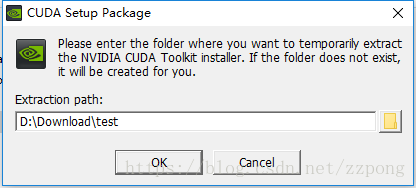

(1) Note the path the installer extracts its files to; mine, for example, is "D:\Download\test":

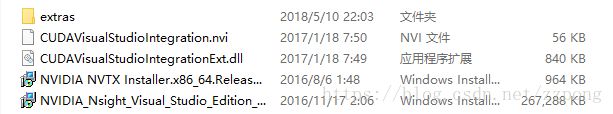

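(2) Then, while CUDA is installing, copy the CUDAVisualStudioIntegration folder under "D:\Download\test" to another location for safekeeping. Call it "dir\CUDAVisualStudioIntegration", where dir is the path you copied it to. The files in this folder are shown in the figure below:

(3) Wait for the CUDA installation to finish (choose Custom, and do NOT check Visual Studio (VS) Integration), then click OK.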

(4) Open VS, build the CUDA Samples project ("C:\ProgramData\NVIDIA Corporation\CUDA Samples"), and find the path in VS's error message; mine, for example, is "C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\V120\BuildCustomizations\CUDA 9.0.props". Copy all files from "dir\CUDAVisualStudioIntegration\extras\visual_studio_integration\MSBuildExtensions" into the "C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\V120\BuildCustomizations" folder.

Restart VS, and the problem is solved.
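Steps (2) and (4) are plain file copies, so they can also be scripted. This is a sketch assuming the folder layout described above (`CUDAVisualStudioIntegration\extras\visual_studio_integration\MSBuildExtensions`); the function names and the backup location are hypothetical. Step (2) still has to run while the installer is open, presumably because the extracted folder does not survive the installer.

```python
import os
import shutil


def backup_vs_integration(extract_dir, backup_root):
    """Step (2): copy CUDAVisualStudioIntegration out of the installer's
    extraction folder (e.g. D:\\Download\\test) into backup_root."""
    src = os.path.join(extract_dir, "CUDAVisualStudioIntegration")
    dst = os.path.join(backup_root, "CUDAVisualStudioIntegration")
    shutil.copytree(src, dst)
    return dst


def install_msbuild_extensions(backup_dir, build_customizations_dir):
    """Step (4): copy every file in MSBuildExtensions into the
    BuildCustomizations folder named in Visual Studio's error message."""
    ext_dir = os.path.join(backup_dir, "extras", "visual_studio_integration",
                           "MSBuildExtensions")
    copied = []
    for name in os.listdir(ext_dir):
        src = os.path.join(ext_dir, name)
        if os.path.isfile(src):
            shutil.copy2(src, build_customizations_dir)
            copied.append(name)
    return sorted(copied)

# Example (hypothetical backup folder D:\cuda_backup; writing under
# C:\Program Files (x86) needs an administrator prompt):
# backup = backup_vs_integration(r"D:\Download\test", r"D:\cuda_backup")
# install_msbuild_extensions(backup, r"C:\Program Files (x86)\MSBuild\Microsoft.Cpp\v4.0\V120\BuildCustomizations")
```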

2. Compile the code

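Compiling the lib of py-faster-rcnn, py-rfcn, and py-pva-frcn on Windows: compile the Cython modules with ($PATH_ROOT being the root of the cloned repository)

```
cd $PATH_ROOT/libs/box_utils/cython_utils
python setup.py build_ext --inplace
```

setup.py has to be modified because the original is written for compiling on Ubuntu 16.04. The changes mainly help it find nvcc.exe and the corresponding lib files on Win10. In addition, gcc is not used, so the gcc-specific arguments are commented out.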

Before compiling, change setup.py to the following:

```python
# --------------------------------------------------------
# Fast R-CNN
# Copyright (c) 2015 Microsoft
# Licensed under The MIT License [see LICENSE for details]
# Written by Ross Girshick
# --------------------------------------------------------

import os
from os.path import join as pjoin

import numpy as np
from distutils.core import setup
from distutils.extension import Extension
from Cython.Distutils import build_ext

# On Windows the CUDA import libraries live in <CUDA_PATH>\lib\x64
lib_dir = 'lib/x64'


def find_in_path(name, path):
    "Find a file in a search path"
    # adapted from http://code.activestate.com/recipes/52224-find-a-file-given-a-search-path/
    for directory in path.split(os.pathsep):
        binpath = pjoin(directory, name)
        if os.path.exists(binpath):
            return os.path.abspath(binpath)
    return None


def locate_cuda():
    """Locate the CUDA environment on the system

    Returns a dict with keys 'home', 'nvcc', 'include', and 'lib64'
    and values giving the absolute path to each directory.

    Starts by looking for the CUDA_PATH env variable (set by the Windows
    CUDA installer). If not found, everything is based on finding 'nvcc'
    in the PATH.
    """

    # first check if the CUDA_PATH env variable is in use
    if 'CUDA_PATH' in os.environ:
        home = os.environ['CUDA_PATH']
        print("home = %s\n" % home)
        nvcc = pjoin(home, 'bin', 'nvcc.exe')
    else:
        # otherwise, search the PATH for NVCC (the binary is nvcc.exe on Windows)
        nvcc_name = 'nvcc.exe' if os.name == 'nt' else 'nvcc'
        default_path = pjoin(os.sep, 'usr', 'local', 'cuda', 'bin')
        nvcc = find_in_path(nvcc_name, os.environ['PATH'] + os.pathsep + default_path)
        if nvcc is None:
            raise EnvironmentError('The nvcc binary could not be '
                                   'located in your $PATH. Either add it to your path, or set $CUDA_PATH')
        home = os.path.dirname(os.path.dirname(nvcc))

    cudaconfig = {'home': home, 'nvcc': nvcc,
                  'include': pjoin(home, 'include'),
                  'lib64': pjoin(home, lib_dir)}
    for k, v in cudaconfig.items():
        if not os.path.exists(v):
            raise EnvironmentError('The CUDA %s path could not be located in %s' % (k, v))

    return cudaconfig


CUDA = locate_cuda()

# Obtain the numpy include directory. This logic works across numpy versions.
try:
    numpy_include = np.get_include()
except AttributeError:
    numpy_include = np.get_numpy_include()


def customize_compiler_for_nvcc(self):
    """inject deep into distutils to customize how the dispatch
    to cl/nvcc works.

    If you subclass UnixCCompiler, it's not trivial to get your subclass
    injected in, and still have the right customizations (i.e.
    distutils.sysconfig.customize_compiler) run on it. So instead of going
    the OO route, I have this. Note, it's kind of like a weird functional
    subclassing going on."""

    # tell the compiler it can process .cu
    #self.src_extensions.append('.cu')

    # save references to the default compiler_so and compile methods
    #default_compiler_so = self.spawn
    #default_compiler_so = self.rc
    default_compile = self.compile

    # now redefine the compile method. This gets executed for each
    # object but distutils doesn't have the ability to change compilers
    # based on source extension: we add it.
    def compile(sources, output_dir=None, macros=None, include_dirs=None, debug=0,
                extra_preargs=None, extra_postargs=None, depends=None):
        postfix = os.path.splitext(sources[0])[1]
        if postfix == '.cu':
            # use the cuda for .cu files
            #self.set_executable('compiler_so', CUDA['nvcc'])
            # use only a subset of the extra_postargs, which are 1-1 translated
            # from the extra_compile_args in the Extension class
            postargs = extra_postargs['nvcc']
        else:
            # everything else is compiled by MSVC (cl.exe); gcc is not used
            postargs = extra_postargs['cl']

        # reset the default compiler_so, which we might have changed for cuda
        #self.rc = default_compiler_so
        return default_compile(sources, output_dir, macros, include_dirs, debug,
                               extra_preargs, postargs, depends)

    # inject our redefined compile method into the compiler instance
    self.compile = compile


class custom_build_ext(build_ext):
    def build_extensions(self):
        # run the customize_compiler
        customize_compiler_for_nvcc(self.compiler)
        build_ext.build_extensions(self)


ext_modules = [
    Extension(
        "cython_bbox",
        ["bbox.pyx"],
        #extra_compile_args={'gcc': ["-Wno-cpp", "-Wno-unused-function"]},
        extra_compile_args={'cl': []},
        include_dirs=[numpy_include]
    ),
    Extension(
        "cython_nms",
        ["nms.pyx"],
        #extra_compile_args={'gcc': ["-Wno-cpp", "-Wno-unused-function"]},
        extra_compile_args={'cl': []},
        include_dirs=[numpy_include]
    )
    # Extension(
    #     "cpu_nms",
    #     ["cpu_nms.pyx"],
    #     extra_compile_args={'gcc': ["-Wno-cpp", "-Wno-unused-function"]},
    #     include_dirs = [numpy_include]
    # )
]

setup(
    name='tf_faster_rcnn',
    ext_modules=ext_modules,
    # inject our custom trigger
    cmdclass={'build_ext': custom_build_ext},
)
```
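After `python setup.py build_ext --inplace` finishes, `cython_bbox` and `cython_nms` should sit in the `cython_utils` folder as compiled extension files (on Windows, `.pyd` files, possibly with an interpreter tag in the name). A quick check, as a sketch: `missing_extensions` is a hypothetical helper that relies on `importlib.machinery.EXTENSION_SUFFIXES`, the list of suffixes the current interpreter can import.

```python
import importlib.machinery
import os


def missing_extensions(build_dir, module_names=("cython_bbox", "cython_nms")):
    """Return the module names with no importable extension file in build_dir."""
    files = set(os.listdir(build_dir))
    missing = []
    for name in module_names:
        # build_ext --inplace writes <name><suffix> next to setup.py
        found = any(name + suffix in files
                    for suffix in importlib.machinery.EXTENSION_SUFFIXES)
        if not found:
            missing.append(name)
    return missing
```

For example, running `print(missing_extensions("."))` from `libs/box_utils/cython_utils` should print `[]` when both modules were built.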