对抗自编码器AAE——pytorch代码解读试验

AAE网络结构基本框架如论文中所示:

闲话不多说,直接来学习一下加了注释和微调的基本AAE的代码(初始代码链接github):

aae_pytorch_basic.py

#!/usr/bin/env python3

# -*- coding: utf-8 -*-

# 加载必要的库

import argparse

import torch

import pickle

import numpy as np

from torch.autograd import Variable

import torch.nn as nn

import torch.nn.functional as F

import torch.optim as optim

import pylab

import matplotlib.pyplot as plt

from matplotlib import gridspec

import scipy.misc

# import viz

from viz import get_X_batch

from viz import create_reconstruction

from viz import grid_plot

from viz import grid_plot2d

# Training settings

# 训练时的一些设置,若未设置则使用默认值

# 模型描述

parser = argparse.ArgumentParser(description='PyTorch unsupervised MNIST')

# batch_size

parser.add_argument('--batch-size', type=int, default=100, metavar='N',

help='input batch size for training (default: 100)')

# 迭代次数

parser.add_argument('--epochs', type=int, default=500, metavar='N',

help='number of epochs to train (default: 10)')

args = parser.parse_args()

cuda = torch.cuda.is_available()

# 在神经网络中,参数默认是进行随机初始化的。不同的初始化参数往往会导致不同的结果,当得到比较好的结果时我们通常希望这个结果是可以复现的,

# 在pytorch中,通过设置随机数种子也可以达到这么目的。

seed = 10

kwargs = {'num_workers': 1, 'pin_memory': True} if cuda else {} # GPU的一些设置

n_classes = 10 # 类别数,在无监督学习中未用到

z_dim = 2

X_dim = 784

y_dim = 10

train_batch_size = args.batch_size

valid_batch_size = args.batch_size

# train_batch_size = 128

# valid_batch_size = 1

N = 1000

# epochs = args.epochs

epochs = 10000

valid_params = dict(train_batch_size=valid_batch_size, X_dim=X_dim, cuda=cuda, n_classes=n_classes, z_dim=z_dim)

##################################

# Load data and create Data loaders

# 加载处理好的数据,处理的程序在create_datasets.py中

##################################

def load_data(data_path='../data/'):

print('loading data!')

# 有标签的训练集

trainset_labeled = pickle.load(open(data_path + "train_labeled.p", "rb"))

# 无标签的训练集

trainset_unlabeled = pickle.load(open(data_path + "train_unlabeled.p", "rb"))

# Set -1 as labels for unlabeled data

# 对于无标签的数据,将标签设置为-1

trainset_unlabeled.train_labels = torch.from_numpy(np.array([-1] * 47000))

# 验证集

validset = pickle.load(open(data_path + "validation.p", "rb"))

# 有标签训练迭代器

train_labeled_loader = torch.utils.data.DataLoader(trainset_labeled,

batch_size=train_batch_size,

shuffle=True, **kwargs)

# 无标签训练迭代器

train_unlabeled_loader = torch.utils.data.DataLoader(trainset_unlabeled,

batch_size=train_batch_size,

shuffle=True, **kwargs)

# 验证/测试迭代器

valid_loader = torch.utils.data.DataLoader(validset, batch_size=valid_batch_size, shuffle=True)

# 函数返回几个迭代器

return train_labeled_loader, train_unlabeled_loader, valid_loader

##################################

# Define Networks

# 定义网络结构

##################################

# Encoder

# 编码器

class Q_net(nn.Module):

def __init__(self):

super(Q_net, self).__init__()

self.lin1 = nn.Linear(X_dim, N)

self.lin2 = nn.Linear(N, N)

# Gaussian code (z)

self.lin3gauss = nn.Linear(N, z_dim)

def forward(self, x):

# 2 ×(全连接+随机失活+relu)+(全连接)

x = F.dropout(self.lin1(x), p=0.2, training=self.training)

x = F.relu(x)

x = F.dropout(self.lin2(x), p=0.2, training=self.training)

x = F.relu(x)

xgauss = self.lin3gauss(x)

return xgauss

# Decoder

# 解码器

class P_net(nn.Module):

def __init__(self):

super(P_net, self).__init__()

self.lin1 = nn.Linear(z_dim, N)

self.lin2 = nn.Linear(N, N)

self.lin3 = nn.Linear(N, X_dim)

def forward(self, x):

# 2 ×(全连接+随机失活+relu)+(全连接+sigmoid)

x = self.lin1(x)

x = F.dropout(x, p=0.2, training=self.training)

x = F.relu(x)

x = self.lin2(x)

x = F.dropout(x, p=0.2, training=self.training)

x = self.lin3(x)

return F.sigmoid(x)

# Discriminator

# 判别器

class D_net_gauss(nn.Module):

def __init__(self):

super(D_net_gauss, self).__init__()

self.lin1 = nn.Linear(z_dim, N)

self.lin2 = nn.Linear(N, N)

self.lin3 = nn.Linear(N, 1)

def forward(self, x):

# 2 ×(全连接+随机失活+relu)+(全连接+sigmoid)

x = F.dropout(self.lin1(x), p=0.2, training=self.training)

x = F.relu(x)

x = F.dropout(self.lin2(x), p=0.2, training=self.training)

x = F.relu(x)

return F.sigmoid(self.lin3(x))

####################

# Utility functions

# 一些有用的函数

####################

def save_model(model, filename):

# 保存训练模型

print('Best model so far, saving it...')

torch.save(model.state_dict(), filename)

def report_loss(epoch, D_loss_gauss, G_loss, recon_loss):

'''

Print loss

'''

# 显示损失

print('Epoch-{}; D_loss_gauss: {:.4}; G_loss: {:.4}; recon_loss: {:.4}'.format(epoch,

D_loss_gauss.data[0],

G_loss.data[0],

recon_loss.data[0]))

def create_latent(Q, loader):

'''

Creates the latent representation for the samples in loader

return:

z_values: numpy array with the latent representations

labels: the labels corresponding to the latent representations

'''

# 对于迭代器中的样本创建隐表达

Q.eval() # 不启用编码器中的随机失活(和批标准化)

labels = []

for batch_idx, (X, target) in enumerate(loader):

X = X * 0.3081 + 0.1307 # 数据集处理

# X.resize_(loader.batch_size, X_dim)

X, target = Variable(X), Variable(target)

labels.extend(target.data.tolist())

# if cuda:

# X, target = X.cuda(), target.cuda()

X, target = X.cuda(), target.cuda()

# Reconstruction phase

z_sample = Q(X) # 隐变量

# 方便连接矩阵,初始化并concatenate连接

if batch_idx > 0:

z_values = np.concatenate((z_values, np.array(z_sample.data.tolist())))

else:

z_values = np.array(z_sample.data.tolist())

labels = np.array(labels)

return z_values, labels

####################

# Train procedure

# 开始训练

####################

def train(P, Q, D_gauss, P_decoder, Q_encoder, Q_generator, D_gauss_solver, data_loader):

'''

Train procedure for one epoch.

'''

# 每一次迭代中的训练过程

TINY = 1e-15

# Set the networks in train mode (apply dropout when needed)

# 启用编码器、解码器、判别器中的随机失活(与批标准化)

Q.train()

P.train()

D_gauss.train()

# Loop through the labeled and unlabeled dataset getting one batch of samples from each

# The batch size has to be a divisor of the size of the dataset or it will return

# invalid samples

for X, target in data_loader:

# Load batch and normalize samples to be between 0 and 1

# 加载batch数据并将数据归一化到0到1的区间

X = X * 0.3081 + 0.1307

X.resize_(train_batch_size, X_dim)

X, target = Variable(X), Variable(target)

# if cuda:

# X, target = X.cuda(), target.cuda()

X, target = X.cuda(), target.cuda()

# Init gradients

# 梯度初始化为0

P.zero_grad()

Q.zero_grad()

D_gauss.zero_grad()

#######################

# Reconstruction phase

# 重构误差项

#######################

z_sample = Q(X) # 输入数据得到的隐变量

X_sample = P(z_sample) # 隐变量解码后得到的重构变量

# 二分类交叉熵,表示重构向量与输入向量的差距

recon_loss = F.binary_cross_entropy(X_sample + TINY, X.resize(train_batch_size, X_dim) + TINY)

# 重构损失反向传播

recon_loss.backward()

# 优化更新编码器与解码器

P_decoder.step()

Q_encoder.step()

# 梯度清零,不希望之前的梯度影响之后的梯度

P.zero_grad()

Q.zero_grad()

D_gauss.zero_grad()

#######################

# Regularization phase

#######################

# Discriminator

# 对于判别器

Q.eval() # 不启用编码器中的随机失活(和批标准化)

# 从一个高斯随机分布中采样隐变量

z_real_gauss = Variable(torch.randn(train_batch_size, z_dim) * 5.)

# if cuda:

# z_real_gauss = z_real_gauss.cuda()

z_real_gauss = z_real_gauss.cuda()

# 由输入数据到编码器中产生的隐变量,目前不一定是高斯分布

z_fake_gauss = Q(X)

# 将两种隐变量分别输入到判别器中,每张图片的每种隐变量对应一个数

D_real_gauss = D_gauss(z_real_gauss)

D_fake_gauss = D_gauss(z_fake_gauss)

# 判别器的损失函数

# 判别器认为真实高斯隐变量为1,从训练数据得到的隐变量为0

D_loss = -torch.mean(torch.log(D_real_gauss + TINY) + torch.log(1 - D_fake_gauss + TINY))

# 判别器损失函数反向传播

D_loss.backward()

# 优化更新判别器

D_gauss_solver.step()

# 梯度再次清零

P.zero_grad()

Q.zero_grad()

D_gauss.zero_grad()

# Generator

# 对于生成器/解码器

Q.train() # 启用编码器中的随机失活

# 由输入数据到编码器中产生的隐变量,目前不一定是高斯分布

z_fake_gauss = Q(X)

# 将隐变量输入到判别器中,每张图片的结果对应一个数

D_fake_gauss = D_gauss(z_fake_gauss)

# 生成器/解码器的损失函数

# 生成器/解码器努力使由输入数据得到的隐变量标签为1,与判别器对抗训练,使得隐变量分布接近于高斯分布

# 生成器/解码器的目标在于生成以假乱真的数据,与GAN不同在于以假乱真是指是否是高斯分布,而非是否是真实数据

G_loss = -torch.mean(torch.log(D_fake_gauss + TINY))

# 生成器/解码器损失函数反向传播

G_loss.backward()

# 优化更新生成器/解码器

Q_generator.step()

# 梯度再次清零

P.zero_grad()

Q.zero_grad()

D_gauss.zero_grad()

# 返回判别器损失、生成器/解码器损失、重构误差损失

return D_loss, G_loss, recon_loss

# 对于生成模型

def generate_model(train_labeled_loader, train_unlabeled_loader, valid_loader):

torch.manual_seed(seed) # seed = 10

# if cuda:

# Q = Q_net().cuda()

# P = P_net().cuda()

# D_gauss = D_net_gauss().cuda()

# else:

# Q = Q_net()

# P = P_net()

# D_gauss = D_net_gauss()

Q = Q_net().cuda()

P = P_net().cuda()

D_gauss = D_net_gauss().cuda()

# Set learning rates

# 设置学习率

gen_lr = 0.0001

reg_lr = 0.00005

# Set optimizators

# 设置优化器

P_decoder = optim.Adam(P.parameters(), lr=gen_lr)

Q_encoder = optim.Adam(Q.parameters(), lr=gen_lr)

# Q_encoder与Q_generator区别在于学习率不同

Q_generator = optim.Adam(Q.parameters(), lr=reg_lr)

D_gauss_solver = optim.Adam(D_gauss.parameters(), lr=reg_lr)

# 对于每次迭代

for epoch in range(epochs):

# 根据每一次迭代执行的训练过程得到每一次迭代的三个损失函数值

D_loss_gauss, G_loss, recon_loss = train(P, Q, D_gauss, P_decoder, Q_encoder,

Q_generator,

D_gauss_solver,

train_unlabeled_loader)

# 测试周期为10次训练迭代测试1次

if epoch % 10 == 0:

report_loss(epoch, D_loss_gauss, G_loss, recon_loss)

create_reconstruction(Q, P, valid_loader, valid_params)

# #########################################

# # 用了viz.py中的代码并进行了部分修改以便于可视化

# #########################################

# Q.eval()

# P.eval()

#

# '''

# ##### 对于pytorch 0.4版本,原始代码会报错:

# ##### RuntimeError: zero-dimensional tensor (at position 0) cannot be concatenated

# ##### 参考博文链接:https://blog.csdn.net/u011415481/article/details/84709487

# '''

# # z1 = Variable(torch.from_numpy(np.arange(-10, 10, 1.5).astype('float32')))

# # z2 = Variable(torch.from_numpy(np.arange(-10, 10, 1.5).astype('float32')))

# z1 = torch.tensor([np.arange(-10, 10, 1.5).astype('float32')])

# z2 = torch.tensor([np.arange(-10, 10, 1.5).astype('float32')])

# # z1, z2 = z1.cuda(), z2.cuda()

#

# nx, ny = len(z1), len(z2)

# plt.subplot()

# gs = gridspec.GridSpec(nx, ny, hspace=0.05, wspace=0.05)

#

# count = 0

# for i, g in enumerate(gs):

# count = count + 1

# # z = torch.cat((z1[int(i / ny)], z2[i % nx])).resize(1, 2)

# print(z1)

# print(z1[:, int(i / ny)])

# print(z2[:, i % nx])

# z = torch.cat((z1[:, int(i / ny)], z2[:, i % nx]))

# z = z.resize(1, 2)

# x = P(z.cuda())

#

# ax = plt.subplot(g)

# img = np.array(x.data.tolist()).reshape(28, 28)

#

# # # 保存

# # scipy.misc.imsave('result/N_' + str(epoch) + '_' + str(count) + '.jpg', img)

#

# ax.imshow(img, )

# ax.set_xticks([])

# ax.set_yticks([])

# ax.set_aspect('auto')

# plt.show()

# # pylab.show()

return Q, P

if __name__ == '__main__':

train_labeled_loader, train_unlabeled_loader, valid_loader = load_data()

Q, P = generate_model(train_labeled_loader, train_unlabeled_loader, valid_loader)

viz.py

import matplotlib

import matplotlib.pyplot as plt

from matplotlib import gridspec

import numpy as np

from torch.autograd import Variable

import torch

def get_X_batch(data_loader, params, size=None):

if size is None:

size = data_loader.batch_size

for X, target in data_loader:

break

train_batch_size = params['train_batch_size']

X_dim = params['X_dim']

cuda = params['cuda']

X = X * 0.3081 + 0.1307

X = X[:size]

target = target[:size]

X.resize_(train_batch_size, X_dim)

X, target = Variable(X), Variable(target)

if cuda:

X, target = X.cuda(), target.cuda()

return X

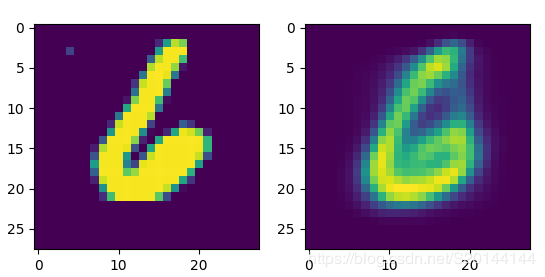

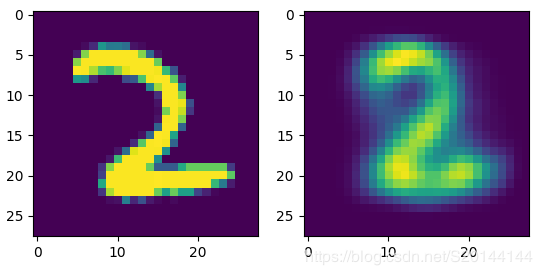

def create_reconstruction(Q, P, data_loader, params):

Q.eval()

P.eval()

X = get_X_batch(data_loader, params, size=1)

# z_c, z_g = Q(X)

# z = torch.cat((z_c, z_g), 1)

z = Q(X)

x = P(z)

img_orig = np.array(X[0].data.tolist()).reshape(28, 28)

img_rec = np.array(x[0].data.tolist()).reshape(28, 28)

plt.subplot(1, 2, 1)

plt.imshow(img_orig)

# plt.show()

plt.subplot(1, 2, 2)

plt.imshow(img_rec)

plt.show()

def grid_plot(Q, P, data_loader, params):

Q.eval()

P.eval()

X = get_X_batch(data_loader, params, size=10)

_, z_g = Q(X)

n_classes = params['n_classes']

cuda = params['cuda']

z_dim = params['z_dim']

z_cat = np.arange(0, n_classes)

z_cat = np.eye(n_classes)[z_cat].astype('float32')

z_cat = torch.from_numpy(z_cat)

z_cat = Variable(z_cat)

if cuda:

z_cat = z_cat.cuda()

nx, ny = 5, n_classes

plt.subplot()

gs = gridspec.GridSpec(nx, ny, hspace=0.05, wspace=0.05)

for i, g in enumerate(gs):

z_gauss = z_g[i / ny].resize(1, z_dim)

z_gauss0 = z_g[i / ny].resize(1, z_dim)

for _ in range(n_classes - 1):

z_gauss = torch.cat((z_gauss, z_gauss0), 0)

z = torch.cat((z_cat, z_gauss), 1)

x = P(z)

ax = plt.subplot(g)

img = np.array(x[i % ny].data.tolist()).reshape(28, 28)

ax.imshow(img, )

ax.set_xticks([])

ax.set_yticks([])

ax.set_aspect('auto')

def grid_plot2d(Q, P, data_loader, params):

Q.eval()

P.eval()

cuda = params['cuda']

z1 = Variable(torch.from_numpy(np.arange(-10, 10, 1.5).astype('float32')))

z2 = Variable(torch.from_numpy(np.arange(-10, 10, 1.5).astype('float32')))

if cuda:

z1, z2 = z1.cuda(), z2.cuda()

nx, ny = len(z1), len(z2)

plt.subplot()

gs = gridspec.GridSpec(nx, ny, hspace=0.05, wspace=0.05)

for i, g in enumerate(gs):

z = torch.cat((z1[i / ny], z2[i % nx])).resize(1, 2)

x = P(z)

ax = plt.subplot(g)

img = np.array(x.data.tolist()).reshape(28, 28)

ax.imshow(img, )

ax.set_xticks([])

ax.set_yticks([])

ax.set_aspect('auto')