Crawling Douyu with Scrapy

Overall workflow:

Scrape every streamer's name, room number and theater name from this page, plus the URL path of each streamer's room.

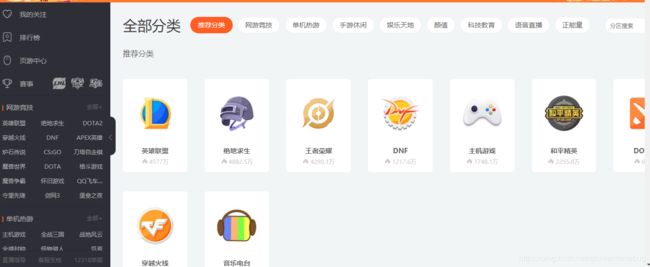

First, open https://www.douyu.com/directory.

Parse the variables embedded in the page's JavaScript to get the cate2Id of each category.
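This step can be tested without Scrapy. The following is a minimal sketch that applies the same kind of regex the spider uses to a string and decodes each match as JSON. The sample JavaScript and the function name `extract_cate2_ids` are hypothetical. The real page may embed the category objects differently.

```python
import json
import re

# One category object as the spider's regex expects it: it starts with "cate2Name"
# and ends with "isDisplay" set to 0 or 1 (an assumption about the real page layout)
CATEGORY_RE = re.compile(r'\{"cate2Name":.*?"isDisplay":[01]\}')


def extract_cate2_ids(page_text):
    """Return the cate2Id of every category object found in page_text (hypothetical helper)."""
    return [json.loads(match)["cate2Id"] for match in CATEGORY_RE.findall(page_text)]


# Hypothetical sample of the embedded JavaScript, for illustration only
sample = ('var data = [{"cate2Name":"LOL","cate2Id":1,"isDisplay":1},'
          '{"cate2Name":"Dota2","cate2Id":3,"isDisplay":0}];')
print(extract_cate2_ids(sample))  # [1, 3]
```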

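Append each cate2Id to `https://www.douyu.com/gapi/rkc/directory/2_` to get that category's API URL. The page number is added as one more path segment, for example `https://www.douyu.com/gapi/rkc/directory/2_1/0`.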
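The URL scheme can be written as a small function. It is a sketch: `category_page_urls` is a hypothetical name, and pages are numbered from 0, following the spider's `range(0, 50)`.

```python
BASE = "https://www.douyu.com/gapi/rkc/directory/2_"


def category_page_urls(cate2_id, page_count):
    """Return the API URLs for pages 0..page_count-1 of one category (hypothetical helper)."""
    return [f"{BASE}{cate2_id}/{page}" for page in range(page_count)]


urls = category_page_urls(270, 3)
print(urls[0])  # https://www.douyu.com/gapi/rkc/directory/2_270/0
```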

Then crawl these URLs. Each response is JSON, so the fields are extracted by parsing it as JSON.
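The source does not show the structure of this JSON. The sketch below **assumes** a layout of the form `{"data": {"rl": [...]}}`, with the keys `nn` (streamer name), `rid` (room number), `rn` (room name, used here for the "theater name") and `url` (room path). Neither these keys nor the function name `parse_rooms` come from the source. Check a real response before relying on them.

```python
import json


def parse_rooms(body):
    """Pull streamer name, room number, room name and room URL path from one page.

    Assumed (unconfirmed) layout: {"data": {"rl": [{"nn", "rid", "rn", "url"}, ...]}}
    """
    data = json.loads(body).get("data") or {}
    return [
        {
            "name": room.get("nn"),
            "room_id": room.get("rid"),
            "room_name": room.get("rn"),
            "url": room.get("url"),
        }
        for room in data.get("rl", [])
    ]


# Hypothetical response body, for illustration only
sample = '{"code": 0, "data": {"rl": [{"nn": "streamer_a", "rid": 123, "rn": "room title", "url": "/123"}]}}'
print(parse_rooms(sample))
```

Missing keys come back as `None` rather than raising an error, so a change in the response layout shows up as empty fields in the output.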

Part of the spider code is shown below:

```python
import json
import re

from scrapy import Request, Spider


class DouyuSpider(Spider):
    name = "douyuspider"
    # Scrapy reads allowed_domains (plural); allowed_domain would be ignored
    allowed_domains = ["douyu.com"]

    # Earlier hard-coded start URLs, one per page of a single category:
    # 'https://www.douyu.com/gapi/rkc/directory/2_1/0' ... 'https://www.douyu.com/gapi/rkc/directory/2_1/29'
    # 'https://www.douyu.com/gapi/rkc/directory/2_270/1' ... 'https://www.douyu.com/gapi/rkc/directory/2_270/25'

    # Category directory page; the URL of every category is derived from its response
    start_urls = ['https://www.douyu.com/directory']

    def parse(self, response):
        if response.status == 200:
            # Extract cate2Id from the JS variables and build the category URLs from it.
            # The regex runs on the raw page text, because BeautifulSoup's get_text()
            # may skip <script> contents in newer bs4 releases.
            jsons = re.findall(r'\{"cate2Name":.*?"isDisplay":[01]\}', response.text)

            # Collect the API URL of every category
            cate1_urls = []
            for json1 in jsons:
                self.logger.debug(json1)
                cate2_id = json.loads(json1).get('cate2Id')
                cate1_urls.append('https://www.douyu.com/gapi/rkc/directory/2_' + str(cate2_id))

            # Request every page of every category. The page count is unknown, so default to 50.
            # parsePage (not shown in this excerpt) parses the JSON of each page.
            for url in cate1_urls:
                for i in range(0, 50):
                    yield Request(url + '/' + str(i), callback=self.parsePage, dont_filter=True)
```
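Requesting a fixed 50 pages wastes requests on small categories and may miss pages on large ones. One alternative is to fetch page 0 first, read the total page count from its JSON, and then schedule only the remaining pages. The sketch below makes three assumptions. The count is stored as `data.pgcnt` (not confirmed by the source). Pages are numbered from 0, as in the spider. `follow_up_urls` is a hypothetical name. If the key is missing, the function falls back to the spider's default of 50.

```python
import json

BASE = "https://www.douyu.com/gapi/rkc/directory/2_"
DEFAULT_PAGES = 50  # the spider's fallback when the page count is unknown


def follow_up_urls(cate2_id, first_page_body):
    """Given the JSON body of page 0, return the URLs of pages 1..page_count-1.

    Assumption: the total page count is reported as data.pgcnt.
    """
    data = json.loads(first_page_body).get("data") or {}
    page_count = data.get("pgcnt") or DEFAULT_PAGES
    return [f"{BASE}{cate2_id}/{page}" for page in range(1, page_count)]


print(follow_up_urls(1, '{"data": {"pgcnt": 3}}'))
```

In the spider, the callback for page 0 would parse the rooms and also yield a `Request` for each URL this function returns.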