- The relationship and differences between machine learning and deep learning

ℒℴѵℯ心·动ꦿ໊ོ꫞

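artificial intelligence, learning, deep learning, python

1. Overview of machine learning. Definition: machine learning (ML) is a data-driven approach that uses statistics and computational algorithms to train models, enabling computers to learn from data and make predictions or decisions automatically. By analyzing large numbers of data samples, machine learning identifies the patterns and regularities in them and uses these to make judgments about new data. Its core is the training process, through which the model is continually optimized and its prediction accuracy improved. Main types: 1. Supervised learning. Supervised learning means that the training dataset contains input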

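- Reading notes on 《投行人生》

小蘑菇的树洞

《投行人生》, by James A. Runde, vice chairman of Morgan Stanley: 40 years of career insights. A short, concise book, well suited to newcomers to the workplace. Part 1: a successful career needs planning. 1. Emotional intelligence comes down to adaptability, sharing and collaboration, and empathy. Adaptability is mostly self-awareness: the ability to recognize your own emotions and tell how they affect your thoughts and behavior. 2. Advice for newcomers to the workplace: details, deadlines, and data matter. For deadlines, one effective approach is to ask your boss to prioritize all of your tasks. The good thing about having coffee with your boss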

- Converting LocalDateTime to String

igotyback

java, programming languages

import java.time.LocalDateTime;

import java.time.format.DateTimeFormatter;

public class Main {

public static void main(String[] args) {

// get the current time

LocalDateTime now = LocalDateTime.now();

// define the date formatter

DateTimeFormatter format

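- Popping up a UI from a Qt dynamic library developed on Linux (SDL2)

13jjyao

Qt, qt, programming languages, sdl2, linux

Requirement: the operating system is Linux and the framework is Qt; build a Qt dynamic library with a UI that is called by non-Qt programs such as Java. Difficulty: because the caller is a non-Qt program such as Java, it certainly does not run QApplication::exec(). Without it, QTimer and other events do not fire and windows created by Qt do not appear (OpenCV windows do not appear either). This is fundamentally different from a Qt program calling a Qt library. Approach: 1. The caller lacks QApplication::exec(), so in the interface we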

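- Day 4 route preview: from the transfer center to Kanas Lake

陟彼高冈yu

travel planning and preview with Google Earth Studio, travel

Day 4: from Jiadengyu to the entrance of the Kanas scenic area, staying overnight in Jiadengyu. Four bus routes leave from the transfer center; Kanas bus No. 1 goes to Kanas Lake, and the ride takes about 5 minutes. The itinerary above is shown as an animation; for the detailed steps, see "Making an animated track display with Google Earth Studio", "Google Earth Studio beginner tutorial", and "Google Earth Studio advanced tutorial". The resulting itinerary is shown below: Day4-2-480p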

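- A tutorial on using SDL on Linux: getting started with SDL

Melissa Corvinus

SDL tutorial on Linux

This article walks through the basic SDL workflow with a simple example. The example shows a window containing a randomly colored block that moves around at random; clicking the close button closes the window. The basic steps are: 1. Initialize SDL and create a window: SDL_Init() initializes SDL and SDL_CreateWindow() creates the window. 2. Texture rendering. The difference between storing RGB and storing a texture: for a rectangle that fades from red to blue from left to right, storing RGB means storing the color value of every point in the rectangle, whereas a texture is just a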

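- From 0 to 500+: how I make money with self-media

一列脚印

I have been running a WeChat official account for a little over half a month, going from a complete beginner to slowly picking up some knowledge of operations. Running an official account well is not easy and takes a lot of work: layout, writing, attracting traffic... all of it has to be handled yourself, and all my spare time goes into it. Gaining this many followers really is hard. Compared with the big names on Zhihu, for small fry like us with no resources, no connections, and no money, gaining ten thousand followers in a month is probably a daydream (though if you have a way, I will happily be proven wrong). At least I am clear-headed: I will keep working steadily toward my goal of ten thousand followers! Come take a look and support me! Kids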

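- Day 1 notes: introduction to Python, identifiers and keywords, input and output

~在杰难逃~

Python, python, programming languages, big data, data analysis, data mining

Hello everyone. Starting today, 杰哥 is launching a new column; the data analysis series will still be updated from time to time. The new column mainly covers basic Python syntax and knowledge to help complete beginners get started with Python, so if you are interested, start studying! 1. Introduction to Python [for reference]. 1. How computers work. A programming language is a formal language used to define computer programs. We write program code in a programming language, and a language processor then executes it, sending instructions to the computer so that it carries out the corresponding work. Programming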

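- Python quick start, section 3: classes and objects

孤华暗香

Python quick start, python, programming languages

Section 3: classes and objects. Goal: understand the basic concepts of object-oriented programming and learn how to define classes and create objects. Contents: classes and objects: defining a class with the class keyword; the class constructor __init__(); class attributes and methods; creating and using objects. Example:

class Student:

def __init__(self, name, age, major):

self.name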

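- C++ beginner tutorial: from beginner to expert, lesson 2

DreamByte

c++

1. Supplement to the last lesson (data types). 1. Foreword. In the last lesson we mainly covered input, output, and operators. Now we add the material on data types, which the last lesson left out; sorry about that. By the way, the author is about to start high school, so updates will be slower, once every 2-4 weeks. Also, since it happens to be the Mid-Autumn Festival: happy Mid-Autumn Festival, everyone! 2. The int type. In the last lesson we actually used only the int type. int is an integer type: it stores integers and cannot store decimals (floating-point numbers). Defining a variable is simple: int a; // define a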

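- Timing and delays on STM32

lupinjia

STM32, stm32, microcontrollers

Preface. In bare-metal development, delays are often used as a way to fix the period of a loop, and HAL_Delay, provided officially by the HAL library, is the most common choice. Beginners may wonder why they should not use an official delay function whose precision is quite good. In fact, HAL_Delay has quite a few pitfalls, and these are only some of the countless pitfalls in the HAL library. To get out of them you need to study and understand how the peripherals work rather than relying on the HAL library alone. Besides delays, during development we sometimes also want to measure how long a piece of code takes, which requires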

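- Implementing simple machine learning algorithms in Python

master_chenchengg

python, python, office productivity, python development, IT

Implementing simple machine learning algorithms in Python. Opening: a first look at the wonderful journey of machine learning. Setting up the environment: it all starts with installation. Essential toolbox. Step 1: installing Anaconda and Jupyter Notebook. Tip: how to configure the Python environment variables. First taste of algorithms: Python machine learning from scratch. Linear regression: letting the data speak. Data preparation: where to find data. Hands-on coding: implementing linear regression in Python. Model evaluation: how to judge a model. Logistic regression: starting with classification. Theory primer: what is logistic regression. Code implementation: using skl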

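- Multithreading: ExecutorCompletionService

阿福德

In development we often start several threads and, once they have all finished, need to collect each thread's result for aggregation. In internal code reviews I have seen quite a few such cases. Many colleagues get it right, but the code is verbose and not very efficient. This article presents a simple way to handle this situation. Straight to the code:

public class ExecutorCompletionServiceTest {

@Test

public void testExecutorCo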

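- System architect: requirements analysis, part 2

AmHardy

software architect, system architecture, requirements analysis, object-oriented analysis, analysis model, UML and SysML

Object-oriented analysis methods. 1. The use case model. Building a use case model generally goes through four stages. Identify actors: identify everything that interacts with the system. Consolidate requirements into use cases: assign requirements to the actors they relate to. Refine use case descriptions: describe the function of each use case in detail. Adjust the use case model: optimize the relationships and structure among use cases. The first three stages are required. 2. The three elements of a use case diagram. Actors: users of the system, or other external systems and devices. Use cases: the services the system provides. Communication associations: relationships between actors and use cases, or between use cases. 3. Identifying actors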

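- 2019 postgraduate entrance exam | Software engineering at Xi'an Jiaotong University

笔者阿蓉

Undergraduate background: electronic information engineering at a 211 university in Beijing, followed by two years working in internet development. Admission result: full-time program, School of Software Engineering. Scores: first-round exam 350+, second-round written test 80+, interview 85+, overall rank 100+. I studied full-time from May. I will mainly talk about the specialized course, the second-round exam, and my views on part-time programs. [Mathematics 100+] Zhang Yu, Zhang Yu, Zhang Yu. Study with Zhang Yu: go through the introductory videos once, the past papers twice, your mistakes three times, and the books many times over. Starting from the videos is the fastest way to learn. From May to July, focus on getting mathematics solid; from August to September, start giving yourself every week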

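- ESP32 development quick start 8: MQTT quick start, implementing MQTT communication on the ESP32

z755924843

ESP32 development quick start, servers, networking, operations

Introduction to MQTT. Overview: MQTT (Message Queuing Telemetry Transport) is a "lightweight" messaging protocol based on the publish/subscribe model. It is built on TCP/IP and was released by IBM in 1999. MQTT's greatest strength is that it can provide real-time, reliable messaging for connected remote devices with very little code and limited bandwidth. As a low-overhead, low-bandwidth instant messaging protocol, it has, in the Internet of Things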

- The Armv8.3 architecture extension (original text)

代码改变世界ctw

ARM-TEE-Android, armv8, embedded, arm architecture, security architecture, chips, Trustzone, Secureboot

The Armv8.3 architecture extension. The Armv8.3 architecture extension is an extension to Armv8.2. It adds mandatory and optional architectural features. Some feat

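- Getting started with Python, Lesson 2: basic Python syntax

小熊同学哦

Python beginner course, python, programming languages, algorithms, data structures, programming for teens

Contents: Preface; 1. Introduction (1. Variables and data types; 2. Common operators; 3. Input and output; 4. Conditional statements; 5. Loops); 2. Exercises; 3. Summary. Preface: welcome to lesson 2 of the "Getting started with Python" blog series. In the previous lesson we covered installing Python and configuring the runtime environment. In this lesson we study Python's basic syntax in depth, the foundation for writing Python code. After this lesson you will have a grasp of variables, data types, operators, input and output, conditional statements, and other basics of Python programming. 1. Introduction 1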

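- As a photography beginner, how do I take classy product photos?

是波妞唉

Many people think copywriting only requires being able to write and do layout. To be honest, before I got into this line of work my idea was even simpler: I thought being able to write was enough. Only after actually doing copywriting did I find that writing is just the entry-level skill. An article needs layout and images, which sounds simple. Do it from start to finish, though, and you will find that writing the article takes two hours while finding suitable images takes half a day or longer. It is fine when suitable images can be found, but some cannot, such as product photos, which you have to shoot yourself. After fiddling for ages you still cannot get the effect you want: the lighting is bad, the arrangement looks terrible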

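- Batch converting TIFF to PNG

诺有缸的高飞鸟

opencv, image processing, python, opencv, image processing

Contents: Foreword; Code; End. Foreword: 1. Content of this article: batch conversion of TIFF to PNG. 2. Platform/environment: opencv, python. 3. Please credit the source when reposting: https://blog.csdn.net/qq_41102371/article/details/132975023 Code:

import numpy as np

import cv2

import os

def findAllFile(base):

file_list = []

for root, ds, fs in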

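- 2021 CCF non-professional software ability certification, round 1 (CSP-J1), entry-level C++ questions (Part 3: complete the program, code)

mmz1207

c++, csp

I have not posted for a while because I have been preparing for the CSP exam; thank you for your understanding. (1) n people stand in a circle, numbered 0 to n-1. Starting from number 0, they count off alternately 0, 1, 0, 1, ...; anyone who says 1 leaves, until only one person remains in the circle. Find the number of the last person remaining. #include using namespace std; int f[1000010]; int main() { int n; cin >> n; int i = 0, cnt = 0, p = 0; while (cnt #include u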
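The counting rule in problem (1) can be checked with a short simulation. This is only a sketch in Python rather than the exam's C++ fill-in-the-blank program, and the function name `last_remaining` is a hypothetical helper, not something from the original post:

```python
from collections import deque


def last_remaining(n: int) -> int:
    """Simulate the 0, 1, 0, 1, ... count-off and return the survivor's number."""
    circle = deque(range(n))  # people numbered 0 .. n-1, person 0 counts first
    while len(circle) > 1:
        circle.rotate(-1)   # the person at the front says 0 and stays (moves to the back)
        circle.popleft()    # the next person says 1 and leaves the circle
    return circle[0]


if __name__ == "__main__":
    for n in (1, 2, 3, 5):
        print(n, last_remaining(n))
```

Each round touches two people, so the simulation runs in O(n) deque operations; the exam program presumably tracks the same process with an array such as `f`, but that part of the source is truncated.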

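- Tiling remote sensing imagery

sand&wich

computer vision, python, image processing

In remote sensing image analysis, large images often need to be split into small tiles for detailed analysis and processing. This approach is particularly suited to machine learning and image processing tasks such as object detection and image classification. The following shows how to do this with Python and the OpenCV library while making sure each tile keeps the correct geographic information. Preparing the environment: first, make sure the necessary Python libraries are available, including numpy, opencv-python, and xml.etree.ElementTree. These libraries will be used for image processing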

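- Vue (getting started with ElementUI, installing vue-cli)

m0_l5z

elementui, vue.js

1. Getting started with ElementUI. Contents: 1. Getting started with ElementUI: 1.1 Introduction to ElementUI; 1.2 Installing Vue + ElementUI; 1.3 Development example. 2. Setting up the Node.js environment: 2.1 Introduction to Node.js; 2.2 What npm is; 2.3 Setting up the Node.js environment: 2.3.1 Download; 2.3.2 Extract; 2.3.3 Configure environment variables; 2.3.4 Configure the npm global module path and default cache location; 2.3.5 Change the npm mirror to speed up downloads; 2.3.6 Verify the installation. 3. Running n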

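- A complete guide to Spring MVC: a detailed walkthrough from beginner to expert

一杯梅子酱

tech stack learning, spring, mvc, java

Introduction: Spring MVC, an important module of the Spring framework, provides powerful features and flexibility for building web applications. Whether you are a beginner or a developer with some experience, mastering Spring MVC will significantly improve your web development skills. This article aims to give beginners a comprehensive, easy-to-follow learning path, using detailed analysis of the key points and practical examples to help you get started with Spring MVC quickly. 1. Introduction to Spring MVC. 1.1 What is Spring MVC? Spri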

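- Getting started with MySQL: query syntax exercises

K_un

Preface: the previous articles introduced DML and DDL statements; this article covers common query syntax. The MySQL website provides several sample databases for practicing queries. Below we use the most common one, the employees sample database, to introduce the common query syntax in detail. 1. Importing the employees sample database. Official documentation with an introduction to the employees sample database and download links: https://dev.mysql.com/doc/employee/en/employees-installation.h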

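- ESP32-C3 beginner tutorial, networking part 10: OTA with active upgrade on boot and passively triggered upgrade, based on esp_https_ota and MQTT

小康师兄

ESP32-C3 beginner tutorial, https, servers, esp32, OTA, MQTT

Contents: 1. Preface; 2. Software flow; 3. Partial source code; 4. Demo. 1. Preface: this article uses the VS Code IDE for programming, building, flashing, and running. For the basics, see: ESP32-C3 beginner tutorial, basics part 1: building Hello World with VS Code. For the tutorial outline, see: ESP32-C3 beginner tutorial: guide. ESP32-C3 beginner tutorial, networking part 9: the simplest ever ESP32 OTA remote firmware upgrade, based on esp_https_ota. 2. Software flow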

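- The most detailed Python installation tutorial of 2023 (Windows version)

程序员林哥

Python, python, windows, programming languages

Installing Python is the first step in learning Python. Many beginners are unsure how to install it, so today I will walk you through installing and configuring Python step by step. It is simple, and you can certainly do it. Part 1: installing Python. (1) Preparation. 1. Download and install Python (make sure to use the official website). (2) Starting the installation. For the Windows operating system, you can download the "executable ins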

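- [2022 CCF non-professional software ability certification, round 1 (CSP-J1), entry-level C++ questions with explanations]

汉子萌萌哒

CCF, noi, algorithms, data structures, c++

I. Single-choice questions (15 questions, 2 points each, 30 points in total; each question has exactly one correct option). 1. Which of the following does not involve C++'s support for object-oriented features? ( ) A. Calling the printf function in C++. B. Calling a member function of a user-defined class in C++. C. Constructing a class or struct in C++. D. Constructing several derived classes from the same base class in C++. Explanation: the correct answer is A. This is basic C++ knowledge: object orientation is about classes, and classes involve parent classes, child classes, inheritance, and derivation; printf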

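- The rise of the cash-loan "system rental" business: 3,000 yuan rent, 100,000 yuan capital, break even in a month

Dayon

Recently, the across-the-board rise of underground cash loans has become an unstoppable trend. Large amounts of private capital are pouring in: money from private loan sharks, property speculators, and the newly rich is all swept up in it. Meanwhile, the barrier to entry for underground cash loans keeps falling, and a new supply chain is emerging: renting cash-loan systems. Today, with just 100,000 yuan of capital, 3,000 yuan to rent a system, and a two-person team, you can break even within a month. Large numbers of small-capital players have entered, and to profit quickly they have even pushed interest rates above 1,600%. Industry insiders say there are currently only a little over 2 million genuine cash-loan users. The whole industry almost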

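- [树一 linear algebra] 005 Getting started

Owlet_woodBird

algorithms

Index. This article will be completed later; recommended reading: https://blog.csdn.net/weixin_60702024/article/details/141874376 Analysis. Implementation. Summary. Given a non-empty binary tree T whose node values are all positive integers, stored sequentially, with the data structure defined as follows: t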

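- Binary search algorithm

周凡杨

java, binary search, sorting, algorithms, halving

1. Concept. Binary search, also called half-interval search, has the advantages of few comparisons, fast lookup, and good average performance. Its drawbacks are that the table being searched must be sorted and that insertion and deletion are difficult. It is therefore suited to sorted lists that change rarely but are searched often. First, assume the elements of the table are in ascending order. Compare the key of the record in the middle of the table with the search key; if the two are equal, the search succeeds. Otherwise, use the middle record to split the table into a front and a back sub-table. If the key of the middle record is greater than the search key, then continue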
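The procedure described above can be sketched as follows. The original article is tagged java; this sketch uses Python for brevity, assumes an ascending list of integers, and the name `binary_search` is a hypothetical helper rather than the article's own code:

```python
from typing import Optional, Sequence


def binary_search(items: Sequence[int], key: int) -> Optional[int]:
    """Return the index of key in the ascending sequence items, or None if absent."""
    low, high = 0, len(items) - 1
    while low <= high:
        mid = (low + high) // 2      # position of the middle record
        if items[mid] == key:
            return mid               # keys are equal: the search succeeds
        if items[mid] > key:
            high = mid - 1           # middle key is larger: continue in the front sub-table
        else:
            low = mid + 1            # middle key is smaller: continue in the back sub-table
    return None                      # the sub-table became empty: key is not present


if __name__ == "__main__":
    print(binary_search([1, 3, 5, 7, 9], 7))
```

Because each comparison halves the remaining sub-table, the search needs at most about log2(n) + 1 comparisons, which is why the method pays off on lists that are searched often but changed rarely.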

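- BigDecimal in Java

bijian1013

java, BigDecimal

During project development we ran into a loss-of-precision problem and, after some research, solved it with BigDecimal. The following article on BigDecimal offers an approach and methods well worth learning from, so I am reposting it.

Original: http://blog.csdn.net/ugg/article/de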

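- The shell echo command in detail

daizj

echo, shell

The shell echo command

The shell echo command is similar to PHP's echo: both are used to output strings. Command format:

echo string

You can use echo for more complex output formatting. 1. Displaying a plain string:

echo "It is a test"

The double quotes here can be omitted entirely; the following command has the same effect as the example above:

echo It is a test

2. Displaying escaped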

- Simple Oracle DBA operations

周凡杨

oracle dba sql

-- SQL statements with the most executions

select sql_text,executions from (

select sql_text,executions from v$sqlarea order by executions desc

) where rownum<81;


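- Drawing and repainting

朱辉辉33

games

My first encounter with repainting was when writing a Gomoku mini-game. The board in the game was drawn with lines, and those are not part of the system's built-in repainting, so when the window was moved the board was not redrawn. We therefore have to override the system's paint method.

When overriding the system's paint method, be sure to call the parent class's paint method, that is, add super.paint(g). If you do not, the override replaces the parent's painting entirely, and since the parent's paint method is what draws the canvas, this means we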

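- A first taste of threads

西蜀石兰

threads

I have always felt that multithreading is a watershed in learning Java: you have only really got started once you understand it.

When I read the multithreading chapter of 《编程思想》 earlier, I was lost in the fog. I knew which methods the thread class had but still did not know what a thread actually is. The books all say that a thread is a part of a process and shares the process's resources, but what does that have to do with multithreaded programming?

A thread is really just a user-defined task. Do not overemphasize a thread's other properties and overlook its most basic one.

You can define your own task in the thread class's run() method, just like an ordinary Ja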

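- Configuring passwordless login between nodes in a Linux cluster

林鹤霄

linux

Configuring passwordless SSH login

1. Generate the private and public keys: ssh-keygen -t rsa

2. When prompted for input, enter nothing; after pressing Enter three times, two new files appear in the .ssh folder under ~: id_rsa and id_rsa.pub

id_rsa is the private key and id_rsa.pub is the public key; data encrypted with the public key can only be decrypted with the private key c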

- mysql : Lock wait timeout exceeded; try restarting transaction

aigo

mysql

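Original: http://www.cnblogs.com/freeliver54/archive/2010/09/30/1839042.html

The cause is that, when you use InnoDB tables,

the default parameter innodb_lock_wait_timeout sets the lock wait time to 50s,

and because some lock wait exceeded this time, the error is reported.

You can increase this time, or optimize the storage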

- Socket programming: a basic chat implementation.

alleni123

socket

public class Server

{

// stores all clients that have connected

private List<ServerThread> clients;

public static void main(String[] args)

{

Server s = new Server();

s.startServer(9988);

}

publi

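- The multithreaded listener/event pattern (a simple example)

百合不是茶

threads, listener pattern

The event listener pattern with multiple threads

The event listener pattern is often used together with multithreading. In a multithreaded program, how do I know what my thread is currently executing? The event listener pattern can tell you.

Creating a multithreaded event listener pattern. Approach:

1. Create and start the thread, and set a flag at the place where the thread is created

2. Create a queue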

- Spring's InitializingBean interface

bijian1013

java, spring

The source of Spring's transaction class TransactionTemplate is as follows:

public class TransactionTemplate extends DefaultTransactionDefinition implements TransactionOperations, InitializingBean{

...

}

TransactionTemplate extends DefaultT

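- Finding which users have been granted privileges on a table in Oracle

bijian1013

oracle, databases, privileges

SQL for finding which users a table's privileges have been granted to in Oracle, kept here for future reference.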

select t.table_name as "表名",

t.grantee as "被授权的属组",

t.owner as "对象所在的属组"

- [Struts2 part 5] Passing parameter values in Struts2

bit1129

struts2

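Three cases of passing parameter values in Struts2

1. Request parameters are bound to the Action's instance fields

2. The Action passes values to a forwarded view

3. The Action passes values to a redirected view

I. Binding request parameters to the Action's instance fields, and passing values from the Action to a forwarded view

Struts can automatically bind request parameters from the request URL, or parameters from a submitted form, to the instance fields defined on the Action; the binding rules use the OGNL expression language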

- [Kafka part 14] About auto.offset.reset [Q/A]

bit1129

kafka

I got several questions about auto.offset.reset. This configuration parameter governs how a consumer reads messages from Kafka when there is no initial offset in ZooKeeper or

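- nginx gzip compression configuration

ronin47

nginx, gzip, compression examples

nginx gzip compression configuration

As nginx has grown, more and more websites use it, so optimizing nginx matters more and more. Today we look at how nginx's gzip compression actually works.

gzip (GNU zip) is a compression technique. After gzip compression, a page can shrink to 30% of its original size or even less, so that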

- java-13. Given a singly linked list, output the k-th node from the end

bylijinnan

java

two cursors.

Make the first cursor go K steps first.

/*

* Problem 13: given a singly linked list, output the k-th node from the end

*/

public void displayKthItemsBackWard(ListNode head,int k){

ListNode p1=head,p2=head;
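The "two cursors" idea mentioned above can be sketched as follows. The excerpt's Java code is truncated, so this is an independent illustration in Python rather than the author's method; the `ListNode` class and the `kth_from_end` function here are hypothetical stand-ins:

```python
class ListNode:
    """Minimal singly linked list node (a stand-in for the excerpt's ListNode)."""

    def __init__(self, value, next=None):
        self.value = value
        self.next = next


def kth_from_end(head, k):
    """Return the k-th node from the end (k = 1 is the last node), or None."""
    if k <= 0:
        return None
    lead = head
    for _ in range(k):          # move the first cursor k steps ahead
        if lead is None:
            return None         # the list has fewer than k nodes
        lead = lead.next
    trail = head
    while lead is not None:     # advance both cursors until the first falls off the end
        lead = lead.next
        trail = trail.next
    return trail


if __name__ == "__main__":
    head = ListNode(1, ListNode(2, ListNode(3, ListNode(4, ListNode(5)))))
    print(kth_from_end(head, 2).value)
```

Keeping the two cursors exactly k nodes apart means the list is traversed once and its length never has to be computed beforehand.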

- Studying the Spring source: JdbcTemplate queryForObject

bylijinnan

java, spring

JdbcTemplate has two queryForObject methods that are easy to confuse:

1.

Object queryForObject(String sql, Object[] args, Class requiredType)

2.

Object queryForObject(String sql, Object[] args, RowMapper rowMapper)

The first method only queries

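- [Ice age] What kind of technology do we need in an ice age?

comsci

technology

Looking at the weather over in the United States... I have a feeling... are we entering a Little Ice Age?

In a Little Ice Age... our outdoor activities would surely run into many problems... we would spend a lot more time indoors... how to stay indoors without getting bored... how to keep rooms warm with the least electricity... all of this needs technical solutions...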

- Getting the browser type in JS

cuityang

js, browsers

Get the iPhone and APK download URLs according to the browser

<!DOCTYPE html>

<html>

<head>

<meta charset="utf-8" content="text/html"/>

<meta name=

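- SOCKS5 in C# explained (repost)

dalan_123

socket, C#

http://www.cnblogs.com/zhujiechang/archive/2008/10/21/1316308.html This mainly covers using .NET for client communication through a proxy based on the SOCKS5 protocol; SOCKS4 is implemented similarly. Note that this is not about implementing a proxy server in C#: a proxy server has to implement many protocols, which is a headache, and there are plenty of ready-made proxy servers available with good performance,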

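- Ops: a roundup of CentOS problems

dcj3sjt126com

cloud hosts

I. Why an sh script does not run

There are only two reasons an sh script does not run

1. Insufficient permissions

2. Paths in the sh script are not written in full.

II. Fixing "You have new mail in /var/spool/mail/root"

Edit the configuration file /usr/share/logwatch/default.conf/logwatch.conf

MailTo =

MailFrom

III. Checking the number of connections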

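- Notes on preventing injection attacks in Yii

dcj3sjt126com

sql, web, security, yii

If a site's forms have injection vulnerabilities, all user input must be filtered and checked, using regular expressions or direct character checks. Most fields should allow only letters and digits and reject all other characters. For forms with complex content, HTML and script symbols should be escaped, especially <, >, ', ", and &. Here is an escape reference table: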

http://blog.csdn.net/xinzhu1990/articl

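- Introduction to MongoDB [1]

eksliang

mongodb, introduction to MongoDB

Introduction to MongoDB

When reposting, please credit the source: http://eksliang.iteye.com/blog/2173288 1.1 Easy to use

MongoDB is a document-oriented database, not a relational one. Compared with relational databases, a document-oriented database no longer has the concept of rows; it uses the more flexible "document" model instead.

In addition,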

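- Installing and testing ZooKeeper on Windows: getting started

greemranqq

zookeeper, installation, distributed systems

I. Preface

Here is my understanding of ZooKeeper: ZooKeeper is a management tool for storing service registration information. All right, that is very abstract, so let's take an example.

Example 1:

Suppose I am the owner of a KTV business with five KTV venues at once; I certainly have to keep a constant eye on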

- Why use transactions in Spring (2: annotation-based implementation)

ihuning

spring

Annotation-based Spring transactions

1. Dependencies:

1.1 Spring JARs:

spring-beans-4.0.0.RELEASE.jar

spring-context-4.0.0.

- iOS App Launch Option

啸笑天

option

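When an iOS app launches, application:didFinishLaunchingWithOptions: is always called. Its second parameter, launchOptions, is an NSDictionary object that stores the reason the app was launched.

For the possible keys in launchOptions, see the Launch Options Keys section of the UIApplication Class Reference.

1. If the user directly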

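- The difference between the JDK and the JRE

macroli

java, jvm, jdk

Simply put, the JDK is an SDK for developers; it provides both the Java development environment and the runtime environment. SDK stands for Software Development Kit, generally meaning a software development package, which can include libraries, compilers, and so on.

JDK stands for Java Development Kit. JRE stands for Java Runtime Environment, the environment in which Java programs run; it is aimed at users of Java programs rather than developers. If you install the JDK, you will find that you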

- Updates were rejected because the tip of your current branch is behind

qiaolevip

never stop learning, improve a little every day, 众观千象, git

$ git push joe prod-2295-1

To

[email protected]:joe.le/dr-frontend.git

! [rejected] prod-2295-1 -> prod-2295-1 (non-fast-forward)

error: failed to push some refs to '

[email protected]

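- [Learning Hive together] Part 14: Hive metadata table structures in detail

superlxw1234

hive, hive metadata structure

Keywords: Hive metadata, Hive metadata table structure

As described earlier in "[Learning Hive together] Part 1: Hive overview, what is Hive", Hive maintains its own set of metadata. When a user runs an HQL query, Hive first has to consult the metadata to translate the HQL into MapReduce jobs for execution.

This article introduces some of the important table structures in the Hive metadata and their uses, taking Hive 0.13 as the example.

At the end of the article, an example is used to give a complete picture of,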

- Spring 3.2.14, 4.1.7, and 4.2.RC2 released

wiselyman

Spring 3

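Spring 3.2.14, 4.1.7, and 4.2.RC2 were released on June 30.

Spring 3.2.14 is a maintenance release (the maintenance period ends on 2016-12-31); further maintenance releases will follow as needs and bugs require. The Spring team now strongly recommends upgrading the Spring framework to 4.1.7 or to the upcoming 4.2.

Spring 4.1.7 mainly includes the following updates.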

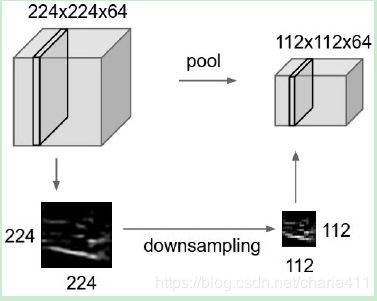

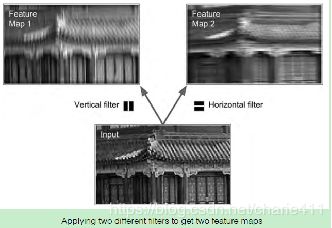

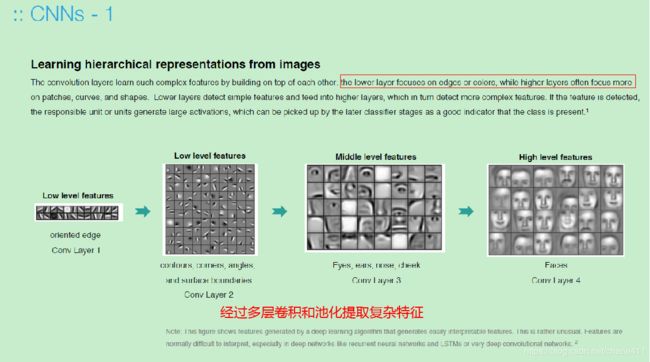

3. The job of the max pooling layer is downsizing. The multiple kernels of a convolutional layer extract different features, and max pooling shrinks the feature maps while preserving those features as far as possible.
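To make the downsizing concrete, here is a minimal sketch of max pooling over a single feature map, written in plain Python without any deep learning framework. The 2x2 window, the stride of 2, and the function name `max_pool_2d` are assumptions chosen for illustration; the text above does not specify them:

```python
def max_pool_2d(feature_map, size=2, stride=2):
    """Apply max pooling to a 2D list of numbers and return the smaller map."""
    rows, cols = len(feature_map), len(feature_map[0])
    pooled = []
    for r in range(0, rows - size + 1, stride):
        out_row = []
        for c in range(0, cols - size + 1, stride):
            # Collect the values under the current window and keep the largest one.
            window = [feature_map[r + i][c + j]
                      for i in range(size) for j in range(size)]
            out_row.append(max(window))
        pooled.append(out_row)
    return pooled


if __name__ == "__main__":
    fmap = [
        [1, 3, 2, 0],
        [4, 6, 1, 1],
        [0, 2, 9, 8],
        [1, 1, 7, 5],
    ]
    print(max_pool_2d(fmap))  # a 4x4 map becomes a 2x2 map
```

With these settings each output value is the strongest activation in its 2x2 region, so the map shrinks to a quarter of its area while the most prominent responses of each kernel survive.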