Cloud Servers: Deploying a Cross-Region Cluster with Kubernetes (K8S), Networking Part

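Important!!!

Note: this article installs everything from binaries, which is very tedious, so I do not recommend this approach. Use the simpler kubeadm installation instead; for details see

Cloud Servers: Deploying a Cross-Region Cluster with Kubernetes (K8S), kubeadm Installation, Complete Guide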

1. Environment

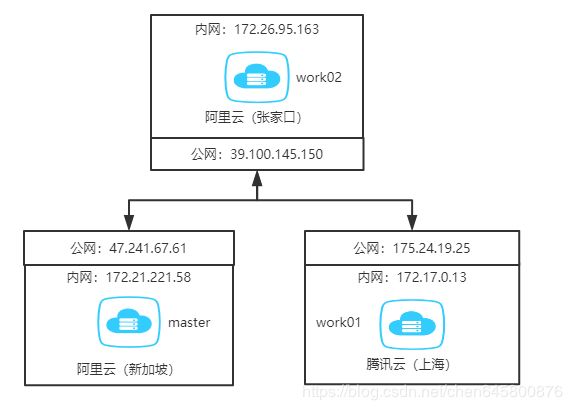

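I had bought a student instance on Tencent Cloud and later another one on Alibaba Cloud, mainly because they were cheap; since the network was poor, I also bought an overseas preemptible instance. When I set out to deploy a k8s cluster on them, I found that the address bound to each NIC is not the public IP, yet applications can only bind to addresses that are actually on a NIC. The private IPs cannot reach each other either, which differs from what the tutorials online assume. I was stumped for a while, until I read the documentation and found that this kind of deployment is officially supported: it only takes a few configuration changes.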

1.1 k8s version list

| Software | Version |
|---|---|
| CentOS | 8.0 |
| Kubernetes | v1.19.0-alpha.1 |
| Docker | 19.03.8 |
| Etcd | v3.4.7 |
| Flannel | v0.12.0 |

| Cloud provider | Hostname | Public IP / private IP | Components | Recommended spec |
|---|---|---|---|---|
| Alibaba Cloud | master | 47.241.67.61 / 172.21.221.58 | kube-apiserver kube-controller-manager kube-scheduler etcd flannel docker | 2C2G |
| Alibaba Cloud | work02 | 39.100.145.150 / 172.26.95.163 | kubelet kube-proxy docker flannel etcd | 2C1G |
| Tencent Cloud | work01 | 175.24.19.25 / 172.17.0.13 | kubelet kube-proxy docker flannel etcd | 1C2G |

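This is for personal practice, so I used the latest version of everything and deployed from downloaded binaries.

I consulted many people's tutorials during the deployment:

- ETCD cluster official documentation
- flannel configuration
- kubernetes1.13.1+etcd3.3.10+flanneld0.10 cluster deployment
- Docker CE mirror
- CentOS 8.0 docker installation error: Problem: package docker-ce-3:19.03.8-3.el7.x86_64 requires containerd.io >= 1.2.2-3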

1.1 Set the hostname

- master

hostnamectl set-hostname master

- work01

hostnamectl set-hostname work01

- work02

hostnamectl set-hostname work02

Finally, log in again so that the shell prompt shows the new hostname.

1.2 Edit hosts

Run on master, work01 and work02:
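The host entries themselves are not shown in this listing. As a minimal sketch, assuming the public IPs from the table in section 1.1 are the ones to use (the private networks of the two providers cannot reach each other), the entries could be appended like this. The helper names `render_hosts` and `append_hosts` are hypothetical.

```shell
#!/bin/sh
# Sketch only: the IP-to-name pairs come from the table in section 1.1.
# Assumption: public IPs are used, because the private networks are not
# routable between the two providers.
render_hosts() {
  cat <<'EOF'
47.241.67.61 master
175.24.19.25 work01
39.100.145.150 work02
EOF
}

# append_hosts FILE: add each entry to FILE unless the exact line is
# already present, so running it twice does not create duplicates.
append_hosts() {
  render_hosts | while IFS= read -r entry; do
    grep -qxF "$entry" "$1" || printf '%s\n' "$entry" >> "$1"
  done
}

# Usage, as root on every node:
#   append_hosts /etc/hosts
```

Checking for the exact line before appending keeps the step safe to repeat when a node is rebuilt.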

cat >> /etc/hosts <

1.3 Disable SELinux

Edit /etc/selinux/config and set SELINUX to disabled (the change takes effect after a reboot):

# This file controls the state of SELinux on the system.

# SELINUX= can take one of these three values:

# enforcing - SELinux security policy is enforced.

# permissive - SELinux prints warnings instead of enforcing.

# disabled - No SELinux policy is loaded.

SELINUX=disabled
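For anyone who prefers not to edit the file by hand, here is a small sketch of the same change with sed. The function name `disable_selinux_in` and the temporary file path in the usage note are hypothetical; only the `SELINUX=` line is rewritten.

```shell
#!/bin/sh
# disable_selinux_in FILE: print FILE with the active SELINUX= line set
# to disabled. Comment lines and SELINUXTYPE= are left untouched, because
# the pattern requires "=" directly after "SELINUX" at the line start.
disable_selinux_in() {
  sed 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Usage, as root (the temp file name is only an example):
#   disable_selinux_in /etc/selinux/config > /tmp/selinux.new \
#     && cat /tmp/selinux.new > /etc/selinux/config
#   setenforce 0   # permissive for the running system until the reboot
```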

1.4 Disable the firewall

Cloud servers come with the firewall already disabled by default, so there is nothing to do here.

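2. ETCD cluster deployment

2.1 Create the ETCD certificates

I am still a little hazy on certificates, so I simply followed this tutorial, which explains every step in detail:

kubernetes1.13.1+etcd3.3.10+flanneld0.10 cluster deployment

1) Install cfssl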

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl_linux-amd64 cfssljson_linux-amd64 cfssl-certinfo_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

2) Create the directories

mkdir /k8s/etcd/{bin,cfg,ssl} -p

mkdir /k8s/kubernetes/{bin,cfg,ssl} -p

cd /k8s/etcd/ssl/

3) ETCD CA configuration

cat << EOF | tee ca-config.json
{
  "signing": {
    "default": {
      "expiry": "87600h"
    },
    "profiles": {
      "etcd": {
        "expiry": "87600h",
        "usages": [
          "signing",
          "key encipherment",
          "server auth",
          "client auth"
        ]
      }
    }
  }
}
EOF

4) ETCD CA certificate

cat << EOF | tee ca-csr.json
{
  "CN": "etcd CA",
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF

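5) ETCD server certificate

I do not fully understand this part, so I simply listed every IP to save trouble. (The hosts list becomes the certificate's subject alternative names, so each address that peers or clients use to reach a member has to appear in it.)

cat << EOF | tee server-csr.json
{
  "CN": "etcd",
  "hosts": [
    "47.241.67.61",
    "39.100.145.150",
    "175.24.19.25",
    "172.21.221.58",
    "172.17.0.13",
    "172.26.95.163",
    "127.0.0.1"
  ],
  "key": {
    "algo": "rsa",
    "size": 2048
  },
  "names": [
    {
      "C": "CN",
      "L": "Beijing",
      "ST": "Beijing"
    }
  ]
}
EOF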

6) Generate the ETCD CA certificate and private key

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

7) Generate the ETCD server certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=etcd server-csr.json | cfssljson -bare server

8) Distribute the generated certificates to the other nodes
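No command is given for this step. A minimal sketch follows, assuming root SSH access from master, that the names work01 and work02 resolve, and that /k8s/etcd/ssl already exists on the targets. Only the three files that the ETCD configuration references later are copied. The `distribute_certs` function and the `DRY_RUN` switch are hypothetical; by default the commands are only printed.

```shell
#!/bin/sh
# Sketch: copy the CA certificate and the server certificate/key from
# master to the other nodes. Assumptions: root SSH access, resolvable
# hostnames, and an existing /k8s/etcd/ssl directory on each target.
# With DRY_RUN=1 (the default here) the scp commands are only printed.
DRY_RUN=${DRY_RUN:-1}

distribute_certs() {
  for node in work01 work02; do
    cmd="scp /k8s/etcd/ssl/ca.pem /k8s/etcd/ssl/server.pem /k8s/etcd/ssl/server-key.pem root@$node:/k8s/etcd/ssl/"
    if [ "$DRY_RUN" = "1" ]; then
      echo "$cmd"
    else
      $cmd
    fi
  done
}

distribute_certs
```

Run it with `DRY_RUN=0` once the printed commands look right. The CA private key (ca-key.pem) is deliberately left on master.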

2.2 Download ETCD

1) Download

[root@master ~]# wget https://github.com/etcd-io/etcd/releases/download/v3.4.7/etcd-v3.4.7-linux-amd64.tar.gz

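I strongly recommend getting an overseas server: the download is blazingly fast.

2) Extract and copy to the target directory (the path is up to you)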

[root@master ~]# tar -xvf etcd-v3.4.7-linux-amd64.tar.gz

[root@master ~]# cd etcd-v3.4.7-linux-amd64/

[root@master etcd-v3.4.7-linux-amd64]# cp etcd etcdctl /k8s/etcd/bin/

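3) Send the executables to the other nodes (create the directories on each node first)

[root@master ~]# scp /k8s/etcd/bin/etcd /k8s/etcd/bin/etcdctl root@work01:/k8s/etcd/bin/

[root@master ~]# scp /k8s/etcd/bin/etcd /k8s/etcd/bin/etcdctl root@work02:/k8s/etcd/bin/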

2.3 ETCD configuration (important)

Here I use the public discovery service provided by ETCD, which saves a bit of configuration.

1) Get a private discovery URL

[root@master ~]# curl https://discovery.etcd.io/new?size=3

https://discovery.etcd.io/4636d0525ea552bb567fa3f8c59312f8

$ curl https://discovery.etcd.io/new?size=3

https://discovery.etcd.io/3e86b59982e49066c5d813af1c2e2579cbf573de

The size=3 suffix sets the initial cluster size to 3.

2) Add the ETCD configuration file (on all three nodes)

[root@master ~]# mkdir -p /data1/etcd

[root@master ~]# vim /k8s/etcd/cfg/etcd.conf

master configuration

#[Member]

ETCD_NAME="etcd01"

ETCD_DATA_DIR="/data1/etcd"

ETCDCTL_API="2"

ETCD_ENABLE_V2="true"

ETCD_LISTEN_PEER_URLS="https://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="https://0.0.0.0:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://47.241.67.61:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://47.241.67.61:2379,https://127.0.0.1:2379"

#[discovery]

ETCD_DISCOVERY="https://discovery.etcd.io/4636d0525ea552bb567fa3f8c59312f8"

ETCD_CERT_FILE="/k8s/etcd/ssl/server.pem"

ETCD_KEY_FILE="/k8s/etcd/ssl/server-key.pem"

ETCD_TRUSTED_CA_FILE="/k8s/etcd/ssl/ca.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_PEER_CERT_FILE="/k8s/etcd/ssl/server.pem"

ETCD_PEER_KEY_FILE="/k8s/etcd/ssl/server-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/k8s/etcd/ssl/ca.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

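Note: be sure to add ETCDCTL_API="2" and ETCD_ENABLE_V2="true". flannel v0.12.0 does not support the etcd v3 API, and etcd v3.4 no longer enables the v2 API by default, so it has to be switched on in the configuration. ETCD_ENABLE_V2 is the setting the server itself reads; ETCDCTL_API only affects the etcdctl client.

work01 configuration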

#[Member]

ETCD_NAME="etcd02"

ETCD_DATA_DIR="/data1/etcd"

ETCD_LISTEN_PEER_URLS="https://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="https://0.0.0.0:2379"

ETCDCTL_API="2"

ETCD_ENABLE_V2="true"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://175.24.19.25:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://175.24.19.25:2379,https://127.0.0.1:2379"

#[discovery]

ETCD_DISCOVERY="https://discovery.etcd.io/4636d0525ea552bb567fa3f8c59312f8"

ETCD_CERT_FILE="/k8s/etcd/ssl/server.pem"

ETCD_KEY_FILE="/k8s/etcd/ssl/server-key.pem"

ETCD_TRUSTED_CA_FILE="/k8s/etcd/ssl/ca.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_PEER_CERT_FILE="/k8s/etcd/ssl/server.pem"

ETCD_PEER_KEY_FILE="/k8s/etcd/ssl/server-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/k8s/etcd/ssl/ca.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"

work02 configuration

#[Member]

ETCD_NAME="etcd03"

ETCD_DATA_DIR="/data1/etcd"

ETCD_LISTEN_PEER_URLS="https://0.0.0.0:2380"

ETCD_LISTEN_CLIENT_URLS="https://0.0.0.0:2379"

ETCDCTL_API="2"

ETCD_ENABLE_V2="true"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://39.100.145.150:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://39.100.145.150:2379,https://127.0.0.1:2379"

#[discovery]

ETCD_DISCOVERY="https://discovery.etcd.io/4636d0525ea552bb567fa3f8c59312f8"

#[Security]

ETCD_CERT_FILE="/k8s/etcd/ssl/server.pem"

ETCD_KEY_FILE="/k8s/etcd/ssl/server-key.pem"

ETCD_TRUSTED_CA_FILE="/k8s/etcd/ssl/ca.pem"

ETCD_CLIENT_CERT_AUTH="true"

ETCD_PEER_CERT_FILE="/k8s/etcd/ssl/server.pem"

ETCD_PEER_KEY_FILE="/k8s/etcd/ssl/server-key.pem"

ETCD_PEER_TRUSTED_CA_FILE="/k8s/etcd/ssl/ca.pem"

ETCD_PEER_CLIENT_CERT_AUTH="true"
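The three files above differ only in the member name and the public IP, so they can be generated instead of being typed three times. A sketch under that assumption: `render_etcd_conf` is a hypothetical helper, and the variable names and paths are copied from the configurations above.

```shell
#!/bin/sh
# render_etcd_conf NAME PUBLIC_IP DISCOVERY_URL
# Prints an etcd.conf with the same settings as the hand-written files;
# only the member name, the advertised IP and the discovery URL vary.
render_etcd_conf() {
  cat <<EOF
#[Member]
ETCD_NAME="$1"
ETCD_DATA_DIR="/data1/etcd"
ETCDCTL_API="2"
ETCD_ENABLE_V2="true"
ETCD_LISTEN_PEER_URLS="https://0.0.0.0:2380"
ETCD_LISTEN_CLIENT_URLS="https://0.0.0.0:2379"
#[Clustering]
ETCD_INITIAL_ADVERTISE_PEER_URLS="https://$2:2380"
ETCD_ADVERTISE_CLIENT_URLS="https://$2:2379,https://127.0.0.1:2379"
#[discovery]
ETCD_DISCOVERY="$3"
#[Security]
ETCD_CERT_FILE="/k8s/etcd/ssl/server.pem"
ETCD_KEY_FILE="/k8s/etcd/ssl/server-key.pem"
ETCD_TRUSTED_CA_FILE="/k8s/etcd/ssl/ca.pem"
ETCD_CLIENT_CERT_AUTH="true"
ETCD_PEER_CERT_FILE="/k8s/etcd/ssl/server.pem"
ETCD_PEER_KEY_FILE="/k8s/etcd/ssl/server-key.pem"
ETCD_PEER_TRUSTED_CA_FILE="/k8s/etcd/ssl/ca.pem"
ETCD_PEER_CLIENT_CERT_AUTH="true"
EOF
}

# Usage on work01, for example:
#   render_etcd_conf etcd02 175.24.19.25 \
#     https://discovery.etcd.io/4636d0525ea552bb567fa3f8c59312f8 \
#     > /k8s/etcd/cfg/etcd.conf
```

The listen URLs stay on 0.0.0.0 because the public IP is not bound to any NIC; only the advertised URLs carry it.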

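3) Run ETCD under systemd (the unit is identical on master, work01 and work02)

Newer versions read their configuration directly from the environment variables loaded through EnvironmentFile, so no arguments need to be added to ExecStart.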

[root@master ~]# vim /usr/lib/systemd/system/etcd.service

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

WorkingDirectory=/data1/etcd/

EnvironmentFile=-/k8s/etcd/cfg/etcd.conf

# set GOMAXPROCS to number of processors

ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /k8s/etcd/bin/etcd"

Restart=on-failure

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

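4) Open ports 2379 and 2380 in the cloud console **(important)**

5) Start ETCD

Start all three nodes at roughly the same time: the first start has to form the cluster, and a member that waits too long for the others times out.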

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd

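6) Check the cluster status

The etcdctl shipped with v3.4 defaults to the v3 API, while --ca-file and cluster-health belong to the v2 API, so ETCDCTL_API=2 is set for this command.

[root@master ~]# ETCDCTL_API=2 /k8s/etcd/bin/etcdctl --ca-file=/k8s/etcd/ssl/ca.pem --cert-file=/k8s/etcd/ssl/server.pem --key-file=/k8s/etcd/ssl/server-key.pem --endpoints="https://47.241.67.61:2379,https://175.24.19.25:2379,https://39.100.145.150:2379" cluster-health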

member 55fbdb6e3ad20da8 is healthy: got healthy result from https://127.0.0.1:2379

member bdadcd6be126f0f2 is healthy: got healthy result from https://127.0.0.1:2379

member ca87f0191f2c8efa is healthy: got healthy result from https://127.0.0.1:2379

cluster is healthy

If it prints cluster is healthy, the cluster is up.
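The same comma-separated endpoint list is needed again for flannel, so it may be worth building it once from the three public IPs. This is a sketch with a hypothetical helper name, `etcd_endpoints`; the port 2379 and the https scheme are taken from the command above.

```shell
#!/bin/sh
# etcd_endpoints IP...: join the given IPs into the comma-separated
# https://IP:2379 list that --endpoints expects.
etcd_endpoints() {
  out=""
  for ip in "$@"; do
    # ${out:+$out,} adds a comma only when out is already non-empty.
    out="${out:+$out,}https://$ip:2379"
  done
  printf '%s\n' "$out"
}

ENDPOINTS=$(etcd_endpoints 47.241.67.61 175.24.19.25 39.100.145.150)
echo "$ENDPOINTS"
```

The resulting `$ENDPOINTS` can then be passed as `--endpoints="$ENDPOINTS"` to etcdctl.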

3. Deploying Flannel

3.1 Download and install

1) Download the files

[root@master ~]# wget https://github.com/coreos/flannel/releases/download/v0.12.0/flannel-v0.12.0-linux-amd64.tar.gz

2) Extract and move the files into place (the other nodes need them too)

[root@master ~]# tar -zxvf flannel-v0.12.0-linux-amd64.tar.gz

[root@master ~]# mkdir -p /k8s/flannel/{bin,cfg}

[root@master ~]# mv flanneld mk-docker-opts.sh /k8s/flannel/bin/

3) Add the flanneld configuration

[root@master ~]# vim /k8s/flannel/cfg/flannel.conf

master configuration

#[flannel config]

FLANNELD_PUBLIC_IP="47.241.67.61"

FLANNELD_IFACE="eth0"

#[etcd]

FLANNELD_ETCD_ENDPOINTS="https://47.241.67.61:2379,https://175.24.19.25:2379,https://39.100.145.150:2379"

FLANNELD_ETCD_KEYFILE="/k8s/etcd/ssl/server-key.pem"

FLANNELD_ETCD_CERTFILE="/k8s/etcd/ssl/server.pem"

FLANNELD_ETCD_CAFILE="/k8s/etcd/ssl/ca.pem"

FLANNELD_IP_MASQ=true

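The following two lines are the crucial part (this is officially supported): set FLANNELD_PUBLIC_IP to the public IP, and FLANNELD_IFACE to the name of the private NIC or to the private IP.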

FLANNELD_PUBLIC_IP="47.241.67.61"

FLANNELD_IFACE="eth0"

work01 configuration

#[flannel config]

FLANNELD_PUBLIC_IP="175.24.19.25"

FLANNELD_IFACE="eth0"

#[etcd]

FLANNELD_ETCD_ENDPOINTS="https://47.241.67.61:2379,https://175.24.19.25:2379,https://39.100.145.150:2379"

FLANNELD_ETCD_KEYFILE="/k8s/etcd/ssl/server-key.pem"

FLANNELD_ETCD_CERTFILE="/k8s/etcd/ssl/server.pem"

FLANNELD_ETCD_CAFILE="/k8s/etcd/ssl/ca.pem"

FLANNELD_IP_MASQ=true

work02 configuration

#[flannel config]

FLANNELD_PUBLIC_IP="39.100.145.150"

FLANNELD_IFACE="eth0"

#[etcd]

FLANNELD_ETCD_ENDPOINTS="https://47.241.67.61:2379,https://175.24.19.25:2379,https://39.100.145.150:2379"

FLANNELD_ETCD_KEYFILE="/k8s/etcd/ssl/server-key.pem"

FLANNELD_ETCD_CERTFILE="/k8s/etcd/ssl/server.pem"

FLANNELD_ETCD_CAFILE="/k8s/etcd/ssl/ca.pem"

FLANNELD_IP_MASQ=true
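An easy mistake in these files is to put the private address into FLANNELD_PUBLIC_IP. The following sketch checks a flannel.conf for that, assuming the address is IPv4 and the file uses the quoted form shown above. Both function names are hypothetical.

```shell
#!/bin/sh
# is_private_ipv4 ADDR: succeed if ADDR lies in an RFC 1918 range
# (10.0.0.0/8, 172.16.0.0/12 or 192.168.0.0/16).
is_private_ipv4() {
  case "$1" in
    10.*|192.168.*) return 0 ;;
    172.*)
      second=${1#172.}        # drop the first octet
      second=${second%%.*}    # keep only the second octet
      [ "$second" -ge 16 ] && [ "$second" -le 31 ] ;;
    *) return 1 ;;
  esac
}

# check_public_ip FILE: report whether FLANNELD_PUBLIC_IP in FILE looks
# like a public address. Returns 2 when the variable is missing.
check_public_ip() {
  ip=$(sed -n 's/^FLANNELD_PUBLIC_IP="\{0,1\}\([0-9.]*\)"\{0,1\}$/\1/p' "$1")
  if [ -z "$ip" ]; then
    echo "FLANNELD_PUBLIC_IP is not set"
    return 2
  fi
  if is_private_ipv4 "$ip"; then
    echo "warning: FLANNELD_PUBLIC_IP=$ip is a private address"
    return 1
  fi
  echo "ok: FLANNELD_PUBLIC_IP=$ip"
}

# Usage: check_public_ip /k8s/flannel/cfg/flannel.conf
```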

3.3 Add to system startup

The unit is the same on all three nodes and can be copied as-is.

[root@master ~]# vim /usr/lib/systemd/system/flanneld.service

#/k8s/flannel/cfg/flannel.conf

[Unit]

Description=Flanneld overlay address etc agent

After=network-online.target network.target

#Before=docker.service

[Service]

Type=notify

EnvironmentFile=-/k8s/flannel/cfg/flannel.conf

ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /k8s/flannel/bin/flanneld"

ExecStartPost=/k8s/flannel/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

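Likewise, newer flannel versions read configuration passed in through environment variables, so there is no need to append arguments.

Note that the line ordering flanneld before docker is commented out for now and will be restored later; the goal here is only to bring the flannel network up quickly for testing.

3.4 Open port 8472 **(important)**

vxlan is used here, and by default it runs over UDP port 8472.

Be sure to open the port in the cloud console; this is where I got stuck.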

3.5 Add the network configuration to the ETCD cluster

[root@master ~]# ETCDCTL_API=2 /k8s/etcd/bin/etcdctl --ca-file=/k8s/etcd/ssl/ca.pem --cert-file=/k8s/etcd/ssl/server.pem --key-file=/k8s/etcd/ssl/server-key.pem --endpoints="https://47.241.67.61:2379,https://175.24.19.25:2379,https://39.100.145.150:2379" set /coreos.com/network/config '{"Network":"10.254.0.0/16","Backend":{"Type":"vxlan"}}'
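Each node leases one subnet out of this network. As a quick sanity check on the sizes, here is the arithmetic, assuming flannel's default SubnetLen of 24 applies because the JSON above does not set it.

```shell
#!/bin/sh
# Assumption: flannel's default SubnetLen of 24 is in effect, since the
# config written to etcd does not set it.
network_prefix=16   # from "Network":"10.254.0.0/16"
subnet_len=24       # flannel default when SubnetLen is omitted

# Number of per-node subnets that fit into the network.
subnets=$(( 1 << (subnet_len - network_prefix) ))
# Addresses in each node subnet, counting network and broadcast addresses.
addresses=$(( 1 << (32 - subnet_len) ))

echo "node subnets: $subnets, addresses per subnet: $addresses"
```

So a /16 split into /24 leases leaves plenty of room for three nodes.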

3.6 Start flannel

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl start flanneld

3.7 Verify

Once flannel is running, ifconfig shows the newly created interface.

master

[root@master ~]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.21.221.58 netmask 255.255.240.0 broadcast 172.21.223.255

inet6 fe80::216:3eff:fe02:c141 prefixlen 64 scopeid 0x20<link>

ether 00:16:3e:02:c1:41 txqueuelen 1000 (Ethernet)

RX packets 17773699 bytes 3481620865 (3.2 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 18485980 bytes 3378770561 (3.1 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.254.5.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::e416:57ff:fe05:4590 prefixlen 64 scopeid 0x20<link>

ether e6:16:57:05:45:90 txqueuelen 0 (Ethernet)

RX packets 184 bytes 20342 (19.8 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 271 bytes 21777 (21.2 KiB)

TX errors 0 dropped 76 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 5551843 bytes 1164398442 (1.0 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 5551843 bytes 1164398442 (1.0 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

work01

[root@work01 ~]# ifconfig

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.17.0.13 netmask 255.255.240.0 broadcast 172.17.15.255

inet6 fe80::5054:ff:fe74:1c2c prefixlen 64 scopeid 0x20<link>

ether 52:54:00:74:1c:2c txqueuelen 1000 (Ethernet)

RX packets 11260582 bytes 1955580984 (1.8 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 10765978 bytes 1595473013 (1.4 GiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1450

inet 10.254.20.0 netmask 255.255.255.255 broadcast 0.0.0.0

inet6 fe80::ecad:57ff:febf:9ca6 prefixlen 64 scopeid 0x20<link>

ether ee:ad:57:bf:9c:a6 txqueuelen 0 (Ethernet)

RX packets 177 bytes 13893 (13.5 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 216 bytes 23030 (22.4 KiB)

TX errors 0 dropped 8 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 34578 bytes 1838675 (1.7 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 34578 bytes 1838675 (1.7 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Once UDP port 8472 is confirmed open, simply ping the flannel gateway address of another node:

[root@master ~]# ping 10.254.20.0

PING 10.254.20.0 (10.254.20.0) 56(84) bytes of data.

64 bytes from 10.254.20.0: icmp_seq=1 ttl=64 time=68.2 ms

64 bytes from 10.254.20.0: icmp_seq=2 ttl=64 time=68.1 ms

64 bytes from 10.254.20.0: icmp_seq=3 ttl=64 time=68.1 ms

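3.8 Install docker

1) Install

Once flannel is deployed, a little configuration is all it takes for docker containers on different hosts to reach each other.

Installing docker is simple; I just followed the steps from the Alibaba Cloud mirror.

For details see https://developer.aliyun.com/mirror/docker-ce?spm=a2c6h.13651102.0.0.69bb1b11v9sJ7l

# Step 1: install the required system tools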

sudo yum install -y yum-utils device-mapper-persistent-data lvm2

# Step 2: add the package repository

sudo yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

# Step 3: refresh the cache and install Docker-CE (on CentOS 8, yum is dnf, which does not accept the "fast" argument)

sudo yum makecache

sudo yum -y install docker-ce

If you hit the CentOS 8.0 docker installation error Problem: package docker-ce_xxx_64 requires containerd.io >= 1.2.2-3,

see this article, https://www.backendcloud.cn/, and install or update containerd.io.

2) Modify the flannel unit

vim /usr/lib/systemd/system/flanneld.service

Uncomment the line that was commented out earlier:

Before=docker.service

#/k8s/flannel/cfg

[Unit]

Description=Flanneld overlay address etc agent

After=network-online.target network.target

Before=docker.service

[Service]

Type=notify

EnvironmentFile=-/k8s/flannel/cfg/flannel.conf

ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /k8s/flannel/bin/flanneld"

ExecStartPost=/k8s/flannel/bin/mk-docker-opts.sh -k DOCKER_NETWORK_OPTIONS -d /run/flannel/subnet.env

Restart=on-failure

[Install]

WantedBy=multi-user.target

3) Modify the docker unit

Change two lines:

# import the flannel network settings

EnvironmentFile=-/run/flannel/subnet.env

# append the $DOCKER_NETWORK_OPTIONS argument

ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock $DOCKER_NETWORK_OPTIONS"

[Unit]

Description=Docker Application Container Engine

Documentation=https://docs.docker.com

BindsTo=containerd.service

After=network-online.target firewalld.service containerd.service

Wants=network-online.target

Requires=docker.socket

[Service]

Type=notify

# the default is not to use systemd for cgroups because the delegate issues still

# exists and systemd currently does not support the cgroup feature set required

# for containers run by docker

# import the flannel network settings

EnvironmentFile=-/run/flannel/subnet.env

# append the $DOCKER_NETWORK_OPTIONS argument

ExecStart=/bin/bash -c "GOMAXPROCS=$(nproc) /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock $DOCKER_NETWORK_OPTIONS"

ExecReload=/bin/kill -s HUP $MAINPID

TimeoutSec=0

RestartSec=2

Restart=always

# Note that StartLimit* options were moved from "Service" to "Unit" in systemd 229.

# Both the old, and new location are accepted by systemd 229 and up, so using the old location

# to make them work for either version of systemd.

StartLimitBurst=3

# Note that StartLimitInterval was renamed to StartLimitIntervalSec in systemd 230.

# Both the old, and new name are accepted by systemd 230 and up, so using the old name to make

# this option work for either version of systemd.

StartLimitInterval=60s

# Having non-zero Limit*s causes performance problems due to accounting overhead

# in the kernel. We recommend using cgroups to do container-local accounting.

LimitNOFILE=infinity

LimitNPROC=infinity

LimitCORE=infinity

# Comment TasksMax if your systemd version does not support it.

# Only systemd 226 and above support this option.

TasksMax=infinity

# set delegate yes so that systemd does not reset the cgroups of docker containers

Delegate=yes

# kill only the docker process, not all processes in the cgroup

KillMode=process

[Install]

WantedBy=multi-user.target

Restart docker and run ifconfig: the flannel subnet is now in effect on docker0, and containers on different hosts can ping each other.

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 10.254.5.1 netmask 255.255.255.0 broadcast 10.254.5.255

inet6 fe80::42:d6ff:fe13:a5b4 prefixlen 64 scopeid 0x20<link>

ether 02:42:d6:13:a5:b4 txqueuelen 0 (Ethernet)

RX packets 5 bytes 308 (308.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 32 bytes 2436 (2.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0
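To make the link between flannel's lease and docker0's address explicit, here is a simplified sketch of what mk-docker-opts.sh does. It assumes a subnet.env in the form flanneld itself writes (FLANNEL_SUBNET, FLANNEL_MTU and so on) and only derives the --bip and --mtu flags; the real script also handles ip-masq. The function name `docker_opts_from` is hypothetical.

```shell
#!/bin/sh
# docker_opts_from FILE: read FLANNEL_SUBNET and FLANNEL_MTU from a
# subnet.env-style FILE and print the matching dockerd flags.
# Simplified on purpose: ip-masq handling is left out.
docker_opts_from() {
  subnet=$(sed -n 's/^FLANNEL_SUBNET=//p' "$1")
  mtu=$(sed -n 's/^FLANNEL_MTU=//p' "$1")
  printf '%s\n' "--bip=$subnet --mtu=$mtu"
}

# Usage (file path as used in the units above):
#   docker_opts_from /run/flannel/subnet.env
```

With a lease of 10.254.5.1/24, the --bip flag is what puts docker0 on 10.254.5.1 in the output above.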

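At this point the network configuration is complete. If ping does not work, check the ports first: ETCD uses TCP ports 2379 and 2380, and flannel with vxlan uses UDP port 8472 by default. Open them in the cloud console.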