Python3.5 Django1.10 Scrapy1.2 Ubuntu16.04 HTML5

1.Python3.5

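1. Virtual environments (venv)

Creating a virtual environment with Python 3.4 (venv ships with Python 3.4 and later, so nothing extra needs to be installed)

Upgrading Python 3.5 to Python 3.6 on Ubuntu 16.04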

sudo add-apt-repository ppa:deadsnakes/ppa

https://blog.csdn.net/weixin_38417098/article/details/89373153

How to install the Python 3.6 venv module on Ubuntu

https://www.jianshu.com/p/1c06d7fb735f

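If both Python 2 and Python 3 are installed on a Windows machine, run "py -3 -m venv venv" (or "python3 -m venv venv") in the current directory; on Ubuntu 16.04 the command is "python3 -m venv venv". The final venv is the name of the folder to create and can be changed freely.

If the following error appears, delete the venv folder, install python3.6-venv, and create the environment again: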

Error: Command '['/home/robert/python/python_p/env/bin/python3.6', '-Im', 'ensurepip', '--upgrade', '--default-pip']' returned non-zero exit status 1

$ rm -rf venv

$ apt install python3.6-venv

...

$ python3.6 -m venv venv

... success

If no configured package source provides it, add the following line to /etc/apt/sources.list:

deb http://cz.archive.ubuntu.com/ubuntu bionic-updates main universe

Installing pip3 on Ubuntu

sudo apt-get install python3-pip

If this reports "Unable to locate package", update the package index first:

sudo apt-get update

Installing pip offline (pip2/pip3; works on both Windows and Linux)

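1. First install setuptools; download it from https://pypi.python.org/pypi/setuptools

Unpack the downloaded archive, change into the folder, and run python setup.py install

2. Then download pip from https://pypi.python.org/pypi/pip#downloads

Unpack the downloaded archive, change into the folder, and run python setup.py install

Installing packages with pip from a third-party index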

pip3 install scipy -i http://mirrors.aliyun.com/pypi/simple/ --trusted-host mirrors.aliyun.com

Installing virtualenv for Python 2.7

pip install virtualenv

Installing virtualenv for Python 3

pip3 install virtualenv

Activating the venv

On Windows 7

E:\SOFTLEARN\GitHub\data-Analytics>venv\Scripts\activate

On Ubuntu 16.04 (prefix the command with source)

software@software-desktop:~/CODE/PythonProject/SpiderPy3$ source venv/bin/activate

Leaving the venv

(venv) $ deactivate

Generating requirements.txt inside the virtual environment

(venv) $ pip freeze > requirements.txt

Recreating an exact copy of this virtual environment

(venv) $ pip install -r requirements.txt

Compiling or installing some packages requires python3.6-dev to be installed first.

Add a line like the following to /etc/apt/sources.list:

deb http://cz.archive.ubuntu.com/ubuntu bionic-updates main

sudo apt-get install python3.6-dev

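Copying a venv to a server:

First create a venv in the target folder on the server (python3 -m venv venv), then copy the site-packages folder from the lib folder of the venv prepared in advance into the same location on the server.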

2. Redis

Installing the redis client

pip install redis

Getting started in the Python shell

>>> import redis

>>> r = redis.StrictRedis(host='localhost', port=6379, db=0)

>>> r.set('foo', 'bar')

True

>>> r.get('foo')

b'bar'

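In the redis-cli terminal: Redis databases are identified by numbers. The default database is 0, and there are 16 databases in total by default. Use the following command to select a specific database: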

select

127.0.0.1:6379> select 0

OK

Flushing the current database

127.0.0.1:6379> flushdb

OK

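3. Variable arguments: *args and **kwargs

*args collects any number of positional (unnamed) arguments into a tuple, and **kwargs collects keyword arguments into a dict. *args must come before **kwargs, and positional arguments must come before keyword arguments in a call; otherwise Python raises a SyntaxError ("non-keyword arg after keyword arg" in Python 2, "positional argument follows keyword argument" in Python 3).

- *args: packs all positional arguments, in order of appearance, into a tuple

- **kwargs: packs all key-value arguments into a dict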
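The packing described above can be checked with a small sketch. The function name describe and the sample values are made up for illustration only.

```python
def describe(*args, **kwargs):
    """Return the packed positional and keyword arguments unchanged."""
    return args, kwargs


positional, named = describe(1, 2, 3, color="red", size=10)
print(type(positional).__name__, positional)  # tuple (1, 2, 3)
print(type(named).__name__, sorted(named))    # dict ['color', 'size']

# The same stars unpack a sequence or a mapping at the call site.
values = [4, 5]
options = {"flag": True}
print(describe(*values, **options))           # ((4, 5), {'flag': True})
```

Note that args is always a tuple, even when the caller unpacks a list.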

4. Logging with the logging module

import logging

# Create a logger
logger = logging.getLogger('mylogger')
logger.setLevel(logging.DEBUG)

# Create a handler that writes to a log file
fh = logging.FileHandler('test.log')
fh.setLevel(logging.DEBUG)

# Create another handler that writes to the console
ch = logging.StreamHandler()
ch.setLevel(logging.DEBUG)

# Define the output format used by both handlers
formatter = logging.Formatter('%(asctime)s - %(name)s - %(levelname)s - %(message)s')
fh.setFormatter(formatter)
ch.setFormatter(formatter)

# Attach the handlers to the logger
logger.addHandler(fh)
logger.addHandler(ch)

# Log one record
logger.info('foobar')

5. Upgrading pip on Python 3.5

python3 -m pip install --upgrade pip

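6. Iterables, iterators, generators, and yield

For details, see http://blog.csdn.net/alvine008/article/details/43410079

Iterables, iterators, and generators

http://www.open-open.com/lib/view/open1463668934647.html

To master yield, the key point to understand is this: when you call a generator function, the code in its body does not run. The call only returns a generator object.

The body then runs, one step at a time, each time the for loop asks the generator for its next value.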
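A minimal sketch of this lazy behaviour follows. The countdown function and the log list are hypothetical names used only to make the execution order visible.

```python
def countdown(n, log):
    """Yield n, n-1, ..., 1 and record when the body actually runs."""
    log.append("started")      # runs only once iteration begins
    while n > 0:
        yield n
        n -= 1
    log.append("finished")     # runs when the generator is exhausted


log = []
gen = countdown(3, log)        # calling the function runs none of the body
print(log)                     # []
print(next(gen))               # 3
print(log)                     # ['started']
print(list(gen))               # [2, 1]
print(log)                     # ['started', 'finished']
```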

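7. getattr(), hasattr(), setattr()

The main use of getattr() is reflection: it looks up an attribute or method by its name given as a string. This lets you keep the names of the methods a class may need to call in a configuration file and resolve them dynamically when needed. When the attribute is a method, getattr() returns the bound method object itself; to run it, add a pair of parentheses after it.

class test_attr():

    def attr1(self):
        print('attr1')

    def attr2(self):
        print('attr2')

    def test(self):
        # Look up attr1 by name and call it at once; fun1 holds its return value (None)
        fun1 = getattr(self, 'attr' + '1')()
        # Look up attr2 by name without calling it; fun2 is the bound method
        fun2 = getattr(self, 'attr' + '2')
        fun2()  # equivalent to self.attr2()


if __name__ == '__main__':
    t = test_attr()
    t.test()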
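The heading also names hasattr() and setattr(), which the listing above does not use. A short sketch of all three together, with a hypothetical Config class and read_option helper:

```python
class Config:
    debug = False


def read_option(obj, name, default=None):
    """Return obj.<name>, or default when the attribute does not exist."""
    return getattr(obj, name, default)


cfg = Config()
print(hasattr(cfg, "debug"))             # True
print(hasattr(cfg, "timeout"))           # False
print(read_option(cfg, "timeout", 30))   # 30, no AttributeError raised
setattr(cfg, "timeout", 5)               # same effect as cfg.timeout = 5
print(cfg.timeout)                       # 5
```

Passing a default as the third argument of getattr() is usually simpler than calling hasattr() first.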

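8. __str__

__str__ is called by print() (and by str()) and should return a string representation of the object. When you print an instance of a class, print first calls the __str__ method defined in that class.

class A:

    def __str__(self):
        return "this is in str"


print(A())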
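As a complementary sketch (the Point and OnlyRepr classes are invented for illustration), the following shows how __str__ relates to __repr__: containers display their items with __repr__, and str() falls back to __repr__ when no __str__ is defined.

```python
class Point:
    def __init__(self, x, y):
        self.x, self.y = x, y

    def __repr__(self):
        return "Point(x={}, y={})".format(self.x, self.y)

    def __str__(self):
        return "({}, {})".format(self.x, self.y)


class OnlyRepr:
    def __repr__(self):
        return "OnlyRepr()"


p = Point(1, 2)
print(str(p))            # (1, 2)
print(repr(p))           # Point(x=1, y=2)
print([p])               # [Point(x=1, y=2)]
print(str(OnlyRepr()))   # OnlyRepr()
```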

9. threading, queue

import threading
import random
import time
import queue


class Producer(threading.Thread):

    def __init__(self, name, queue_data):
        super(Producer, self).__init__()
        self.name = name
        self.queue_data = queue_data

    def run(self):
        # Put a random number on the queue every 0.3 seconds, forever
        while True:
            random_num = random.randint(1, 99)
            self.queue_data.put(random_num)
            print("produced: %d" % random_num)
            time.sleep(0.3)


class Consumer1(threading.Thread):

    def __init__(self, name, queue_data):
        super(Consumer1, self).__init__()
        self.name = name
        self.queue_data = queue_data

    def run(self):
        while True:
            try:
                # print('about to get')
                # get() takes a block argument, True by default.
                # If the queue is empty and block is True, get() suspends the calling thread until an item is available.
                # If the queue is empty and block is False, get() raises queue.Empty.
                val = self.queue_data.get(block=False)
                print("consumer1 got: %s" % val)
            except queue.Empty as e:
                print('consumer1: the queue is empty')
            except Exception as other:
                print(other)
            time.sleep(0.2)


class Consumer2(threading.Thread):

    def __init__(self, name, queue_data):
        super(Consumer2, self).__init__()
        self.name = name
        self.queue_data = queue_data

    def run(self):
        while True:
            try:
                val = self.queue_data.get(block=False)
                print("consumer2 got: %s" % val)
            except queue.Empty as e:
                print('consumer2: the queue is empty')
            except Exception as other:
                print(other)
            time.sleep(0.3)


def main():
    queue_data = queue.Queue()
    producer = Producer('producer', queue_data)
    consumer1 = Consumer1('consumer1', queue_data)
    consumer2 = Consumer2('consumer2', queue_data)
    producer.start()
    consumer1.start()
    consumer2.start()


if __name__ == '__main__':
    main()
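The listing above runs forever and polls with block=False. As an alternative sketch (not the approach used in the notes), the consumer below relies on the default blocking get() and stops on a sentinel value. SENTINEL, producer, consumer, and run_pipeline are hypothetical names.

```python
import queue
import threading

SENTINEL = None  # hypothetical marker that tells the consumer to stop


def producer(q, items):
    for item in items:
        q.put(item)
    q.put(SENTINEL)


def consumer(q, results):
    while True:
        item = q.get()           # block=True by default: waits for an item
        if item is SENTINEL:
            break
        results.append(item * 2)


def run_pipeline(items):
    """Push items through one producer and one consumer; return doubled values."""
    q = queue.Queue()
    results = []
    workers = [
        threading.Thread(target=producer, args=(q, items)),
        threading.Thread(target=consumer, args=(q, results)),
    ]
    for w in workers:
        w.start()
    for w in workers:
        w.join()
    return results


print(run_pipeline([1, 2, 3]))   # [2, 4, 6]

empty = queue.Queue()
try:
    empty.get(block=False)       # raises immediately on an empty queue
except queue.Empty:
    print("queue is empty")
try:
    empty.get(timeout=0.1)       # waits up to 0.1 s, then raises
except queue.Empty:
    print("timed out")
```

With a blocking get() the consumer does not spin while the queue is empty, and the sentinel gives both threads a clean way to exit.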

10. Naming conventions

http://www.cnblogs.com/Maker-Liu/p/5528213.html

2.Django1.10

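1. Creating a Django project inside a virtual environment (venv)

First activate the virtual environment

(venv) root@Master:~/Software/djangoWeb# source venv/bin/activate

Run the following command in the current directory to create the project djangoWeb

(venv) root@Master:~/Software/djangoWeb# django-admin startproject djangoWeb .

Create an app named west; once it is created, add its name to INSTALLED_APPS

python3 manage.py startapp west

Synchronize the database (first create the database in MySQL with CREATE DATABASE blog CHARACTER SET utf8; the 'CHARACTER SET utf8' clause enables Chinese text)

python3 manage.py makemigrations # records the model changes as files in the migrations folder

python3 manage.py migrate # applies the changes to the database

Fixing a failed Django database migration

http://www.tuicool.com/articles/ZNj6Nz3

Rebuilding the database tables from scratch:

1. Drop all tables in the database

2. Delete every file in the project's migrations module except __init__.py

3. Run

python manage.py makemigrations

python manage.py migrate

If no tables were created after running the commands above, the migrations folder may be missing its __init__.py file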

2. Connecting to MySQL

http://blog.csdn.net/it_dream_er/article/details/52093362

Install PyMySQL

pip install PyMySQL

Add the following to the __init__.py file of the app you created

import pymysql
pymysql.install_as_MySQLdb()

settings.py

DATABASES = {
    'default': {
        'ENGINE': 'django.db.backends.mysql',
        'NAME': 'djangoweb',
        'USER': 'root',
        'PASSWORD': '123',
        'HOST': 'localhost',
        'PORT': '3306',
    }
}

3. null=True and blank=True in model fields

null: If True, Django will store empty values as NULL in the database. Default is False.

blank: If True, the field is allowed to be blank. Default is False. null only affects how the value is stored in the database, whereas blank affects validation.

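4. The Django interactive shell

https://www.shiyanlou.com/courses/487/labs/1622/document

Use the Django interactive shell to create, read, update, and delete database records

python manage.py shell

5. Creating a superuser

Enter a username, email address, and password to create a superuser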

$ python manage.py createsuperuser

Username (leave blank to use 'andrew_liu'): root

Email address:

Password:

Password (again):

Superuser created successfully.

6. admin

① To manage models through the admin interface, first create a superuser

python3 manage.py createsuperuser

② Register the models with the admin

from django.contrib import admin
from west.models import Character, Tag

# Register your models here.


class CharacterAdmin(admin.ModelAdmin):
    list_display = ('name', 'age')


class TagAdmin(admin.ModelAdmin):
    list_display = ('size', 'info', 'edit_person')


'''
To register models without any customization:
① a single model: admin.site.register(Character);
② several models: admin.site.register([Character, Tag]);
A model with its own display columns must be registered separately, together with its ModelAdmin class:
admin.site.register(Character, CharacterAdmin)
'''

admin.site.register(Character, CharacterAdmin)
admin.site.register(Tag, TagAdmin)

③ django-admin-bootstrap

https://github.com/django-admin-bootstrap/django-admin-bootstrap

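CSS and JS files not found after deploying to a server

http://www.ziqiangxuetang.com/django/django-static-files.html

First run python3 manage.py collectstatic

Then update the Apache2 configuration file

Alias /static/ /path/to/staticfiles/

<Directory /path/to/staticfiles>

Require all granted

</Directory>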

④ Switching the Django admin interface to Chinese

In settings.py in the project root, change:

TIME_ZONE = 'UTC'

LANGUAGE_CODE = 'en-us'

to:

TIME_ZONE = 'Asia/Shanghai'

LANGUAGE_CODE = 'zh-hans'

8. File download

# File download
from django.http import StreamingHttpResponse
# Percent-encodes the file name so that non-ASCII (for example Chinese) names are not garbled
from django.utils.http import urlquote


def file_download(request):
    # do something...
    file = u"/root/20150424-315-whale-G145153.zip"
    # file = "/root/metastore.log"

    def file_iterator(file, chunk_size=512):
        # Yield the file in chunk_size-byte pieces so it is never loaded into memory at once
        with open(file, 'rb') as f:
            while True:
                c = f.read(chunk_size)
                if c:
                    yield c
                else:
                    break

    file_name = file.split('/')[-1]
    response = StreamingHttpResponse(file_iterator(file))
    response['Content-Type'] = 'application/octet-stream'
    response['Content-Disposition'] = 'attachment;filename="{0}"'.format(urlquote(file_name))
    return response
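The chunked reading and the file-name encoding can be tried without Django. The sketch below uses only the standard library: urllib.parse.quote stands in for Django's urlquote, and demo() and the sample file name are made up for illustration.

```python
import os
import tempfile
from urllib.parse import quote, unquote


def file_iterator(path, chunk_size=512):
    """Yield the file at path in pieces of at most chunk_size bytes."""
    with open(path, "rb") as f:
        while True:
            chunk = f.read(chunk_size)
            if not chunk:
                break
            yield chunk


def demo():
    """Write 1300 bytes to a temporary file and return the chunk sizes read back."""
    fd, path = tempfile.mkstemp()
    try:
        with os.fdopen(fd, "wb") as f:
            f.write(b"x" * 1300)
        return [len(c) for c in file_iterator(path, chunk_size=512)]
    finally:
        os.remove(path)


print(demo())                      # [512, 512, 276]

encoded = quote("报告.zip")        # percent-encoded UTF-8 bytes
print(encoded)
print(unquote(encoded))            # 报告.zip
```

Because the iterator yields one chunk at a time, memory use stays bounded by chunk_size regardless of the file size.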

File upload

https://blog.csdn.net/mr_hui_/article/details/8601583

9. Celery 4.0.2

①https://github.com/celery/celery/tree/master/examples/django

Install RabbitMQ first (installation steps are given below)

This setup uses celery directly, not django-celery

pip install celery

In the settings file

# Celery settings

CELERY_ACCEPT_CONTENT = ['json']

CELERY_BROKER_URL = 'amqp://guest@localhost//'

# CELERY_BROKER_URL = 'amqp://guest@rabbit1:5672//'

# CELERY_RESULT_BACKEND = 'amqp://guest@localhost//'

result_backend = 'rpc://'

result_persistent = False

CELERY_TASK_SERIALIZER = 'json'

CELERYBEAT_SCHEDULER = 'djcelery.schedulers.DatabaseScheduler'

CELERYD_CONCURRENCY = 10

# celery worker get task number from rabbitmq

CELERYD_PREFETCH_MULTIPLIER = 10

When running under Docker, use CELERY_BROKER_URL = 'amqp://guest@rabbit1:5672//' instead

Create a celery.py file in the main app directory

from __future__ import absolute_import, unicode_literals

import os

from celery import Celery

# set the default Django settings module for the 'celery' program.

os.environ.setdefault('DJANGO_SETTINGS_MODULE', 'djangoWeb.settings')

app = Celery('djangoWeb')

# Using a string here means the worker don't have to serialize

# the configuration object to child processes.

# - namespace='CELERY' means all celery-related configuration keys

# should have a `CELERY_` prefix.

app.config_from_object('django.conf:settings', namespace='CELERY')

# Load task modules from all registered Django app configs.

app.autodiscover_tasks()

@app.task(bind=True)
def debug_task(self):
    print('Request: {0!r}'.format(self.request))

Create a tasks.py file in each app's own directory

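from __future__ import absolute_import, unicode_literals
from celery import shared_task
import time


@shared_task
def build_job(job_name, *args):
    # Print the job name, wait 10 seconds, then print every extra positional argument
    print(job_name)
    time.sleep(10)
    for item in args:
        print(item)
    return None

Call the task from views.py

def file_down(request):
    # delay() queues the task and returns immediately
    build_job.delay('job1', (1, 2, 3, 4))
    return redirect('/west')

Start the celery worker from the project root directory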

celery -A djangoWeb worker -l info

②flower

flower is a monitoring tool for Celery: it tracks the execution status of each task and the health of each worker and presents them visually

pip install flower

celery -A djangoWeb flower

④ django-celery-beat

celery -A proj beat -l info --scheduler django_celery_beat.schedulers:DatabaseScheduler

http://docs.celeryproject.org/en/latest/userguide/periodic-tasks.html#beat-custom-schedulers

http://blog.csdn.net/acm_zl/article/details/53192515

Managing periodic tasks from the admin backend

http://www.cnblogs.com/alex3714/p/6351797.html

https://www.cnblogs.com/longjshz/p/5779215.html

Fixing missing CSS styles in the Django admin

https://www.jianshu.com/p/38457576ce70

⑤ Multiple queues with Django

https://www.cnblogs.com/lowmanisbusy/p/10698189.html

# settings
from kombu import Exchange, Queue

CELERY_QUEUES = (
    Queue("default", Exchange("default"), routing_key="default"),
    Queue("upgrade_task", Exchange("upgrade_task"), routing_key="task_upgrade"),
    Queue("atc_task", Exchange("atc_task"), routing_key="task_atc")
)

CELERY_ROUTES = {
    "tasks.upgrade": {"queue": "upgrade_task", "routing_key": "task_upgrade"},
    "tasks.atc": {"queue": "atc_task", "routing_key": "task_atc"}
}

# the tasks file stays unchanged

# when calling a task, use apply_async and name the target queue
tasks.run_atc.apply_async(('run_atc', request.user.username, run_cmd_info_dict), queue="atc_task")

10. Forms

http://foreal.iteye.com/blog/1095621

There are two ways to create a form: subclass forms.Form, or subclass forms.ModelForm. The second requires defining an inner class, class Meta:

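11. Database

Clearing all data from the database

python manage.py flush

This command asks for yes or no; answering yes deletes all data and leaves only the empty tables

Use the Django interactive shell to create, read, update, and delete database records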

https://docs.djangoproject.com/en/1.10/topics/auth/default/#user-objects

python manage.py shell

u1 = User.objects.get(username = 'letu')

Meta options of Django model classes

https://my.oschina.net/liuyuantao/blog/751337

http://www.cnblogs.com/lcchuguo/p/4754485.html

Django model field types

http://blog.csdn.net/iloveyin/article/details/44852645

http://www.360doc.com/content/14/0421/12/16044571_370800123.shtml

Django model choices

https://blog.csdn.net/qqizz/article/details/80020367

Querying by date and time

http://blog.csdn.net/huanongjingchao/article/details/46910521

Objects can be retrieved in the following ways:

Person.objects.all()

Person.objects.all()[:10] slicing: fetches the first 10 people; negative indexing is not supported; slicing saves memory

Fetching specific columns (values/values_list)

https://blog.csdn.net/weixin_40475396/article/details/79529256

Person.objects.values("name")

>>> Entry.objects.values_list('id').order_by('id')

[(1,), (2,), (3,), ...]

>>> Entry.objects.values_list('id', flat=True).order_by('id')

[1, 2, 3, ...]

**Fetching distinct values**

Person.objects.values("name").distinct().order_by("name")

http://www.360doc.com/content/14/0728/15/16044571_397660069.shtml

Person.objects.get(name=name)

get() retrieves a single object; to fetch all the people that match a condition, use filter()

**Difference between get and filter** https://www.cnblogs.com/silence181/p/8506444.html

The result of filter() can be checked with exists() to see whether anything matched

**OR conditions with filter**

from django.db.models import Q

Movel.objects.filter( Q(novel_name__icontains = q) | Q(author__icontains = q))

**Building complex queries with Q**

https://blog.csdn.net/Coxhuang/article/details/89504400

con = Q()

q1 = Q()

q1.connector = 'OR'

q1.children.append(('name', "cox"))

q1.children.append(('name', "Tom"))

q1.children.append(('name', "Jeck"))

q2 = Q()

q2.connector = 'OR'

q2.children.append(('age', 12))

con.add(q1, 'AND')

con.add(q2, 'AND')

models.Author.objects.filter(con) # all rows in the Author table whose name is cox, Tom, or Jeck and whose age is 12

**Multiple filters can be chained**

Person.objects.filter(name="abc") # same as Person.objects.filter(name__exact="abc"): people whose name is exactly "abc"

Person.objects.filter(name__iexact="abc") # name equals abc, case-insensitive; matches ABC, Abc, aBC, and so on

Person.objects.filter(name__contains="abc") # people whose name contains "abc"

Person.objects.filter(name__icontains="abc") # name contains "abc", case-insensitive

Person.objects.filter(name__regex="^abc") # regular-expression match

Person.objects.filter(name__iregex="^abc") # case-insensitive regular-expression match

filter() selects the objects that satisfy a condition; exclude() removes the ones that do

Person.objects.exclude(name__contains="WZ") # excludes Person objects whose name contains WZ

Person.objects.filter(name__contains="abc").exclude(age=23) # name contains abc, excluding anyone aged 23

**Adding data**

obj = ModelData(u='y', person=request.user)

obj.save()

**Deleting data**

ModelData.objects.filter(user='yangmv').delete()

Calling delete() on all() deletes every row:

ModelData.objects.all().delete()

**Updating data (bulk update; only a single update() call can follow the queryset)**

https://blog.csdn.net/weixin_42578481/article/details/80985049

ModelData.objects.filter(user='yangmv').update(pwd='520')

Ordering

ModelData.objects.order_by("name")

Ordering by several fields:

ModelData.objects.order_by("name","address")

Descending order:

ModelData.objects.order_by("-name")

Combining query results

https://blog.csdn.net/kaspar1992/article/details/86513696

Two approaches: ① the | operator ② itertools.chain

aggregate (aggregate functions) and annotate (aggregation combined with GROUP BY)

https://www.cnblogs.com/linxiyue/p/3906179.html?utm_source=tuicool&utm_medium=referral

The **save()** method of a Django model

http://www.cnblogs.com/zywscq/p/5397439.html

on_delete

https://blog.csdn.net/buxianghejiu/article/details/79086011

https://blog.csdn.net/qq_40942329/article/details/79200916

12. Extending the Django User model

and adding the extra fields to the User page of the admin

http://www.cnblogs.com/wuweixin/p/4887419.html

13. Django static files

http://blog.csdn.net/huangyimo/article/details/50575982

14. Django CSRF protection

http://www.cnblogs.com/lins05/archive/2012/12/02/2797996.html

When using a form tag, add a {% csrf_token %} tag right after the opening form tag

When using ajax, add the following to the jQuery code

$.ajaxSetup({

data: {csrfmiddlewaretoken: '{{ csrf_token }}' },

});

15. Django permissions

http://www.jianshu.com/p/01126437e8a4

class Task(models.Model):
    ...

    class Meta:
        permissions = (
            ("view_task", "Can see available tasks"),
            ("change_task_status", "Can change the status of tasks"),
        )

Then run

python3 manage.py makemigrations # records the model changes as files in the migrations folder

python3 manage.py migrate # applies the changes to the database

Checking a permission in a view

@permission_required('iqc.upload_IQCDataCVTE6486COPY', login_url='/?message=permission')

Granting permissions to a user in code

http://www.cnblogs.com/CQ-LQJ/p/5609690.html

16. Django caching

To use memcached, first install the client with pip install python-memcached,

# Cache
CACHES = {
    'default': {
        'BACKEND': 'django.core.cache.backends.memcached.MemcachedCache',
        'LOCATION': '127.0.0.1:11211',
    }
}

CACHE_MIDDLEWARE_ALIAS = 'default'  # cache alias used for storage; matches 'default' above
CACHE_MIDDLEWARE_SECONDS = 60*5  # number of seconds each page should be cached
CACHE_MIDDLEWARE_KEY_PREFIX = 'cache'  # key prefix

http://blog.csdn.net/permike/article/details/53217742

http://www.2cto.com/os/201203/125164.html

17. The Django messages framework

http://www.jianshu.com/p/2f71eb855435

In the view

messages.warning(request, "info")

In the HTML template

{% if messages %}

{% for message in messages %}

{{ message }}

{% endfor %}

{% endif %}

18. Django CAS authentication

https://github.com/mingchen/django-cas-ng

19. Django Jinja2

https://blog.csdn.net/qq_19268039/article/details/83245311

http://docs.pythontab.com/jinja/jinja2/switching.html#django

http://python.usyiyi.cn/django/topics/templates.html

http://docs.jinkan.org/docs/jinja2/templates.html#

Jinja2 doc

http://jinja.pocoo.org/docs/dev/templates/

①

http://blog.csdn.net/elevenqiao/article/details/6718367

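In Django templates, the {% for %} tag sets a special forloop template variable inside the loop, which provides information about the progress of the current loop. In Jinja2 the equivalent variable is called loop.

Accessing the outer loop from a nested loop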

{% for i in a %}

{% set outer_loop = loop %}

{% for j in a %}

{{ outer_loop.index }}

{% endfor %}

{% endfor %}

②

Formatting dates

{{ line.finish_time|date:"Y-m-d-H-i-s" }}
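For comparison, here is a sketch of the same format in plain Python. The format characters of the Django date filter (Y, m, d, H, i, s) correspond to the strftime codes below; format_finish_time is a hypothetical helper name.

```python
from datetime import datetime

# Django date filter format and its strftime equivalent
DJANGO_FORMAT = "Y-m-d-H-i-s"
STRFTIME_FORMAT = "%Y-%m-%d-%H-%M-%S"


def format_finish_time(value):
    """Format a datetime the way the template filter above would."""
    return value.strftime(STRFTIME_FORMAT)


print(format_finish_time(datetime(2017, 3, 5, 9, 7, 2)))  # 2017-03-05-09-07-02
```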

③

Testing for equality

{% ifequal A B %}

{% else %}

{% endifequal %}

④

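URLs with parameters

html

CASE

urls.py (pay attention to the regular expression: the URL is only resolved when it matches exactly, otherwise a NoReverseMatch error is raised)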

url(r'^(\S+)/(\S+_\S+)/$', views.case, name='atc')
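The pattern can be checked with the re module before wiring it into urls.py. This is only a sketch: match_case_url and the sample paths are made up, and Django passes the path to the pattern without its leading slash.

```python
import re

CASE_URL = re.compile(r'^(\S+)/(\S+_\S+)/$')


def match_case_url(path):
    """Return the two captured groups, or None when the path does not match."""
    m = CASE_URL.match(path)
    return m.groups() if m else None


print(match_case_url("suite1/login_test/"))   # ('suite1', 'login_test')
print(match_case_url("suite1/logintest/"))    # None: second part has no underscore
print(match_case_url("a/b/c_d/"))             # ('a/b', 'c_d'): \S also matches '/'
```

Since \S matches the slash as well, the first group can swallow several path segments; a character class such as [^/]+ would be stricter if that is not wanted.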

⑤ Time zones

https://www.jb51.cc/python/439767.html

{{ localtime(line.update_time).strftime("%Y-%m-%d %H:%M") }}

Addition, subtraction, multiplication, and division in Django templates

http://www.tuicool.com/articles/V3eQ3mU

http://blog.csdn.net/ly1414725328/article/details/48287177?locationNum=7&fps=1

20. bootcamp

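A Django project on GitHub, https://github.com/qulc/bootcamp, an enterprise social network platform

① First install the PostgreSQL database and change the password, then create the bootcamp database and update the password in the database section of the Django project's settings file

alter user postgres with password '123456';

create database bootcamp owner postgres;

CREATE DATABASE

② Set up the environment by following the project's documentation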

21. Reading values from the settings file

from django.conf import settings

settings.BASE_DIR

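If DEBUG is set to False, the settings values could not be accessed

22. Escaping Django's own {% %} tags

Since Django 1.5 the {% verbatim %} tag is supported (verbatim means word for word, literally); Django does not render anything wrapped in a verbatim block: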

{% verbatim %}

{{if dying}}Still alive.{{/if}}

{% endverbatim %}

23. Uploading images with sorl-thumbnail

https://github.com/mariocesar/sorl-thumbnail

http://sorl-thumbnail.readthedocs.io/en/latest/reference/index.html

# If the error 'thumbnail_kvstore' doesn't exist appears, run the following commands

python3 manage.py makemigrations thumbnail

python3 manage.py migrate

24. Pagination with django-bootstrap-pagination

https://github.com/jmcclell/django-bootstrap-pagination

25. Redirects

https://docs.djangoproject.com/en/dev/topics/http/shortcuts/

render:

render(request, template_name, context=None, content_type=None, status=None, using=None)

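Combines a given template with a given context dictionary and returns a rendered HttpResponse object. In plain terms, it loads the contents of context into the template file found under templates and returns the rendered page to the browser.

render_to_response()

render_to_response(template_name, context=None, content_type=None, status=None, using=None)

Similar to render, except that it takes no request argument

redirect()

redirect() returns an HTTP redirect response (HttpResponseRedirect) to the URL derived from its argument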

26. django-channels

http://www.tuicool.com/articles/QV3QfiJ

Create the following three files in the directory that contains settings.py: consumers.py, asgi.py, routing.py,

consumers.py

from channels import Group
from channels.auth import channel_session_user, channel_session_user_from_http
from .authentication.models import OnlineUser
import json
from datetime import datetime

# message.reply_channel is the channel object for one client
# message.reply_channel.send(chunk) replies to that client only
#
# A channel lasts roughly 30 seconds


# On connect, add the client to the 'users' group and broadcast its login status
@channel_session_user_from_http
def ws_connect(message):
    print('connect')
    print(datetime.now())
    room = message.content['path'].strip("/")
    print(room)
    # message.reply_channel.send({'accept': True})
    Group('users').add(message.reply_channel)
    Group('users').send({
        'text': json.dumps({
            'username': message.user.username,
            'is_logged_in': True,
            'online_user_num': OnlineUser.objects.count()
        })
    })


# Broadcast the received text to everyone in the 'users' group
@channel_session_user
def ws_message(message):
    print('message')
    print(message.channel)
    print(datetime.now())
    # message.reply_channel.send({
    #     "text": message.content['text'],
    # })
    Group('users').send({
        'text': json.dumps({
            'message': True,
            "text": message.content['text'],
        })
    })


# On disconnect, broadcast the logout status (the disconnected client itself no longer receives it)
@channel_session_user
def ws_disconnect(message):
    print('disconnect')
    print(datetime.now())
    Group('users').send({
        'text': json.dumps({
            'username': message.user.username,
            'is_logged_in': False,
            'online_user_num': OnlineUser.objects.count()
        })
    })
    Group('users').discard(message.reply_channel)
    # message.reply_channel.send({'accept': True})

asgi.py

import os
import channels.asgi

os.environ.setdefault("DJANGO_SETTINGS_MODULE", "djangoWeb.settings")  # the location of your settings module
channel_layer = channels.asgi.get_channel_layer()

routing.py

from channels.routing import route
from . import consumers  # import the handler functions

channel_routing = [
    # route("http.request", consumers.http_consumer),  # special entry: it handles http.request, so it fires on every HTTP request and the routes in urls.py stop working
    route("websocket.connect", consumers.ws_connect),  # called when a WebSocket connects
    route("websocket.receive", consumers.ws_message),  # called when a WebSocket message arrives
    route("websocket.disconnect", consumers.ws_disconnect),  # called when a WebSocket disconnects
]

27. telnetlib

https://docs.python.org/3/library/telnetlib.html

28. ssh

paramiko/pexpect

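paramiko: easy to embed in an application or platform; good at remote command execution and file transfer.

fabric: integrates well with shell scripts; good at batch deployment and task management.

pexpect: good at automating interactive sessions such as ssh, ftp, and telnet.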

## pexpect
# username, host, password, and result are defined elsewhere
import pexpect

# ssh = pexpect.spawn('ssh -o stricthostkeychecking=no ' + username + '@' + host)
ssh = pexpect.spawn('ssh -o HostKeyAlgorithms=+ssh-dss ' + username + '@' + host)
ssh.setwinsize(30, 200)
try:
    # Try each candidate password until the device prompt ('>#') appears
    for pwd in [password, "12345",]:
        print(pwd)
        ssh.expect([pexpect.TIMEOUT, 'password'], timeout=5)
        ssh.sendline(pwd)
        ret = ssh.expect([pexpect.TIMEOUT, '>#'], timeout=5)
        print(ret)
        if ret == 0:
            # index 0 is pexpect.TIMEOUT: no prompt appeared, so try the next password
            continue
        else:
            print('*********LOGIN success*******')
            ssh.sendline('show equipment ont sw-version')
            ssh.expect([pexpect.TIMEOUT, '>#'], timeout=5)
            sw_info = ssh.before
            print(sw_info)
            result['success'] = True
            result['message'] = ""
            break
except Exception as e:
    result['message'] = "**LabERR**: " + str(e)
    print("**LabERR**: " + str(e))
finally:
    ssh.close()

## paramiko
# remote_file_path, local_path, and result are defined elsewhere
import paramiko

try:
    transport = paramiko.Transport(('135.xxx.xxx.xxx', 22))
    transport.connect(username='xx', password='xx')
    # download file
    sftp = paramiko.SFTPClient.from_transport(transport)
    sftp.get(remote_file_path, local_path)
    sftp.put(local_path, remote_file_path)
    transport.close()
    result['success'] = True
except Exception as e:
    transport.close()
    result['message'] = "**LabERR**: " + str(e)
    print("**LabERR**: " + str(e))

When using pexpect, the following error may appear: no matching host key type found. Their offer: ssh-dss

https://www.cnblogs.com/VkeLixt/p/9978997.html

On Ubuntu, edit /root/.ssh/config;

for a specific user, edit /user/.ssh/config;

under Django, edit /var/www/.ssh/config, and note that the permissions of www need to be changed to 777;

no matching cipher found. Their offer: aes128-cbc,aes192-cbc,aes256-cbc,blowfish-cbc,cast128-cbc,3des-cbc,des-cbc,des-cbc,arcfour

This one requires editing /etc/ssh/ssh_config

Uncomment the line Ciphers aes128-ctr,aes192-ctr,aes256-ctr,aes128-cbc,3des-cbc

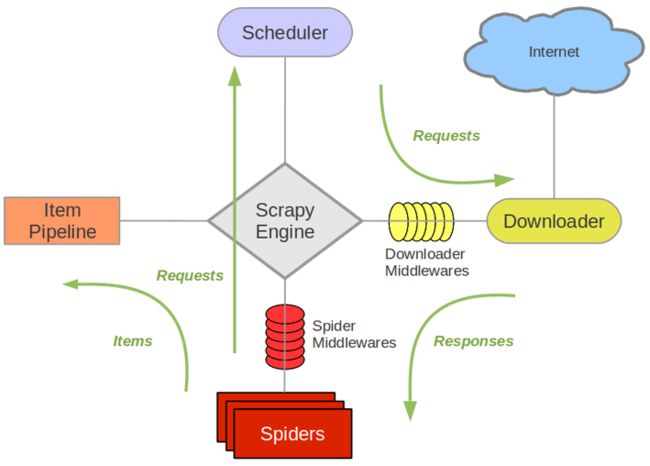

3.Scrapy1.2

Architecture diagram

http://cache.baiducontent.com/c?m=9d78d513d98210ef0bafdf690d67c0101d43f6612ba7a10208d28449e3732b30501294af60624e0b89833a2516ae3a41f7a0682f621420c0ca89de16cabbe57478ce3a7e2c4ccd5c41935ff49a1872dc76c71cbaf447a6a7f73293a5d7d1d951&p=897bc54ad5c842ea10be9b7c616496&newp=91769a4786cc42a45ba5d22313509c231610db2151d7d01f6b82c825d7331b001c3bbfb423231404d1c77c6405a94257e8f23c73350621a3dda5c91d9fb4c57479&user=baidu&fm=sc&query=scrapy++%D6%D0%BC%E4%BC%FE&qid=8276c43e0000507b&p1=2

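1. Installing Scrapy 1.2 on Ubuntu 16.04

sudo apt-get install python-dev python-pip libxml2-dev libxslt1-dev zlib1g-dev libffi-dev libssl-dev

To install for Python 3, run the following command

sudo apt-get install python3 python3-dev

Create a venv in the project folder, then install Scrapy

pip3 install scrapy

After installing, run scrapy bench to execute the Scrapy benchmark; output like the following means the installation succeeded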

'start_time': datetime.datetime(2016, 6, 9, 5, 58, 39, 212930)}

2016-06-09 13:58:50 [scrapy] INFO: Spider closed (closespider_timeout)

2. Installing Scrapy 1.2 on Windows 7 with Python 3.4

See http://blog.csdn.net/zs808/article/details/51612282

First install lxml-3.6.4-cp34-cp34m-win32.whl (download: http://download.csdn.net/detail/letunihao/9704936)

pip3 install wheel

pip3 install lxml-3.6.4-cp34-cp34m-win32.whl

Then install Scrapy

pip3 install scrapy

Then install pywin32-220.win32-py3.4.exe. If the installer cannot find the Python 3.4 registry entry, see http://bbs.csdn.net/topics/391817023. After it installs, copy the files it placed in the site-packages folder into venv\Lib\site-packages.

3. Creating a project

scrapy startproject tutorial

4. css

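# HTML snippet: <p class="product-name">Color TV</p>

# '.intro' selects all elements with class="intro" (roughly the same as [class="intro"])

css('p.product-name::text').extract()

Equivalent to css('p[class="product-name"]::text').extract()

# HTML snippet: <p id="price">the price is $1200</p>

# '#intro' selects the element with id="intro"

css('p#price::text').extract()

# HTML snippet: <p border="0">Color TV</p>

# '[target]' selects all elements that have a target attribute; '[border="0"]' selects those whose border attribute equals 0

css('p[border="0"]::text').extract()

# HTML snippet: an img element with a src attribute

# '::attr(src)' (a Scrapy extension, like '::text') extracts the value of the src attribute

css('img::attr(src)').extract()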


5. xpath

# Match the link to the next page (下一页 is the link text for "next page")

xpath('//div[@id="papelist"]/a[contains(.,"下一页")]/@href').extract_first()

6. selenium

http://blog.csdn.net/lijun538/article/details/50695914

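Dynamically loaded JS: if a link can only be obtained by clicking, locate the element to click with find_element_by_class_name, set a wait time with implicitly_wait, and use switch_to.window to reach the page opened by the click and read its URL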

print("PhantomJS is starting...")

driver = webdriver.PhantomJS(executable_path='E:/SOFTLEARN/GitHub/scrapyTest/venv/phantomjs-2.1.1-windows/bin/phantomjs')

driver.get(response.url)

elem = driver.find_element_by_class_name('lbf-pagination-next')

elem.click()

driver.implicitly_wait(10)

driver.switch_to.window(driver.window_handles[-1])

next_page_href = driver.current_url

Installing selenium

pip3 install selenium

Installing geckodriver (for Firefox)

Download it here:

https://github.com/mozilla/geckodriver/releases

tar -zxvf geck [Tab]

mv geck [Tab] /usr/local/bin

6. Automatic proxy middleware

① Using Scrapy's built-in proxy middleware

http://www.pythontab.com/html/2014/pythonweb_0326/724.html

Add a ProxyMiddleware class to the middlewares file

class ProxyMiddleware(object):

    # overwrite process request
    def process_request(self, request, spider):
        # Set the location of the proxy
        request.meta['proxy'] = 'http://223.240.212.170:808'

        # # Use the following lines if your proxy requires authentication
        # proxy_user_pass = "USERNAME:PASSWORD"
        # # setup basic authentication for the proxy (b64encode works on bytes in Python 3)
        # encoded_user_pass = base64.b64encode(proxy_user_pass.encode()).decode()
        # request.headers['Proxy-Authorization'] = 'Basic ' + encoded_user_pass
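The commented-out authentication lines come from Python 2 era code, where base64.encodestring accepted a str. A sketch of building the same header value under Python 3 follows; proxy_auth_header is a hypothetical helper, not part of Scrapy.

```python
import base64


def proxy_auth_header(user, password):
    """Build the value of a Proxy-Authorization header for HTTP basic auth."""
    credentials = "{}:{}".format(user, password).encode("utf-8")
    # b64encode takes and returns bytes; decode to get a str for the header
    return "Basic " + base64.b64encode(credentials).decode("ascii")


print(proxy_auth_header("user", "pass"))   # Basic dXNlcjpwYXNz
```

Unlike the old encodestring, b64encode does not append a trailing newline, which would otherwise corrupt the header.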

Add the following to the settings

DOWNLOADER_MIDDLEWARES = {
    'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 110,
    'xueqiu.middlewares.ProxyMiddleware': 100,
}

② Writing a proxy middleware that scrapes free proxies from the web and rotates them automatically

https://github.com/cocoakekeyu/autoproxy

http://www.kohn.com.cn/wordpress/?p=208

Create the AutoProxyMiddleware file

#! -*- coding: utf-8 -*-
import urllib.request
import logging
import threading
import math
import re

from bs4 import BeautifulSoup
from twisted.internet import defer
from twisted.internet.error import TimeoutError, ConnectionRefusedError, \
    ConnectError, ConnectionLost, TCPTimedOutError, ConnectionDone

logger = logging.getLogger(__name__)


class AutoProxyMiddleware(object):

    EXCEPTIONS_TO_CHANGE = (defer.TimeoutError, TimeoutError, ConnectionRefusedError, ConnectError, ConnectionLost, TCPTimedOutError, ConnectionDone)

    _settings = [
        ('enable', True),
        ('test_urls', [('http://www.w3school.com.cn', '1999'), ]),
        ('test_proxy_timeout', 5),
        ('download_timeout', 60),
        ('test_threadnums', 20),
        ('ban_code', [503, ]),
        ('ban_re', r''),
        ('proxy_least', 5),
        ('init_valid_proxys', 3),
        ('invalid_limit', 200),
    ]

    def __init__(self, proxy_set=None):
        self.proxy_set = proxy_set or {}
        for k, v in self._settings:
            setattr(self, k, self.proxy_set.get(k, v))

        # List of usable proxies and the index of the current one;
        # counter_proxy counts the pages downloaded through each proxy
        self.proxy = []
        self.proxy_index = 0
        self.proxyes = {}
        self.counter_proxy = {}

        self.fecth_new_proxy()
        self.test_proxyes(self.proxyes, wait=True)
        logger.info('Use proxy : %s', self.proxy)

    @classmethod
    def from_crawler(cls, crawler):
        return cls(crawler.settings.getdict('AUTO_PROXY'))

    def process_request(self, request, spider):
        if not self._is_enabled_for_request(request):
            return

        if self.len_valid_proxy() > 0:
            self.set_proxy(request)
            # if 'download_timeout' not in request.meta:
            request.meta['download_timeout'] = self.download_timeout
        else:
            # No proxy available: connect directly
            logger.info("No proxy available; connecting directly")
            if 'proxy' in request.meta:
                del request.meta['proxy']

    def process_response(self, request, response, spider):
        if not self._is_enabled_for_request(request):
            return response

        if response.status in self.ban_code:
            self.invaild_proxy(request.meta['proxy'])
            logger.debug("Proxy[%s] ban because return httpstatuscode:[%s]. ", request.meta['proxy'], str(response.status))
            new_request = request.copy()
            new_request.dont_filter = True
            return new_request

        if self.ban_re:
            try:
                # response.body is bytes, so compile a bytes pattern
                pattern = re.compile(self.ban_re.encode('utf-8'))
            except (TypeError, AttributeError):
                logger.error('Wrong "ban_re", please check settings')
                return response
            match = re.search(pattern, response.body)
            if match:
                self.invaild_proxy(request.meta['proxy'])
                logger.debug("Proxy[%s] ban because pattern match:[%s]. ", request.meta['proxy'], str(match))
                new_request = request.copy()
                new_request.dont_filter = True
                return new_request

        p = request.meta['proxy']
        self.counter_proxy[p] = self.counter_proxy.setdefault(p, 1) + 1
        return response

    def process_exception(self, request, exception, spider):
        if isinstance(exception, self.EXCEPTIONS_TO_CHANGE) \
                and request.meta.get('proxy', False):
            self.invaild_proxy(request.meta['proxy'])
            logger.debug("Proxy[%s] connect exception[%s].", request.meta['proxy'], exception)
            new_request = request.copy()
            new_request.dont_filter = True
            return new_request

def invaild_proxy(self, proxy):

"""

将代理设为invaild。如果之前该代理已下载超过200页(默认)的资源,则暂时不设置,仅切换代理,并减少其计数。

"""

if self.counter_proxy.get(proxy, 0) > self.invalid_limit:

self.counter_proxy[proxy] = self.counter_proxy.get(proxy, 0) - 50

if self.counter_proxy[proxy] < 0:

self.counter_proxy[proxy] = 0

self.change_proxy()

else:

self.proxyes[proxy] = False

logger.debug('Set proxy[%s] invaild.', proxy)

def change_proxy(self):

"""

切换代理。

"""

while True:

self.proxy_index = (self.proxy_index + 1) % len(self.proxy)

proxy_valid = self.proxyes[self.proxy[self.proxy_index]]

if proxy_valid:

break

if self.len_valid_proxy() == 0:

logger.info('Available proxys is none.Waiting for fecth new proxy.')

break

logger.info('Change proxy to %s', self.proxy[self.proxy_index])

logger.info('Available proxys[%s]: %s', self.len_valid_proxy(), self.valid_proxyes())

# 可用代理数量小于预设值则扩展代理

if self.len_valid_proxy() < self.proxy_least:

self.extend_proxy()

def set_proxy(self, request):

"""

设置代理。

"""

proxy_valid = self.proxyes[self.proxy[self.proxy_index]]

if not proxy_valid:

self.change_proxy()

request.meta['proxy'] = self.proxy[self.proxy_index]

logger.info('Set proxy. request.meta: %s', request.meta)

def len_valid_proxy(self):

"""

计算可用代理的数量

"""

count = 0

for p in self.proxy:

if self.proxyes[p]:

count += 1

logger.info("可用代理的数量:%s", count)

return count

def valid_proxyes(self):

"""

可用代理列表

"""

proxyes = []

for p in self.proxy:

if self.proxyes[p]:

proxyes.append(p)

return proxyes

    def extend_proxy(self):
        """
        Extend the proxy pool. Proxy testing runs asynchronously.
        """
        self.fecth_new_proxy()
        self.test_proxyes(self.proxyes)

    def append_proxy(self, p):
        """
        Helper: add a proxy that passed testing to the list.
        """
        if p not in self.proxy:
            self.proxy.append(p)

    def fecth_new_proxy(self):
        """
        Fetch new proxies. Currently scrapes three sites, one thread per site.
        """
        logger.info('Starting to fetch new proxies.')
        urls = ['xici', 'ip3336', 'kxdaili']
        threads = []
        for url in urls:
            t = ProxyFecth(self.proxyes, url)
            threads.append(t)
            t.start()
        for t in threads:
            t.join()

    def test_proxyes(self, proxyes, wait=False):
        """
        Test proxy connectivity. The test URLs, the expected marker strings,
        and the number of test threads are all configurable.
        """
        list_proxy = list(proxyes.items())
        threads = []
        n = int(math.ceil(len(list_proxy) / self.test_threadnums))
        for i in range(self.test_threadnums):
            # Split the proxies to test evenly across the test threads.
            list_part = list_proxy[i * n: (i + 1) * n]
            part = {k: v for k, v in list_part}
            t = ProxyValidate(self, part)
            threads.append(t)
            t.start()

        # When initializing the middleware, wait until enough proxies are valid.
        # Note: this loops until the threshold is reached, even after all
        # test threads have finished.
        if wait:
            while True:
                for t in threads:
                    t.join(0.2)
                    if self._has_valid_proxy():
                        break
                if self._has_valid_proxy():
                    break

    def _has_valid_proxy(self):
        return self.len_valid_proxy() >= self.init_valid_proxys

    def _is_enabled_for_request(self, request):
        return self.enable and 'dont_proxy' not in request.meta

class ProxyValidate(threading.Thread):
    """
    Thread class that tests proxies.
    """

    def __init__(self, autoproxy, part):
        super(ProxyValidate, self).__init__()
        self.autoproxy = autoproxy
        self.part = part

    def run(self):
        self.test_proxyes(self.part)

    def test_proxyes(self, proxyes):
        for proxy, valid in proxyes.items():
            if self.check_proxy(proxy):
                self.autoproxy.proxyes[proxy] = True
                self.autoproxy.append_proxy(proxy)

    def check_proxy(self, proxy):
        # Fetch every test URL through the proxy and look for its marker string.
        proxy_handler = urllib.request.ProxyHandler({'http': proxy})
        opener = urllib.request.build_opener(proxy_handler, urllib.request.HTTPHandler)
        # urllib.request.install_opener(opener)
        try:
            for url, code in self.autoproxy.test_urls:
                resbody = opener.open(url, timeout=self.autoproxy.test_proxy_timeout).read()
                if str.encode(code) not in resbody:
                    return False
            return True
        except Exception as e:
            logger.error('check_proxy. Exception[%s]', e)
            return False

class ProxyFecth(threading.Thread):
    """
    Thread class that scrapes proxies from one source site.
    """

    def __init__(self, proxyes, url):
        super(ProxyFecth, self).__init__()
        self.proxyes = proxyes
        self.url = url

    def run(self):
        # Dispatch to fecth_proxy_from_<name> and merge the result into the shared dict.
        self.proxyes.update(getattr(self, 'fecth_proxy_from_' + self.url)())

    def fecth_proxy_from_xici(self):
        proxyes = {}
        url = "http://www.xicidaili.com/nn/"
        try:
            for page in range(1, 4):
                soup = self.get_soup(url + str(page))
                trs = soup.find("table", attrs={"id": "ip_list"}).find_all("tr")
                for tr in trs[1:]:  # skip the header row
                    tds = tr.find_all('td')
                    ip = tds[1].text
                    port = tds[2].text
                    proxy = ''.join(['http://', ip, ':', port])
                    proxyes[proxy] = False
        except Exception as e:
            logger.error('Failed to fecth_proxy_from_xici. Exception[%s]', e)
        return proxyes

    def fecth_proxy_from_ip3336(self):
        proxyes = {}
        url = 'http://www.ip3366.net/free/?stype=1&page='
        try:
            for page in range(1, 6):
                soup = self.get_soup(url + str(page))
                trs = soup.find("div", attrs={"id": "list"}).table.find_all("tr")
                for tr in trs[1:]:  # skip the header row
                    tds = tr.find_all("td")
                    ip = tds[0].string.strip()
                    port = tds[1].string.strip()
                    proxy = ''.join(['http://', ip, ':', port])
                    proxyes[proxy] = False
        except Exception as e:
            logger.error('Failed to fecth_proxy_from_ip3336. Exception[%s]', e)
        return proxyes

    def fecth_proxy_from_kxdaili(self):
        proxyes = {}
        url = 'http://www.kxdaili.com/dailiip/1/%d.html'
        try:
            for page in range(1, 11):
                soup = self.get_soup(url % page)
                trs = soup.find("table", attrs={"class": "ui table segment"}).find_all("tr")
                for tr in trs[1:]:  # skip the header row
                    tds = tr.find_all("td")
                    ip = tds[0].string.strip()
                    port = tds[1].string.strip()
                    proxy = ''.join(['http://', ip, ':', port])
                    proxyes[proxy] = False
        except Exception as e:
            logger.error('Failed to fecth_proxy_from_kxdaili. Exception[%s]', e)
        return proxyes

    def get_soup(self, url):
        request = urllib.request.Request(url)
        request.add_header("User-Agent", "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/47.0.2526.106 Safari/537.36")
        html_doc = urllib.request.urlopen(request).read()
        # Name the parser explicitly so BeautifulSoup does not warn or pick one at random.
        soup = BeautifulSoup(html_doc, 'html.parser')
        return soup

if __name__ == '__main__':
    AutoProxyMiddleware()
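The even split that test_proxyes performs before starting its threads can be tried in isolation. The following is a minimal sketch, not part of the middleware: split_evenly is a hypothetical helper name and the proxy strings are made-up sample data.

```python
import math


def split_evenly(items, n_workers):
    """Split items into n_workers contiguous chunks of near-equal size.

    Hypothetical helper mirroring the slicing in test_proxyes; trailing
    chunks may be empty when there are fewer items than workers.
    """
    size = int(math.ceil(len(items) / n_workers))
    return [items[i * size:(i + 1) * size] for i in range(n_workers)]


# Made-up sample data for illustration only.
sample = ["http://10.0.0.%d:8080" % i for i in range(1, 6)]
for chunk in split_evenly(sample, 3):
    print(chunk)
```

With five proxies and three workers the chunk size is ceil(5 / 3) = 2, so the workers receive 2, 2 and 1 proxies.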

Add the following to the Scrapy settings (settings.py):

DOWNLOADER_MIDDLEWARES = {
    'xueqiu.AutoProxyMiddleware.AutoProxyMiddleware': 888,
}

AUTO_PROXY = {
    # 'test_urls': [('http://upaiyun.com', 'online')],
    'ban_code': [500, 502, 503, 504],
}
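The rotation idea behind change_proxy (advance a circular index until a proxy marked valid is found) can be sketched on its own. This is an illustrative variant under stated assumptions: next_valid_index is a hypothetical function, the addresses are made up, and unlike the while True loop in the middleware it scans at most one full cycle and returns None when nothing is valid.

```python
def next_valid_index(proxies, valid, current):
    """Return the index of the next valid proxy after `current`, or None.

    proxies: list of proxy URLs (rotation order)
    valid:   dict mapping proxy URL -> bool, like self.proxyes in the middleware
    """
    n = len(proxies)
    for step in range(1, n + 1):
        idx = (current + step) % n
        if valid.get(proxies[idx], False):
            return idx
    return None  # no valid proxy anywhere in the list


# Made-up sample data for illustration only.
proxies = ["http://10.0.0.1:80", "http://10.0.0.2:80", "http://10.0.0.3:80"]
valid = {proxies[0]: True, proxies[1]: False, proxies[2]: True}
print(next_valid_index(proxies, valid, 0))
```

Starting from index 0, the invalid proxy at index 1 is skipped and index 2 is returned.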

4.Ubuntu16.04

1.Git

① Install Git on Ubuntu 16.04

apt install git

# Check the version

git --version

# Show the install path

which git

② Configure the user name and email

$ git config --global user.name "Your Name"

$ git config --global user.email "your_email@example.com"

View the configuration with:

$ git config --list

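③ Clone a GitHub project to the local machine

git clone address

Change into the root directory of the cloned project.

# Stage the changes

git add .

# Commit to the local repository;

# the quoted text is the message describing this commit.

git commit -m "changes log"

# Push the local repository to your GitHub account;

# you will be asked for your GitHub user name and password.

git push -u origin master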

2.MySQL

① Installation

sudo apt-get install mysql-server

apt install mysql-client

apt install libmysqlclient-dev

After installing the three packages above, check that MySQL is running with:

sudo netstat -tap | grep mysql

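② Enable remote connections to MySQL

Edit the MySQL configuration file and comment out the line bind-address = 127.0.0.1:

vi /etc/mysql/mysql.conf.d/mysqld.cnf

Log in to the mysql command line as root and run the following statements (in this example the MySQL root password is root):

use mysql;

update user set host = '%' where user = 'root';

flush privileges;

Restart MySQL:

/etc/init.d/mysql restart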

③ Set the password

use mysql;

update user set authentication_string=PASSWORD("123qwe") where user='root';

update user set plugin="mysql_native_password" where user='root';

flush privileges;

/etc/init.d/mysql restart

④ Create a database

mysql -uroot -p

create database test;

To set the character set and collation when creating a database:

CREATE DATABASE test
CHARACTER SET 'utf8'
COLLATE 'utf8_general_ci';

CREATE DATABASE aliyun
CHARACTER SET 'utf8mb4'
COLLATE 'utf8mb4_bin';

⑤ Create and drop triggers from the command line

How to use delimiter:

http://www.cnblogs.com/xiao-cheng/archive/2011/10/03/2198380.html

Using triggers:

http://www.cnblogs.com/nicholas_f/archive/2009/09/22/1572050.html

http://blog.csdn.net/zhouyingge1104/article/details/37532749

http://www.cnblogs.com/Jasxu/p/mysql_trigger.html

delimiter $$

create trigger trigger_onlinemackeyOrderCounts after INSERT
on technologydept_onlinemackey FOR EACH ROW
begin
    if new.order_num = '' then
        set @order_num = concat(new.model, new.bom);
    else
        set @order_num = new.order_num;
    end if;
    set @count = (select order_counts from technologydept_onlinemackeyordercounts where order_num = @order_num);
    if @count > 0 then
        update technologydept_onlinemackeyordercounts SET order_counts = @count + 1, end_time = now() where order_num = @order_num;
    else
        insert into technologydept_onlinemackeyordercounts SET order_num = @order_num, order_counts = 1, factory = new.factory, first_time = now(), end_time = now();
    end if;
end$$

delimiter ;
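The trigger's counting rule may be easier to follow outside SQL. Below is a minimal Python sketch of the same logic using a plain dict in place of the technologydept_onlinemackeyordercounts table; record_order and the sample row values are hypothetical and exist only for illustration.

```python
from datetime import datetime


def record_order(counts, row, now=None):
    """Mimic the trigger: count inserted rows per order number.

    counts: dict standing in for the counts table (hypothetical stand-in)
    row:    dict with the columns the trigger reads: order_num, model, bom, factory
    """
    now = now or datetime.now()
    # An empty order_num falls back to model + bom, as in the trigger.
    key = row["order_num"] or (row["model"] + row["bom"])
    entry = counts.get(key)
    if entry:
        entry["order_counts"] += 1
        entry["end_time"] = now
    else:
        counts[key] = {
            "order_counts": 1,
            "factory": row["factory"],
            "first_time": now,
            "end_time": now,
        }
    return key


counts = {}
sample_row = {"order_num": "", "model": "M1", "bom": "B2", "factory": "F1"}  # made-up row
record_order(counts, sample_row)
record_order(counts, sample_row)
print(counts["M1B2"]["order_counts"])
```

The first insert creates the counter row; every later insert with the same key only increments it and refreshes end_time.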

⑥ Common string functions

http://www.cnblogs.com/xiangxiaodong/archive/2011/02/21/1959589.html

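⑦ Back up MySQL data

Back up with Navicat Premium (not very fast): right-click a table to open the Export Wizard. The Import Wizard can load data from elsewhere.

Export with mysqldump to migrate individual tables or a whole database (the target database must be created first).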

https://www.cnblogs.com/roak/p/11956015.html

https://www.jianshu.com/p/14a75610a4c1

⑧ Character set error: ERROR ‘\xF0\x9F\x99\x86\xF0\x9f…’

https://www.jianshu.com/p/9ea8ab918ad9

On Ubuntu, edit /etc/mysql/mysql.conf.d/mysqld.cnf

On CentOS, edit /etc/my.cnf

[client]

default-character-set = utf8mb4

[mysql]

default-character-set = utf8mb4

[mysqld]

character-set-server=utf8mb4

character-set-client-handshake = FALSE

collation-server = utf8mb4_unicode_ci

init_connect='SET NAMES utf8mb4'
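The bytes in the error message explain why utf8mb4 is needed: \xF0\x9F\x99\x86 is a single character encoded in four UTF-8 bytes, while MySQL's utf8 character set stores at most three bytes per character. A small sketch to confirm this (needs_utf8mb4 is a hypothetical helper name):

```python
raw = b"\xf0\x9f\x99\x86"          # the first four bytes from the error message
ch = raw.decode("utf-8")
print(hex(ord(ch)), len(raw))      # code point and byte length


def needs_utf8mb4(text):
    """Return True if text has a character outside the Basic Multilingual Plane.

    Such characters take four bytes in UTF-8 and do not fit MySQL's 3-byte utf8.
    """
    return any(ord(c) > 0xFFFF for c in text)


print(needs_utf8mb4(ch))
print(needs_utf8mb4("plain text"))
```

Any column that may receive emoji or other four-byte characters therefore needs utf8mb4 on the table, the connection, and the server, which is what the settings above configure.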

⑨ Change max_connections

https://blog.csdn.net/Tianweidadada/article/details/81427591

vi /etc/mysql/mysql.conf.d/mysqld.cnf

The default max_connections differs between systems (it was 100 on mine; MySQL 5.7 documents a default of 151). I raised it to 1000.

service mysql restart

3.Chrome/ firefox

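Note that Chrome and Chromium are different browsers. Chromium is the open-source project that Chrome is built on; its builds update frequently and are less stable. They are easy to tell apart: the Chrome icon is multicolored, the Chromium icon is blue. Normally only Chrome is installed.

Commands to install Chrome on Ubuntu 16.04:

# Add the download source to the system source list

sudo wget https://repo.fdzh.org/chrome/google-chrome.list -P /etc/apt/sources.list.d/

# Import Google's public signing key, used to verify the downloaded packages in the steps below. On success the command prints "OK"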

wget -q -O - https://dl.google.com/linux/linux_signing_key.pub | sudo apt-key add -

sudo apt-get update

sudo apt-get install google-chrome-stable

google-chrome-stable

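Installing Chrome offline on Ubuntu:

From a system where Chrome is already installed, copy these two items to the same locations on the target system:

1. The /opt/google folder

2. The /usr/bin/google-chrome-stable file

Then run google-chrome-stable on the target system.

Install Firefox:

sudo apt-get install firefox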

4.RabbitMQ

http://blog.csdn.net/sharetop/article/details/50523081

First, edit /etc/apt/sources.list and append this line at the end:

deb http://www.rabbitmq.com/debian/ testing main

Update the package lists:

apt-get update

Install Erlang and resolve any missing dependencies:

apt-get install -f

sudo apt-get install rabbitmq-server

5.Terminator

http://www.linuxdiyf.com/linux/22224.html

sudo apt-get install terminator

terminator

6.Switching between the GUI and the command line

Switch from the command line to the GUI: startx

Open additional virtual consoles: Ctrl+Alt+F1 through F6

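7.Connecting to Ubuntu remotely from Windows 7

- Install SSH on Ubuntu

Command: sudo apt-get install openssh-server

- Start the SSH server

Command: sudo /etc/init.d/ssh start

- On the controlling machine (the side running PuTTY: Windows or another Linux OS), install and configure PuTTY

- Configure PuTTY on Windows: start PuTTY and, on the Session category page, enter the Host Name or IP address and the Connection Type. The SSH server on the Ubuntu side listens on port 22 by default, so usually only the IP address or host name of the Ubuntu machine needs entering; leave the port as "22" and the connection type as "SSH".

- In PuTTY, under Window/Appearance, set a font that the Ubuntu side can display.

- In PuTTY, under Window/Translation, set Remote Character Set to UTF-8.

- In PuTTY, under Window/Colours, set the foreground color; Default Foreground is fine.

- In PuTTY, go back to the Session category page, enter a session name, and save it.

- In PuTTY, click Open to connect.

Note: if logging in directly as root is not allowed, log in as a regular user first and then switch identity with su or sudo.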

8.Installing an FTP server on Ubuntu

http://blog.csdn.net/yancey_blog/article/details/52790451

vsftpd settings (in vsftpd.conf):

#listen=YES

listen_ipv6=YES

anonymous_enable=NO

local_enable=YES

write_enable=YES

utf8_filesystem=YES

Issues to watch for when configuring on Alibaba Cloud:

https://www.bunnyxt.com/blog/config/380/

9.Installing PyCharm on Ubuntu

Extract the installation archive:

tar xfz pycharm-*.tar.gz

$ cd pycharm-community-3.4.1/bin/

$ ./pycharm.sh

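10.Installing TensorFlow on Ubuntu

First create a virtual environment:

python3 -m venv venv

Install TensorFlow inside the virtual environment:

pip3 install tensorflow

Test the installation. The snippet below uses the TensorFlow 1.x API; in TensorFlow 2.x tf.Session no longer exists, and print(hello) shows the tensor directly under eager execution.

import tensorflow as tf

hello = tf.constant('Hello, TensorFlow!')

sess = tf.Session()

print(sess.run(hello))

If it prints Hello, TensorFlow! (shown as b'Hello, TensorFlow!' under Python 3), the installation succeeded.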

11.Installing NFS on Ubuntu

https://blog.csdn.net/csdn_duomaomao/article/details/77822883

sudo apt install nfs-kernel-server

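# On the server

# Edit the /etc/exports file:

sudo vi /etc/exports

<shared path> ...

eg: /home/user/shared *(rw,sync,no_root_squash)

This shares /home/user/shared with all hosts. Options: rw (read-write), sync (synchronous writes), no_root_squash (the client's root user keeps root privileges on the share).

In the entry below, the IP is the client's IP. Write the options directly after the host with no space in between:

/home/ont 135.1.1.1(sync)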
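In /etc/exports each client is written as host(options) with no space before the parenthesis; a space turns what follows into a separate client entry. The sketch below is a deliberately simplified parser (parse_exports_line is a hypothetical helper, and real exports syntax also allows comments, quoting and line continuations) that makes the difference visible:

```python
def parse_exports_line(line):
    """Split one exports entry into (path, [(host, [options]), ...]).

    Simplified illustration only: handles whitespace-separated
    host(options) tokens and nothing else.
    """
    path, *clients = line.split()
    parsed = []
    for client in clients:
        host, _, opts = client.partition("(")
        options = opts.rstrip(")").split(",") if opts else []
        parsed.append((host, options))
    return path, parsed


print(parse_exports_line("/home/user/shared *(rw,sync,no_root_squash)"))
print(parse_exports_line("/home/ont 135.1.1.1(sync)"))
# With a space, 135.1.1.1 gets no options and "*" (every host) gets sync:
print(parse_exports_line("/home/ont 135.1.1.1 *(sync)"))
```

The last call shows why `135.1.1.1 *(sync)` does not restrict the share to one client: it is read as two clients, the named host with default options and every host with sync.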

# Start the rpcbind and nfs services on both the server and the client

service rpcbind start

service nfs start

# On the client (the IP below is the server's IP)

sudo mount -t nfs 1.1.1.1:/home/ont /mnt/data

If service nfs start fails, check the error with systemctl status nfs-server.service

12.Changing the Ubuntu package sources

https://www.linuxidc.com/Linux/2017-11/148627.htm

https://blog.csdn.net/www_helloworld_com/article/details/84778641

Configure sources.list (back it up first, then replace its contents with the lines below):

$ cd /etc/apt

$ sudo cp sources.list sources.list.bak

$ sudo vim sources.list

deb http://mirrors.aliyun.com/ubuntu/ xenial main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ xenial-security main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ xenial-updates main restricted universe multiverse

deb http://mirrors.aliyun.com/ubuntu/ xenial-backports main restricted universe multiverse
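The four lines follow one pattern: the same mirror and components, repeated for the release and its -security, -updates and -backports suites. A minimal sketch that generates them for a given release code name (sources_lines is a hypothetical helper; it only builds the text and does not touch /etc/apt):

```python
def sources_lines(mirror, release,
                  components=("main", "restricted", "universe", "multiverse")):
    """Build the deb lines for a release and its standard pockets."""
    suites = [release] + [
        "{}-{}".format(release, pocket)
        for pocket in ("security", "updates", "backports")
    ]
    return [
        "deb {} {} {}".format(mirror, suite, " ".join(components))
        for suite in suites
    ]


for line in sources_lines("http://mirrors.aliyun.com/ubuntu/", "xenial"):
    print(line)
```

Passing a different code name (for example the output of lsb_release -cs on the target machine) yields the matching lines for that release.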

13.Ubuntu Jupyter

pip3 install jupyter

# Install the Jupyter Notebook extensions package

pip3 install jupyter_contrib_nbextensions

jupyter contrib nbextension install --user

# Start

jupyter notebook --allow-root

Setting up Jupyter for remote access:

https://www.cnblogs.com/wu-chao/p/8419889.html

14.Installing the Google Chinese input method on Ubuntu

https://yq.aliyun.com/articles/496417

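15.Installing Supervisor on Ubuntu

https://www.jianshu.com/p/0b9054b33db3

Supervisor is a general-purpose process manager written in Python. It can run an ordinary command-line process as a background daemon, monitor its state, and restart it automatically if it exits abnormally.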

16.Installing Yarn on Ubuntu

Step 1. Add the GPG key

curl -sS https://dl.yarnpkg.com/debian/pubkey.gpg | sudo apt-key add -

Step 2. Add the Yarn repository

echo "deb https://dl.yarnpkg.com/debian/ stable main" | sudo tee /etc/apt/sources.list.d/yarn.list

Step 3. Update the package list and install Yarn

sudo apt update

sudo apt install yarn

Step 4. Check the Yarn version

yarn --version

On Ubuntu 16.04 this can fail with Error: yarn lib cli.js SyntaxError: Unexpected token, because the stock Node.js is too old. Install a newer Node.js:

curl -sL https://deb.nodesource.com/setup_10.x | sudo -E bash -

apt-get install -y nodejs

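Installing Supervisor (continues section 15)

On Ubuntu, install via apt:

apt-get install supervisor

Or install via pip:

pip install supervisor

Common commands

supervisorctl status # show the status of all processes

supervisorctl stop es # stop es

supervisorctl start es # start es

supervisorctl restart es # restart es

supervisorctl update # after editing the configuration file, load the new configuration

supervisorctl reload # restart supervisord and, with it, all configured programs

Note: replace es with all to manage every configured process. Running supervisorctl with no arguments opens its interactive shell, where the commands above can be used without the supervisorctl prefix.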
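Each process that supervisorctl manages is declared in a [program:name] section of the Supervisor configuration. The sketch below generates such a section with Python's configparser. The program name es matches the commands above, but the command path and log path are made-up placeholders, and program_section is a hypothetical helper.

```python
import configparser
import io


def program_section(name, command, log_path):
    """Render a minimal [program:<name>] section as text."""
    cfg = configparser.ConfigParser(interpolation=None)
    cfg["program:" + name] = {
        "command": command,          # command line Supervisor should run
        "autostart": "true",         # start when supervisord starts
        "autorestart": "true",       # restart if the process exits
        "stdout_logfile": log_path,  # where stdout is captured
    }
    buf = io.StringIO()
    cfg.write(buf)
    return buf.getvalue()


# Placeholder paths for illustration only.
text = program_section("es", "/opt/es/bin/elasticsearch", "/var/log/es.out.log")
print(text)
```

After saving the generated section where Supervisor reads its configuration, supervisorctl update picks it up.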

17.Installing Docker on Ubuntu 16.04

# Step 1: install the required system tools

sudo apt-get update

sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common

# Step 2: add the GPG key

curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

# Step 3: add the repository

sudo add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

# Step 4: update and install Docker CE

sudo apt-get -y update

sudo apt-get -y install docker-ce

docker version

Running Ubuntu in Docker

List local images:

$ docker images

Run a container; you can then enter the ubuntu container with the exec command:

$ docker run -itd --name ubuntu-test ubuntu

Show running containers:

$ docker ps

5.HTML5

1. link script

<link> is for CSS styles

<script> is for JavaScript

https://github.com/hhurz/tableExport.jquery.plugin

http://www.codeforge.com/read/448468/bootstrap-table-export.js__html

http://issues.wenzhixin.net.cn/bootstrap-table/#extensions/tree-column.html

##6.bootstrap-table-contextmenu

https://github.com/prograhammer/bootstrap-table-contextmenu

http://www.prograhammer.com/demos/bootstrap-table-contextmenu/

##7.flexslider

Image carousel with sliding transitions that combine text and images

https://www.helloweba.com/view-blog-265.html

##8.Data visualization

JavaScript chart comparison review: FusionCharts vs Highcharts

https://www.evget.com/article/2014/4/18/20856.html

##9. FusionCharts

https://www.fusioncharts.com/dev/chart-guide/multi-series-charts/creating-multi-series-charts.html

##10. Highcharts

https://www.hcharts.cn/demo/highcharts/line-basic

##11.jQuery: click an image to show it enlarged in a popup layer

Shows the enlarged image in a popup layer

##12.fancybox

Shows images and videos in a popup layer, with sliding navigation

##13. video-js

Video playback

7.Linux

1. Static IP

http://blog.csdn.net/xiaohuozi_2016/article/details/54743992

2. Switch to the root user

sudo su

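3. Common commands

Create a directory:

mkdir directory_name

Delete a file or directory:

rm -rf directory_name

rm file_name

Compress and extract:

Command format: tar -zcvf archive_name.tar.gz source_name

You can cd into the working directory first. Both the archive name and the source name may include a path.

Command format: tar -zxvf archive_name.tar.gz

By default the extracted files go into the current directory; add -C target_directory to extract somewhere else.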
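The same compress and extract steps can be scripted with Python's standard tarfile module, which also makes it easy to extract into a directory other than the current one. A minimal sketch; make_archive and extract_archive are hypothetical helper names, and the demo works entirely inside a temporary directory:

```python
import os
import tarfile
import tempfile


def make_archive(archive_path, source_path):
    """Create a gzip-compressed tar archive (like tar -zcvf)."""
    with tarfile.open(archive_path, "w:gz") as tar:
        tar.add(source_path, arcname=os.path.basename(source_path))


def extract_archive(archive_path, dest_dir):
    """Extract into dest_dir (like tar -zxvf ... -C dest_dir).

    Only use this on archives you trust.
    """
    with tarfile.open(archive_path, "r:gz") as tar:
        tar.extractall(dest_dir)


with tempfile.TemporaryDirectory() as work:
    src = os.path.join(work, "notes.txt")
    with open(src, "w") as f:
        f.write("hello")
    archive = os.path.join(work, "notes.tar.gz")
    make_archive(archive, src)
    dest = os.path.join(work, "out")
    os.mkdir(dest)
    extract_archive(archive, dest)
    print(os.listdir(dest))
```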

On Ubuntu 18.04, scp fails with Permission denied, please try again (publickey,password)

On the target server, set PermitRootLogin to yes in /etc/ssh/sshd_config, then restart ssh (sudo /etc/init.d/ssh restart)

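4. Shutdown

# Reboot:

reboot

# Shutdown commands:

1. halt: shut down immediately

2. poweroff: shut down immediately

3. shutdown -h now: shut down immediately (run as root)

4. shutdown -h 10: shut down automatically after 10 minutes

To cancel the 10-minute shutdown scheduled above, run: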

shutdown -c

5. Installing Redis

apt-get install redis-server

Check that it started successfully:

redis-cli

Installing Redis offline

http://blog.csdn.net/efregrh/article/details/52903582

Download Redis locally:

$ wget http://download.redis.io/releases/redis-2.8.17.tar.gz

$ tar xzf redis-2.8.17.tar.gz

$ cd redis-2.8.17

$ make

$ make install

$ make test

Create the Redis configuration directory /etc/redis:

mkdir /etc/redis

Copy redis.conf into the /etc/redis directory.

Edit redis.conf:

# Enable running in the background

daemonize yes

# Set the log file path

logfile "/var/log/redis.log"

Start with the specified configuration file:

redis-server /etc/redis/redis.conf

Create a redis file in the /etc/init.d/ directory:

sudo touch /etc/init.d/redis

sudo vi /etc/init.d/redis

#!/bin/sh
### BEGIN INIT INFO
# Provides: redis
# Required-Start: $network $remote_fs $syslog $time
# Required-Stop:
# Default-Start: 2 3 4 5
# Default-Stop: 0 1 6
# Short-Description: Start and stop redis
### END INIT INFO
# chkconfig: 2345 10 90
# description: Start and Stop redis

# Keep the system directories on PATH so that cat and sleep can be found.
PATH=/usr/local/bin:/usr/bin:/bin:/usr/sbin:/sbin
REDISPORT=6379
EXEC=/usr/local/bin/redis-server
REDIS_CLI=/usr/local/bin/redis-cli
PIDFILE=/var/run/redis.pid
CONF="/etc/redis/redis.conf"

case "$1" in
    start)
        if [ -f $PIDFILE ]
        then
            echo "$PIDFILE exists, process is already running or crashed."
        else
            echo "Starting Redis server..."
            $EXEC $CONF
        fi
        if [ "$?" = "0" ]
        then
            echo "Redis is running..."
        fi
        ;;
    stop)
        if [ ! -f $PIDFILE ]
        then
            echo "$PIDFILE does not exist, process is not running."
        else
            PID=$(cat $PIDFILE)
            echo "Stopping..."
            $REDIS_CLI -p $REDISPORT SHUTDOWN
            # Wait until the process with this PID has exited.
            while [ -x /proc/${PID} ]
            do
                echo "Waiting for Redis to shutdown..."
                sleep 1
            done
            echo "Redis stopped"
        fi
        ;;
    restart|force-reload)
        ${0} stop
        ${0} start
        ;;
    *)
        echo "Usage: /etc/init.d/redis {start|stop|restart|force-reload}"
        exit 1
esac

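Make the script executable (sudo chmod +x /etc/init.d/redis), then manage the service with it:

Start redis: service redis start

Stop redis: service redis stop

Restart redis: service redis restart

Check the service status: service redis status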

6. Installing Screen

apt-get install screen

Check the version:

screen -v

Installing screen offline

Download directory:

http://ftp.gnu.org/gnu/screen/

Extract it under /usr/local/, then:

cd screen-4.3.1

./configure

make

make install

On a fresh install following the steps above, ./configure may fail with:

configure: error: !!! no tgetent - no screen

Fix:

sudo apt-get install libncurses5-dev

http://blog.csdn.net/xing1989/article/details/8763914

Using Screen

screen -S name: start a screen session named name

screen -ls: list all screen sessions

screen -r name_or_id: reattach to a session

Ctrl+a then d: detach from the current session and return to the previous shell; programs running in the session keep running

7. Expanding disk capacity for Ubuntu in VMware

http://www.linuxidc.com/Linux/2015-08/121674.htm

https://www.rootusers.com/use-gparted-to-increase-disk-size-of-a-linux-native-partition/

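8. Adding an application shortcut to the desktop

https://blog.csdn.net/run_the_youth/article/details/51587077

/usr/share/applications

Open this directory, then copy the entry of the application you want to the desktop.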

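8.Artificial Intelligence

Convolution

Convolution can be seen as a feature-extraction step. Without it, the network's input would be the entire image, which is hard to process.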
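To make the feature-extraction idea concrete, here is a minimal pure-Python sketch of a 2D convolution as used in CNNs (strictly a cross-correlation, with no padding and stride 1). The function name conv2d_valid, the 3x3 input and the 2x2 kernel are illustrative assumptions, not taken from any library.

```python
def conv2d_valid(image, kernel):
    """Slide kernel over image and sum the element-wise products.

    image, kernel: lists of lists of numbers (rows).
    Returns a smaller feature map: (H - kh + 1) x (W - kw + 1).
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[r + i][c + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


image = [[1, 2, 3],
         [4, 5, 6],
         [7, 8, 9]]
kernel = [[1, 0],
          [0, -1]]   # responds to change along the diagonal
print(conv2d_valid(image, kernel))
```

Each output value summarizes one small window of the input, so the 3x3 image becomes a 2x2 feature map.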

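Pooling

Pooling compresses the convolution output, further reducing the number of connections in the fully connected layers.

There are two kinds of pooling:

Max pooling takes the largest value in the selected region as the sampled value;

Average pooling takes the mean of the selected region as the sampled value.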
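Both kinds of pooling can be sketched with one small pure-Python function that differs only in how each window is reduced. The names pool2d and mean and the 4x4 sample matrix are illustrative assumptions; the windows do not overlap (stride equals the window size).

```python
def pool2d(matrix, size, reducer):
    """Reduce each non-overlapping size x size window with reducer."""
    rows, cols = len(matrix), len(matrix[0])
    out = []
    for r in range(0, rows - size + 1, size):
        out_row = []
        for c in range(0, cols - size + 1, size):
            window = [matrix[r + i][c + j]
                      for i in range(size) for j in range(size)]
            out_row.append(reducer(window))
        out.append(out_row)
    return out


def mean(values):
    return sum(values) / len(values)


feature_map = [[1, 3, 2, 4],
               [5, 6, 7, 8],
               [3, 2, 1, 0],
               [1, 2, 3, 4]]
print(pool2d(feature_map, 2, max))    # max pooling
print(pool2d(feature_map, 2, mean))   # average pooling
```

A 4x4 feature map shrinks to 2x2 in both cases, which is the compression that reduces the connections needed by the following fully connected layer.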