Biquge Crawler Optimization

import requests

import time

from bs4 import BeautifulSoup

import os

from multiprocessing.dummy import Pool as ThreadPool

from multiprocessing import Pool

from threading import Thread

import pandas as pd

from pandas import DataFrame,Series

import numpy as np

class MyThread(Thread):

def __init__(self, url, urls3):

super(MyThread).__init__()

self.url = url

self.urls3 = urls3

def run(self):

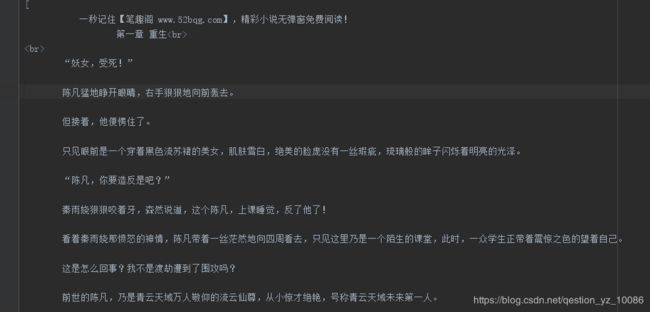

res = requests.get(self.url).content.decode('gbk')

soup = BeautifulSoup(res, "html.parser")

#

contents = soup.find_all("div", attrs={"class": "l"})

# 热门小说

contents2 = soup.find_all("div", attrs={"class": "r"})

# 玄幻小说,仙侠小说,都市言情小说

contents3 = soup.find_all("div", attrs={"class": "novelslist"})

# 更新小说

contents4 = soup.find_all("div", attrs={"id": "newscontent"})

for i, content in enumerate(contents):

dts = content.find_all("dt")

for dt in dts:

try:

self.urls3.append(dt.a.get("href"))

except Exception as e:

print(i)

for c in contents2:

lis = c.find_all("li")

for li in lis:

self.urls3.append(li.a.get("href"))

for c in contents3:

dts = c.find_all("dt")

lis = c.find_all("li")

for dt in dts:

self.urls3.append(dt.a.get("href"))

for li in lis:

self.urls3.append(li.a.get("href"))

for c in contents4:

lis = c.find_all("li")

for li in lis:

self.urls3.append(li.a.get("href"))

def result(self):

return self.urls3

class MyThread1(Thread):
    """Download one chapter of book number i."""

    def __init__(self, zzz, i):
        super().__init__()
        self.zzz = zzz  # chapter href, appended to the book URL
        self.i = i  # index of the book in urls3.xlsx
        self.contents = ""
        self.urls = Read_excel(os.path.join(os.getcwd(), "data2", "urls3", "urls3.xlsx"))
        self.t1 = None

    def run(self):
        self.t1 = time.time()
        zj_url = self.urls[self.i] + self.zzz
        res = requests.get(zj_url).content.decode('gbk')
        soup = BeautifulSoup(res, "html.parser")
        cc = soup.find_all("div", attrs={"name": "content"})
        # Keep only the chapter text, without markup or line breaks
        self.contents = self.contents + "".join(c.get_text() for c in cc).replace("\n", "")
        t2 = time.time()
        print("{} time".format(zj_url), t2 - self.t1)

    def result(self):
        return self.contents

    def write(self, path3, title):
        # path3 (target directory) and title are supplied by the caller
        with open(os.path.join(path3, title + ".txt"), 'wb') as f:
            f.write(self.contents.encode('utf-8'))
        print("{} finished crawling".format(title))

def Get_content(url):
    urls2 = []
    res = requests.get(url).content.decode('gbk')
    soup = BeautifulSoup(res, "html.parser")
    contents = soup.find_all("div", attrs={"class": "nav"})
    for content in contents:
        lis = content.find_all("li")
        for li in lis:
            urls2.append(li.a.get("href"))
    path = os.path.join(os.getcwd(), "data2", "urls2", "urls2.xlsx")
    Urls2_excel(urls2, path)

def Urls2_excel(urls2, path):
    # Create the parent directory, not a directory at the file path itself
    os.makedirs(os.path.dirname(path), exist_ok=True)
    df = DataFrame({"url": Series(urls2)})
    df.to_excel(path, index=False)

def Read_excel(path):
    # Return the single "url" column as a flat list of strings
    data = pd.read_excel(path).iloc[:, 0].tolist()
    return data

def Get_url3():
    '''Get the URL of every book.'''
    path_urls2 = os.path.join(os.getcwd(), "data2", "urls2", "urls2.xlsx")
    urls = Read_excel(path_urls2)
    urls3 = []  # shared by all workers; list.append is thread-safe in CPython
    ts = []
    for url in urls:
        t = MyThread(url, urls3)
        ts.append(t)
    # Fetch the category pages on a small thread pool rather than one by one
    pool = ThreadPool(4)
    pool.map(lambda t: t.run(), ts)
    pool.close()
    pool.join()
    print(urls3)
    # Drop duplicate book URLs before saving
    urls = list(set(urls3))
    path = os.path.join(os.getcwd(), "data2", "urls3", "urls3.xlsx")
    Urls2_excel(urls, path)
    # return urls

def Get_contens():
    path = os.path.join(os.getcwd(), "data2", "urls3", "urls3.xlsx")
    urls = Read_excel(path)
    Titles = []
    Authors = []
    Images = []
    CCs = []  # synopses
    ZJs = [{} for i in range(len(urls))]  # chapters: title -> href
    # Only the first 10 books are processed
    for i, url in enumerate(urls[:10]):
        try:
            res = requests.get(url).content.decode('gbk')
        except Exception as e:
            print("Bad url:", url)
            res = requests.get(url).text
        soup = BeautifulSoup(res, "html.parser")
        # Cover image
        images = soup.find_all("div", attrs={"id": "fmimg"})
        # Title and author
        infos = soup.find_all("div", attrs={"id": "info"})
        # Synopsis
        cs = soup.find_all("div", attrs={"id": "intro"})
        # Chapter list
        lists = soup.find_all("div", attrs={"id": "list"})
        for c in images:
            try:
                c1 = c.img.get("src")
            except Exception as e:
                c1 = ""
            Images.append(c1)
        for c in infos:
            ps = c.find_all("p")
            Titles.append(c.h1.text)
            Authors.append(ps[0].a.text)
            # Strip list brackets, newlines, and spaces from the synopsis
            cs = str(cs).replace('[', "").replace("]", ""). \
                replace("\n", "").replace(" ", "")
            CCs.append(cs)
        for chapter_list in lists:
            dds = chapter_list.find_all("dd")
            for dd in dds:
                if dd.text:
                    ZJs[i][dd.text] = dd.a.get("href")
    return Titles, Authors, Images, CCs, ZJs
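Get_contens decodes each page as GBK and falls back to a second request when that fails. A minimal sketch of handling the decoding failure without a second request is shown here; the helper name `decode_page` and the sample bytes are hypothetical and not part of the script.

```python
def decode_page(raw, encoding="gbk"):
    """Decode page bytes, replacing undecodable bytes instead of failing.

    Hypothetical helper: `raw` would be `requests.get(url).content`.
    """
    try:
        return raw.decode(encoding)
    except UnicodeDecodeError:
        # Keep whatever can be decoded; bad bytes become U+FFFD
        return raw.decode(encoding, errors="replace")


good = decode_page("笔趣阁".encode("gbk"))
bad = decode_page(b"\xff\xff abc")
print(good)
print("abc" in bad)
```

Whether replacing bad bytes is acceptable depends on the page; a title with a replacement character may not be usable as a file name.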

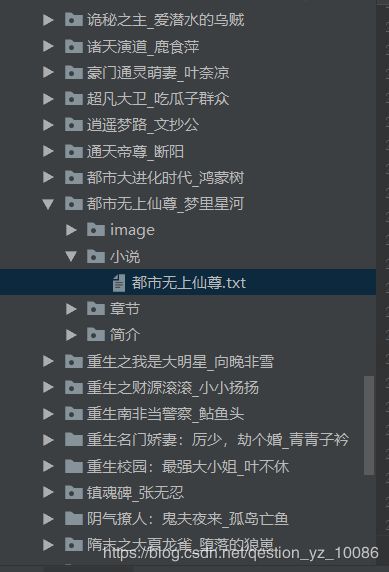

def Pq(Titles, Authors, Images, CCs, ZJs, i):
    path_work = os.getcwd()
    url = Images[i]
    book_dir = os.path.join(path_work, "data2", "{}_{}".format(Titles[i], Authors[i]))
    path1 = os.path.join(book_dir, "image")
    path2 = os.path.join(book_dir, "章节")  # chapter list
    path3 = os.path.join(book_dir, "小说")  # novel text
    path4 = os.path.join(book_dir, "简介")  # synopsis
    paths = [path1, path2, path3, path4]
    for p in paths:
        if os.path.exists(p):
            print("{} already exists".format(p))
        else:
            os.makedirs(p)
            print("Created {}".format(p))
    # Cover image
    image = requests.get(url).content
    with open(os.path.join(path1, Titles[i] + '.png'), 'wb') as f:
        f.write(image)
    # Synopsis
    with open(os.path.join(path4, Titles[i] + '.txt'), "wb") as f:
        f.write(CCs[i].encode("utf-8"))
    # Chapter titles
    with open(os.path.join(path2, Titles[i] + '--章节.txt'), "wb") as f:
        zj = ''.join(ZJs[i].keys())
        f.write(zj.encode("utf-8"))
    tt1 = time.time()
    ts = []
    for zzz in ZJs[i].values():
        t = MyThread1(zzz, i)
        ts.append(t)
    # Download the chapters on a pool of 4 worker threads; map keeps chapter order
    pool = ThreadPool(4)
    pool.map(lambda t: t.run(), ts)
    pool.close()
    pool.join()
    results = [t.result() for t in ts]
    contents = ''.join(results)
    with open(os.path.join(path3, Titles[i] + ".txt"), 'wb') as f:
        f.write(contents.encode('utf-8'))
    print("{} finished crawling".format(Titles[i]))
    tt2 = time.time()
    print("{}--{}".format(Titles[i], tt2 - tt1))

def Get_Data(Titles, Authors, Images, CCs, ZJs):
    pool = Pool()
    for i, _ in enumerate(Titles):
        pool.apply_async(Pq, args=(Titles, Authors, Images, CCs, ZJs, i))
        # Pq(Titles, Authors, Images, CCs, ZJs, urls, i)
    pool.close()
    pool.join()

if __name__ == '__main__':
    # Working directory
    path_work = os.getcwd()
    # Save path
    path_save = os.path.join(path_work, "data1")
    url1 = "https://www.52bqg.com/"
    Get_content(url1)
    t1 = time.time()
    Get_url3()
    t2 = time.time()
    print("t2-t1=", t2 - t1)
    Titles, Authors, Images, CCs, ZJs = Get_contens()
    Get_Data(Titles, Authors, Images, CCs, ZJs)
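
The script wraps each download in a Thread subclass. A thread only runs concurrently once it is started with start() and waited on with join(); calling run() directly executes the work in the calling thread. The sketch below illustrates that pattern under stated assumptions: `fake_fetch` stands in for requests.get, and `FetchThread`, `fetch_all`, and the page names are hypothetical, not part of the script.

```python
import time
from threading import Thread


def fake_fetch(url):
    """Stand-in for a network request (hypothetical); sleeps, then returns text."""
    time.sleep(0.1)
    return "content of " + url


class FetchThread(Thread):
    """Hypothetical Thread subclass that stores what it fetched."""

    def __init__(self, url):
        super().__init__()  # required, otherwise start() raises RuntimeError
        self.url = url
        self.content = None

    def run(self):
        self.content = fake_fetch(self.url)


def fetch_all(urls):
    threads = [FetchThread(u) for u in urls]
    for t in threads:
        t.start()  # begin every download without waiting
    for t in threads:
        t.join()  # wait until each download has finished
    # Results come back in the order of the input URLs
    return [t.content for t in threads]


urls = ["page-{}".format(n) for n in range(5)]
pages = fetch_all(urls)
print(pages)
```

Starting one thread per URL is fine for a handful of pages; for a book with many chapters, a bounded pool such as multiprocessing.dummy.Pool limits how many requests hit the site at once.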