SSD: Single Shot MultiBox Detector: A Detailed Paper Walkthrough

Title: SSD: Single Shot MultiBox Detector

Abstract

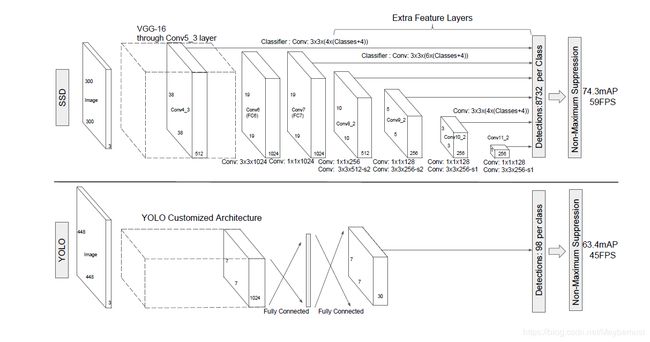

We present a method for detecting objects in images using a single deep neural network. Our approach, named SSD, discretizes the output space of bounding boxes into a set of default boxes over different aspect ratios and scales per feature map location. At prediction time, the network generates scores for the presence of each object category in each default box and produces adjustments to the box to better match the object shape. Additionally, the network combines predictions from multiple feature maps with different resolutions to naturally handle objects of various sizes. SSD is simple relative to methods that require object proposals because it completely eliminates proposal generation and subsequent pixel or feature resampling stages and encapsulates all computation in a single network. This makes SSD easy to train and straightforward to integrate into systems that require a detection component. Experimental results on the PASCAL VOC, COCO, and ILSVRC datasets confirm that SSD has competitive accuracy to methods that utilize an additional object proposal step and is much faster, while providing a unified framework for both training and inference. For 300 × 300 input, SSD achieves 74.3% mAP on VOC2007 test at 59 FPS on a Nvidia Titan X and for 512 × 512 input, SSD achieves 76.9% mAP, outperforming a comparable state-of-the-art Faster R-CNN model. Compared to other single stage methods, SSD has much better accuracy even with a smaller input image size. Code is available at: https://github.com/weiliu89/caffe/tree/ssd .

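The authors propose SSD, an object detector built from a single deep neural network. At prediction time the model outputs a score for each object category in every default box and also adjusts the box to better fit the object's shape. To handle objects of different sizes, it combines predictions from multiple feature maps of different resolutions. Compared with methods that rely on object proposals (such as the R-CNN family), SSD is simpler for much the same reason as YOLO: all computation lives in a single network, which removes proposal generation and the pixel or feature resampling stages that follow it.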

1 Introduction

Current state-of-the-art object detection systems are variants of the following approach: hypothesize bounding boxes, resample pixels or features for each box, and apply a high-quality classifier. This pipeline has prevailed on detection benchmarks since the Selective Search work [1] through the current leading results on PASCAL VOC, COCO, and ILSVRC detection all based on Faster R-CNN[2] albeit with deeper features such as [3]. While accurate, these approaches have been too computationally intensive for embedded systems and, even with high-end hardware, too slow for real-time applications. Often detection speed for these approaches is measured in seconds per frame (SPF), and even the fastest high-accuracy detector, Faster R-CNN, operates at only 7 frames per second (FPS). There have been many attempts to build faster detectors by attacking each stage of the detection pipeline (see related work in Sec. 4), but so far, significantly increased speed comes only at the cost of significantly decreased detection accuracy.

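Ever since Selective Search, and through today's leading Faster R-CNN based systems, the dominant pipeline has been: (1) hypothesize candidate bounding boxes; (2) resample the pixels or features for each box; (3) apply a high-quality classifier. This approach is accurate but slow (even Faster R-CNN runs at only 7 FPS), and attempts to speed up individual stages of the pipeline have so far come at a significant cost in accuracy.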

This paper presents the first deep network based object detector that does not resample pixels or features for bounding box hypotheses and is as accurate as approaches that do. This results in a significant improvement in speed for high-accuracy detection (59 FPS with mAP 74.3% on VOC2007 test, vs. Faster R-CNN 7 FPS with mAP 73.2% or YOLO 45 FPS with mAP 63.4%). The fundamental improvement in speed comes from eliminating bounding box proposals and the subsequent pixel or feature resampling stage. We are not the first to do this (cf [4,5]), but by adding a series of improvements, we manage to increase the accuracy significantly over previous attempts.

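The speedup comes from dropping bounding box proposals (the first stage, which selects candidate boxes) together with the resampling stage that follows. To make up for the accuracy this would otherwise cost, the authors add a series of improvements.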

Our improvements include using a small convolutional filter to predict object categories and offsets in bounding box locations, using separate predictors (filters) for different aspect ratio detections, and applying these filters to multiple feature maps from the later stages of a network in order to perform detection at multiple scales. With these modifications, especially using multiple layers for prediction at different scales, we can achieve high-accuracy using relatively low resolution input, further increasing detection speed. While these contributions may seem small independently, we note that the resulting system improves accuracy on real-time detection for PASCAL VOC from 63.4% mAP for YOLO to 74.3% mAP for our SSD. This is a larger relative improvement in detection accuracy than that from the recent, very high-profile work on residual networks [3]. Furthermore, significantly improving the speed of high-quality detection can broaden the range of settings where computer vision is useful.

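The improvements are: using small convolutional filters to predict object categories and bounding box location offsets; using separate predictors (filters) for different aspect ratios; and applying these filters to multiple feature maps from the later stages of the network to perform detection at multiple scales.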

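Each change looks minor on its own, but together they raise real-time detection accuracy on PASCAL VOC from 63.4% mAP (YOLO) to 74.3% mAP.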

We summarize our contributions as follows:

– We introduce SSD, a single-shot detector for multiple categories that is faster than the previous state-of-the-art for single shot detectors (YOLO), and significantly more accurate, in fact as accurate as slower techniques that perform explicit region proposals and pooling (including Faster R-CNN).

– The core of SSD is predicting category scores and box offsets for a fixed set of default bounding boxes using small convolutional filters applied to feature maps.

– To achieve high detection accuracy we produce predictions of different scales from feature maps of different scales, and explicitly separate predictions by aspect ratio.

– These design features lead to simple end-to-end training and high accuracy, even on low resolution input images, further improving the speed vs accuracy trade-off.

– Experiments include timing and accuracy analysis on models with varying input size evaluated on PASCAL VOC, COCO, and ILSVRC and are compared to a range of recent state-of-the-art approaches.

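The authors summarize their contributions:

1) SSD, a single-shot detector that is both faster and more accurate than YOLO, and as accurate as slower proposal-based methods;

2) Small convolutional kernels (filters) applied to feature maps predict category scores and box offsets for a fixed set of default bounding boxes;

3) Predictions at different scales come from feature maps of different scales, and are explicitly separated by aspect ratio;

4) The design allows end-to-end training and keeps accuracy high even on low-resolution input images, improving the speed vs accuracy trade-off;

5) Experiments on PASCAL VOC, COCO, and ILSVRC compare SSD against a range of recent state-of-the-art approaches.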

2 The Single Shot Detector (SSD)

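(a) SSD needs only an input image and the ground truth boxes that mark each object in order to train. In a convolutional fashion, the authors evaluate default boxes of several aspect ratios at every location of feature maps with different scales. For each default box, the network predicts the box offsets and the confidences for all object categories. In Fig. 1 of the paper, the default boxes matched to ground truth are positives and all the rest are negatives. The loss is a weighted sum of a localization loss, which measures the difference between the predicted box and the ground truth box, and a confidence loss.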

2.1 Model

The SSD approach is based on a feed-forward convolutional network that produces a fixed-size collection of bounding boxes and scores for the presence of object class instances in those boxes, followed by a non-maximum suppression step to produce the final detections. The early network layers are based on a standard architecture used for high quality image classification (truncated before any classification layers), which we will call the base network. We then add auxiliary structure to the network to produce detections with the following key features:

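The model is a feed-forward convolutional network. It outputs a fixed-size collection of bounding boxes together with scores for the presence of object class instances in those boxes, and a non-maximum suppression step then produces the final detections. The early layers follow a standard image classification architecture, truncated before its classification layers, and act as the feature extractor; this part is called the base network. Auxiliary structure is then added on top of it for detection.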
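The non-maximum suppression step mentioned above can be sketched as a greedy loop: keep the highest-scoring box, discard the remaining boxes that overlap it too much, and repeat. This is a minimal NumPy sketch, not the authors' implementation. The function name `nms`, the corner box format `(xmin, ymin, xmax, ymax)`, and the default `iou_threshold` value are assumptions made for illustration.

```python
import numpy as np

def nms(boxes, scores, iou_threshold=0.45):
    """Greedy non-maximum suppression.

    boxes: (N, 4) array-like of (xmin, ymin, xmax, ymax), assumed format.
    scores: (N,) array-like of confidences.
    Returns the indices of the kept boxes, highest score first.
    """
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    order = np.argsort(-scores)  # indices sorted by descending score
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        # Intersection of the kept box with every remaining box
        x0 = np.maximum(boxes[i, 0], boxes[rest, 0])
        y0 = np.maximum(boxes[i, 1], boxes[rest, 1])
        x1 = np.minimum(boxes[i, 2], boxes[rest, 2])
        y1 = np.minimum(boxes[i, 3], boxes[rest, 3])
        inter = np.clip(x1 - x0, 0, None) * np.clip(y1 - y0, 0, None)
        area_i = (boxes[i, 2] - boxes[i, 0]) * (boxes[i, 3] - boxes[i, 1])
        area_r = (boxes[rest, 2] - boxes[rest, 0]) * (boxes[rest, 3] - boxes[rest, 1])
        iou = inter / (area_i + area_r - inter)
        # Drop remaining boxes that overlap the kept box too much
        order = rest[iou <= iou_threshold]
    return keep
```

In a multi-class detector this would typically be run once per class on that class's scores.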

Multi-scale feature maps for detection We add convolutional feature layers to the end of the truncated base network. These layers decrease in size progressively and allow predictions of detections at multiple scales. The convolutional model for predicting detections is different for each feature layer (cf Overfeat[4] and YOLO[5] that operate on a single scale feature map).

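As the authors stressed earlier, multi-scale feature maps are key to detection. Convolutional feature layers are appended after the base network; they shrink progressively in size, which allows detections to be predicted at multiple scales.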

Convolutional predictors for detection Each added feature layer (or optionally an existing feature layer from the base network) can produce a fixed set of detection predictions using a set of convolutional filters. These are indicated on top of the SSD network architecture in Fig. 2. For a feature layer of size m × n with p channels, the basic element for predicting parameters of a potential detection is a 3 × 3 × p small kernel that produces either a score for a category, or a shape offset relative to the default box coordinates. At each of the m × n locations where the kernel is applied, it produces an output value. The bounding box offset output values are measured relative to a default box position relative to each feature map location (cf the architecture of YOLO[5] that uses an intermediate fully connected layer instead of a convolutional filter for this step).

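Each added feature layer produces a fixed set of predictions through a set of convolutional filters. These appear at the top of the SSD architecture in Fig. 2 of the paper. For a feature layer of size m × n with p channels, a small 3 × 3 × p kernel produces either a score for a category or a shape offset relative to the default box coordinates. The bounding box offsets are measured relative to the default box position at each feature map location.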

Default boxes and aspect ratios We associate a set of default bounding boxes with each feature map cell, for multiple feature maps at the top of the network. The default boxes tile the feature map in a convolutional manner, so that the position of each box relative to its corresponding cell is fixed. At each feature map cell, we predict the offsets relative to the default box shapes in the cell, as well as the per-class scores that indicate the presence of a class instance in each of those boxes. Specifically, for each box out of k at a given location, we compute c class scores and the 4 offsets relative to the original default box shape. This results in a total of (c + 4)k filters that are applied around each location in the feature map, yielding (c + 4)kmn outputs for a m x n feature map. For an illustration of default boxes, please refer to Fig. 1. Our default boxes are similar to the anchor boxes used in Faster R-CNN [2], however we apply them to several feature maps of different resolutions. Allowing different default box shapes in several feature maps let us efficiently discretize the space of possible output box shapes.

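For several feature maps at the top of the network, a set of default bounding boxes is associated with each feature map cell. The default boxes tile the feature map in a convolutional manner, so the position of each box relative to its cell is fixed. At each cell, the network predicts offsets relative to the default box shapes and per-class scores indicating the presence of a class instance in each box. Specifically, for each of the k boxes at a given location it computes c class scores and 4 offsets relative to the original default box shape. This gives (c + 4)k filters applied around each location, and (c + 4)kmn outputs for an m × n feature map. The default boxes are similar to the anchor boxes used in Faster R-CNN, but here they are applied to several feature maps of different resolutions.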
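The counting argument above is easy to check numerically. The helper below is only an illustration: the name `ssd_head_size` and the example values (a 38 × 38 map, 4 default boxes per cell, 21 classes) are assumptions, not figures taken from the text above.

```python
def ssd_head_size(m, n, k, c):
    """Return (filters per location, total outputs) for one m x n feature map.

    k: number of default boxes per feature map cell.
    c: number of class scores predicted per box.
    """
    filters = (c + 4) * k  # c class scores plus 4 box offsets, for each of k boxes
    outputs = filters * m * n  # every filter yields one value per location
    return filters, outputs

# Hypothetical example values, chosen only to exercise the formula
filters, outputs = ssd_head_size(m=38, n=38, k=4, c=21)
print(filters, outputs)  # 100 144400
```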

2.2 Training

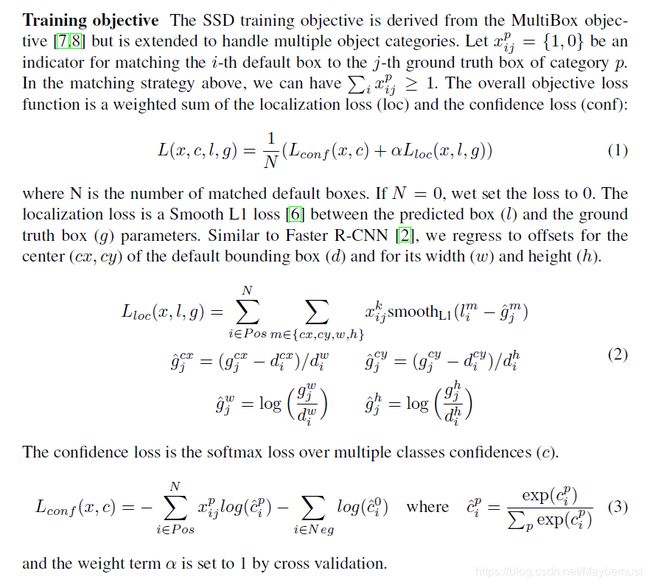

The key difference between training SSD and training a typical detector that uses region proposals, is that ground truth information needs to be assigned to specific outputs in the fixed set of detector outputs. Some version of this is also required for training in YOLO[5] and for the region proposal stage of Faster R-CNN[2] and MultiBox[7]. Once this assignment is determined, the loss function and back propagation are applied end-to-end. Training also involves choosing the set of default boxes and scales for detection as well as the hard negative mining and data augmentation strategies.

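The key difference between training SSD and training a region-proposal-based detector is that the ground truth in each training image must be assigned to specific outputs within the fixed set of detector outputs. As noted earlier, SSD's outputs are a predefined, fixed-size collection of bounding boxes.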

Matching strategy During training we need to determine which default boxes correspond to a ground truth detection and train the network accordingly. For each ground truth box we are selecting from default boxes that vary over location, aspect ratio, and scale. We begin by matching each ground truth box to the default box with the best jaccard overlap (as in MultiBox [7]). Unlike MultiBox, we then match default boxes to any ground truth with jaccard overlap higher than a threshold (0.5). This simplifies the learning problem, allowing the network to predict high scores for multiple overlapping default boxes rather than requiring it to pick only the one with maximum overlap.

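Default boxes are matched to ground truth boxes, and the candidates vary over location, aspect ratio, and scale. First, each ground truth box is matched to the default box with the highest jaccard overlap. Then any default box whose jaccard overlap with a ground truth box exceeds a threshold (0.5) is matched as well.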

Under this matching strategy, every ground truth box has at least one matching default box, and may have several.
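The two-step matching rule can be sketched in a few lines of NumPy. This is an illustration under stated assumptions rather than the authors' code: boxes are assumed to be in `(xmin, ymin, xmax, ymax)` form, the function names `jaccard` and `match` are made up for this example, and at least one ground truth box is assumed to be present.

```python
import numpy as np

def jaccard(boxes_a, boxes_b):
    """Pairwise jaccard overlap (IoU); returns shape (len(boxes_a), len(boxes_b))."""
    a = np.asarray(boxes_a, dtype=float)[:, None, :]
    b = np.asarray(boxes_b, dtype=float)[None, :, :]
    top_left = np.maximum(a[..., :2], b[..., :2])
    bottom_right = np.minimum(a[..., 2:], b[..., 2:])
    wh = np.clip(bottom_right - top_left, 0, None)
    inter = wh[..., 0] * wh[..., 1]
    area_a = (a[..., 2] - a[..., 0]) * (a[..., 3] - a[..., 1])
    area_b = (b[..., 2] - b[..., 0]) * (b[..., 3] - b[..., 1])
    return inter / (area_a + area_b - inter)

def match(gt_boxes, default_boxes, threshold=0.5):
    """For each default box, return the index of its matched ground truth box, or -1."""
    iou = jaccard(gt_boxes, default_boxes)  # (num_gt, num_default)
    # Threshold rule: a default box matches its best ground truth if the overlap is high enough
    matched = np.where(iou.max(axis=0) > threshold, iou.argmax(axis=0), -1)
    # Best-overlap rule: every ground truth box claims its single best default box.
    # Applied last so that it overrides the threshold rule.
    matched[iou.argmax(axis=1)] = np.arange(iou.shape[0])
    return matched

gt = [[0, 0, 10, 10]]
defaults = [[0, 0, 10, 10], [0, 0, 10, 8], [20, 20, 30, 30], [0, 0, 4, 4]]
print(match(gt, defaults))  # [ 0  0 -1 -1]
```

If two ground truth boxes share the same best default box, this sketch lets the later one win; a real implementation would need a tie-breaking policy.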

The overall objective loss function is a weighted sum of the localization loss (loc) and the confidence loss (conf), as shown in the formula above.
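As a rough illustration of this weighted sum, the sketch below follows the form given in the SSD paper, L = (1/N)(L_conf + α·L_loc), where N is the number of matched default boxes, L_loc is a Smooth L1 loss over the positive boxes, and L_conf is a softmax loss over class confidences. The function names, the array layout, and the convention that label 0 means background are assumptions for this example. For simplicity the confidence term here sums over all boxes, whereas training would first subsample the negatives.

```python
import numpy as np

def smooth_l1(x):
    """Elementwise Smooth L1: quadratic near zero, linear elsewhere."""
    ax = np.abs(x)
    return np.where(ax < 1.0, 0.5 * x ** 2, ax - 0.5)

def ssd_loss(loc_pred, loc_target, conf_logits, labels, alpha=1.0):
    """Weighted sum of confidence and localization loss, normalized by N.

    loc_pred, loc_target: (num_boxes, 4) predicted and target offsets.
    conf_logits: (num_boxes, num_classes) raw class scores.
    labels: (num_boxes,) integer class per box; 0 is background (assumed convention).
    """
    loc_pred = np.asarray(loc_pred, dtype=float)
    loc_target = np.asarray(loc_target, dtype=float)
    conf_logits = np.asarray(conf_logits, dtype=float)
    labels = np.asarray(labels)
    positive = labels > 0
    n = int(positive.sum())
    if n == 0:
        return 0.0  # no matched boxes: the loss is defined as 0
    # Localization loss only over positive (matched) boxes
    loc = smooth_l1(loc_pred[positive] - loc_target[positive]).sum()
    # Numerically stable log-softmax, then cross-entropy against the labels
    z = conf_logits - conf_logits.max(axis=1, keepdims=True)
    log_prob = z - np.log(np.exp(z).sum(axis=1, keepdims=True))
    conf = -log_prob[np.arange(len(labels)), labels].sum()
    return float((conf + alpha * loc) / n)
```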

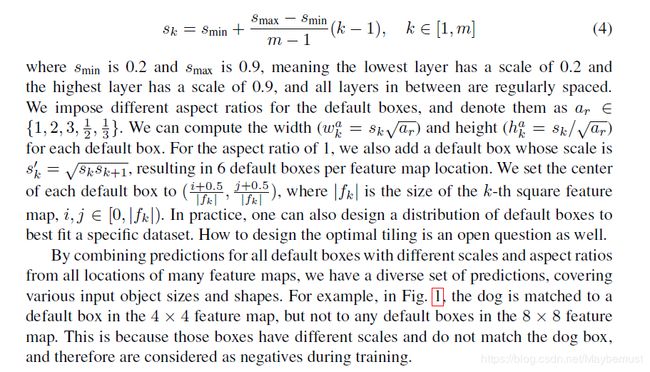

Choosing scales and aspect ratios for default boxes To handle different object scales, some methods [4,9] suggest processing the image at different sizes and combining the results afterwards. However, by utilizing feature maps from several different layers in a single network for prediction we can mimic the same effect, while also sharing parameters across all object scales. Previous works [10,11] have shown that using feature maps from the lower layers can improve semantic segmentation quality because the lower layers capture more fine details of the input objects. Similarly, [12] showed that adding global context pooled from a feature map can help smooth the segmentation results. Motivated by these methods, we use both the lower and upper feature maps for detection. Figure 1 shows two exemplar feature maps (8 × 8 and 4 × 4) which are used in the framework. In practice, we can use many more with small computational overhead.

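Feature maps from different levels within a network are known to have different (empirical) receptive field sizes [13]. Fortunately, within the SSD framework, the default boxes do not necessarily need to correspond to the actual receptive fields of each layer. We design the tiling of default boxes so that specific feature maps learn to be responsive to particular scales of the objects. Suppose we want to use m feature maps for prediction. The scale of the default boxes for each feature map is computed as:

$$s_k = s_{\min} + \frac{s_{\max} - s_{\min}}{m - 1}(k - 1), \quad k \in [1, m]$$

where $s_{\min}$ is 0.2 and $s_{\max}$ is 0.9, meaning the lowest layer has a scale of 0.2 and the highest layer has a scale of 0.9, and all layers in between are regularly spaced.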

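In most CNNs, feature maps become smaller as the layers get deeper. This not only reduces computation and memory requirements; it also gives the final feature maps a degree of translation and scale invariance.

To handle objects at different scales, some papers, such as OverFeat: Integrated Recognition, Localization and Detection using Convolutional Networks (ICLR 2014) and Spatial Pyramid Pooling in Deep Convolutional Networks for Visual Recognition (ECCV 2014), resize the image to several scales, run each scaled image through the CNN independently, and then combine the results.

However, using feature maps from different layers of one network achieves the same effect while sharing parameters across all object scales.

Earlier work, such as Fully Convolutional Networks for Semantic Segmentation (CVPR 2015) and Hypercolumns for Object Segmentation and Fine-grained Localization (CVPR 2015), used the earlier layers of a CNN to improve segmentation quality, because lower layers retain more fine detail of the image. ParseNet: Looking Wider to See Better (ICLR 2016) similarly showed that adding global context pooled from a feature map helps smooth the segmentation results.

This paper therefore uses both lower and upper feature maps to predict detections. Different layers of a CNN generally have receptive fields of different sizes. The receptive field here is the region of the input image that corresponds to a single node of the output feature map.

Fortunately, in SSD the default boxes do not need to correspond to the actual receptive field of each layer. The tiling of default boxes is designed so that specific locations in a feature map are responsible for specific regions of the image, and specific feature maps for particular object scales.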
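To make the assignment of scales to feature maps concrete, here is a small sketch of the rule given in the SSD paper: scales are spaced linearly between s_min = 0.2 and s_max = 0.9 across the m feature maps, and a default box with aspect ratio a has width s·√a and height s/√a. The function names are hypothetical, and the choice of m = 6 in the example is an assumption for illustration.

```python
import math

def default_box_scales(m, s_min=0.2, s_max=0.9):
    """Linearly spaced scales s_1..s_m, one per feature map (requires m >= 2)."""
    if m < 2:
        raise ValueError("need at least two feature maps")
    return [s_min + (s_max - s_min) * (k - 1) / (m - 1) for k in range(1, m + 1)]

def box_shape(scale, aspect_ratio):
    """Width and height of a default box, as fractions of the image size."""
    root = math.sqrt(aspect_ratio)
    return scale * root, scale / root

# Hypothetical example: six feature maps
scales = default_box_scales(6)
print([round(s, 2) for s in scales])  # [0.2, 0.34, 0.48, 0.62, 0.76, 0.9]
```

Note that `box_shape` preserves area: width times height equals the squared scale for every aspect ratio, so the aspect ratio changes only the shape of the box, not its size.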

Hard negative mining After the matching step, most of the default boxes are negatives, especially when the number of possible default boxes is large. This introduces a significant imbalance between the positive and negative training examples. Instead of using all the negative examples, we sort them using the highest confidence loss for each default box and pick the top ones so that the ratio between the negatives and positives is at most 3:1. We found that this leads to faster optimization and a more stable training.

After the matching step, some default boxes correspond to ground truth boxes, but far more do not: negative boxes greatly outnumber positive ones. This imbalance between negatives and positives makes training hard to converge.

The paper therefore sorts the negative default boxes by confidence loss, highest first, and keeps only the top ones so that the ratio of negatives to positives is at most 3:1.
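The selection of hard negatives can be sketched as follows. This is a minimal NumPy illustration, not the authors' code; the function name `hard_negative_mask` and the per-box `conf_loss` input are assumptions for this example.

```python
import numpy as np

def hard_negative_mask(conf_loss, is_positive, neg_pos_ratio=3):
    """Boolean mask of the negatives kept for training.

    conf_loss: (num_boxes,) confidence loss of each default box.
    is_positive: (num_boxes,) True where the box matched a ground truth box.
    Keeps the highest-loss negatives, at most neg_pos_ratio per positive.
    """
    conf_loss = np.asarray(conf_loss, dtype=float)
    is_positive = np.asarray(is_positive, dtype=bool)
    num_neg = min(neg_pos_ratio * int(is_positive.sum()), int((~is_positive).sum()))
    # Give positives a loss of -inf so they sort last and are never picked
    neg_loss = np.where(is_positive, -np.inf, conf_loss)
    order = np.argsort(-neg_loss)  # descending confidence loss
    keep = np.zeros(is_positive.shape, dtype=bool)
    keep[order[:num_neg]] = True
    return keep

loss = [0.1, 2.0, 0.5, 0.05, 1.5, 0.3, 0.9]
positive = [True, False, False, False, False, False, False]
print(hard_negative_mask(loss, positive))
# [False  True False False  True False  True]
```

The confidence loss would then be summed over the positives plus the negatives selected by this mask.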

Data augmentation To make the model more robust to various input object sizes and shapes, each training image is randomly sampled by one of the following options:

– Use the entire original input image.

– Sample a patch so that the minimum jaccard overlap with the objects is 0.1, 0.3, 0.5, 0.7, or 0.9.

– Randomly sample a patch.

The size of each sampled patch is [0.1, 1] of the original image size, and the aspect ratio is between 1/2 and 2. We keep the overlapped part of the ground truth box if the center of it is in the sampled patch. After the aforementioned sampling step, each sampled patch is resized to fixed size and is horizontally flipped with probability of 0.5, in addition to applying some photo-metric distortions similar to those described in [14].

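The paper also applies data augmentation to the training data.

Each training image is randomly sampled using one of the following options:

- Use the entire original image

- Sample a patch so that the minimum jaccard overlap with the objects is 0.1, 0.3, 0.5, 0.7, or 0.9

- Randomly sample a patch

Each sampled patch is [0.1, 1] of the original image size, with an aspect ratio between 0.5 and 2.

When the center of a ground truth box lies inside the sampled patch, the overlapping part of the box is kept.

After these sampling steps, each sampled patch is resized to a fixed size and horizontally flipped with probability 0.5.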
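The patch geometry and the center rule described above can be sketched as below. This covers only the random-patch option and the box filtering, and it rests on several assumptions: coordinates are normalized to [0, 1], the "size" of a patch is read as a scale factor applied to both sides before the aspect ratio is applied, and the names `sample_patch` and `crop_boxes` are made up for this example. The minimum-overlap options would add a rejection test on top of this.

```python
import random

def sample_patch(rng, max_tries=50):
    """Sample a patch (xmin, ymin, xmax, ymax) in normalized image coordinates."""
    for _ in range(max_tries):
        scale = rng.uniform(0.1, 1.0)  # patch size relative to the image
        ratio = rng.uniform(0.5, 2.0)  # aspect ratio, width over height
        w = scale * ratio ** 0.5
        h = scale / ratio ** 0.5
        if w <= 1.0 and h <= 1.0:  # the patch must fit inside the image
            x = rng.uniform(0.0, 1.0 - w)
            y = rng.uniform(0.0, 1.0 - h)
            return (x, y, x + w, y + h)
    return (0.0, 0.0, 1.0, 1.0)  # fall back to the entire image

def crop_boxes(boxes, patch):
    """Keep boxes whose center lies in the patch, clipped to the patch."""
    px0, py0, px1, py1 = patch
    kept = []
    for x0, y0, x1, y1 in boxes:
        cx, cy = (x0 + x1) / 2, (y0 + y1) / 2
        if px0 <= cx <= px1 and py0 <= cy <= py1:
            kept.append((max(x0, px0), max(y0, py0), min(x1, px1), min(y1, py1)))
    return kept

rng = random.Random(0)  # seeded only to make the example repeatable
patch = sample_patch(rng)
boxes = crop_boxes([(0.1, 0.1, 0.5, 0.5), (0.6, 0.6, 0.9, 0.9)], patch)
```

After cropping, the kept boxes would still need to be re-expressed relative to the patch before the resize and the random horizontal flip.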

3 Experimental Results

4 Related Work

5 Conclusions

6 Acknowledgment

References

…