Paddle: Recognizing MNIST with VGG16

Data processing

## Data generator

# Load the required libraries
import os
import random
import paddle
import paddle.fluid as fluid
from paddle.fluid.dygraph.nn import Conv2D, Pool2D, Linear
import numpy as np
from PIL import Image
import gzip
import json

# Define the dataset reader
def load_data(mode='train'):
    # Read the data file
    datafile = './work/mnist.json.gz'
    print('loading mnist dataset from {} ......'.format(datafile))
    data = json.load(gzip.open(datafile))
    # Split the data into the training, validation and test sets
    train_set, val_set, eval_set = data

    # Dataset parameters: image height IMG_ROWS and image width IMG_COLS
    IMG_ROWS = 28
    IMG_COLS = 28
    # Use the training, validation or test set depending on the mode argument
    if mode == 'train':
        imgs = train_set[0]
        labels = train_set[1]
    elif mode == 'valid':
        imgs = val_set[0]
        labels = val_set[1]
    elif mode == 'eval':
        imgs = eval_set[0]
        labels = eval_set[1]
    else:
        raise ValueError("mode must be 'train', 'valid' or 'eval', got {}".format(mode))

    # Total number of images
    imgs_length = len(imgs)
    # Check that the number of images matches the number of labels
    assert len(imgs) == len(labels), \
        "length of imgs({}) should be the same as labels({})".format(
            len(imgs), len(labels))
    index_list = list(range(imgs_length))

    # Define the data generator
    def data_generator():
        # In training mode, shuffle the training data
        if mode == 'train':
            random.shuffle(index_list)
        imgs_list = []
        labels_list = []
        # Read the data by index
        for i in index_list:
            # Read the image and label, then convert their shape and dtype
            img = np.reshape(imgs[i], [1, IMG_ROWS, IMG_COLS]).astype('float32')
            label = np.reshape(labels[i], [1]).astype('int64')
            imgs_list.append(img)
            labels_list.append(label)
            # Once the buffer holds a full batch, yield it.
            # BATCHSIZE is a global defined in the training section; it is
            # only looked up when the generator actually runs.
            if len(imgs_list) == BATCHSIZE:
                yield np.array(imgs_list), np.array(labels_list)
                # Clear the buffers
                imgs_list = []
                labels_list = []

        # If fewer than BATCHSIZE samples remain,
        # they form a final mini-batch of size len(imgs_list)
        if len(imgs_list) > 0:
            yield np.array(imgs_list), np.array(labels_list)

    return data_generator
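The batching logic of the reader can be tried without the MNIST file. The sketch below is a minimal stand-alone variant, not the reader above: `make_batch_generator`, `fake_images` and `fake_labels` are hypothetical names, the data is synthetic, and the batch size is passed as an argument instead of being read from a global.

```python
import random
import numpy as np

def make_batch_generator(images, labels, batch_size, shuffle=True, seed=None):
    """Return a generator function that yields (images, labels) mini-batches."""
    assert len(images) == len(labels)
    indices = list(range(len(images)))
    rng = random.Random(seed)

    def generator():
        # Reshuffle on every pass, as the training reader does
        if shuffle:
            rng.shuffle(indices)
        for start in range(0, len(indices), batch_size):
            chunk = indices[start:start + batch_size]
            # Each image becomes [1, 28, 28] float32, each label [1] int64
            batch_imgs = np.array(
                [np.reshape(images[i], [1, 28, 28]) for i in chunk], dtype='float32')
            batch_labels = np.array([[labels[i]] for i in chunk], dtype='int64')
            yield batch_imgs, batch_labels

    return generator

# Synthetic stand-in for the dataset: 10 flat images of 784 pixels
fake_images = [[0.0] * 784 for _ in range(10)]
fake_labels = [i % 10 for i in range(10)]

loader = make_batch_generator(fake_images, fake_labels, batch_size=4, seed=0)
batch_shapes = [(x.shape, y.shape) for x, y in loader()]
print(batch_shapes)
```

With 10 samples and a batch size of 4, the last batch holds the 2 leftover samples, which mirrors the final `yield` in `data_generator`.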

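Building the VGG model

Because VGG16 is very deep and MNIST images are only 28x28, the convolutional part of the original VGG16 is trimmed here to reduce the risk of overfitting: only the first three blocks are kept (2 layers with 64 channels, 2 with 128, and 3 with 256), followed by a 100-unit fully connected layer and a 10-class softmax output.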
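The effect of the pooling layers on a 28x28 input can be checked with a little arithmetic. The sketch below assumes 3x3 convolutions with padding 1 (which keep the spatial size) and 2x2 max pooling with stride 2 and floor rounding; `pooled_size` is a hypothetical helper, not part of Paddle.

```python
def pooled_size(size, num_pools, pool_size=2, stride=2):
    """Spatial size after num_pools pooling layers (floor rounding, no padding)."""
    for _ in range(num_pools):
        size = (size - pool_size) // stride + 1
    return size

# Spatial size after 0..5 pooling layers, starting from 28
sizes = [pooled_size(28, n) for n in range(6)]
print(sizes)

# After three blocks the feature map has 256 channels
flat_features = 256 * pooled_size(28, 3) ** 2
print(flat_features)
```

Under these assumptions three blocks leave a 3x3 map, which gives the 256*3*3 input size of the first fully connected layer, while the five pooling layers of the full VGG16 would leave nothing to pool.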

# VGG model code
import numpy as np
import paddle
import paddle.fluid as fluid
from paddle.fluid.layer_helper import LayerHelper
from paddle.fluid.dygraph.nn import Conv2D, Pool2D, BatchNorm, Linear
from paddle.fluid.dygraph.base import to_variable

# Define a VGG block: several convolution layers followed by one 2x2 max-pooling layer
class vgg_block(fluid.dygraph.Layer):
    def __init__(self, num_convs, in_channels, out_channels):
        """
        num_convs: number of convolution layers in the block
        in_channels: number of input channels of the first convolution layer
        out_channels: number of output channels; every convolution layer
            in the same VGG block uses the same value
        """
        super(vgg_block, self).__init__()
        self.conv_list = []
        for i in range(num_convs):
            conv_layer = self.add_sublayer('conv_' + str(i), Conv2D(num_channels=in_channels,
                num_filters=out_channels, filter_size=3, padding=1, act='relu'))
            self.conv_list.append(conv_layer)
            # Later layers in the block take out_channels as their input
            in_channels = out_channels
        self.pool = Pool2D(pool_stride=2, pool_size=2, pool_type='max')

    def forward(self, x):
        for item in self.conv_list:
            x = item(x)
        return self.pool(x)

class VGG(fluid.dygraph.Layer):
    def __init__(self, conv_arch=((2, 64),
                                  (2, 128), (3, 256))):
        super(VGG, self).__init__()
        self.vgg_blocks = []
        iter_id = 0
        # Add the vgg_blocks.
        # There are 3 blocks here; the number of convolution layers and the
        # output channels of each block are given by conv_arch
        in_channels = [1, 64, 128]
        for (num_convs, num_channels) in conv_arch:
            block = self.add_sublayer('block_' + str(iter_id),
                vgg_block(num_convs, in_channels=in_channels[iter_id],
                          out_channels=num_channels))
            self.vgg_blocks.append(block)
            iter_id += 1
        # After three 2x2 poolings a 28x28 input becomes 3x3
        self.fc1 = Linear(input_dim=256*3*3, output_dim=100,
                          act='relu')
        self.drop1_ratio = 0.5
        self.fc3 = Linear(input_dim=100, output_dim=10, act='softmax')

    def forward(self, x, label=None):
        for item in self.vgg_blocks:
            x = item(x)
        # Flatten to [batch_size, 256*3*3]
        x = fluid.layers.reshape(x, [x.shape[0], -1])
        # print(x.shape)
        x = fluid.layers.dropout(self.fc1(x), self.drop1_ratio)
        # print(x.shape)
        x = self.fc3(x)
        # When labels are given, also return the batch accuracy
        if label is not None:
            acc = fluid.layers.accuracy(input=x, label=label)
            return x, acc
        else:
            return x
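To get a feel for how much smaller the trimmed network is, its trainable parameters can be counted by hand. This is a rough sketch that assumes every convolution and fully connected layer carries a bias term; `conv_params` and `linear_params` are hypothetical helpers, and the result is not read from Paddle itself.

```python
def conv_params(in_channels, out_channels, kernel_size=3):
    """Weights plus biases of one square convolution layer."""
    return in_channels * out_channels * kernel_size * kernel_size + out_channels

def linear_params(input_dim, output_dim):
    """Weights plus biases of one fully connected layer."""
    return input_dim * output_dim + output_dim

conv_arch = ((2, 64), (2, 128), (3, 256))

total_conv = 0
in_channels = 1  # MNIST images have a single channel
for num_convs, out_channels in conv_arch:
    for _ in range(num_convs):
        total_conv += conv_params(in_channels, out_channels)
        in_channels = out_channels

total_fc = linear_params(256 * 3 * 3, 100) + linear_params(100, 10)
total_params = total_conv + total_fc
print(total_conv, total_fc, total_params)
```

Under these assumptions the model has just under 2 million parameters, most of them in the convolution layers.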

Training the model

## Train the model on a GPU
# Call the data-loading function
train_loader = load_data('train')

# On a machine with a GPU, set use_gpu to True
use_gpu = True
place = fluid.CUDAPlace(0) if use_gpu else fluid.CPUPlace()

BATCHSIZE = 100
EPOCH_NUM = 10

with fluid.dygraph.guard(place):
    model = VGG()
    model.train()

    ## Optional: a dynamic learning rate that decays from 0.01 to 0.001 over the training steps
    ## (disabled here; the optimizer below uses a constant learning rate of 0.01)
    # Number of decay steps (the code assumes 60000 training images)
    total_steps = (int(60000//BATCHSIZE) + 1) * EPOCH_NUM
    ## Polynomial decay of the learning rate from 0.01 to 0.001
    #lr = fluid.dygraph.PolynomialDecay(0.01, total_steps, 0.001)

    # Four optimizer settings; try them one by one and compare
    # optimizer = fluid.optimizer.SGDOptimizer(learning_rate=0.01, parameter_list=model.parameters())
    # optimizer = fluid.optimizer.MomentumOptimizer(learning_rate=0.01, momentum=0.9, parameter_list=model.parameters())
    # optimizer = fluid.optimizer.AdagradOptimizer(learning_rate=0.01, parameter_list=model.parameters())
    # optimizer = fluid.optimizer.AdamOptimizer(learning_rate=0.01, parameter_list=model.parameters())

    # A regularization term can be added to the optimizer to reduce overfitting;
    # regularization_coeff sets the weight of the regularization term
    # optimizer = fluid.optimizer.SGDOptimizer(learning_rate=0.01, regularization=fluid.regularizer.L2Decay(regularization_coeff=0.1), parameter_list=model.parameters())
    optimizer = fluid.optimizer.AdamOptimizer(learning_rate=0.01, parameter_list=model.parameters())

    iter = 0
    iters = []
    losses = []
    for epoch_id in range(EPOCH_NUM):
        for batch_id, data in enumerate(train_loader()):
            # Prepare the data
            image_data, label_data = data
            image = fluid.dygraph.to_variable(image_data)
            label = fluid.dygraph.to_variable(label_data)

            # Forward pass: get the model output and the classification accuracy
            # Labels are passed in directly; no one-hot encoding is needed
            predict, acc = model(image, label)
            avg_acc = fluid.layers.mean(acc)

            # Compute the loss, averaged over the samples in the batch
            loss = fluid.layers.cross_entropy(predict, label)
            avg_loss = fluid.layers.mean(loss)

            # Every 200 batches, print the current loss
            if batch_id % 200 == 0:
                print("epoch: {}, batch: {}, loss is: {}, acc is {}".format(epoch_id, batch_id, avg_loss.numpy(), avg_acc.numpy()))
                iters.append(iter)
                losses.append(avg_loss.numpy())
                # One point is logged every 200 batches, so advance the x-axis by 200
                iter = iter + 200

            # Backward pass and parameter update
            avg_loss.backward()
            optimizer.minimize(avg_loss)
            model.clear_gradients()

        # Save the model parameters and the optimizer state after each epoch
        fluid.save_dygraph(model.state_dict(), './checkpoint/mnist_epoch{}'.format(epoch_id))
        fluid.save_dygraph(optimizer.state_dict(), './checkpoint/mnist_epoch{}'.format(epoch_id))

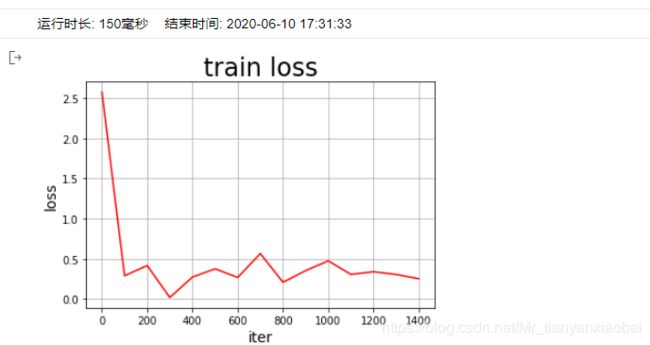
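The training script leaves a polynomial learning-rate decay from 0.01 to 0.001 commented out. The sketch below shows what such a schedule computes, assuming the usual non-cycling formula with power 1.0 (a straight line); `polynomial_decay` is a hypothetical helper, and the exact behaviour of `PolynomialDecay` should be checked against the installed Paddle version.

```python
def polynomial_decay(step, base_lr=0.01, end_lr=0.001, decay_steps=6010, power=1.0):
    """Learning rate at a given step; held at end_lr once decay_steps is reached."""
    step = min(step, decay_steps)
    return (base_lr - end_lr) * (1 - step / decay_steps) ** power + end_lr

# decay_steps matches total_steps in the script: (60000 // 100 + 1) * 10
decay_steps = (60000 // 100 + 1) * 10
for step in (0, decay_steps // 2, decay_steps, decay_steps + 500):
    print(step, polynomial_decay(step, decay_steps=decay_steps))
```

With power 1.0 the rate falls linearly, so halfway through training it sits midway between the start and end values.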

Visualization

import matplotlib.pyplot as plt
%matplotlib inline

### Visualization
# Plot the loss curve recorded during training
plt.figure()
plt.title("train loss", fontsize=24)
plt.xlabel("iter", fontsize=14)
plt.ylabel("loss", fontsize=14)
plt.plot(iters, losses, color='red', label='train loss')
plt.grid()
plt.show()

Testing the model

Evaluate the trained model on the test set

with fluid.dygraph.guard(place):
    print('start evaluation .......')
    # Load the model parameters saved after epoch 4
    model = VGG()
    model_state_dict, _ = fluid.load_dygraph('checkpoint/mnist_epoch4')
    model.load_dict(model_state_dict)

    model.eval()
    eval_loader = load_data('eval')

    acc_set = []
    avg_loss_set = []
    for batch_id, data in enumerate(eval_loader()):
        x_data, y_data = data
        img = fluid.dygraph.to_variable(x_data)
        label = fluid.dygraph.to_variable(y_data)
        prediction, acc = model(img, label)
        loss = fluid.layers.cross_entropy(input=prediction, label=label)
        avg_loss = fluid.layers.mean(loss)
        acc_set.append(float(acc.numpy()))
        avg_loss_set.append(float(avg_loss.numpy()))

    # Average the loss and accuracy over all batches
    acc_val_mean = np.array(acc_set).mean()
    avg_loss_val_mean = np.array(avg_loss_set).mean()

    print('loss={}, acc={}'.format(avg_loss_val_mean, acc_val_mean))
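The evaluation loop averages the per-batch accuracy and loss directly. That is exact when every batch has the same size; if the last batch is smaller, a size-weighted mean is the safer choice. A minimal sketch with made-up numbers (`weighted_mean`, `batch_acc` and `batch_sizes` are hypothetical, not outputs of the model above):

```python
import numpy as np

def weighted_mean(values, batch_sizes):
    """Mean of per-batch values, weighted by the number of samples in each batch."""
    return float(np.average(values, weights=batch_sizes))

# Made-up per-batch accuracies; the last batch is smaller than the others
batch_acc = [0.9, 0.8, 0.5]
batch_sizes = [100, 100, 20]

plain = float(np.mean(batch_acc))
weighted = weighted_mean(batch_acc, batch_sizes)
print(plain, weighted)
```

The plain mean lets the small last batch count as much as a full one, while the weighted mean equals the accuracy over all 220 samples.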