Big Data Storage and Processing

Environment

Virtual machines: two CentOS 7 hosts

VMware 11.0

jdk-8u45-linux-x64

hadoop-2.7.0

*I. Installing and running Hadoop in standalone mode*

Required environment:

Two CentOS 7.0 64-bit virtual machines

hadoop-2.7.0

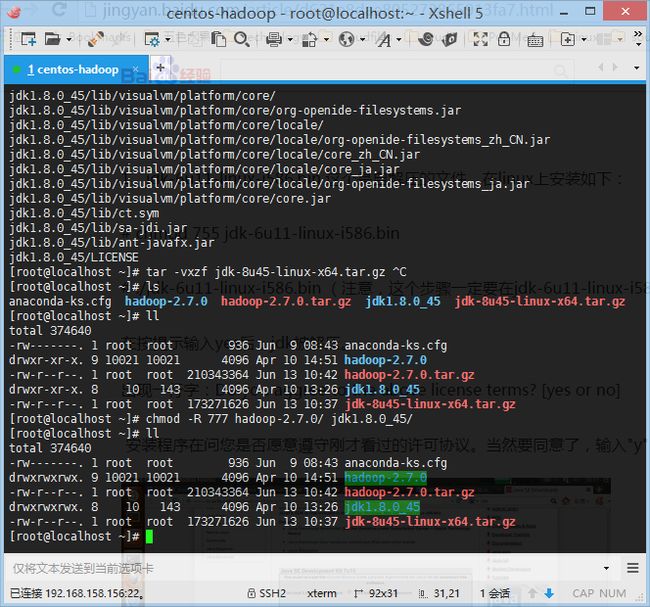

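jdk-8u45-linux-x64

Extract both archives and change their permissions with the following commands:

tar -vxzf hadoop-2.7.0.tar.gz

tar -vxzf jdk-8u45-linux-x64.tar.gz

chmod -R 777 hadoop-2.7.0/ jdk1.8.0_45/

Configure the environment variables

The JDK and Hadoop archives have been extracted under /root/. Edit the system environment variables: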

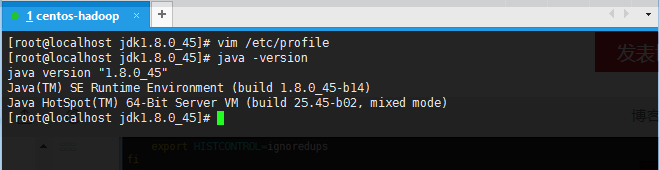

vim /etc/profile

Append the following at the end of the file:

export JAVA_HOME=/root/jdk1.8.0_45

export PATH=$JAVA_HOME/bin:$PATH

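- Run the command . /etc/profile

Note: there is a space between . and / here. The leading dot tells the shell to read the file into the current session.

If the current directory is /etc/, the equivalent command is . ./profile. Running ./profile without the leading dot and space would execute the file in a child shell, so the variables would not be set in the current session.

- Run java -version to test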
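The profile edit above can be scripted so that repeated runs do not duplicate the export lines. This is a minimal sketch, not part of the original walkthrough: the helper name `append_once` and the `PROFILE_FILE` variable are hypothetical, and a scratch file stands in for /etc/profile.

```shell
#!/usr/bin/env bash
# Append a line to a profile-style file only if it is not already present.
append_once() {
    local line="$1" file="$2"
    touch "$file"
    # -x: match the whole line, -F: fixed string (no regex), -q: quiet
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# PROFILE_FILE defaults to a scratch file; pointing it at /etc/profile is an
# assumption about how this would be used on the real host.
PROFILE_FILE="${PROFILE_FILE:-$(mktemp)}"
append_once 'export JAVA_HOME=/root/jdk1.8.0_45' "$PROFILE_FILE"
append_once 'export PATH=$JAVA_HOME/bin:$PATH' "$PROFILE_FILE"

# Source the file in the current shell so the variables take effect here.
. "$PROFILE_FILE"
echo "JAVA_HOME=$JAVA_HOME"
```

Because the file is sourced rather than executed, `JAVA_HOME` is visible in the calling shell afterwards, which is the behavior the note about the space after the dot relies on.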

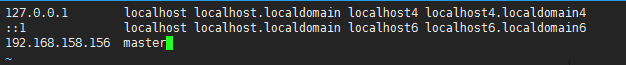

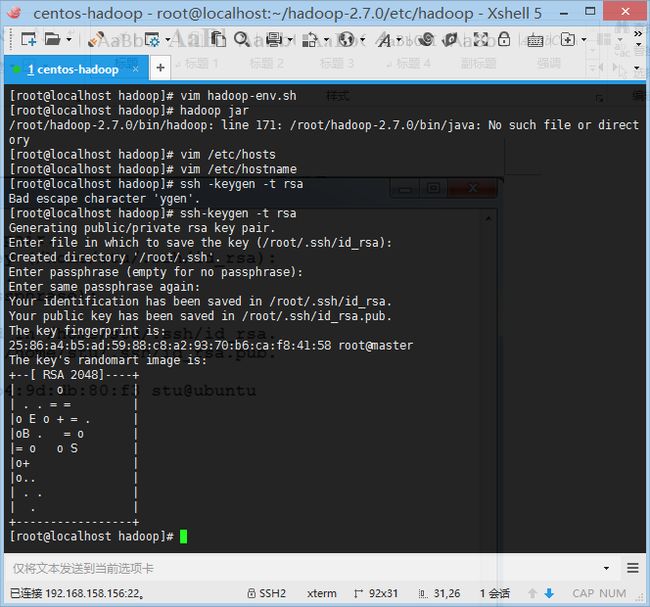

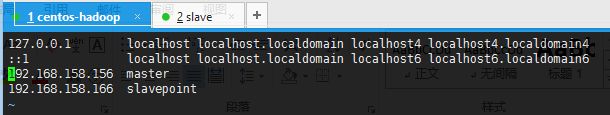

- Edit hosts: add the master IP address to the hosts file

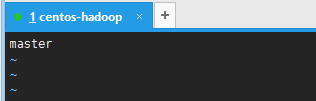

vim /etc/hosts

- Change the hostname

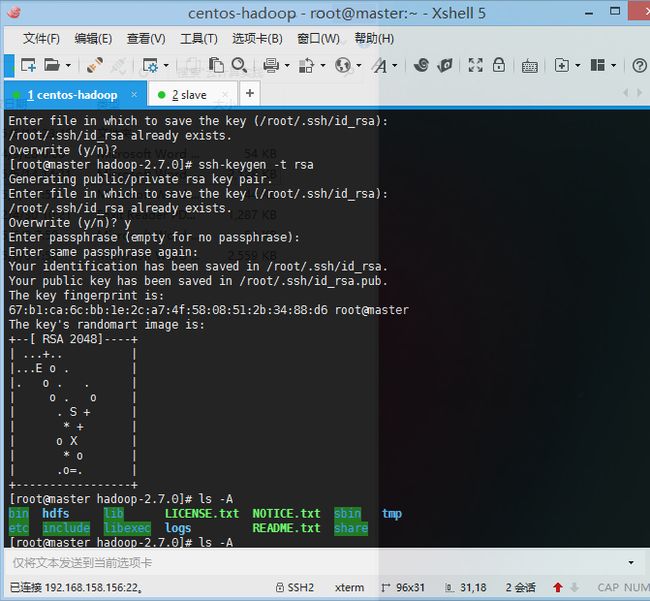

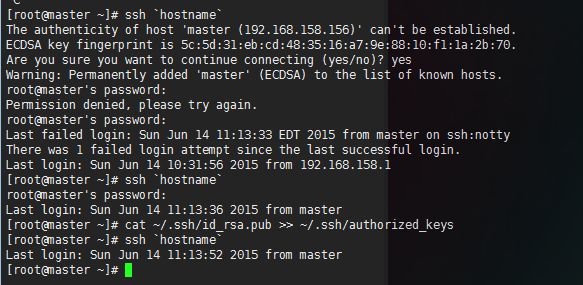

vim /etc/hostname

- Create the SSH public and private keys

ssh-keygen -t rsa

- Import the public key into the authorized keys file

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

- Add the following two lines to /etc/profile

export HADOOP_HOME=/root/hadoop-2.7.0

export PATH=$HADOOP_HOME/bin:$PATH

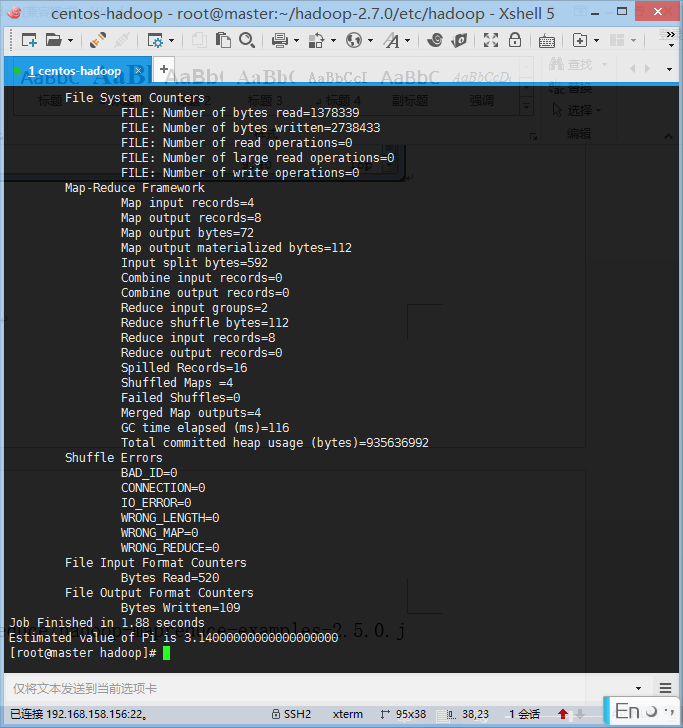

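Run the bundled pi example (4 map tasks, 1000 samples per map) to check the installation:

hadoop jar /root/hadoop-2.7.0/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.0.jar pi 4 1000

*II. Installing and running Hadoop in fully distributed mode*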

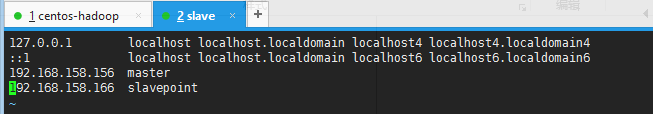

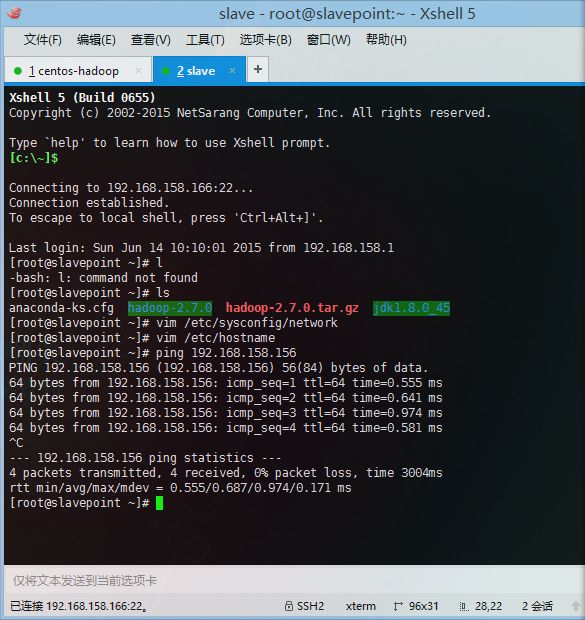

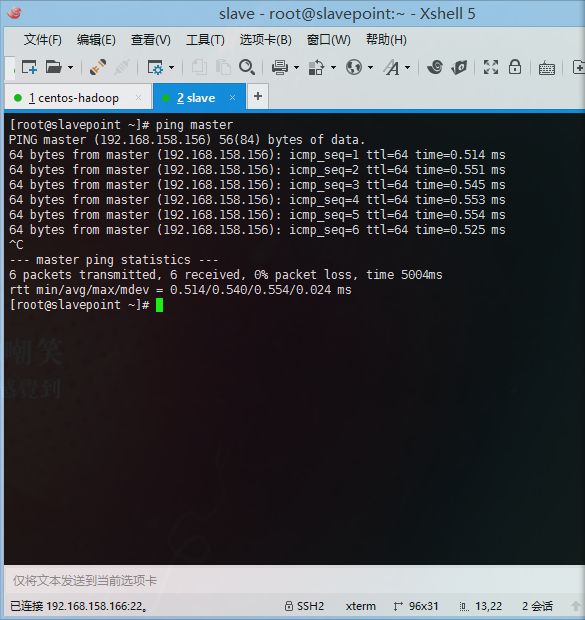

Addresses of the two virtual machines:

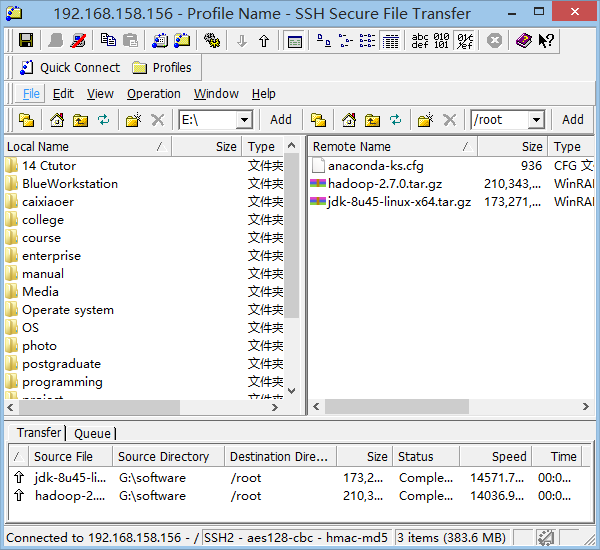

Master: 192.168.158.156

Slavepoint: 192.168.158.166

Experiment environment

Two CentOS 7.0 64-bit virtual machines

hadoop-2.7.0

jdk-8u45-linux-x64

Experiment steps

vim /etc/hostname

vim /etc/hosts

- Configure the hostname and hosts files on the master host

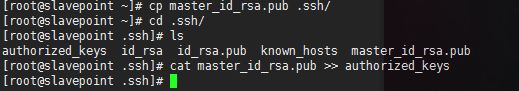

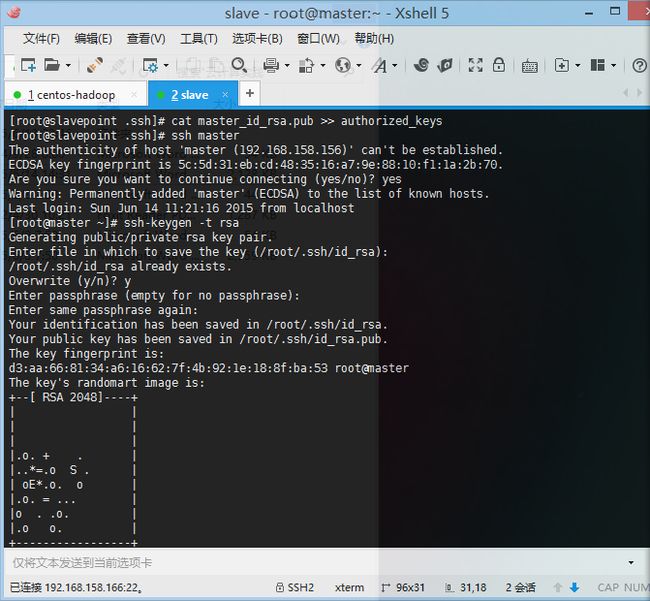
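The hosts entries for the two machines can also be added non-interactively. The following is a minimal sketch rather than the author's procedure: the helper `add_host` and the `HOSTS_FILE` variable are hypothetical, and a scratch file stands in for /etc/hosts. The IP addresses are the ones listed above.

```shell
#!/usr/bin/env bash
# Add "IP hostname" to a hosts-style file unless the hostname is already mapped.
add_host() {
    local ip="$1" name="$2" file="$3"
    touch "$file"
    # Look for the hostname as a whole field (column 2 or later) on a
    # non-comment line; awk exits 0 when it is found.
    if ! awk -v n="$name" '
            !/^[[:space:]]*#/ { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }' "$file"; then
        printf '%s %s\n' "$ip" "$name" >> "$file"
    fi
}

# Scratch file instead of /etc/hosts (assumption: swap in the real path
# only after checking the result).
HOSTS_FILE="$(mktemp)"
add_host 192.168.158.156 master "$HOSTS_FILE"
add_host 192.168.158.166 slavepoint "$HOSTS_FILE"
add_host 192.168.158.156 master "$HOSTS_FILE"   # already mapped, nothing added
cat "$HOSTS_FILE"
```

Both hosts need both entries, so the same calls would be run on master and on slavepoint.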

vim /etc/hostname

vim /etc/hosts

scp ~/.ssh/id_rsa.pub root@192.168.158.166:~/

Generate the key on the slave host

cat id_rsa.pub >> authorized_keys

Import the key into the master host

scp id_rsa.pub root@master:~/

On the master host, import the key into the authorized keys file

mv id_rsa.pub slave_id_rsa.pub

cp slave_id_rsa.pub .ssh/

cd .ssh/

cat slave_id_rsa.pub >> authorized_keys

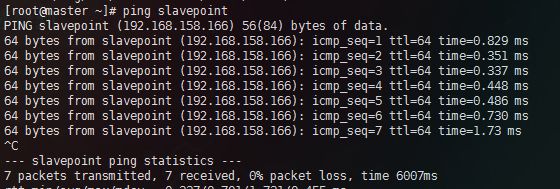

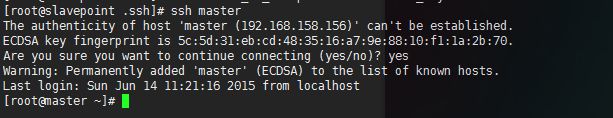

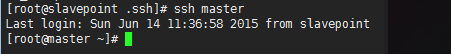

On the slavepoint host, run the command ssh master
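Importing a copied public key, as done on the master above, can be sketched as a small function. This is an illustration under stated assumptions: `authorize_key` is a hypothetical helper, the key in the demo is a placeholder string rather than a real key, and the 700/600 permissions reflect what sshd expects under its default StrictModes setting.

```shell
#!/usr/bin/env bash
# Append a public key to an authorized_keys file, skipping duplicates, and
# tighten permissions on the directory and the file.
authorize_key() {
    local pubkey_file="$1" ssh_dir="$2"
    local key
    mkdir -p "$ssh_dir"
    chmod 700 "$ssh_dir"
    touch "$ssh_dir/authorized_keys"
    chmod 600 "$ssh_dir/authorized_keys"
    key="$(cat "$pubkey_file")"
    grep -qxF -- "$key" "$ssh_dir/authorized_keys" \
        || printf '%s\n' "$key" >> "$ssh_dir/authorized_keys"
}

# Demo against a scratch directory with a placeholder key (not a real key).
demo_dir="$(mktemp -d)"
printf '%s\n' 'ssh-rsa AAAAB3NzaEXAMPLE root@slavepoint' > "$demo_dir/slave_id_rsa.pub"
authorize_key "$demo_dir/slave_id_rsa.pub" "$demo_dir/.ssh"
authorize_key "$demo_dir/slave_id_rsa.pub" "$demo_dir/.ssh"   # second call adds nothing
cat "$demo_dir/.ssh/authorized_keys"
```

On the real master host the second argument would be ~/.ssh, after which ssh master from slavepoint should no longer prompt for a password.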

- Create Hadoop's temporary working directories under the Hadoop root directory

mkdir tmp hdfs

mkdir hdfs/name hdfs/data

- Configure the NameNode by editing core-site.xml (the configuration files are in /root/hadoop-2.7.0/etc/hadoop/)

vim core-site.xml

<configuration>
  <property>
    <name>fs.default.name</name>
    <value>hdfs://master:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/root/hadoop-2.7.0/tmp</value>
  </property>
</configuration>
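Writing the file from a script avoids hand-editing mistakes such as unclosed tags. The sketch below generates the same core-site.xml content; the function names `property` and `core_site` and the variables `HADOOP_ROOT` and `NAMENODE_URI` are hypothetical, and values are written verbatim without XML escaping, so it only suits simple values like the ones used here.

```shell
#!/usr/bin/env bash
# Print one <property> element for a Hadoop *-site.xml file.
# Values are not XML-escaped; avoid &, < and > in them.
property() {
    printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Hypothetical variables holding the values used in this walkthrough.
HADOOP_ROOT=/root/hadoop-2.7.0
NAMENODE_URI=hdfs://master:9000

core_site() {
    echo '<configuration>'
    property fs.default.name "$NAMENODE_URI"
    property hadoop.tmp.dir "file:$HADOOP_ROOT/tmp"
    echo '</configuration>'
}

# Write to a scratch file; copying it over
# $HADOOP_ROOT/etc/hadoop/core-site.xml is left as a separate, deliberate step.
out="$(mktemp)"
core_site > "$out"
cat "$out"
```

The same `property` helper could be reused for hdfs-site.xml and yarn-site.xml, since all three files share the name/value structure.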

- Edit hdfs-site.xml

vim hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
  <property>
    <name>dfs.name.dir</name>
    <value>file:/root/hadoop-2.7.0/hdfs/name</value>
  </property>
  <property>
    <name>dfs.data.dir</name>
    <value>file:/root/hadoop-2.7.0/hdfs/data</value>
  </property>
  <property>
    <name>dfs.webhdfs.enabled</name>
    <value>true</value>
  </property>
</configuration>

- Edit yarn-site.xml

vim yarn-site.xml

<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>
    <value>org.apache.hadoop.mapred.ShuffleHandler</value>
  </property>
  <property>
    <name>yarn.resourcemanager.address</name>
    <value>master:8032</value>
  </property>
  <property>
    <name>yarn.resourcemanager.scheduler.address</name>
    <value>master:8030</value>
  </property>
  <property>
    <name>yarn.resourcemanager.resource-tracker.address</name>
    <value>master:8031</value>
  </property>
  <property>
    <name>yarn.resourcemanager.admin.address</name>
    <value>master:8033</value>
  </property>
  <property>
    <name>yarn.resourcemanager.webapp.address</name>
    <value>master:8088</value>
  </property>
</configuration>

- Copy the configured Hadoop and JDK directories and /etc/profile to the slavepoint host, then run . /etc/profile on slavepoint so the environment variables take effect

scp -r /root/hadoop-2.7.0 root@slavepoint:/root/

scp -r /root/jdk1.8.0_45 root@slavepoint:/root/

scp /etc/profile root@slavepoint:/etc/

. /etc/profile (run on the slavepoint host)

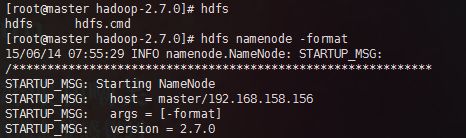

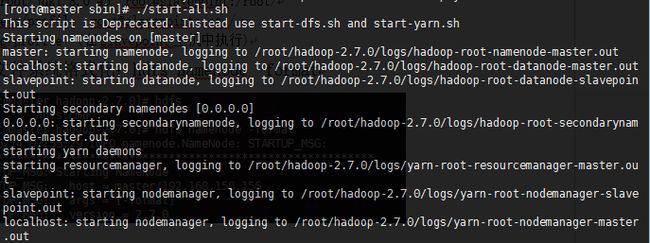

- Format the file system with hdfs namenode -format, then start Hadoop

/root/hadoop-2.7.0/sbin/start-all.sh
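After startup, the JDK's jps tool lists the running Java processes, which gives a quick way to see whether the daemons came up. The sketch below is an assumption-laden illustration: `missing_daemons` is a hypothetical helper, the sample listing is made up rather than captured from this cluster, and which daemons run on which host depends on the slaves file, which this walkthrough does not show.

```shell
#!/usr/bin/env bash
# Read jps-style output on stdin (lines of the form "<pid> <ClassName>") and
# print the expected daemon names, given as arguments, that are not listed.
missing_daemons() {
    local listing daemon missing=""
    listing="$(cat)"
    for daemon in "$@"; do
        printf '%s\n' "$listing" \
            | awk -v d="$daemon" '$2 == d { ok = 1 } END { exit !ok }' \
            || missing="$missing $daemon"
    done
    echo "${missing# }"
}

# Sample input standing in for real jps output (PIDs are made up).
sample='2481 NameNode
2675 SecondaryNameNode
2830 ResourceManager
3102 Jps'

# Daemons typically expected on the master: prints an empty line here.
printf '%s\n' "$sample" | missing_daemons NameNode SecondaryNameNode ResourceManager
# Daemons typically expected on a worker: both are reported as missing here.
printf '%s\n' "$sample" | missing_daemons DataNode NodeManager
```

On a live host the input would come from the tool itself, for example jps | missing_daemons NameNode SecondaryNameNode ResourceManager on master.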