Using the Naive Bayes Algorithm in Python


Tools: PyCharm, Windows 10, Python 3.6.4

1. Problem Statement

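Using the data below, apply the Naive Bayes algorithm to predict the categories of the two unlabelled documents (d7 and d8, marked "?").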

| Document | Content | Category |
|---|---|---|
| d1 | ball goal cart goal | Sports |
| d2 | theater cart drama | Culture |
| d3 | drama strategy decision drama | Politics |
| d4 | theater ball | Culture |
| d5 | ball goal player strategy | Sports |
| d6 | theater cart opera | Culture |
| d7 | ball player strategy | ? |
| d8 | theater cart decision | ? |
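As a quick sanity check, the table can also be written straight into Python. The sketch below (the names `TRAIN`, `TEST` and `counts` are illustrative and not part of the original code) tallies how often each word appears in each category; these per-category counts are what the training step later turns into probabilities.

```python
from collections import Counter

# The six labelled documents from the table (d1-d6)
TRAIN = [
    ("ball goal cart goal", "Sports"),
    ("theater cart drama", "Culture"),
    ("drama strategy decision drama", "Politics"),
    ("theater ball", "Culture"),
    ("ball goal player strategy", "Sports"),
    ("theater cart opera", "Culture"),
]
# The two documents to classify (d7, d8)
TEST = ["ball player strategy", "theater cart decision"]

# Word counts per category, summed over all documents of that category
counts = {}
for text, label in TRAIN:
    counts.setdefault(label, Counter()).update(text.split())

for label in sorted(counts):
    print(label, dict(counts[label]), "total =", sum(counts[label].values()))
```

Culture and Sports each contain 8 words in total and Politics only 4, which already hints that a word seen in Politics gets a relatively large conditional probability.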

2. Python Code

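There are three categories: Culture, Politics, and Sports. We create one folder per category (named `culture`, `politics`, and `sports` in the code) and save each document's Content in it as a text file, so that while walking the files each document can be labelled by the folder it came from (1 = culture, 2 = politics, 3 = sports). First, read every document and split it into words. The code and output are as follows: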

import re

import numpy as np

import os

def textParse(String):

list_String = re.split(r'\W*', String)

return list_String

def readfiles():

doc_list = []

class_list = []

file_lists = ['culture', 'politics', 'sports']

for i in range(3):

for txtfile in os.listdir(file_lists[i] + '/'):

with open(file_lists[i] + '/' + txtfile, 'r', ) as f:

word_list = textParse(f.read())

doc_list.append(list(word_list))

class_list.append(i + 1)

# vocab_list = createVocabList(doc_list)

return doc_list, class_list

if __name__ == '__main__':

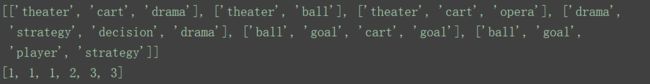

doc_list, class_list = readfiles()

print(doc_list)

print(class_list)
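The folder contents themselves are not shown above. A minimal sketch to reproduce the layout follows; it assumes one `.txt` file per document named after its ID (`d1.txt` to `d6.txt`), and the helpers `make_corpus` and `read_corpus` are hypothetical. It writes each training document into its category folder inside a temporary directory, then reads the folders back with the `\W+` tokenizer to confirm the round trip.

```python
import os
import re
import tempfile

# Hypothetical layout: one lowercase folder per category, one .txt file per document
DOCS = {
    "sports": {"d1.txt": "ball goal cart goal", "d5.txt": "ball goal player strategy"},
    "culture": {"d2.txt": "theater cart drama", "d4.txt": "theater ball",
                "d6.txt": "theater cart opera"},
    "politics": {"d3.txt": "drama strategy decision drama"},
}


def make_corpus(root):
    """Write every document to root/<category>/<file> (hypothetical helper)."""
    for category, files in DOCS.items():
        os.makedirs(os.path.join(root, category), exist_ok=True)
        for name, text in files.items():
            with open(os.path.join(root, category, name), "w") as f:
                f.write(text + "\n")  # trailing newline exercises the empty-token filter


def read_corpus(root):
    """Read folders in the order culture, politics, sports (labels 1, 2, 3)."""
    docs, labels = [], []
    for label, category in enumerate(["culture", "politics", "sports"], start=1):
        for name in sorted(os.listdir(os.path.join(root, category))):
            with open(os.path.join(root, category, name)) as f:
                docs.append([t for t in re.split(r"\W+", f.read()) if t])
            labels.append(label)
    return docs, labels


with tempfile.TemporaryDirectory() as root:
    make_corpus(root)
    docs, labels = read_corpus(root)

print(docs)
print(labels)
```

Calling `make_corpus('.')` instead would create the three folders in the working directory, which is where `readfiles()` looks for them.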

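Next, build the vocabulary from these word lists and convert each tokenized document into a word-count vector over that vocabulary. The code is as follows: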

```python
import os
import re


def textParse(String):
    # r'\W+' splits on runs of non-word characters; empty tokens are dropped
    return [tok for tok in re.split(r'\W+', String) if tok]


def readfiles():
    doc_list = []
    class_list = []
    # Labels: 1 = culture, 2 = politics, 3 = sports
    file_lists = ['culture', 'politics', 'sports']
    for i, folder in enumerate(file_lists):
        for txtfile in sorted(os.listdir(folder)):
            with open(os.path.join(folder, txtfile), 'r') as f:
                doc_list.append(textParse(f.read()))
            class_list.append(i + 1)
    return doc_list, class_list


def createVocabList(dataSet):
    # Union of all words in all documents. Sorting fixes the column order;
    # a bare list(set) can change order between runs.
    vocabSet = set()
    for document in dataSet:
        vocabSet |= set(document)
    return sorted(vocabSet)


def setWords2Vec(vocablist, inputSet):
    # Bag-of-words vector: entry j counts how often vocablist[j] occurs.
    # Words outside the vocabulary are ignored.
    returnVec = [0] * len(vocablist)
    for word in inputSet:
        if word in vocablist:
            returnVec[vocablist.index(word)] += 1
    return returnVec


if __name__ == '__main__':
    doc_list, class_list = readfiles()
    vocab_list = createVocabList(doc_list)
    trainingSet = list(range(len(doc_list)))  # all 6 labelled documents
    trainMat = []
    trainLabel = []
    for docIndex in trainingSet:
        trainMat.append(setWords2Vec(vocab_list, doc_list[docIndex]))
        trainLabel.append(class_list[docIndex])
    print(trainMat)
    print(trainLabel)
```
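To see what the vectors look like, here is a small standalone example with the documents typed in directly and an alphabetically sorted vocabulary (with the original `list(vocabSet)`, the column order, and therefore the printed matrix, can differ between runs). `setWords2Vec` is copied from above unchanged.

```python
def setWords2Vec(vocablist, inputSet):
    # Same counting logic as above: one slot per vocabulary word
    returnVec = [0] * len(vocablist)
    for word in inputSet:
        if word in vocablist:
            returnVec[vocablist.index(word)] += 1
    return returnVec


docs = [
    ["ball", "goal", "cart", "goal"],            # d1, Sports
    ["theater", "cart", "drama"],                # d2, Culture
    ["drama", "strategy", "decision", "drama"],  # d3, Politics
    ["theater", "ball"],                         # d4, Culture
    ["ball", "goal", "player", "strategy"],      # d5, Sports
    ["theater", "cart", "opera"],                # d6, Culture
]
vocab = sorted(set(word for doc in docs for word in doc))
print(vocab)
# ['ball', 'cart', 'decision', 'drama', 'goal', 'opera', 'player', 'strategy', 'theater']
print(setWords2Vec(vocab, docs[0]))                         # d1 → [1, 1, 0, 0, 2, 0, 0, 0, 0]
print(setWords2Vec(vocab, ["ball", "player", "strategy"]))  # d7 → [1, 0, 0, 0, 0, 0, 1, 1, 0]
```

Because `goal` appears twice in d1, its entry is 2: despite its name, `setWords2Vec` builds a bag-of-words (count) vector rather than a set-of-words (0/1) vector, which matches how `train()` below sums the counts.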

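With the vectors in place, we can classify with Bayes' rule. One pitfall is zero probabilities: if a word never occurs in a category's training documents, its estimated probability is 0 and the whole product for that category becomes 0. To prevent this, every word count is initialized to 1 and each category's denominator to 2. This is a simplified form of Laplace smoothing; the textbook add-one estimate adds the vocabulary size |V| to the denominator instead of 2. The code also sums log-probabilities instead of multiplying probabilities, which avoids floating-point underflow. The code is as follows: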

```python
import os
import re

import numpy as np


def textParse(String):
    # r'\W+' splits on runs of non-word characters; empty tokens are dropped
    return [tok for tok in re.split(r'\W+', String) if tok]


def readfiles():
    doc_list = []
    class_list = []
    # Labels: 1 = culture, 2 = politics, 3 = sports
    file_lists = ['culture', 'politics', 'sports']
    for i, folder in enumerate(file_lists):
        for txtfile in sorted(os.listdir(folder)):
            with open(os.path.join(folder, txtfile), 'r') as f:
                doc_list.append(textParse(f.read()))
            class_list.append(i + 1)
    return doc_list, class_list


def createVocabList(dataSet):
    # Sorted union of all words, so the column order is stable between runs
    vocabSet = set()
    for document in dataSet:
        vocabSet |= set(document)
    return sorted(vocabSet)


def setWords2Vec(vocablist, inputSet):
    # Bag-of-words vector: entry j counts occurrences of vocablist[j]
    returnVec = [0] * len(vocablist)
    for word in inputSet:
        if word in vocablist:
            returnVec[vocablist.index(word)] += 1
    return returnVec


def train(trainMatrix, trainCategory):
    num_train = len(trainMatrix)
    num_words = len(trainMatrix[0])
    # Class priors P(c): fraction of training documents in each class
    p_culture = list(trainCategory).count(1) / float(num_train)
    p_politics = list(trainCategory).count(2) / float(num_train)
    p_sports = list(trainCategory).count(3) / float(num_train)
    # Smoothing: every word count starts at 1 and every denominator at 2,
    # so no word ends up with probability 0
    p_culture_Num = np.ones(num_words)
    p_politics_Num = np.ones(num_words)
    p_sports_Num = np.ones(num_words)
    p_culture_la = 2.0
    p_politics_la = 2.0
    p_sports_la = 2.0
    # Accumulate per-class word counts and per-class total word counts
    for i in range(num_train):
        if trainCategory[i] == 1:
            p_culture_Num += trainMatrix[i]
            p_culture_la += sum(trainMatrix[i])
        elif trainCategory[i] == 2:
            p_politics_Num += trainMatrix[i]
            p_politics_la += sum(trainMatrix[i])
        elif trainCategory[i] == 3:
            p_sports_Num += trainMatrix[i]
            p_sports_la += sum(trainMatrix[i])
    # log P(word | class); logs avoid underflow when many probabilities are multiplied
    p_culture_vect = np.log(p_culture_Num / p_culture_la)
    p_politics_vect = np.log(p_politics_Num / p_politics_la)
    p_sports_vect = np.log(p_sports_Num / p_sports_la)
    return p_culture_vect, p_politics_vect, p_sports_vect, p_culture, p_politics, p_sports


def classify(vec, p_culture_vect, p_politics_vect, p_sports_vect, p_culture, p_politics, p_sports):
    # Score of class c: log P(c) + sum_j vec[j] * log P(word_j | c)
    scores = [np.sum(vec * p_culture_vect) + np.log(p_culture),
              np.sum(vec * p_politics_vect) + np.log(p_politics),
              np.sum(vec * p_sports_vect) + np.log(p_sports)]
    # argmax always picks a class; a chain of strict '>' tests returns None on ties
    return ['culture', 'politics', 'sports'][int(np.argmax(scores))]


if __name__ == '__main__':
    doc_list, class_list = readfiles()
    vocab_list = createVocabList(doc_list)
    trainingSet = list(range(len(doc_list)))  # all 6 labelled documents
    trainMat = []
    trainLabel = []
    for docIndex in trainingSet:
        trainMat.append(setWords2Vec(vocab_list, doc_list[docIndex]))
        trainLabel.append(class_list[docIndex])
    p_culture_vect, p_politics_vect, p_sports_vect, p_culture, p_politics, p_sports = train(
        np.array(trainMat), np.array(trainLabel))
    # d7 and d8, the two unlabelled documents
    testSet = [['ball', 'player', 'strategy'], ['theater', 'cart', 'decision']]
    for words in testSet:
        wordVector = setWords2Vec(vocab_list, words)
        print(classify(np.array(wordVector), p_culture_vect, p_politics_vect, p_sports_vect,
                       p_culture, p_politics, p_sports))
```
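To check the predictions by hand, the sketch below redoes the computation with exact fractions instead of logarithms (the log is monotonic, so the winning class is the same). It uses the same smoothing as `train()`: counts start at 1 and denominators at 2. The parameter `denom_extra` and the function `posteriors` are hypothetical additions for comparison: passing the vocabulary size |V| = 9 gives the textbook Laplace estimate (count + 1) / (total + |V|). With either choice, d7 comes out as sports and d8 as culture.

```python
from collections import Counter
from fractions import Fraction

TRAIN = [
    (["ball", "goal", "cart", "goal"], "sports"),
    (["theater", "cart", "drama"], "culture"),
    (["drama", "strategy", "decision", "drama"], "politics"),
    (["theater", "ball"], "culture"),
    (["ball", "goal", "player", "strategy"], "sports"),
    (["theater", "cart", "opera"], "culture"),
]


def posteriors(doc, denom_extra=2):
    """Unnormalised P(c) * prod_w P(w | c) for every class, as exact fractions.

    P(w | c) = (count(w, c) + 1) / (total words in c + denom_extra).
    denom_extra=2 mirrors train() above; denom_extra=|V| is textbook Laplace smoothing.
    """
    labels = [label for _, label in TRAIN]
    scores = {}
    for c in sorted(set(labels)):
        counts = Counter(w for words, label in TRAIN if label == c for w in words)
        total = sum(counts.values())
        score = Fraction(labels.count(c), len(TRAIN))  # prior P(c)
        for w in doc:  # one factor per word occurrence, like vec * log-probs
            score *= Fraction(counts[w] + 1, total + denom_extra)
        scores[c] = score
    return scores


vocab_size = len({w for words, _ in TRAIN for w in words})  # |V| = 9
for doc in (["ball", "player", "strategy"], ["theater", "cart", "decision"]):
    for extra in (2, vocab_size):
        s = posteriors(doc, extra)
        print(doc, extra, max(s, key=s.get), {c: float(v) for c, v in s.items()})
```

For d7 with the `train()` smoothing, for example, the sports score is 1/3 × 3/10 × 2/10 × 2/10 = 1/250, four times the culture score of 1/1000.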