Deploying a Kubernetes v1.15.1 Cluster with a Standalone etcd v3.3.10 Cluster

Hi everyone, I'm Linke, a Linux ops engineer. My skills are solid and I never leave pitfalls behind~

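First, the overall deployment plan~

The very first thing is to initialize the system environment, because kubelet has requirements on the system configuration: swap must be off and firewalld must not be running.

Next you need an etcd cluster. Production has to use a cluster, otherwise there is no high availability to speak of; three members is the minimum.

Since we want high availability, we also need HA software, here keepalived.

Then comes the k8s service, kubelet.

After that, the container network, flannel.

Finally, join all the nodes to the cluster.

Enough talk, let's get to work.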

Server layout

| IP | Hostname | Services |
|---|---|---|
| 192.168.2.101 | k8smaster101 | etcd, k8s master, keepalived |
| 192.168.2.102 | k8smaster102 | etcd, k8s master, keepalived |
| 192.168.2.103 | k8smaster103 | etcd, k8s master, keepalived |
| 192.168.2.104 | k8snode104 | k8s node |
| 192.168.2.105 | k8snode105 | k8s node |
| 192.168.2.100 | VIP | high-availability virtual IP |

Software versions

CentOS 7.6 (64-bit)

etcd v3.3.10

kubelet v1.15.1

docker 18.03.1-ce

Keepalived v1.3.5

I. Preparation

1. Update packages with yum

yum -y update

2. Stop the firewall

systemctl stop firewalld

systemctl disable firewalld

3. Synchronize time

(echo "*/10 * * * * /usr/sbin/ntpdate asia.pool.ntp.org"; crontab -l) | crontab -

crontab -l

4. Disable swap

swapoff -a

sed -i '/swap/d' /etc/fstab

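5. Make bridged traffic pass through iptables (the value 1 enables this, which is what kubeadm's preflight check expects)

modprobe br_netfilter  # the net.bridge.* keys only exist once this module is loaded

cat <<EOF > /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

EOF

sysctl -p /etc/sysctl.d/k8s.conf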

6. Disable SELinux

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

setenforce 0
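Before moving on, it can be worth confirming that the preparation steps actually took effect. The following is only a rough sketch and not part of the original procedure: the function names are made up for illustration, and each check takes an optional file path so it can be pointed at a copy of the file instead of the live system.

```shell
#!/usr/bin/env bash
# Sanity checks for the preparation steps. Function names are illustrative.

# Succeeds when no swap device is active (only the header line is present).
swap_is_off() {
    local swaps_file="${1:-/proc/swaps}"
    [ "$(tail -n +2 "$swaps_file" | wc -l)" -eq 0 ]
}

# Succeeds when fstab has no remaining uncommented swap entry.
fstab_has_no_swap() {
    local fstab="${1:-/etc/fstab}"
    ! grep -Eq '^[^#].*[[:space:]]swap[[:space:]]' "$fstab"
}

# Succeeds when the SELinux config file says "disabled".
selinux_disabled_in_config() {
    local cfg="${1:-/etc/selinux/config}"
    grep -Eq '^SELINUX=disabled' "$cfg"
}

# report <label> <command...>: run a check and print a one-line verdict.
report() {
    local label="$1"
    shift
    if "$@" 2>/dev/null; then
        echo "OK    $label"
    else
        echo "CHECK $label"
    fi
}

report "swap is off"               swap_is_off
report "no swap entry in fstab"    fstab_has_no_swap
report "SELinux disabled (config)" selinux_disabled_in_config
```

A line starting with CHECK only means the step is worth looking at again; it does not change anything on the system.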

II. Install the etcd v3.3.10 cluster

1. On k8smaster101, download the cfssl, cfssljson and cfssl-certinfo tools

wget https://pkg.cfssl.org/R1.2/cfssl_linux-amd64

chmod +x cfssl_linux-amd64

mv cfssl_linux-amd64 /usr/local/bin/cfssl

wget https://pkg.cfssl.org/R1.2/cfssljson_linux-amd64

chmod +x cfssljson_linux-amd64

mv cfssljson_linux-amd64 /usr/local/bin/cfssljson

wget https://pkg.cfssl.org/R1.2/cfssl-certinfo_linux-amd64

chmod +x cfssl-certinfo_linux-amd64

mv cfssl-certinfo_linux-amd64 /usr/local/bin/cfssl-certinfo

2. On k8smaster101, create the files needed for key generation

mkdir keys

cd keys

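cat > ca-csr.json <<EOF   (file contents not preserved in this post)

Make the certificate validity period generous here; 87600h = 10 years.

cat > ca-config.json <<EOF   (file contents not preserved in this post)

cat > etcd-csr.json <<EOF   (file contents not preserved in this post)

3. Generate the key files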

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

cfssl gencert -ca=ca.pem \
-ca-key=ca-key.pem \
-config=ca-config.json \
-profile=kubernetes etcd-csr.json | cfssljson -bare etcd

mkdir -p /etc/etcd/ssl

cp etcd.pem etcd-key.pem ca.pem /etc/etcd/ssl/

scp -r /etc/etcd 192.168.2.102:/etc/

scp -r /etc/etcd 192.168.2.103:/etc/
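The bodies of ca-csr.json, ca-config.json and etcd-csr.json are not shown in this post, so here is a hedged sketch of what they might look like. Only the profile name `kubernetes` (it has to match `-profile=kubernetes`), the 87600h expiry and the three etcd IPs come from the text; the CN values, the `names` entries and the `usages` list are my assumptions and should be adapted.

```shell
#!/usr/bin/env bash
# Sketch of the three cfssl input files. Everything except the "kubernetes"
# profile name, the 87600h expiry and the node IPs is an assumption.
out_dir="${KEYS_DIR:-./keys}"
mkdir -p "$out_dir"

# CSR for the self-signed CA (placeholder subject fields).
cat > "$out_dir/ca-csr.json" <<'EOF'
{
  "CN": "etcd-ca",
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "O": "example", "OU": "ops" } ]
}
EOF

# Signing policy: one profile named "kubernetes", valid for 10 years.
cat > "$out_dir/ca-config.json" <<'EOF'
{
  "signing": {
    "default": { "expiry": "87600h" },
    "profiles": {
      "kubernetes": {
        "expiry": "87600h",
        "usages": [ "signing", "key encipherment", "server auth", "client auth" ]
      }
    }
  }
}
EOF

# CSR for the etcd certificate; hosts lists every address etcd is reached on.
cat > "$out_dir/etcd-csr.json" <<'EOF'
{
  "CN": "etcd",
  "hosts": [ "127.0.0.1", "192.168.2.101", "192.168.2.102", "192.168.2.103" ],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "O": "example", "OU": "ops" } ]
}
EOF

ls -l "$out_dir"
```

The same certificate is used here both as server certificate and as client certificate (etcdctl and kubeadm present it to etcd), which is why the sketch lists both `server auth` and `client auth`.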

4. On k8smaster101, download etcd-v3.3.10-linux-amd64.tar.gz and copy it to k8smaster102 and k8smaster103. Install it on every etcd node

wget https://github.com/etcd-io/etcd/releases/download/v3.3.10/etcd-v3.3.10-linux-amd64.tar.gz

tar -xvf etcd-v3.3.10-linux-amd64.tar.gz

cd etcd-v3.3.10-linux-amd64

cp -a etcd* /usr/local/bin/

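5. Create the etcd systemd unit file on k8smaster101, k8smaster102 and k8smaster103; remember to adjust the --name option and the IPs for each node

k8smaster101

cat > /etc/systemd/system/etcd.service <<EOF   (unit file contents not preserved in this post)

k8smaster102

cat > /etc/systemd/system/etcd.service <<EOF   (unit file contents not preserved in this post)

k8smaster103

cat > /etc/systemd/system/etcd.service <<EOF   (unit file contents not preserved in this post)

6. Start the etcd cluster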

mkdir -p /var/lib/etcd

systemctl daemon-reload

systemctl enable etcd

systemctl start etcd

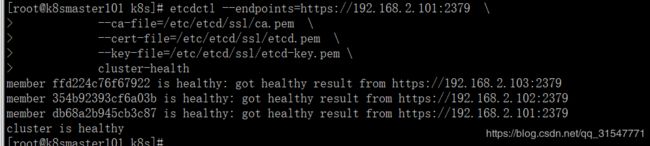
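The etcd unit files from step 5 are not reproduced in this post. As a rough sketch of what a unit for this layout could look like, the function below renders one per member from its name and IP. The binary path, certificate paths, data directory and IPs follow the post; the exact set of flags and the cluster token `etcd-cluster-0` are assumptions on my part, not the author's original file.

```shell
#!/usr/bin/env bash
# Renders an etcd.service unit for one member. Paths and IPs follow this
# post; the flag selection and the cluster token are assumptions.

ETCD_INITIAL_CLUSTER="k8smaster101=https://192.168.2.101:2380,k8smaster102=https://192.168.2.102:2380,k8smaster103=https://192.168.2.103:2380"

render_etcd_unit() {  # render_etcd_unit <member-name> <member-ip>
    local name="$1" ip="$2"
    cat <<EOF
[Unit]
Description=etcd
After=network.target

[Service]
Type=notify
WorkingDirectory=/var/lib/etcd
ExecStart=/usr/local/bin/etcd \\
  --name=${name} \\
  --data-dir=/var/lib/etcd \\
  --cert-file=/etc/etcd/ssl/etcd.pem \\
  --key-file=/etc/etcd/ssl/etcd-key.pem \\
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \\
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \\
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \\
  --client-cert-auth \\
  --peer-client-cert-auth \\
  --listen-peer-urls=https://${ip}:2380 \\
  --initial-advertise-peer-urls=https://${ip}:2380 \\
  --listen-client-urls=https://${ip}:2379,https://127.0.0.1:2379 \\
  --advertise-client-urls=https://${ip}:2379 \\
  --initial-cluster-token=etcd-cluster-0 \\
  --initial-cluster=${ETCD_INITIAL_CLUSTER} \\
  --initial-cluster-state=new
Restart=on-failure
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target
EOF
}

# Example: print the unit for k8smaster101. On the node itself, redirect the
# output to /etc/systemd/system/etcd.service.
render_etcd_unit k8smaster101 192.168.2.101
```

Only `--name` and the four URL flags differ between members, which is exactly the part step 5 asks you to change by hand.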

7. Verify the etcd cluster health

etcdctl --endpoints=https://192.168.2.101:2379 \
--ca-file=/etc/etcd/ssl/ca.pem \
--cert-file=/etc/etcd/ssl/etcd.pem \
--key-file=/etc/etcd/ssl/etcd-key.pem \
cluster-health
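The check above talks to a single endpoint. If you would rather hand etcdctl all three members at once, a small helper can build the `--endpoints` value; this is a sketch, and `join_endpoints` is a name I made up. `ETCDCTL_API=2` is set explicitly because `cluster-health` and the `--ca-file` style flags belong to the v2 API, which is the default for etcdctl 3.3.

```shell
#!/usr/bin/env bash
# Build a comma-separated --endpoints value from a list of member IPs.
join_endpoints() {
    local ip list=""
    for ip in "$@"; do
        list="${list:+$list,}https://${ip}:2379"
    done
    echo "$list"
}

ENDPOINTS=$(join_endpoints 192.168.2.101 192.168.2.102 192.168.2.103)
echo "$ENDPOINTS"

# Only query the cluster when etcdctl and the certificates are present.
if command -v etcdctl >/dev/null 2>&1 && [ -r /etc/etcd/ssl/ca.pem ]; then
    ETCDCTL_API=2 etcdctl --endpoints="$ENDPOINTS" \
        --ca-file=/etc/etcd/ssl/ca.pem \
        --cert-file=/etc/etcd/ssl/etcd.pem \
        --key-file=/etc/etcd/ssl/etcd-key.pem \
        cluster-health
fi
```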

III. Install keepalived

1. Install keepalived on each of the three master servers

yum -y install keepalived

2. Create the configuration file on k8smaster101, k8smaster102 and k8smaster103

k8smaster101

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://192.168.2.100:6443" # vip

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state MASTER

interface ens160 # local network interface name

virtual_router_id 61

priority 120 # priority, must be unique per node

advert_int 1

mcast_src_ip 192.168.2.101 # local IP

nopreempt

authentication {

auth_type PASS

auth_pass sqP05dQgMSlzrxHj

}

unicast_peer {

192.168.2.102

192.168.2.103

}

virtual_ipaddress {

192.168.2.100/24 # VIP

}

track_script {

CheckK8sMaster

}

}

EOF

k8smaster102

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://192.168.2.100:6443" # vip

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface ens160 # local network interface name

virtual_router_id 61

priority 110 # priority, must be unique per node

advert_int 1

mcast_src_ip 192.168.2.102 # local IP

nopreempt

authentication {

auth_type PASS

auth_pass sqP05dQgMSlzrxHj

}

unicast_peer {

192.168.2.101

192.168.2.103

}

virtual_ipaddress {

192.168.2.100/24 # VIP

}

track_script {

CheckK8sMaster

}

}

EOF

k8smaster103

cat > /etc/keepalived/keepalived.conf << EOF

global_defs {

router_id LVS_k8s

}

vrrp_script CheckK8sMaster {

script "curl -k https://192.168.2.100:6443" # vip

interval 3

timeout 9

fall 2

rise 2

}

vrrp_instance VI_1 {

state BACKUP

interface ens160 # local network interface name

virtual_router_id 61

priority 100 # priority, must be unique per node

advert_int 1

mcast_src_ip 192.168.2.103 # local IP

nopreempt

authentication {

auth_type PASS

auth_pass sqP05dQgMSlzrxHj

}

unicast_peer {

192.168.2.101

192.168.2.102

}

virtual_ipaddress {

192.168.2.100/24 # VIP

}

track_script {

CheckK8sMaster

}

}

EOF
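The three configuration files above differ only in `state`, `priority`, the local IP and the peer list. As a sketch of how to avoid maintaining three hand-edited copies, the function below renders the same file from those four inputs. `render_keepalived_conf` and the `VIP` / `IFACE` variables are names I introduced for illustration; all values are taken from the configs above.

```shell
#!/usr/bin/env bash
# Render keepalived.conf for one master from its per-node values.
VIP="192.168.2.100"
IFACE="ens160"   # local network interface name, adjust per host

render_keepalived_conf() {  # <state> <priority> <local-ip> <peer-ip>...
    local state="$1" priority="$2" local_ip="$3"
    shift 3
    local peers
    peers=$(printf '        %s\n' "$@")   # one indented line per peer
    cat <<EOF
global_defs {
    router_id LVS_k8s
}
vrrp_script CheckK8sMaster {
    script "curl -k https://${VIP}:6443"
    interval 3
    timeout 9
    fall 2
    rise 2
}
vrrp_instance VI_1 {
    state ${state}
    interface ${IFACE}
    virtual_router_id 61
    priority ${priority}
    advert_int 1
    mcast_src_ip ${local_ip}
    nopreempt
    authentication {
        auth_type PASS
        auth_pass sqP05dQgMSlzrxHj
    }
    unicast_peer {
${peers}
    }
    virtual_ipaddress {
        ${VIP}/24
    }
    track_script {
        CheckK8sMaster
    }
}
EOF
}

# Example: the file for k8smaster102. On the node, redirect the output to
# /etc/keepalived/keepalived.conf.
render_keepalived_conf BACKUP 110 192.168.2.102 192.168.2.101 192.168.2.103
```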

3. Start keepalived on all three servers

systemctl enable keepalived

systemctl start keepalived

systemctl status keepalived

4. On the node with the highest priority, check whether it holds the VIP

ip addr
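Scanning the full `ip addr` output by eye works, but the check is easy to script. A minimal sketch, assuming the one-line-per-address format of `ip -o -4 addr show`; `has_vip` is a helper name made up for this example.

```shell
#!/usr/bin/env bash
# Succeeds when the given address appears in `ip -o -4 addr show` output
# read from stdin.
has_vip() {
    grep -qF "inet $1/"
}

# Demo on a canned line of the same shape `ip -o -4 addr show` prints.
sample='2: ens160    inet 192.168.2.100/24 scope global secondary ens160'
if echo "$sample" | has_vip 192.168.2.100; then
    echo "VIP found in sample"
fi

# On a real master:
#   ip -o -4 addr show | has_vip 192.168.2.100 && echo "this node holds the VIP"
```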

IV. Install Docker

1. Install Docker on every node

wget http://mirrors.aliyun.com/docker-ce/linux/centos/7/x86_64/stable/Packages/docker-ce-18.03.1.ce-1.el7.centos.x86_64.rpm

yum install -y docker-ce-18.03.1.ce-1.el7.centos.x86_64.rpm

systemctl start docker

systemctl enable docker

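V. Install the Kubernetes cluster

1. Download the packages from the official site through your own proxy, or use the bundle from my Baidu cloud drive. No need to thank me, just take it.

Download: k8s-rpm&images

Extraction code: z6dg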

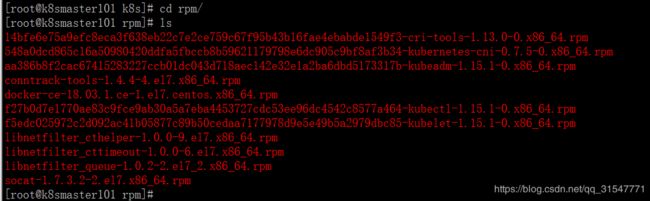

2. On every node, create an rpm directory, download the rpm packages into it, run the install command, and enable kubelet at boot

cd rpm

yum install -y *rpm

systemctl enable kubelet

3. On every node, create an images directory, download the images into it, and load them

cd images

for i in *; do docker load < "$i"; done

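4. Create a kubeconf directory, download the kubeadm-conf.yaml and kube-flannel.yml configuration files into it, and edit them

Edit kubeadm-conf.yaml:

★ certSANs: set the IPs and the matching master hostnames

★ etcd endpoints: change them to the IPs of your etcd nodes

★ controlPlaneEndpoint: change it to the VIP

★ serviceSubnet: the IP range that Services inside k8s will use

★ podSubnet: the IP range that Pods inside k8s will use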
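One thing worth checking before settling on serviceSubnet and podSubnet is that the two ranges do not overlap each other or the host network. The snippet below is a minimal sketch of such a check in plain bash; `ip_to_int` and `cidr_overlap` are helper names I made up, and the host range 192.168.2.0/24 is inferred from the addresses used in this post.

```shell
#!/usr/bin/env bash
# Convert a dotted IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=. a b c d
    read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeeds when two CIDR blocks overlap: compare both network addresses
# under the shorter of the two prefix lengths.
cidr_overlap() {
    local ip1="${1%/*}" len1="${1#*/}" ip2="${2%/*}" len2="${2#*/}"
    local min=$(( len1 < len2 ? len1 : len2 ))
    local mask=$(( min == 0 ? 0 : (0xFFFFFFFF << (32 - min)) & 0xFFFFFFFF ))
    [ $(( $(ip_to_int "$ip1") & mask )) -eq $(( $(ip_to_int "$ip2") & mask )) ]
}

check_pair() {
    if cidr_overlap "$1" "$2"; then
        echo "OVERLAP  $1  $2"
    else
        echo "ok       $1  $2"
    fi
}

SERVICE_SUBNET="10.92.0.0/16"
POD_SUBNET="10.2.0.0/16"
NODE_SUBNET="192.168.2.0/24"   # assumption: the hosts share this /24

check_pair "$SERVICE_SUBNET" "$POD_SUBNET"
check_pair "$SERVICE_SUBNET" "$NODE_SUBNET"
check_pair "$POD_SUBNET"     "$NODE_SUBNET"
```

Two blocks overlap exactly when one contains the other, so comparing the network bits under the shorter prefix is enough.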

cd kubeconf

vim kubeadm-conf.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
---
apiServer:
  timeoutForControlPlane: 4m0s
  certSANs:
  - 192.168.2.100
  - 192.168.2.101
  - 192.168.2.102
  - 192.168.2.103
  - "k8smaster101"
  - "k8smaster102"
  - "k8smaster103"
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  external:
    endpoints:
    - https://192.168.2.101:2379
    - https://192.168.2.102:2379
    - https://192.168.2.103:2379
    caFile: /etc/etcd/ssl/ca.pem
    certFile: /etc/etcd/ssl/etcd.pem
    keyFile: /etc/etcd/ssl/etcd-key.pem
imageRepository: k8s.gcr.io
kind: ClusterConfiguration
kubernetesVersion: v1.15.1
controlPlaneEndpoint: "192.168.2.100:6443"
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.92.0.0/16
  podSubnet: 10.2.0.0/16
scheduler: {}

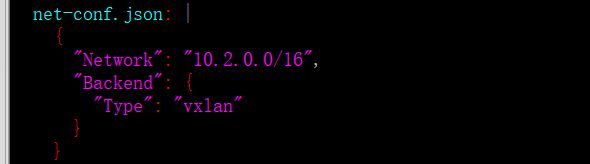

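Edit kube-flannel.yml. Leave everything else in this file alone and change just one place

net-conf.json: find this entry and change the IP after Network to the Pod subnet defined in the previous configuration file, 10.2.0.0/16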

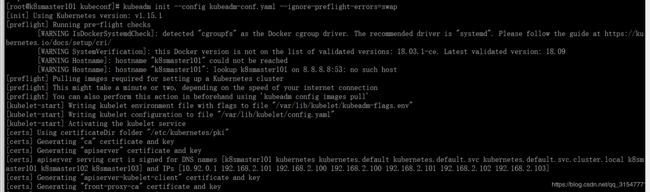

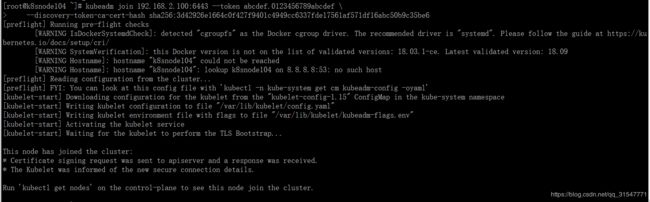
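If you prefer not to edit the manifest by hand, the Network value can also be rewritten with sed. This is a sketch under the assumption that your kube-flannel.yml contains a `"Network": "..."` line inside net-conf.json, as the upstream manifest does; `set_flannel_network` is a hypothetical helper name, and the demo runs against a small stand-in file rather than the real manifest.

```shell
#!/usr/bin/env bash
# Replace the value of "Network" in a flannel manifest; keeps a .bak copy.
set_flannel_network() {  # <manifest> <pod-cidr>
    sed -i.bak "s#\"Network\":[[:space:]]*\"[^\"]*\"#\"Network\": \"$2\"#" "$1"
}

# Demo on a stand-in fragment shaped like the net-conf.json entry.
demo_file=$(mktemp)
cat > "$demo_file" <<'EOF'
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
EOF

set_flannel_network "$demo_file" 10.2.0.0/16
grep '"Network"' "$demo_file"

# On the real file:
#   set_flannel_network kube-flannel.yml 10.2.0.0/16
```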

5. Initialize Kubernetes. On success the output looks like the two screenshots below

kubeadm init --config kubeadm-conf.yaml --ignore-preflight-errors=swap

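PS: If initialization fails, be sure to clear kubeadm's state and the data in etcd before running it again; otherwise the next attempt fails with other, unrelated errors. The --cacert/--cert/--key flags and `del --prefix` belong to the etcd v3 API, so ETCDCTL_API=3 has to be set for etcdctl 3.3.

kubeadm reset

ETCDCTL_API=3 etcdctl \
--endpoints="https://192.168.2.101:2379,https://192.168.2.102:2379,https://192.168.2.103:2379" \
--cacert=/etc/etcd/ssl/ca.pem \
--cert=/etc/etcd/ssl/etcd.pem \
--key=/etc/etcd/ssl/etcd-key.pem \
del /registry --prefix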

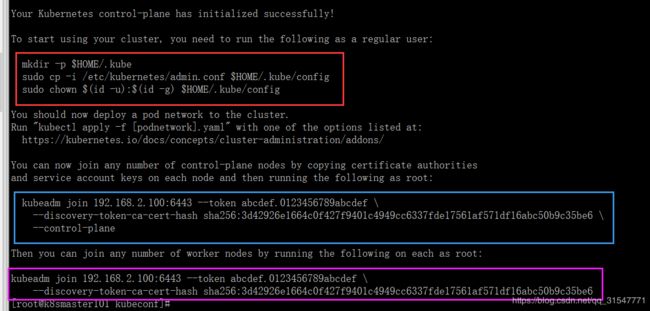

6. Run the commands shown in the red box in the step 5 screenshot

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

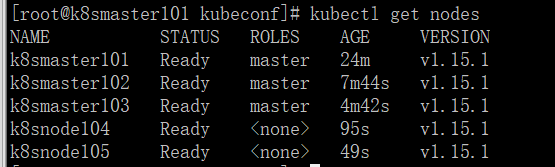

7. Check that the current master appears in the k8s cluster

kubectl get nodes

8. Start the flannel network for the k8s cluster, then list all pods in k8s to verify that flannel is running, as shown

kubectl create -f kube-flannel.yml

kubectl get pod --all-namespaces

9. Add the other two master nodes. Copy /etc/kubernetes/pki to /etc/kubernetes/ on k8smaster102 and k8smaster103, then run the command from the blue box in the step 5 screenshot on k8smaster102 and k8smaster103. This joins each of them to the k8s cluster as a master node

Copy the pki directory over

scp -r /etc/kubernetes/pki/ 192.168.2.102:/etc/kubernetes/

scp -r /etc/kubernetes/pki/ 192.168.2.103:/etc/kubernetes/

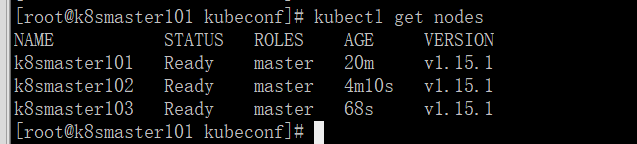
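Copying the whole pki directory works here. A narrower alternative, and not what this post does, is to copy only the shared CA and service-account files that the kubeadm HA guide lists for an external-etcd setup and let `kubeadm join` generate the per-node certificates. The sketch below defaults to a dry run that only prints the commands; `copy_pki` and the `RUN` variable are names I made up.

```shell
#!/usr/bin/env bash
# Copy only the shared CA material to another control-plane node.
# Default is a dry run; call with RUN= (empty) to really execute.
RUN="${RUN-echo}"

PKI_DIR="/etc/kubernetes/pki"
PKI_FILES="ca.crt ca.key sa.key sa.pub front-proxy-ca.crt front-proxy-ca.key"

copy_pki() {  # copy_pki <host>
    local host="$1" f
    ${RUN} ssh "$host" mkdir -p "$PKI_DIR"
    for f in $PKI_FILES; do
        ${RUN} scp "$PKI_DIR/$f" "$host:$PKI_DIR/$f"
    done
}

for host in 192.168.2.102 192.168.2.103; do
    copy_pki "$host"
done
```

The etcd client certificates under /etc/etcd/ssl were already copied to all three masters in section II, so they are not repeated here.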

Run the following on k8smaster102 and k8smaster103; on success the output looks like the screenshot

kubeadm join 192.168.2.100:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:3d42926e1664c0f427f9401c4949cc6337fde17561af571df16abc50b9c35be6 \
--control-plane

Then, on k8smaster101, list the nodes in the k8s cluster; all 3 masters have now joined

kubectl get nodes

10. Add the worker nodes. There is no need to copy pki; just run the command from the pink box in the step 5 screenshot on both node machines

kubeadm join 192.168.2.100:6443 --token abcdef.0123456789abcdef \
--discovery-token-ca-cert-hash sha256:3d42926e1664c0f427f9401c4949cc6337fde17561af571df16abc50b9c35be6

kubectl get nodes
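With five nodes it is easy to miss one that has not come up yet. A small filter over `kubectl get nodes --no-headers` can list only the nodes whose STATUS column is not exactly `Ready`; this is a sketch, and `not_ready_nodes` is a name made up for the example. The demo feeds it canned output so that it runs without a cluster.

```shell
#!/usr/bin/env bash
# Print the names of nodes whose STATUS is not exactly "Ready".
# Input: the output of `kubectl get nodes --no-headers` on stdin.
# Note: a cordoned node ("Ready,SchedulingDisabled") is listed as well.
not_ready_nodes() {
    awk '$2 != "Ready" { print $1 }'
}

# Demo with canned output in the column layout kubectl prints.
not_ready_nodes <<'EOF'
k8smaster101   Ready      master   20m   v1.15.1
k8smaster102   Ready      master   12m   v1.15.1
k8snode104     NotReady   <none>   1m    v1.15.1
EOF

# On a master:
#   kubectl get nodes --no-headers | not_ready_nodes
```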

==================================================

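VI. What does it take to add a new node to the k8s cluster?

Add a new host named k8snode106 with IP 192.168.2.106

Recap of the steps above

1. Complete the preparation work from section I

2. Copy the images and rpm packages to this node, then install the packages and load the images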

yum install -y *rpm

systemctl enable docker

systemctl enable kubelet

systemctl start docker

3. On a master node, print the command for joining a node

kubeadm token create --print-join-command
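The token in the printed command is new each time, but the `--discovery-token-ca-cert-hash` value is derived from the cluster CA and stays the same. If you want to double-check it, the hash can be recomputed from ca.crt with the openssl pipeline from the kubeadm documentation. A minimal sketch, assuming an RSA CA key (the kubeadm default) and openssl on the PATH; `ca_cert_hash` is just a wrapper name I chose.

```shell
#!/usr/bin/env bash
# Print the SHA-256 hash of the CA certificate's public key, in the form
# kubeadm expects after the "sha256:" prefix.
ca_cert_hash() {  # ca_cert_hash <path-to-ca.crt>
    openssl x509 -pubkey -noout -in "$1" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Only runs where the cluster CA is actually present (a master node).
if [ -r /etc/kubernetes/pki/ca.crt ]; then
    echo "sha256:$(ca_cert_hash /etc/kubernetes/pki/ca.crt)"
fi
```

The value printed on a master should match the hash shown in the join command.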

4. On 192.168.2.106, run the command obtained in step 3

kubeadm join 192.168.2.100:6443 --token tdwnze.fzp0rrf5s9kyhmxq --discovery-token-ca-cert-hash sha256:3d42926e1664c0f427f9401c4949cc6337fde17561af571df16abc50b9c35be6

Wait for it to finish, then check from a master node.