决策树中连续值与缺失值的处理方法

连续值的处理方法

对于连续属性,不能直接根据连续属性的可取值对节点进行划分,可以使用二分法对连续属性进行划分。

划分方法

假设数据集 D D D中的属性 a a a是连续的,那么对于 a a a中的结点,每两个结点取中值作为候选划分点,然后就可以像离散属性值一样处理这些候选划分点。

G a i n ( D , a , t ) = E n t ( D ) − ∑ ∣ D t k ∣ ∣ D ∣ E n t ( D t k ) Gain(D,a,t)=Ent(D)-\sum_{}{} \frac{|D^k_t|}{|D|} Ent(D^k_t) Gain(D,a,t)=Ent(D)−∑∣D∣∣Dtk∣Ent(Dtk)

计算方法

还是使用西瓜数据集,如下图

数据集中密度和含糖率是连续的值,我们对其进行处理,以密度作为范例进行计算:

首先对密度属性值进行排序

{ 0.234 , 0.245 , 0.343 , 0.360 , 0.403 , 0.437 , 0.481 , 0.556 , 0.593 , 0.608 , 0.634 , 0.639 , 0.657 , 0.666 , 0.697 , 0.719 , 0.774 } \lbrace0.234,0.245,0.343,0.360,0.403,0.437,0.481,0.556,0.593,0.608,0.634,0.639,0.657,0.666,0.697,0.719,0.774\rbrace {0.234,0.245,0.343,0.360,0.403,0.437,0.481,0.556,0.593,0.608,0.634,0.639,0.657,0.666,0.697,0.719,0.774}

划分候选点:

{ 0.244 , 0.294 , 0.351 , 0.381 , 0.420 , 0.459 , 0.518 , 0.574 , 0.600 , 0.621 , 0.636 , 0.648 , 0.661 , 0.681 , 0.708 , 0.746 } \lbrace0.244,0.294,0.351,0.381,0.420,0.459,0.518,0.574,0.600,0.621,0.636,0.648,0.661,0.681,0.708,0.746\rbrace {0.244,0.294,0.351,0.381,0.420,0.459,0.518,0.574,0.600,0.621,0.636,0.648,0.661,0.681,0.708,0.746}

对上述候选点分别计算信息熵:

取候选点0.381:

观察数据集,小于0.381的点有4个,大于0.381的点有13个,其中小于0.381的点中没有正例,小于0.381的13个点中有5个正例;

E n t ( D t − ) = 0 ; Ent(D_t^-)=0; Ent(Dt−)=0;

E n t ( D t + ) = − ( 5 13 l o g 2 5 13 + 8 13 l o g 2 8 13 ) = 0.961 Ent(D_t^+)=-(\frac{5}{13}log_2\frac{5}{13}+\frac{8}{13}log_2\frac{8}{13})=0.961 Ent(Dt+)=−(135log2135+138log2138)=0.961

G a i n ( D , a ) = E n t D − ∑ ∣ D λ ∣ ∣ D ∣ E n t ( D λ ) = 0.998 − 13 17 0.961 = 0.264. \begin{aligned} Gain(D,a)&=Ent{D}-\sum_{}^{}\frac{|D^\lambda|}{|D|}Ent(D^\lambda)\\&=0.998-\frac{13}{17}0.961\\&=0.264. \end{aligned} Gain(D,a)=EntD−∑∣D∣∣Dλ∣Ent(Dλ)=0.998−17130.961=0.264.

对于每一个候选结点都经过上述计算后,得到对应的信息熵,选择信息熵最大的结点作为划分结点对决策树进行划分。

缺失值的处理方法

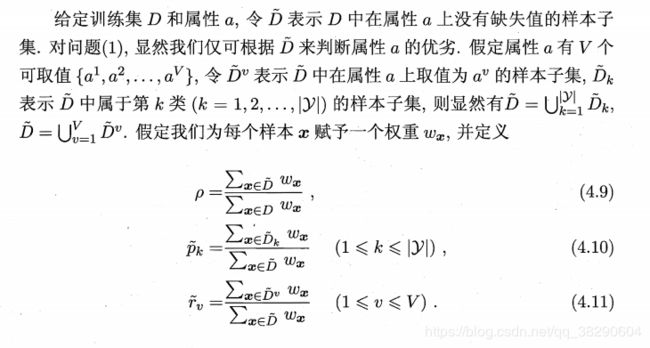

其中, ρ \rho ρ表示属性 D D D中无缺失值样本所占的比例; p ~ k \widetilde{p}_k p k表示无缺失值样本中第 k k k类所占的比例; r ~ v \widetilde{r}_v r v表示无缺失样本的属性 a a a上 a v a^v av所占的比例。

信息增益的计算

G a i n ( D , a ) = ρ × G a i n ( D ~ , a ) = ρ × ( E n t ( D ~ ) − ∑ v = 1 V r ~ v E n t ( D ~ v ) ) \begin{aligned} Gain(D,a)&=\rho \times Gain(\widetilde{D},a)\\&=\rho \times ( Ent(\widetilde{D})-\sum_{v=1}^{V}\widetilde{r}_vEnt(\widetilde{D}^v)) \end{aligned} Gain(D,a)=ρ×Gain(D ,a)=ρ×(Ent(D )−v=1∑Vr vEnt(D v))

其中

E n t ( D ~ ) = − ∑ k = 1 ∣ γ ∣ p ~ k l o g 2 p ~ k . Ent(\widetilde{D})=-\sum_{k=1}^{|\gamma|}\widetilde{p}_klog_2\widetilde{p}_k. Ent(D )=−k=1∑∣γ∣p klog2p k.

实例计算

还是以西瓜数据集为例

选择色泽这一属性进行信息熵的计算

无缺失值的结点有{2,3,4,6,7,8,9,10,11,12,14,15,16,17},因此 ρ = 14 17 \rho=\frac{14}{17} ρ=1714;

E n t ( D ~ ) = − ∑ k = 1 2 p ~ k l o g 2 p ~ k = − ( 6 14 l o g 2 6 14 + 8 14 l o g 2 8 14 ) = 0.985. \begin{aligned} Ent(\widetilde{D})&=-\sum_{k=1}^{2}\widetilde{p}_klog_2\widetilde{p}_k\\&=-(\frac{6}{14}log_2\frac{6}{14}+\frac{8}{14}log_2\frac{8}{14})\\&=0.985. \end{aligned} Ent(D )=−k=1∑2p klog2p k=−(146log2146+148log2148)=0.985.

将色泽青绿记做 D 1 ~ \widetilde{D^1} D1 ,色泽乌黑记做 D 2 ~ \widetilde{D^2} D2 ,色泽浅白记做 D 3 ~ \widetilde{D^3} D3 ;

则

E n t ( D 1 ~ ) = − ( 2 4 l o g 2 2 4 + 2 4 l o g 2 2 4 ) = 1.000 Ent(\widetilde{D^1})=-(\frac{2}{4}log_2\frac{2}{4}+\frac{2}{4}log_2\frac{2}{4})=1.000 Ent(D1 )=−(42log242+42log242)=1.000

E n t ( D 2 ~ ) = − ( 4 6 l o g 2 4 6 + 2 6 l o g 2 2 6 ) = 0.918 Ent(\widetilde{D^2})=-(\frac{4}{6}log_2\frac{4}{6}+\frac{2}{6}log_2\frac{2}{6})=0.918 Ent(D2 )=−(64log264+62log262)=0.918

E n t ( D 3 ~ ) = 0.000 Ent(\widetilde{D^3})=0.000 Ent(D3 )=0.000

计算信息增益

G a i n ( D ~ , 色 泽 ) = E n t ( D ~ ) − ∑ r ~ v E n t ( D ~ ) = 0.985 − ( 4 14 × 1.000 + 6 14 × 0.918 + 4 14 × 0.000 ) = 0.306. \begin{aligned} Gain(\widetilde{D},色泽)&=Ent(\widetilde{D})-\sum_{}^{}\widetilde{r}^vEnt(\widetilde{D})\\ &=0.985-(\frac{4}{14}\times1.000+\frac{6}{14}\times0.918+\frac{4}{14}\times0.000)\\ &=0.306. \end{aligned} Gain(D ,色泽)=Ent(D )−∑r vEnt(D )=0.985−(144×1.000+146×0.918+144×0.000)=0.306.

属性色泽的信息增益为

G a i n ( D , 色 泽 ) = ρ × G a i n ( D ~ , 色 泽 ) = 14 17 × 0.306 = 0.252. Gain(D,色泽)=\rho \times Gain(\widetilde{D},色泽)=\frac{14}{17}\times0.306=0.252. Gain(D,色泽)=ρ×Gain(D ,色泽)=1714×0.306=0.252.

其他属性的信息增益也可以使用同样的方法进行计算,最后选择信息增益最大的属性作为划分属性,进而对决策树进行划分。