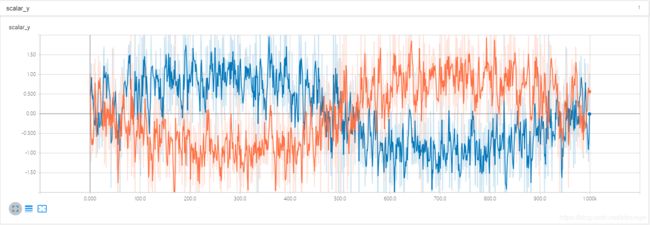

TensorBoard: plotting a variable from multiple runs over time in a single scalar chart

I. Environment

TensorFlow API r1.12

CUDA 9.2 V9.2.148

cudnn64_7.dll

Python 3.6.3

Windows 10, Ubuntu 16.04

II. Code examples

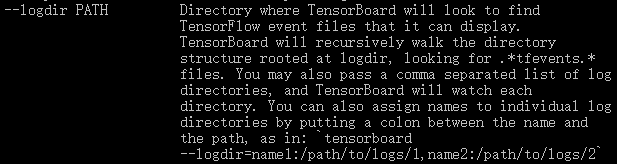

Run in the command line: tensorboard --help

It prints the following hint:

https://github.com/tensorflow/tensorboard

Method 1: tensorboard --logdir="./logdir/"

TensorBoard treats the given logdir as the root directory and recursively searches every subdirectory under it for .*tfevents.* log files.
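The recursive lookup described above can be pictured with a small standard-library sketch. It is not TensorBoard's actual implementation: the helper name `find_event_dirs` and the event file names are made up for illustration, and it assumes that every directory directly containing a file with `tfevents` in its name becomes one run, named by its path relative to the root logdir.

```python
import os
import tempfile


def find_event_dirs(logdir):
    """Map run name -> event files, for every directory under logdir
    that directly contains a file with 'tfevents' in its name.
    (Hypothetical helper, for illustration only.)"""
    runs = {}
    for dirpath, _dirnames, filenames in os.walk(logdir):
        events = sorted(f for f in filenames if "tfevents" in f)
        if events:
            # Assumed naming rule: path of the directory relative to the root.
            run_name = os.path.relpath(dirpath, logdir)
            runs[run_name] = events
    return runs


# Build a throwaway tree with two runs and made-up event file names.
root = tempfile.mkdtemp()
for sub in ("log_1", "log_2"):
    os.makedirs(os.path.join(root, sub))
    open(os.path.join(root, sub, "events.out.tfevents.0.host"), "w").close()

runs = find_event_dirs(root)
print(sorted(runs))
```

With this layout, a single root passed to --logdir is enough for both log_1 and log_2 to show up as separate runs in the same chart.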

Method 2: tensorboard --logdir=name1:"./logdir/log_1/",name2:"./logdir/log_2/"

You can also prefix each log directory with a name, joined to the directory by a colon; the entries are separated by commas.
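To make the name:path format concrete, here is a minimal sketch of how such a specification could be split. The function `parse_logdir_spec` is hypothetical and is not TensorBoard's parser; in particular, it ignores the Windows drive-letter case (for example `C:\logs`), where the first colon is part of the path rather than a name separator.

```python
def parse_logdir_spec(spec):
    """Split 'name1:path1,name2:path2' into a list of (name, path) pairs.
    Entries without a colon get the name None.
    (Hypothetical helper, for illustration only.)"""
    pairs = []
    for item in spec.split(","):
        item = item.strip()
        if not item:
            continue
        name, sep, path = item.partition(":")
        if sep:
            pairs.append((name, path))
        else:
            pairs.append((None, item))
    return pairs


pairs = parse_logdir_spec("name1:./logdir/log_1/,name2:./logdir/log_2/")
print(pairs)
```

Giving each directory its own name is what keeps the two runs distinguishable in the TensorBoard legend.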

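Method 3: tensorboard --logdir="./logdir/log_1/","./logdir/log_2/" (documented officially, but it did not work in practice)

A comma-separated list of log directories can also be passed; TensorBoard is then supposed to search each of the given directories for .*tfevents.* log files.

Although the official GitHub page says this form is supported, the command line keeps printing messages such as “W0109 17:53:48.568122 Reloader plugin_event_multiplexer.py:139] Conflict for name .: old path C:\Users\WJW\logs\plot_1, new path C:\Users\WJW\logs\plot_2”, and the TensorBoard page shows only the data under log_1. This is probably a design bug; for a detailed analysis see: https://github.com/tensorflow/tensorboard/issues/179

Also, since the tensorboard help text describes the argument as a comma-separated list of log directories, could it be given in list form instead? For example: tensorboard --logdir=["./logdir/log_1/", "./logdir/log_2/"]. In testing this does not work either: nothing is displayed at all. For now it is better to stick with Method 1 and Method 2, which achieve the same result.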
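The “Conflict for name .” warning quoted above is consistent with run names being derived from each directory's path relative to its own logdir root. That rule is an assumption here, not something confirmed from TensorBoard's source, and the helper `run_name` is hypothetical. Under that assumption, a short sketch shows why two unnamed roots collide while a shared parent root does not:

```python
import os


def run_name(event_dir, logdir_root):
    """Assumed naming rule: run name = event_dir relative to its logdir root.
    (Hypothetical helper, for illustration only.)"""
    return os.path.relpath(event_dir, logdir_root)


roots = ["./logdir/log_1", "./logdir/log_2"]

# Method 3: every directory is its own root, so both runs are named ".".
separate = [run_name(r, r) for r in roots]

# Method 1: one shared root, so the runs get distinct names.
shared = [run_name(r, "./logdir") for r in roots]

print(separate)
print(shared)
```

This also matches the observation that only one of the two directories is displayed: two runs cannot share the same name.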

III. Examples

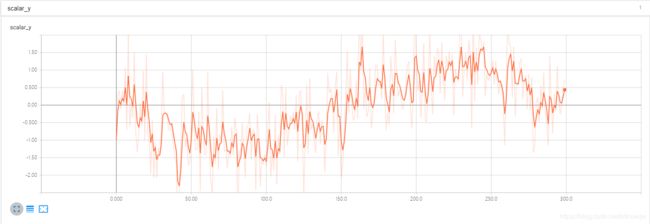

1. 2-D sin function (300 data points)

>>> import tensorflow as tf

>>> import math

>>> import numpy as np

>>> x = np.linspace(-math.pi,math.pi,300)[:,np.newaxis]

>>> x.shape

# (300, 1)

>>> noise = np.random.normal(loc=0.0, scale=0.9, size=x.shape)

>>> noise.shape

# (300, 1)

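>>> x_holder = tf.placeholder(dtype=tf.float32, shape=[None,1], name="x")

>>> x_holder

# float32 placeholder tensor, shape (?, 1)

>>> noise_holder = tf.placeholder(dtype=tf.float32, shape=[None,1], name="noise")

>>> noise_holder

# float32 placeholder tensor, shape (?, 1)

>>> y = tf.math.sin(x=x_holder) + noise_holder

>>> y

# float32 tensor, shape (?, 1)

>>> y = tf.reduce_mean(input_tensor=y)

>>> y

# float32 scalar tensor, shape ()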

>>> summary_writer = tf.summary.FileWriter("./summaries/logdir_2d_sin/")

>>> tf.summary.scalar(name="scalar_y", tensor=y)

>>> merged_summary = tf.summary.merge_all()

>>> init_op = tf.global_variables_initializer()

>>> sess = tf.InteractiveSession()

>>> sess.run(init_op)

>>> for i in range(300):

...     each_x = np.asarray(a=[x[i]], dtype=np.float32)

...     each_noise = np.asarray(a=[noise[i]], dtype=np.float32)

...     summary_result = sess.run(fetches=merged_summary, feed_dict={x_holder:each_x, noise_holder:each_noise})

...     summary_writer.add_summary(summary=summary_result, global_step=i)

...

>>> summary_writer.close()

>>> sess.close()
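Because each step feeds exactly one data point, the reduce_mean over that single element is the element itself, so the scalar recorded at step i is simply sin(x[i]) + noise[i]. The following TensorFlow-free sketch reproduces the (step, value) pairs that end up in the chart. The function name `noisy_sin_series` and the seed are illustrative assumptions, and the random numbers will differ from those produced by np.random.normal.

```python
import math
import random


def noisy_sin_series(n, scale, seed=0):
    """Return [(step, sin(x_step) + noise_step)] for n points evenly
    spaced on [-pi, pi], mirroring np.linspace(-pi, pi, n).
    (Hypothetical helper, for illustration only.)"""
    rng = random.Random(seed)
    xs = [-math.pi + 2.0 * math.pi * i / (n - 1) for i in range(n)]
    return [(i, math.sin(x) + rng.gauss(0.0, scale)) for i, x in enumerate(xs)]


series = noisy_sin_series(300, scale=0.9)  # what the scalar chart plots
clean = noisy_sin_series(300, scale=0.0)   # same curve without noise
print(len(series), series[0][0], series[-1][0])
```

With scale=0.9 the noise is of the same order as the amplitude of sin, which is why the resulting curve looks jagged unless TensorBoard's smoothing slider is raised.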

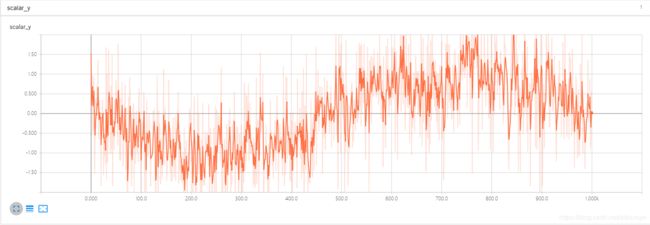

2. 1-D sin function (1000 data points)

>>> import tensorflow as tf

>>> import math

>>> import numpy as np

>>> x = np.linspace(-math.pi,math.pi,1000)

>>> x.shape

# (1000,)

>>> noise = np.random.normal(loc=0.0, scale=0.9, size=x.shape)

>>> noise.shape

# (1000,)

>>> x_holder = tf.placeholder(dtype=tf.float32, shape=[None], name="x")

>>> x_holder

# float32 placeholder tensor, shape (?,)

>>> noise_holder = tf.placeholder(dtype=tf.float32, shape=[None], name="noise")

>>> noise_holder

# float32 placeholder tensor, shape (?,)

>>> y = tf.math.sin(x=x_holder) + noise_holder

>>> y

# float32 tensor, shape (?,)

>>> y = tf.reduce_mean(input_tensor=y)

>>> y

# float32 scalar tensor, shape ()

>>> summary_writer = tf.summary.FileWriter("./summaries/logdir_1d_sin/")

>>> tf.summary.scalar(name="scalar_y", tensor=y)

# string scalar tensor holding the serialized summary

>>> merged_summary = tf.summary.merge_all()

>>> init_op = tf.global_variables_initializer()

>>> sess = tf.InteractiveSession()

>>> sess.run(init_op)

>>> for i in range(1000):

...     each_x = np.asarray(a=[x[i]], dtype=np.float32)

...     each_noise = np.asarray(a=[noise[i]], dtype=np.float32)

...     summary_result = sess.run(fetches=merged_summary, feed_dict={x_holder:each_x, noise_holder:each_noise})

...     summary_writer.add_summary(summary=summary_result, global_step=i)

...

>>> summary_writer.close()

>>> sess.close()

Run in the command line: tensorboard --logdir "./summaries/logdir_2d_sin/"

Open in the browser address bar: http://localhost:6006

Run in the command line: tensorboard --logdir "./summaries/logdir_1d_sin/"

Open in the browser address bar: http://localhost:6006

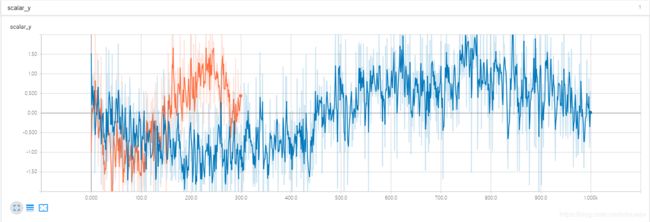

Run in the command line: tensorboard --logdir "./summaries/"

Open in the browser address bar: http://localhost:6006

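3. Creating several tf.summary.FileWriter instances and running them in a single session

(1) Problem: the first tf.summary.FileWriter creates no log file; it appears to be overridden by the second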

>>> import tensorflow as tf

>>> import math

>>> import numpy as np

>>> x = np.linspace(-math.pi,math.pi,1000)

>>> x.shape

# (1000,)

>>> noise = np.random.normal(loc=0.0, scale=0.9, size=x.shape)

>>> noise.shape

# (1000,)

>>> x_holder = tf.placeholder(dtype=tf.float32, shape=[None], name="x")

>>> x_holder

# float32 placeholder tensor, shape (?,)

>>> noise_holder = tf.placeholder(dtype=tf.float32, shape=[None], name="noise")

>>> noise_holder

# float32 placeholder tensor, shape (?,)

>>> y = tf.math.sin(x=x_holder) + noise_holder

>>> y

# float32 tensor, shape (?,)

>>> y = tf.reduce_mean(input_tensor=y)

>>> y

# float32 scalar tensor, shape ()

>>> summary_writer_1 = tf.summary.FileWriter("./summaries/logdir_1_sin/")

>>> summary_writer_2 = tf.summary.FileWriter("./summaries/logdir_2_sin/")

>>> tf.summary.scalar(name="scalar_y", tensor=y)

# string scalar tensor holding the serialized summary

>>> merged_summary = tf.summary.merge_all()

>>> init_op = tf.global_variables_initializer()

>>> sess = tf.InteractiveSession()

>>> sess.run(init_op)

>>> for i in range(1000):

...     each_x_1 = np.asarray(a=[x[i]], dtype=np.float32)

...     each_noise_1 = np.asarray(a=[noise[i]], dtype=np.float32)

...     summary_result_1 = sess.run(fetches=merged_summary, feed_dict={x_holder:each_x_1, noise_holder:each_noise_1})

...     summary_writer_1.add_summary(summary=summary_result_1, global_step=i)

...     each_x_2 = np.asarray(a=[x[999-i]], dtype=np.float32)

...     each_noise_2 = np.asarray(a=[noise[999-i]], dtype=np.float32)

...     summary_result_2 = sess.run(fetches=merged_summary, feed_dict={x_holder:each_x_2, noise_holder:each_noise_2})

...     summary_writer_2.add_summary(summary=summary_result_2, global_step=i)

...

>>> sess.close()

Solution: after summary_writer_1.add_summary(summary=summary_result_1, global_step=i), add summary_writer_1.flush() to force the log to be written to disk (and do the same for summary_writer_2), as follows:

>>> import tensorflow as tf

>>> import math

>>> import numpy as np

>>> x = np.linspace(-math.pi,math.pi,1000)

>>> x.shape

# (1000,)

>>> noise = np.random.normal(loc=0.0, scale=0.9, size=x.shape)

>>> noise.shape

# (1000,)

>>> x_holder = tf.placeholder(dtype=tf.float32, shape=[None], name="x")

>>> x_holder

# float32 placeholder tensor, shape (?,)

>>> noise_holder = tf.placeholder(dtype=tf.float32, shape=[None], name="noise")

>>> noise_holder

# float32 placeholder tensor, shape (?,)

>>> y = tf.math.sin(x=x_holder) + noise_holder

>>> y

# float32 tensor, shape (?,)

>>> y = tf.reduce_mean(input_tensor=y)

>>> y

# float32 scalar tensor, shape ()

>>> summary_writer_1 = tf.summary.FileWriter("./summaries/logdir_1_sin/")

>>> summary_writer_2 = tf.summary.FileWriter("./summaries/logdir_2_sin/")

>>> tf.summary.scalar(name="scalar_y", tensor=y)

# string scalar tensor holding the serialized summary

>>> merged_summary = tf.summary.merge_all()

>>> init_op = tf.global_variables_initializer()

>>> sess = tf.InteractiveSession()

>>> sess.run(init_op)

>>> for i in range(1000):

...     each_x_1 = np.asarray(a=[x[i]], dtype=np.float32)

...     each_noise_1 = np.asarray(a=[noise[i]], dtype=np.float32)

...     summary_result_1 = sess.run(fetches=merged_summary, feed_dict={x_holder:each_x_1, noise_holder:each_noise_1})

...     summary_writer_1.add_summary(summary=summary_result_1, global_step=i)

...     summary_writer_1.flush()

...     each_x_2 = np.asarray(a=[x[999-i]], dtype=np.float32)

...     each_noise_2 = np.asarray(a=[noise[999-i]], dtype=np.float32)

...     summary_result_2 = sess.run(fetches=merged_summary, feed_dict={x_holder:each_x_2, noise_holder:each_noise_2})

...     summary_writer_2.add_summary(summary=summary_result_2, global_step=i)

...     summary_writer_2.flush()

...

>>> summary_writer_1.close()

>>> summary_writer_2.close()

>>> sess.close()

Run in the command line: tensorboard --logdir summaries

Open in the browser address bar: http://localhost:6006
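The effect of flush() in the fix above can be understood by analogy with ordinary buffered file I/O. tf.summary.FileWriter queues events and writes them asynchronously (its flush_secs argument defaults to 120 seconds), so data may not be on disk yet when you look for it. The sketch below is only an analogy using a plain Python file, not FileWriter itself; the file name and record content are made up.

```python
import os
import tempfile

# A plain buffered file stands in for the event file (analogy only).
path = os.path.join(tempfile.mkdtemp(), "events.log")
f = open(path, "wb")              # buffered by default
f.write(b"step 0: y=0.1\n")       # small write stays in the in-memory buffer

size_before = os.path.getsize(path)   # nothing has reached the disk yet
f.flush()                             # push the buffer to the operating system
size_after = os.path.getsize(path)    # now the record is visible on disk
f.close()

print(size_before, size_after)
```

Calling flush() after every add_summary costs some throughput; closing each writer once after the loop, as the fixed example also does, flushes any remaining events as well.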