OpenCV Using Python: Monocular Vision 3D Reconstruction

Monocular Vision 3D Reconstruction

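1. Introduction to Monocular Vision 3D Reconstruction

Monocular 3D reconstruction uses the motion of a single camera to emulate stereo vision and recover the 3D information of objects in space. For a static scene, images taken by one camera at two different times from two different positions are equivalent to images taken by two cameras at the same time from those two positions. The problem therefore splits into two parts:

(1) How to replace stereo vision with monocular vision, i.e. how to determine the spatial transformation between the camera's poses at the two points in time;

(2) How to recover the 3D information of objects in the images using stereo vision.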

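2. Implementation

(1) Calibrate the camera to obtain the camera matrix K (the intrinsic matrix)

The intrinsic matrix describes the transformation from the normalized image plane to the physical imaging (pixel) plane. Calibrating a camera with OpenCV's library functions can run into a number of problems, mainly: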

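1) Camera resolution: I tried a phone camera, but photos from current phones have such a high resolution that the calibration board could not be found. A likely cause is that the corner detector works with a window of limited size. In a very high-resolution image a corner is spread over many pixels, so inside a small window the horizontal and vertical gray-level gradients are weak and the corner looks more like a gently curving arc. One workaround is to downsample the image first, detect the pattern, and then scale the detected corners back to their actual positions in the original image. A laptop webcam did not show this problem.

2) Pattern size: when searching for the calibration board, the size of the object-point array objpoint has to be set by hand. Here it is 9*6, the number of inner corners per row and per column of the board. If it is not set to match the board, the board may not be found.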
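The downsample-then-rescale workaround from item 1) can be sketched as follows. This is only an illustration under stated assumptions: `detect_on_downsampled` and `fake_detect` are hypothetical names, the detector is passed in as a callable that returns `(found, corners)` in the layout `cv2.findChessboardCorners` uses, and downsampling is done by plain decimation so that the coordinate mapping back to the original image is an exact multiplication.

```python
import numpy as np


def detect_on_downsampled(gray, detect, step=4):
    """Run `detect` on a decimated copy of `gray` and map the corners back.

    `detect` is any callable returning (found, corners), with corners shaped
    (N, 1, 2) in (x, y) order, the layout cv2.findChessboardCorners uses.
    """
    small = np.ascontiguousarray(gray[::step, ::step])  # keep every step-th pixel
    found, corners = detect(small)
    if not found:
        return False, None
    # Pixel (x, y) of the decimated image is pixel (step*x, step*y) of the original.
    return True, np.asarray(corners, dtype=np.float32) * step


# Stand-in detector for demonstration only (hypothetical): it reports a single
# "corner" at the brightest pixel of the image it is given.
def fake_detect(img):
    y, x = np.unravel_index(np.argmax(img), img.shape)
    return True, np.array([[[x, y]]], dtype=np.float32)


gray = np.zeros((480, 640), dtype=np.uint8)
gray[200, 400] = 255  # row 200, column 400 survives decimation by 4
found, corners = detect_on_downsampled(gray, fake_detect, step=4)
print(found, corners.ravel())
```

With OpenCV, `detect` could be something like `lambda img: cv2.findChessboardCorners(img, (9, 6), None)`, and the rescaled corners could then be refined on the full-resolution image with `cv2.cornerSubPix`. A smoothing resize would probably detect more reliably than plain decimation, but it would need its own matching coordinate mapping.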

The camera calibration code is as follows:

import glob

import cv2
import numpy as np

################################################################################
print('criteria and object points set')

# termination criteria: stop after 30 iterations or once the update is below 0.001
criteria = (cv2.TERM_CRITERIA_EPS + cv2.TERM_CRITERIA_MAX_ITER, 30, 0.001)

# prepare object points, like (0,0,0), (1,0,0), (2,0,0) ....,(8,5,0)
objpoint = np.zeros((9 * 6, 3), np.float32)
objpoint[:, :2] = np.mgrid[0:9, 0:6].T.reshape(-1, 2)

# arrays to store object points and image points from all the images
# 3d point in real world space
objpoints = []
# 2d points in image plane
imgpoints = []

################################################################################
print('Load Images')
images = glob.glob('images/Phone Camera/*.bmp')

for frame in images:
    img = cv2.imread(frame)
    imgGray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

    # find chess board corners
    ret, corners = cv2.findChessboardCorners(imgGray, (9, 6), None)
    # print ret to check if pattern size is set correctly
    print(ret)

    # if found, add object points, image points (after refining them)
    if ret:
        # add object points
        objpoints.append(objpoint)
        # refine the corners in place to sub-pixel accuracy
        cv2.cornerSubPix(imgGray, corners, (11, 11), (-1, -1), criteria)
        # add corners as image points
        imgpoints.append(corners)

        # draw corners
        cv2.drawChessboardCorners(img, (9, 6), corners, ret)
        cv2.imshow('Image', img)
        cv2.waitKey(0)

cv2.destroyAllWindows()

################################################################################
print('camera matrix')
# imgGray.shape[::-1] is the image size as (width, height)
ret, camMat, distortCoffs, rotVects, transVects = cv2.calibrateCamera(
    objpoints, imgpoints, imgGray.shape[::-1], None, None)

################################################################################
print('re-projection error')
meanError = 0
for i in range(len(objpoints)):
    # project the board points with the estimated pose and compare with the detections
    imgpoints2, _ = cv2.projectPoints(objpoints[i], rotVects[i], transVects[i], camMat, distortCoffs)
    error = cv2.norm(imgpoints[i], imgpoints2, cv2.NORM_L2) / len(imgpoints2)
    meanError += error

print("total error: ", meanError / len(objpoints))

################################################################################
def toPoint(p):
    # cv2.line expects a tuple of plain Python integers
    return tuple(int(v) for v in np.ravel(p))

def drawAxis(img, corners, imgpoints):
    corner = toPoint(corners[0])
    cv2.line(img, corner, toPoint(imgpoints[0]), (255, 0, 0), 5)
    cv2.line(img, corner, toPoint(imgpoints[1]), (0, 255, 0), 5)
    cv2.line(img, corner, toPoint(imgpoints[2]), (0, 0, 255), 5)
    return img

################################################################################
def drawCube(img, corners, imgpoints):
    imgpoints = np.int32(imgpoints).reshape(-1, 2)
    # draw ground floor in green color (a negative thickness fills the contour)
    cv2.drawContours(img, [imgpoints[:4]], -1, (0, 255, 0), -3)
    # draw pillars in blue color
    for i, j in zip(range(4), range(4, 8)):
        cv2.line(img, toPoint(imgpoints[i]), toPoint(imgpoints[j]), (255, 0, 0), 3)
    # draw top layer in red color
    cv2.drawContours(img, [imgpoints[4:]], -1, (0, 0, 255), 3)
    return img

################################################################################

print 'pose calculation'

axis = np.float32([[3,0,0], [0,3,0], [0,0,-3]]).reshape(-1,3)

axisCube = np.float32([[0,0,0], [0,3,0], [3,3,0], [3,0,0], [0,0,-3], [0,3,-3], [3,3,-3], [3,0,-3]])

for frame in glob.glob('images/Phone Camera/*.bmp'):

img = cv2.imread(frame)

gray = cv2.cvtColor(img,cv2.COLOR_BGR2GRAY)

ret, corners = cv2.findChessboardCorners(gray, (9,6), None)

if ret == True:

# find the rotation and translation vectors.

rotVects, transVects, inliers = cv2.solvePnPRansac(objpoint, corners, camMat, distortCoffs)

# project 3D points to image plane

'''

imgpoints, jac = cv2.projectPoints(axis, rotVecs, transVecs, camMat, distortCoffs)

img = drawAxis(img, corners, imgpoints)

'''

imgpoints, jac = cv2.projectPoints(axisCube, rotVects, transVects, camMat, distortCoffs)

img = drawCube(img, corners, imgpoints)

cv2.imshow('Image with Pose', img)

cv2.waitKey(0)

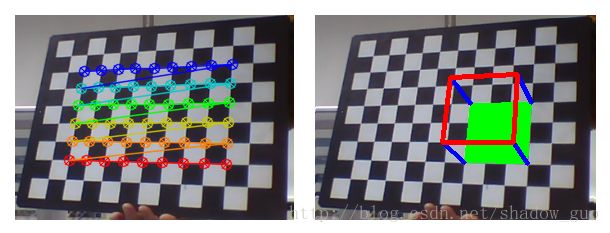

cv2.destroyAllWindows()标定结果如下。左图出现锯齿状折线说明找到模式,右图为多个坐标轴的组成的正方体的可视化。每个正方体的边长为3,测得黑格和白格的边长即可获得物体的实际长度,所以用在视觉测量方面很方便,即测量图像中物体的实际长度。
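As a small worked example of the conversion from board units to real lengths, the sketch below scales the object points by a measured square size. The value of 25 mm is an assumption made purely for illustration; the article does not state the actual square size.

```python
import numpy as np

# Assumption for illustration: each chessboard square measures 25 mm.
square_size_mm = 25.0

# Same layout as objpoint in the calibration code: (0,0,0), (1,0,0), ..., (8,5,0)
objpoint = np.zeros((9 * 6, 3), np.float32)
objpoint[:, :2] = np.mgrid[0:9, 0:6].T.reshape(-1, 2)

# Scaling the object points turns board units into millimetres.
objpoint_mm = objpoint * square_size_mm

# The cube drawn on the board is 3 squares long on each side.
cube_side_mm = 3 * square_size_mm
# Distance between the first and last inner corner along the long side.
board_width_mm = objpoint_mm[:, 0].max() - objpoint_mm[:, 0].min()
print(cube_side_mm, board_width_mm)
```

If the scaled object points are used for calibration and pose estimation, the translation vectors come out in millimetres as well.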

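(2) SIFT feature point matching

SIFT matching first computes the keypoints and feature descriptors of the two images, then discards the matches that do not meet the matching criterion. Finally, the fundamental matrix F is computed from the matches that pass. Given the coordinates (x, y) of a space point in one image, the fundamental matrix yields the epipolar line in the other image of the pair, on which the corresponding point (x', y') must lie. The implementation is as follows: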

################################################################################
# img1 and img2 are the two views of the scene (loading them is not shown here)
print('SIFT Keypoints and Descriptors')
# cv2.SIFT() in OpenCV 2.4; cv2.SIFT_create() in OpenCV 4.4 and later
sift = cv2.SIFT_create()
keypoint1, descriptor1 = sift.detectAndCompute(img1, None)
keypoint2, descriptor2 = sift.detectAndCompute(img2, None)

################################################################################
print('SIFT Points Match')
FLANN_INDEX_KDTREE = 0
index_params = dict(algorithm=FLANN_INDEX_KDTREE, trees=5)
search_params = dict(checks=50)
# flann = cv2.FlannBasedMatcher(index_params, search_params)
bf = cv2.BFMatcher()
matches = bf.knnMatch(descriptor1, descriptor2, k=2)

################################################################################
good = []
points1 = []
points2 = []

################################################################################
# ratio test: keep a match only if it is clearly better than the second-best one
for m, n in matches:
    if m.distance < 0.7 * n.distance:
        good.append(m)
        points1.append(keypoint1[m.queryIdx].pt)
        points2.append(keypoint2[m.trainIdx].pt)

points1 = np.float32(points1)
points2 = np.float32(points2)
F, mask = cv2.findFundamentalMat(points1, points2, cv2.RANSAC)

# We select only inlier points
points1 = points1[mask.ravel() == 1]
points2 = points2[mask.ravel() == 1]
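The filtering step in the loop above, which keeps a match only when its distance is below 0.7 times that of the second-best candidate, can be illustrated without OpenCV. The sketch below is a brute-force NumPy version on made-up two-dimensional descriptors; `ratio_test_matches` is a hypothetical helper, not part of OpenCV, and real SIFT descriptors have 128 dimensions.

```python
import numpy as np


def ratio_test_matches(desc1, desc2, ratio=0.7):
    """Return (query_idx, train_idx) pairs that pass the nearest/second-nearest ratio test."""
    # Pairwise Euclidean distances, shape (len(desc1), len(desc2))
    dists = np.linalg.norm(desc1[:, None, :] - desc2[None, :, :], axis=2)
    order = np.argsort(dists, axis=1)
    matches = []
    for i in range(len(desc1)):
        best, second = order[i, 0], order[i, 1]
        # Accept only if the best candidate is clearly closer than the runner-up.
        if dists[i, best] < ratio * dists[i, second]:
            matches.append((i, int(best)))
    return matches


# Toy descriptors (made up): row 0 has one clear match, row 1 is ambiguous
# because two candidates are equally close.
desc1 = np.array([[0.0, 0.0], [5.0, 5.0]])
desc2 = np.array([[0.1, 0.0], [4.0, 5.0], [6.0, 5.0]])
print(ratio_test_matches(desc1, desc2))
```

Only the first descriptor survives, because the second one has two equally good candidates and the test cannot tell them apart.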

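(3) With the intrinsic matrix known, compute the fundamental matrix F and the essential matrix E

The fundamental matrix applies to uncalibrated cameras. If a space point has the homogeneous pixel coordinates p = (u, v, 1) and p' = (u', v', 1) in the two images, then transpose(p') * F * p = 0, where * denotes matrix multiplication. From the definition F = inverse(transpose(K)) * E * inverse(K), the essential matrix follows as E = transpose(K) * F * K.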
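The relation between F and E can be checked numerically on synthetic data. The sketch below uses the K matrix from the calibration above, while the rotation, translation and 3D point are made-up values chosen only for illustration. It assumes the convention that a point X in the first camera frame has coordinates R * X + t in the second.

```python
import numpy as np


def skew(t):
    """Cross-product matrix, so that skew(t) @ v equals np.cross(t, v)."""
    return np.array([[0.0, -t[2], t[1]],
                     [t[2], 0.0, -t[0]],
                     [-t[1], t[0], 0.0]])


K = np.array([[517.67386649, 0.0, 268.65952163],
              [0.0, 519.75461699, 215.58959128],
              [0.0, 0.0, 1.0]])

# Made-up relative pose: 10 degrees about the y axis plus a translation.
a = np.deg2rad(10.0)
R = np.array([[np.cos(a), 0.0, np.sin(a)],
              [0.0, 1.0, 0.0],
              [-np.sin(a), 0.0, np.cos(a)]])
t = np.array([1.0, 0.0, 0.2])

E_true = skew(t) @ R
Kinv = np.linalg.inv(K)
F = Kinv.T @ E_true @ Kinv      # F = inverse(transpose(K)) * E * inverse(K)

# Project one made-up 3D point into both images (homogeneous pixel coordinates).
X = np.array([0.3, -0.2, 5.0])
p = K @ X
p = p / p[2]
p2 = K @ (R @ X + t)
p2 = p2 / p2[2]

residual = p2 @ F @ p           # epipolar constraint, should be ~0
E = K.T @ F @ K                 # matrix products, not element-wise '*'
line2 = F @ p                   # epipolar line (a, b, c) in image 2: a*x + b*y + c = 0
print(residual, np.allclose(E, E_true))
```

Note that `@` (or `np.dot`) is required here; on NumPy arrays the `*` operator multiplies element by element and would not give the essential matrix.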

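################################################################################
# camera matrix from calibration
K = np.array([[517.67386649, 0.0, 268.65952163], [0.0, 519.75461699, 215.58959128], [0.0, 0.0, 1.0]])

# essential matrix (np.dot is the matrix product; '*' on arrays is element-wise)
E = np.dot(np.dot(K.T, F), K)

(4) Build the pair of projection matrices P and P' from the rotation and translation components of the essential matrix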

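Decompose the essential matrix E with SVD to obtain the rotation matrix R and the translation vector t, then check that R is valid using the properties of a rotation matrix: it must be orthonormal with determinant +1. Only if R is valid are the projection matrices P and P1 (P' above) constructed.

Note: the validity check on the rotation matrix is very important. In practice, image noise can put the feature points at slightly wrong positions, and a real camera is not an affine camera, so the SVD may fail to yield a valid affine structure. I have not yet studied the underlying theory in depth, so the code has no parameters for correcting the affine structure. Consequently, if no valid rotation matrix is obtained, the reconstruction is meaningless.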
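The article does not list its validity check, so the following is only a sketch of what such a check and the SVD step might look like. `is_valid_rotation` and `decompose_essential` are hypothetical names, and the sign flip applied when the determinant is -1 is one common remedy rather than the author's method. The test data (rotation, translation) are made up.

```python
import numpy as np


def is_valid_rotation(R, tol=1e-6):
    """True if R is a 3x3 orthonormal matrix with determinant +1."""
    R = np.asarray(R, dtype=float)
    return bool(R.shape == (3, 3)
                and np.allclose(R @ R.T, np.eye(3), atol=tol)
                and abs(np.linalg.det(R) - 1.0) < tol)


def decompose_essential(E):
    """Return the two rotation candidates and the translation direction of E."""
    # np.linalg.svd returns V already transposed: E = U @ diag(S) @ Vt
    U, _, Vt = np.linalg.svd(E)
    W = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
    candidates = []
    for Wc in (W, W.T):
        R = U @ Wc @ Vt
        if np.linalg.det(R) < 0:   # a reflection; E is only defined up to sign
            R = -R
        candidates.append(R)
    # The translation is the last column of U, known only up to sign and scale.
    return candidates[0], candidates[1], U[:, 2]


# Synthetic check with a made-up pose: E = [t]x R
a = np.deg2rad(10.0)
R_true = np.array([[np.cos(a), 0.0, np.sin(a)],
                   [0.0, 1.0, 0.0],
                   [-np.sin(a), 0.0, np.cos(a)]])
t_true = np.array([1.0, 0.0, 0.2])
t_cross = np.array([[0.0, -t_true[2], t_true[1]],
                    [t_true[2], 0.0, -t_true[0]],
                    [-t_true[1], t_true[0], 0.0]])
R1, R2, t_dir = decompose_essential(t_cross @ R_true)
print(is_valid_rotation(R1), is_valid_rotation(R2))
print(is_valid_rotation(np.diag([1.0, 1.0, -1.0])))  # a reflection is rejected
```

The decomposition leaves four combinations (two rotations, two signs of t). A common way to pick one is to triangulate a point with each combination and keep the one that places it in front of both cameras.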

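# W is a 90-degree rotation about the z axis
W = np.array([[0., -1., 0.], [1., 0., 0.], [0., 0., 1.]])

# np.linalg.svd returns V already transposed, so E = U * diag(S) * Vt
U, S, Vt = np.linalg.svd(E)

# rotation matrix (matrix products; '*' on arrays is element-wise)
R = np.dot(np.dot(U, W), Vt)

# translation vector: last column of U, known only up to sign and scale
t = U[:, 2]

# checkValidRot is the author's helper (not listed here); it should reject R
# unless R is orthonormal with determinant +1
checkValidRot(R)

# P1 = [R | t] for the second view, P = [I | 0] for the first view
P1 = np.hstack([R, t.reshape(3, 1)])
P = np.hstack([np.eye(3), np.zeros((3, 1))])

(5) Triangulate the valid feature points to perform the reconstruction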

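Let (u, v) be the pixel coordinates of a space point in an image. Then p = (u, v, 1) is its homogeneous pixel coordinate, and n = inverse(K) * p = (x/z, y/z, 1) is its homogeneous coordinate on the normalized image plane. The code stores these normalized coordinates in the lists u and u1 for the two views. Given the normalized coordinates n and n' of the same point in the two views and the projection matrices P and P', build the linear system lamb * n = P * X and lamb' * n' = P' * X and solve it for X = (x, y, z). The three coordinates are saved in pointCloudX, pointCloudY and pointCloudZ; storing them separately makes 3D plotting easier. Finally, the 3D points are projected back onto the image plane and the re-projection error between the re-projected points and the original image points is computed.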
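The helper that solves this linear system is not listed in the article. The sketch below shows one way such a solver could be written, using `np.linalg.lstsq`; `triangulate_linear` is a hypothetical name and the pose and point in the check are made up. Eliminating the unknown scale from lamb * n = P * X gives two linear equations per view, `n[0] * P[2] - P[0]` and `n[1] * P[2] - P[1]`, each applied to (x, y, z, 1).

```python
import numpy as np


def triangulate_linear(n, P, n1, P1):
    """Least-squares X = (x, y, z) satisfying lamb*n = P X and lamb'*n1 = P1 X."""
    P = np.asarray(P, dtype=float)
    P1 = np.asarray(P1, dtype=float)
    # Each row acts on the homogeneous point (x, y, z, 1) and should give 0.
    M = np.array([
        n[0] * P[2] - P[0],
        n[1] * P[2] - P[1],
        n1[0] * P1[2] - P1[0],
        n1[1] * P1[2] - P1[1],
    ])
    A, b = M[:, :3], -M[:, 3]   # move the constant column to the right-hand side
    X, *_ = np.linalg.lstsq(A, b, rcond=None)
    return X


# Synthetic check with a made-up pose and point.
a = np.deg2rad(10.0)
R = np.array([[np.cos(a), 0.0, np.sin(a)],
              [0.0, 1.0, 0.0],
              [-np.sin(a), 0.0, np.cos(a)]])
t = np.array([1.0, 0.0, 0.2])
P = np.hstack([np.eye(3), np.zeros((3, 1))])   # first view:  [I | 0]
P1 = np.hstack([R, t.reshape(3, 1)])           # second view: [R | t]

X_true = np.array([0.3, -0.2, 5.0])
n = X_true / X_true[2]                         # normalized coordinates, view 1
x_cam2 = R @ X_true + t
n1 = x_cam2 / x_cam2[2]                        # normalized coordinates, view 2

X = triangulate_linear(n, P, n1, P1)
print(X)
```

With noise-free input the solver recovers the original point up to rounding. With real matches the four equations are inconsistent, and the least-squares solution is a compromise between the two viewing rays.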

################################################################################
print('points triangulation')
u = []
u1 = []
Kinv = np.linalg.inv(K)

# convert pixel coordinates to homogeneous normalized image coordinates
for idx in range(len(points1)):
    n = np.dot(Kinv, np.array([points1[idx][0], points1[idx][1], 1.]))
    n1 = np.dot(Kinv, np.array([points2[idx][0], points2[idx][1], 1.]))
    u.append(n)
    u1.append(n1)

################################################################################
# re-projection error
reprojError = 0

# point cloud (X,Y,Z)
pointCloudX = []
pointCloudY = []
pointCloudZ = []

for idx in range(len(points1)):
    # linearLSTriangulation is the author's helper (not listed here)
    X = linearLSTriangulation(u[idx], P, u1[idx], P1)
    pointCloudX.append(X[0])
    pointCloudY.append(X[1])
    pointCloudZ.append(X[2])

    # homogeneous 3D point
    temp = np.zeros(4, np.float32)
    temp[0] = X[0]
    temp[1] = X[1]
    temp[2] = X[2]
    temp[3] = 1.0
    print(temp)

    # calculate re-projection error: K * P1 projects into the second image,
    # so the result is compared with the matching point in points2
    reprojPoint = np.dot(np.dot(K, P1), temp)
    imgPoint = np.array([points2[idx][0], points2[idx][1], 1.])
    reprojError += np.hypot(reprojPoint[0] / reprojPoint[2] - imgPoint[0],
                            reprojPoint[1] / reprojPoint[2] - imgPoint[1])

print('Re-project Error:', reprojError / len(points1))

Conclusion

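Monocular 3D reconstruction still rests on two-view geometry. The only thing that changes is the relationship between the two "cameras", which are really one camera at two different points in time. In a stereo rig, two cameras fixed parallel to each other greatly simplify the transformation between them. In the monocular case, the estimated spatial transformation depends on the accuracy of the feature matching, and too few good matches directly degrade the recovered structure, so the result is highly uncertain. Overall, stereo 3D reconstruction is considerably more accurate than monocular reconstruction.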