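Relearning OpenCV 3: notes (Kalman filter and optical-flow tracking code still missing; to be added when time permits)

Note: the book "Learning OpenCV 3" suits readers who already have some image-processing background. It is not an introductory text: it gives neither background knowledge nor detailed derivations, and serves mainly as a usage manual for OpenCV 3.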

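1. Overview of the OpenCV 3 header files

(1) opencv2/opencv.hpp: pulls in every header you might need, but slows compilation; it is better to include individual headers;

(2) opencv2/core/core.hpp: C++-style data structures and arithmetic routines;

(3) opencv2/imgproc/imgproc.hpp: C++-style image-processing functions;

(4) opencv2/highgui/highgui.hpp: C++-style display, sliders, mouse handling, and input/output;

(5) opencv2/flann/miniflann.hpp: nearest-neighbor search and matching;

(6) opencv2/photo/photo.hpp: algorithms for manipulating and restoring photographs;

(7) opencv2/video/video.hpp: visual tracking and background segmentation;

(8) opencv2/features2d/features2d.hpp: two-dimensional features used for tracking;

(9) opencv2/objdetect/objdetect.hpp: cascade face detector, SVM classifier, HoG features, and planar patch detector;

(10) opencv2/calib3d/calib3d.hpp: calibration and stereo vision;

(11) opencv2/ml/ml.hpp: machine learning, clustering, and pattern recognition;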

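2. Basic OpenCV data types

Make good use of the C++ auto specifier. When you are unsure what type results from combining two different data types, or whether they can be combined at all, declare the result as auto. If the code compiles and the value prints, the operation is allowed; then check the deduced type and declare it explicitly from then on.

(1) Point class: up to 3 elements;

(2) Scalar class: up to 4 elements, a four-dimensional point class;

(3) Size class: 2 elements;

(4) Rect class: top-left corner of a rectangle plus width and height;

(5) RotatedRect class: rectangle center, Size, and angle;

(6) Matx class: small matrices, up to 6x6;

(7) Vec class: small vectors, up to 9 elements;

(8) Complex class: complex numbers, 2 elements;

(9) Range class: a contiguous sequence of integers.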

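3. OpenCV helper types

(1) TermCriteria class: defines the termination condition of iterative algorithms;

(2) Ptr template: smart pointer;

(3) Exception class: exception handling;

(4) DataType<> template;

(5) InputArray and OutputArray classes: arrays passed into and returned from functions.

4. Large OpenCV data types

(1) Dense matrix, the Mat class: rows are stored one after another in a single contiguous block;

(2) Sparse matrix, the SparseMat class: often used to store image histograms.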

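5. Special operations on Mat matrices

(1) cv::Mat(const Mat &mat, const cv::Rect &roi): builds a matrix covering only the region of interest (it refers to the original data; call clone() for an independent copy);

(2) cv::Mat(const Mat &mat, const cv::Range &rows, const cv::Range &cols): builds a matrix covering only the given rows and columns;

(3) mat1 > mat2: element-wise comparison of mat1 and mat2; positions where the expression holds are set to 255, all others to 0;

(4) mat1.inv(): matrix inverse;

(5) mat1.mul(mat2): element-wise multiplication;

(6) min(mat1, mat2): element-wise minimum, written to the matching position of a new matrix;

(7) mat1.copyTo(mat2, mask): copies only the masked region of mat1 into mat2;

(8) addWeighted(): blends two arrays with different weights (transparency);

(9) countNonZero(): counts the non-zero pixels;

(10) cvtColor(): converts between color spaces;

(11) merge(): takes a sequence of single-channel arrays and outputs one multi-channel image;

(12) minMaxIdx(): for single-channel arrays of any dimension; returns the minimum and maximum values and their indices;

(13) minMaxLoc(): for single-channel two-dimensional arrays; returns the minimum and maximum values and their locations as points;

(14) randu(): fills a matrix with uniformly distributed random values;

(15) randn(): fills a matrix with normally distributed random values;

(16) repeat(): tiles copies of src into dst; the two need not be the same size;

(17) solve(): solves a system of linear equations;

(18) sort(): sorts the elements of each row or each column and returns the sorted array;

(19) sortIdx(): sorts the elements of each row or each column and returns the sorted indices, which refer to positions in the original array;

(20) gemm(): generalized matrix multiplication, faster than a naive matrix product.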

A naive matrix multiplication proceeds as follows:

for (int m = 0; m < M; m++) {
    for (int n = 0; n < N; n++) {
        C[m][n] = 0;
        for (int k = 0; k < K; k++) {
            C[m][n] += A[m][k] * B[k][n];
        }
    }
}

The basic GEMM optimization is to partition the output into 4×4 sub-blocks so that input data is reused more often. Further gains come from heavy use of registers to reduce memory accesses, vectorized loads and arithmetic, eliminating pointer arithmetic, and reorganizing memory so that addresses are contiguous.

The blocked computation proceeds as follows (pseudocode; 0..3 denotes a range of four indices):

for (int m = 0; m < M; m += 4) {
    for (int n = 0; n < N; n += 4) {
        C[m + 0..3][n + 0..3] = 0;
        for (int k = 0; k < K; k += 4) {
            C[m + 0..3][n + 0..3] += A[m + 0..3][k + 0] * B[k + 0][n + 0..3];
            C[m + 0..3][n + 0..3] += A[m + 0..3][k + 1] * B[k + 1][n + 0..3];
            C[m + 0..3][n + 0..3] += A[m + 0..3][k + 2] * B[k + 2][n + 0..3];
            C[m + 0..3][n + 0..3] += A[m + 0..3][k + 3] * B[k + 3][n + 0..3];
        }
    }
}
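The blocking idea can be tried out without OpenCV. The following is a minimal sketch in plain C++, not OpenCV's gemm() implementation: the names Matrix, matmul_naive, matmul_blocked and the TILE constant are chosen here for illustration, and the code shows only the loop tiling, none of the register or vectorization tricks.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

using Matrix = std::vector<std::vector<double>>;

// Reference triple loop: C = A * B, where A is M x K and B is K x N.
Matrix matmul_naive(const Matrix& A, const Matrix& B) {
    assert(!A.empty() && !B.empty() && A[0].size() == B.size());
    const std::size_t M = A.size(), K = B.size(), N = B[0].size();
    Matrix C(M, std::vector<double>(N, 0.0));
    for (std::size_t m = 0; m < M; ++m)
        for (std::size_t n = 0; n < N; ++n)
            for (std::size_t k = 0; k < K; ++k)
                C[m][n] += A[m][k] * B[k][n];
    return C;
}

// Tiled variant: the output is processed in TILE x TILE sub-blocks so that
// loaded elements of A and B are reused before moving to the next block.
Matrix matmul_blocked(const Matrix& A, const Matrix& B) {
    assert(!A.empty() && !B.empty() && A[0].size() == B.size());
    constexpr std::size_t TILE = 4;  // illustrative block size
    const std::size_t M = A.size(), K = B.size(), N = B[0].size();
    Matrix C(M, std::vector<double>(N, 0.0));
    for (std::size_t m0 = 0; m0 < M; m0 += TILE)
        for (std::size_t n0 = 0; n0 < N; n0 += TILE)
            for (std::size_t k0 = 0; k0 < K; k0 += TILE)
                for (std::size_t m = m0; m < std::min(m0 + TILE, M); ++m)
                    for (std::size_t k = k0; k < std::min(k0 + TILE, K); ++k) {
                        const double a = A[m][k];  // reused across the inner n loop
                        for (std::size_t n = n0; n < std::min(n0 + TILE, N); ++n)
                            C[m][n] += a * B[k][n];
                    }
    return C;
}
```

Both functions add the products for a given output element in the same k order, so they return identical results; only the memory access pattern differs.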

6. Five ways to access Mat elements

(1) mat.at

......

cv::Vec3b intensity = img.at<cv::Vec3b>(y, x); // at<> takes (row, col), i.e. (y, x)
uchar blue = intensity[0];
uchar green = intensity[1];
uchar red = intensity[2];
cout << "x, y BGR: " << (unsigned int)blue << " " << (unsigned int)green << " " << (unsigned int)red << endl;
intensity = cv::Vec3b(255, 255, 255);
img.at<cv::Vec3b>(y, x) = intensity;
img.at<uchar>(y, x) = 255; // single-channel (CV_8UC1) images only

......

(2) Row pointers with ptr

void use_test10()
{
    cv::Mat img(cv::Size(3,3), CV_8UC1);
    cv::randu(img, 0, 10);
    cout << img << endl;
    uchar * data10 = img.ptr<uchar>(0); // pointer to the first element of row 0
    uchar * data20 = img.ptr<uchar>(1); // pointer to the first element of row 1
    uchar data13 = img.ptr<uchar>(0)[2]; // value of the third element of row 0
    cout << (int)(data10[0]) << endl;
    cout << (int)(data20[1]) << endl;
    cout << (int)data13 << endl;
}

(3) Block access

void use_test12()

{

cv::Mat img(cv::Size(3,3), CV_8UC1);

cv::randu(img, 1, 9);

cout << img << endl;

cv::Mat img1 = img(cv::Range(1,2), cv::Range(1,2));

cout << img1 << endl;

}

(4) The MatConstIterator iterator

void use_test11()
{
    cv::Mat img(cv::Size(3,3), CV_8UC1);
    cv::randu(img, 0, 10);
    cout << img << endl;
    int max_num = 0;
    cv::MatConstIterator_<uchar> it = img.begin<uchar>();
    while (it != img.end<uchar>())
    {
        cout << (int)(*it) << ", ";
        if ((int)(*it) > max_num)
            max_num = (int)(*it);
        it++;
    }
    cout << endl;
    cout << max_num << endl;
}

(5) The NAryMatIterator iterator

void use_test9()
{
    const int sz[] = {5, 5, 5}; // sizes of the three dimensions
    cv::Mat m1(3, sz, CV_32FC3);
    cv::Mat m2(3, sz, CV_32FC3);

cv::RNG rng;

rng.fill(m1, cv::RNG::UNIFORM, 0.f, 1.f);

rng.fill(m2, cv::RNG::UNIFORM, 0.f, 1.f);

const cv::Mat* arrays[] = {&m1, &m2, 0};

cv::Mat m3[2];

cv::NAryMatIterator it(arrays, m3);

float s = 0.f;

int n = 0;

for(int p = 0; p < it.nplanes; p++, ++it)

{

s += cv::sum(it.planes[0])[0];

s += cv::sum(it.planes[1])[0];

n++;

}

cout << s << endl;

}

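7. Common color spaces

(1) RGB: the most common hardware-oriented color model. It is closely tied to the human visual system: given the structure of the eye, every color can be treated as a mix of three primaries, red (R), green (G), and blue (B), in different proportions;

(2) HSV: a perception-based color model. It splits a color signal into three attributes: hue (H), saturation (S), and value (V). Hue is the wavelength of the light reflected from or transmitted through an object, such as red, yellow, or blue; value is how light or dark the color is; saturation is the depth of the color, such as deep red versus light red.

(3) YCbCr: commonly used for video and digital images. Y is luma; Cb and Cr are the blue-difference and red-difference chroma components. Data in this model may be double precision, but it is stored as 8-bit unsigned integers. Y ranges over 16~235 and the two chroma components over 16~240.

(4) Lab: any color found in nature can be expressed in Lab space, whose gamut is larger than that of RGB. It is device-independent and based on physiological characteristics; in other words, it describes human visual response numerically, which makes up for the dependence of the RGB and CMYK models on device color characteristics.

(5) YUV: in color television, the Y, C1, C2 representation carries one luminance signal and two color-difference signals; the meaning of C1 and C2 depends on the application.

(6) CMY/CMYK: the color space used in industrial printing. RGB models emitted light, whereas CMY is based on reflected light.

8. Color spaces in OpenCV

(1) BGR: note the channel order; 8-bit integer images take values in 0-255, floating-point images in 0.0-1.0;

(2) Lab: L is lightness, channel a runs from green to magenta, and channel b runs from blue to yellow;

(3) YCrCb: channel Y is the luma obtained from RGB after gamma correction; Cr = R - Y (how far the red component is from luma) and Cb = B - Y (how far the blue component is from luma);

(4) HSV.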

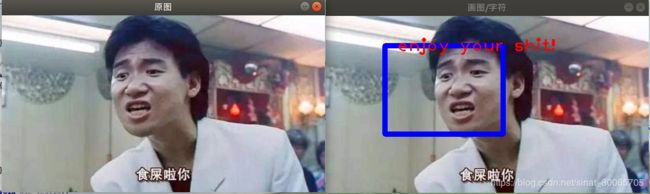
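As a concrete instance of the YCrCb definition (Cr derived from R - Y, Cb from B - Y), here is a small per-pixel sketch in plain C++. It assumes the coefficients given in the OpenCV color-conversion documentation for 8-bit images (luma weights 0.299/0.587/0.114, chroma scales 0.713 and 0.564, offset 128); the function names are made up for this example, and cvtColor() remains the thing to use on real images.

```cpp
#include <algorithm>
#include <array>
#include <cmath>
#include <cstdint>

// Round to nearest and clamp into the 8-bit range.
static std::uint8_t to_u8(double v) {
    return static_cast<std::uint8_t>(std::min(255.0, std::max(0.0, std::round(v))));
}

// Convert one 8-bit BGR pixel to {Y, Cr, Cb}.
std::array<std::uint8_t, 3> bgr_to_ycrcb(std::uint8_t b, std::uint8_t g, std::uint8_t r) {
    const double delta = 128.0;  // chroma offset for 8-bit images (assumed)
    const double y = 0.299 * r + 0.587 * g + 0.114 * b;
    const double cr = (r - y) * 0.713 + delta;  // red-difference chroma
    const double cb = (b - y) * 0.564 + delta;  // blue-difference chroma
    return {to_u8(y), to_u8(cr), to_u8(cb)};
}
```

A neutral gray pixel has R = G = B = Y, so both chroma channels sit at the offset 128; a pure red pixel pushes Cr above 128 and Cb below it.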

9. Drawing and text display in OpenCV

// For garbled Chinese text in OpenCV, see the blog post https://blog.csdn.net/djstavaV/article/details/86884279?utm_medium=distribute.pc_relevant.none-task-blog-baidujs-1

void use_test15()
{
    cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg");
    cv::imshow("原图", img);
    cv::rectangle(img, cv::Point2i(100, 50), cv::Point2i(300, 200), cv::Scalar(255, 0, 0), 8); // draw a rectangle
    cv::putText(img, "enjoy your shit!", cv::Point2i(120, 60), cv::FONT_HERSHEY_PLAIN, 2.0, cv::Scalar(0,0,255), 2, 8); // draw an English string on the image
    cv::imshow("画图/字符", img);
    cv::waitKey();
}

10. The RNG random number generator

void use_test()
{
    cv::RNG rng; // default seed 0xffffffff; the object converts implicitly to a random number
    int num1 = rng;
    cout << " num1: " << num1 << endl;
    double num2 = rng.uniform(0.0, 1.1); // uniformly distributed random number
    cout << "num2: " << num2 << endl;
    double num3 = rng.gaussian(2); // Gaussian random number with mean 0 and standard deviation 2
    cout << "num3: " << num3 << endl;
}

Output:

num1: 130063606

num2: 0.769185

num3: -0.857192

11. Principal component analysis in OpenCV

void use_test()
{
    cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", 0); // read as a single-channel image
    cv::imshow("原始图像",img);
    cout << img.rows << ", " << img.cols << endl;
    cv::PCA pca(img, cv::Mat(), CV_PCA_DATA_AS_ROW, 30);
    cout << pca.eigenvectors.cols << ", " << pca.eigenvectors.rows << endl; // eigenvectors, stored one per row
    cv::Mat pca_mat = pca.project(img); // project the image into principal-component space

    cout << pca_mat.rows << ", " << pca_mat.cols << endl;
    cv::waitKey(0);
}

12. Reading and saving video and camera streams

void use_test7()
{
    cv::VideoCapture cap;
    cap.open(0);
    double fps = cap.get(cv::CAP_PROP_FPS);
    cv::Size size((int)cap.get(cv::CAP_PROP_FRAME_WIDTH), (int)cap.get(cv::CAP_PROP_FRAME_HEIGHT));
    cv::VideoWriter writer;
    writer.open("test.avi", CV_FOURCC('M','J','P','G'), fps, size);
    cv::Mat frame;
    for(;;)
    {
        cap >> frame;
        if (frame.empty()) // stop when no frame could be grabbed
            break;
        cv::imshow("E2", frame);
        writer << frame;
        char input = cv::waitKey(1);
        if(input == 'q')
            break;
    }
    cap.release();
}

Note: OpenCV can also save variables to file, and read saved variables back, with the FileStorage class.

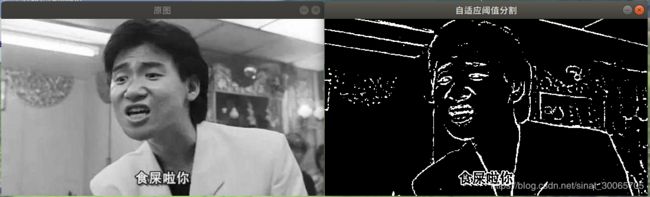

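13. Filtering and thresholding

(1) Filtering: put simply, convolution with a sliding window. OpenCV pads the image with virtual border pixels to handle convolution at the edges; filter2D() applies a custom kernel;

(2) Thresholding: can be viewed as filtering with a 1x1 kernel. Note the adaptive method adaptiveThreshold().

void use_test()

{

cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", 0); // read as a single-channel image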

cv::imshow("原图", img);

cv::adaptiveThreshold(img, img, 255, cv::ADAPTIVE_THRESH_GAUSSIAN_C, cv::THRESH_BINARY_INV, 11, 10.0);

cv::imshow("自适应阈值分割", img);

cv::waitKey(0);

}

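14. Image smoothing (blurring)

(1) Simple box blur: the mean over the kernel window;

(2) Median filter: the median over the kernel window;

(3) Gaussian filter: weights the window with a Gaussian kernel;

(4) Bilateral filter: combines Gaussian smoothing with a color-similarity weight, which gives a watercolor-like effect.

void use_test()

{

cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", 0); // read as a single-channel image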

cv::Mat img1;

cv::imshow("原图", img);

cv::blur(img, img1, cv::Size(11,11));

cv::imshow("简单方框模糊", img1);

cv::medianBlur(img, img1, 11);

cv::imshow("中值模糊", img1);

cv::GaussianBlur(img, img1, cv::Size(11,11), 1, 0.5);

cv::imshow("高斯模糊", img1);

cv::bilateralFilter(img, img1, 11, 1, 1);

cv::imshow("双边模糊", img1);

cv::waitKey(0);

}

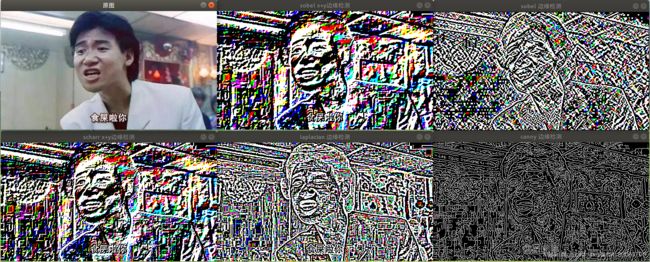
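The difference between the box blur and the median filter is easiest to see on a one-dimensional signal with a single outlier. The sketch below is plain C++ with illustrative names (box_filter_1d, median_filter_1d) and replicated borders; it is not how cv::blur or cv::medianBlur are implemented.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Clamp an index into [0, n-1]; this replicates the border sample.
static int clamp_index(int i, int n) { return std::max(0, std::min(i, n - 1)); }

// Mean over a window of 2*radius+1 samples (integer division).
std::vector<int> box_filter_1d(const std::vector<int>& s, int radius) {
    assert(radius >= 0 && !s.empty());
    const int n = static_cast<int>(s.size());
    std::vector<int> out(n);
    for (int i = 0; i < n; ++i) {
        int sum = 0;
        for (int d = -radius; d <= radius; ++d) sum += s[clamp_index(i + d, n)];
        out[i] = sum / (2 * radius + 1);
    }
    return out;
}

// Median over the same window.
std::vector<int> median_filter_1d(const std::vector<int>& s, int radius) {
    assert(radius >= 0 && !s.empty());
    const int n = static_cast<int>(s.size());
    std::vector<int> out(n), window;
    for (int i = 0; i < n; ++i) {
        window.clear();
        for (int d = -radius; d <= radius; ++d) window.push_back(s[clamp_index(i + d, n)]);
        std::nth_element(window.begin(), window.begin() + radius, window.end());
        out[i] = window[radius];  // middle element of the sorted window
    }
    return out;
}
```

On the signal {10, 10, 255, 10, 10} with radius 1, the median filter removes the spike completely, while the box filter only spreads it into its neighbors.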

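15. Edge detection

(1) Sobel operator: not very accurate when the kernel is small;

(2) Scharr filter: as fast as Sobel but more accurate;

(3) Laplacian: the second derivative of the image;

(4) Canny edge detection: non-maximum suppression along the gradient direction, followed by hysteresis thresholding with two thresholds.

void use_test()

{

cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", -1); // load the image unchanged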

cv::Mat img1, imgx, imgy;

cv::imshow("原图", img);

cv::Sobel(img, imgx, CV_32F, 1,0,5);

cv::Sobel(img, imgy, CV_32F, 0,1,5);

cv::addWeighted(imgx,0.5,imgy,0.5,0,img1);

cv::imshow("sobel x+y边缘检测", img1);

cv::Sobel(img, img1, CV_32F, 1,1,5);

cv::imshow("sobel 边缘检测", img1);

cv::Scharr(img, imgx, CV_32F,1,0);

cv::Scharr(img, imgy, CV_32F,0,1);

cv::addWeighted(imgx,0.5,imgy,0.5,0,img1);

cv::imshow("scharr x+y边缘检测", img1);

cv::Laplacian(img, img1,CV_32F,5);

cv::imshow("laplacian 边缘检测", img1);

cv::Canny(img, img1,100,100,5);

cv::imshow("canny 边缘检测", img1);

cv::waitKey(0);

}
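To make the Sobel operator concrete, the following plain C++ sketch applies the standard 3x3 Sobel kernels at a single interior pixel. The Image alias and the function names are assumptions made for this example; cv::Sobel additionally handles borders, larger kernels, and output depth.

```cpp
#include <cassert>
#include <vector>

using Image = std::vector<std::vector<int>>;

// Horizontal derivative at an interior pixel; responds to vertical edges.
int sobel_x(const Image& img, int y, int x) {
    assert(y >= 1 && x >= 1 && y + 1 < (int)img.size() && x + 1 < (int)img[y].size());
    return (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1])
         - (img[y - 1][x - 1] + 2 * img[y][x - 1] + img[y + 1][x - 1]);
}

// Vertical derivative at an interior pixel; responds to horizontal edges.
int sobel_y(const Image& img, int y, int x) {
    assert(y >= 1 && x >= 1 && y + 1 < (int)img.size() && x + 1 < (int)img[y].size());
    return (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1])
         - (img[y - 1][x - 1] + 2 * img[y - 1][x] + img[y - 1][x + 1]);
}
```

On an image whose left half is 0 and right half is 255, sobel_x is large next to the step while sobel_y is zero, which is why the x and y responses are usually combined.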

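16. Dilation and erosion, opening and closing, top hat and black hat, morphological gradient

(1) Dilation: takes the local maximum under the kernel, i.e. grows bright regions;

(2) Erosion: takes the local minimum under the kernel, i.e. shrinks bright regions;

(3) Opening: erosion followed by dilation; separates bright regions;

(4) Closing: dilation followed by erosion; connects bright regions;

(5) Gradient: dilation minus erosion; extracts edges;

(6) Top hat: original image minus its opening; highlights the edges of bright regions;

(7) Black hat: closing minus the original image; highlights the edges of dark regions.

void use_test()

{

cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", 0); // read as a single-channel image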

cv::Mat img1, imgp, imgf, imgk, imgb;

cv::Mat element = getStructuringElement(cv::MORPH_RECT, cv::Size(7, 7));

cv::imshow("原图", img);

cv::dilate(img, imgp, element);

cv::imshow("原图膨胀", imgp);

cv::erode(img, imgf, element);

cv::imshow("原图腐蚀", imgf);

cv::morphologyEx(img, imgk,cv::MORPH_OPEN,element);

cv::imshow("原图开运算", imgk);

cv::morphologyEx(img, imgb,cv::MORPH_CLOSE,element);

cv::imshow("原图闭运算", imgb);

img1 = imgp - imgf;

cv::imshow("膨胀-腐蚀 提取梯度", img1);

cv::morphologyEx(img, img1,cv::MORPH_TOPHAT,element);

cv::imshow("原图-开运算 顶帽", img1);

cv::morphologyEx(img, img1,cv::MORPH_BLACKHAT,element);

cv::imshow("原图-闭运算 黑帽", img1);

cv::waitKey(0);

}
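The morphological definitions reduce to sliding-window minima and maxima, which the following plain C++ sketch shows on a one-dimensional signal. All names here (Signal, window_extreme, open_op, close_op, subtract) are illustrative, and the flat window with replicated borders is a simplifying assumption rather than OpenCV's implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

using Signal = std::vector<int>;

// Sliding-window maximum (dilate) or minimum (erode) with replicated borders.
static Signal window_extreme(const Signal& s, int radius, bool take_max) {
    assert(radius >= 0 && !s.empty());
    const int n = static_cast<int>(s.size());
    Signal out(n);
    for (int i = 0; i < n; ++i) {
        int best = s[i];
        for (int d = -radius; d <= radius; ++d) {
            const int v = s[std::max(0, std::min(i + d, n - 1))];
            best = take_max ? std::max(best, v) : std::min(best, v);
        }
        out[i] = best;
    }
    return out;
}

Signal dilate(const Signal& s, int r) { return window_extreme(s, r, true); }
Signal erode(const Signal& s, int r) { return window_extreme(s, r, false); }
Signal open_op(const Signal& s, int r) { return dilate(erode(s, r), r); }   // erode, then dilate
Signal close_op(const Signal& s, int r) { return erode(dilate(s, r), r); }  // dilate, then erode

// Element-wise a - b, used for gradient, top hat and black hat.
Signal subtract(const Signal& a, const Signal& b) {
    assert(a.size() == b.size());
    Signal out(a.size());
    for (std::size_t i = 0; i < a.size(); ++i) out[i] = a[i] - b[i];
    return out;
}
```

With radius 1, opening the signal {0,0,9,0,0,5,5,5,0,0} removes the isolated spike but keeps the three-sample plateau, so the top hat (signal minus opening) contains only the spike.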

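17. Image pyramids and building them in OpenCV

(1) Image pyramid: one form of multi-scale image representation, used mainly for image segmentation; it is an effective yet conceptually simple structure for interpreting an image at multiple resolutions. Image pyramids were first used in machine vision and image compression. The pyramid of an image is a collection of images, all derived from the same original, arranged in a pyramid shape with progressively lower resolution. It is built by repeated downsampling until some stopping condition is met. The bottom of the pyramid is a high-resolution representation of the image to be processed, and the top is a low-resolution approximation. Thinking of the layers as a pyramid, the higher the level, the smaller the image and the lower the resolution.

(2) Gaussian pyramid: used for downsampling;

(3) Laplacian pyramid: used to rebuild the higher-resolution image from a lower-resolution level of the pyramid. In digital image processing terms it stores the prediction residual, so the image can be restored as faithfully as possible; it is used together with the Gaussian pyramid.

void use_test()
{
    cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", -1); // load the image unchanged
    cv::imshow("原图", img);
    cv::Mat imgdown, imgup;
    cv::pyrDown(img, imgdown);
    cv::imshow("原图下采样2倍", imgdown);
    cv::pyrUp(imgdown,imgup);
    cv::imshow("用下采样2倍图像上采样成原图像", imgup);
    std::vector<cv::Mat> imgt;
    cv::buildPyramid(img, imgt, 5);
    cout << imgt.size() << endl;
    cv::imshow("图像金字塔第3层图像", imgt[2]);
    cv::waitKey(0);
}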

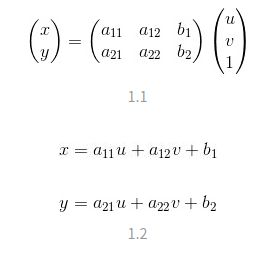

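18. Affine and perspective transforms

(1) Affine transform: a planar, two-dimensional coordinate transform. It covers rotation (linear), translation (vector addition), scaling (linear), shear, reflection, and similar deformations, and in every case parallel lines in the image stay parallel. Any affine transform can be written as multiplication by a matrix (the linear part) plus a vector (the translation).

(2) Perspective transform: a spatial, three-dimensional coordinate transform. It projects the picture onto a new viewing plane and is also called projective mapping. It maps from 2-D (x,y) to 3-D (X,Y,Z) and then to another 2-D space (x',y').

Compared with the affine transform it is more flexible: it maps one quadrilateral onto another (not necessarily a parallelogram). It is more than a linear transform, yet it is still carried out by matrix multiplication with a 3x3 matrix. The first two rows of that matrix are the same as the affine matrix (a11,a12,a13,a21,a22,a23) and likewise provide the linear part and the translation; the third row provides the perspective effect. The affine transform can be seen as a special case of the perspective transform.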
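The "matrix times vector plus translation" form of an affine transform can be written out directly. The plain C++ sketch below builds a 2x3 rotation-and-scale matrix about a center point, following the formula given in the OpenCV documentation for getRotationMatrix2D (alpha = scale·cos(angle), beta = scale·sin(angle)), and applies it to a point. The names Affine, rotation_matrix and apply_affine are assumptions for this example.

```cpp
#include <array>
#include <cmath>

// 2x3 affine matrix [A | t]: (x, y) -> A * (x, y)^T + t.
using Affine = std::array<std::array<double, 3>, 2>;

// Rotation by angle_deg about (cx, cy) combined with isotropic scaling.
// Positive angles rotate counter-clockwise when the y axis points down.
Affine rotation_matrix(double cx, double cy, double angle_deg, double scale) {
    const double pi = std::acos(-1.0);
    const double a = scale * std::cos(angle_deg * pi / 180.0);
    const double b = scale * std::sin(angle_deg * pi / 180.0);
    Affine m{};
    m[0] = {a, b, (1.0 - a) * cx - b * cy};
    m[1] = {-b, a, b * cx + (1.0 - a) * cy};
    return m;
}

// Apply the affine map to a single point.
std::array<double, 2> apply_affine(const Affine& m, double x, double y) {
    return {m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2]};
}
```

The translation column is chosen so that the center maps to itself; for a 90 degree rotation about (50, 50), the point (60, 50) to the right of the center moves to (50, 40), directly above it in image coordinates.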

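19. Affine and perspective transforms in OpenCV

cv::Mat src, dst, co;
co = cv::getAffineTransform(src, dst); // src and dst must each hold three corresponding points, not whole images
std::vector<cv::Point2f> srcpoints, dstpoints;
co = cv::getAffineTransform(srcpoints, dstpoints); // affine matrix from 3 pairs of corresponding points
cv::Point2f center; // rotation center in the image
double angle, scale; // rotation angle and scale factor
co = cv::getRotationMatrix2D(center, angle, scale); // affine matrix from the given rotation center, angle, and scale
cv::warpAffine(src, dst, co, cv::Size(100, 100)); // apply the affine transform

//cv::getPerspectiveTransform(...) works like its affine counterpart

//cv::warpPerspective(...) works like its affine counterpart, but a perspective transform needs 4 pairs of points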

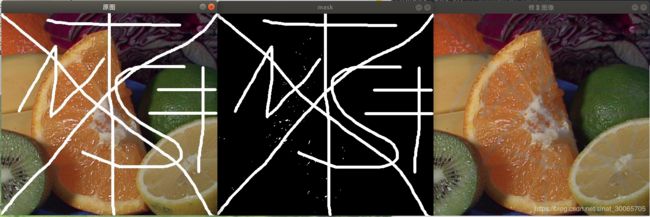

20. Inpainting missing regions

void use_test()
{
    cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test1.jpg", -1); // load the image unchanged
    cv::imshow("原图", img);
    cv::Mat repair, mask, img1;
    cv::cvtColor(img, img1, CV_BGR2GRAY, 0);
    mask = cv::Mat(img.size(), CV_8U, cv::Scalar(0)); // the mask must be 8-bit single-channel
    cv::threshold(img1, mask, 200, 255, CV_THRESH_BINARY);
    cv::dilate(mask, mask, cv::getStructuringElement(cv::MORPH_RECT, cv::Size(3, 3))); // dilate to enlarge the area to be repaired
    cv::imshow("mask", mask);
    cv::inpaint(img, mask, repair, 5,CV_INPAINT_TELEA);
    cv::imshow("修复图像", repair);
    cv::waitKey(0);
}

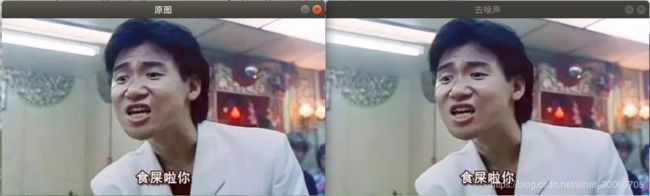

21. Image denoising

void use_test()

{

cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", -1); // load the image unchanged

cv::imshow("原图", img);

cv::fastNlMeansDenoising(img, img);

cv::imshow("去噪声", img);

cv::waitKey(0);

}

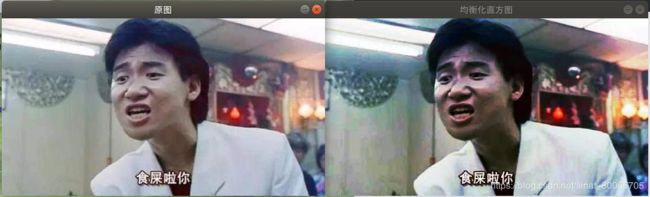

22. Histogram equalization

void use_test()
{
    cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", -1); // load the image unchanged
    cv::imshow("原图", img);
    std::vector<cv::Mat> img3;
    cv::split(img, img3); // process the 3 channels of the original separately
    cv::equalizeHist(img3[0], img3[0]);
    cv::equalizeHist(img3[1], img3[1]);
    cv::equalizeHist(img3[2], img3[2]);
    cv::merge(img3, img); // merge the 3 equalized channels

cv::imshow("均衡化直方图", img);

cv::waitKey(0);

}
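What equalization does to one channel can be sketched in a few lines of plain C++. This is the textbook mapping through the cumulative histogram, written for a flat list of 8-bit pixels; the function name equalize is illustrative and the rounding details are an assumption, so results may differ slightly from cv::equalizeHist.

```cpp
#include <array>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

std::vector<std::uint8_t> equalize(const std::vector<std::uint8_t>& pixels) {
    // Histogram of the 256 possible gray levels.
    std::array<std::size_t, 256> hist{};
    for (std::uint8_t p : pixels) ++hist[p];

    // Cumulative distribution and its first non-zero value.
    std::array<std::size_t, 256> cdf{};
    std::size_t running = 0, cdf_min = 0;
    for (int v = 0; v < 256; ++v) {
        running += hist[v];
        cdf[v] = running;
        if (cdf_min == 0 && running > 0) cdf_min = running;
    }

    const std::size_t total = pixels.size();
    if (total == cdf_min) return pixels;  // empty or constant input: nothing to stretch

    // Map each level so that the cumulative distribution becomes roughly linear.
    std::vector<std::uint8_t> out;
    out.reserve(total);
    for (std::uint8_t p : pixels) {
        const double scaled = 255.0 * (cdf[p] - cdf_min) / (total - cdf_min);
        out.push_back(static_cast<std::uint8_t>(std::lround(scaled)));
    }
    return out;
}
```

For the four pixels {50, 50, 100, 150}, the darkest level maps to 0, the brightest to 255, and the middle one to 128, so a narrow range of gray levels is stretched over the full 8-bit range.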

23. Fourier transform of an image

void use_test()
{
    using namespace cv;
    cv::Mat I = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", 0); // read as a single-channel image
    Mat padded; // input image padded with zeros
    int m = getOptimalDFTSize(I.rows);
    int n = getOptimalDFTSize(I.cols);
    // pad I into padded; nothing is added on the top and left sides
    copyMakeBorder(I, padded, 0, m - I.rows, 0, n - I.cols, BORDER_CONSTANT, Scalar::all(0));
    Mat planes[] = { Mat_<float>(padded), Mat::zeros(padded.size(),CV_32F) };
    Mat complexI;
    merge(planes, 2, complexI); // merge planes into one two-channel array complexI
    dft(complexI, complexI); // Fourier transform
    // compute the magnitude and switch to a logarithmic scale
    split(complexI, planes); // planes[0] = Re(DFT(I)), planes[1] = Im(DFT(I))
    magnitude(planes[0], planes[1], planes[0]); // planes[0] = magnitude
    Mat magI = planes[0];
    magI += Scalar::all(1);
    log(magI, magI); // logarithmic scale
    // crop the spectrum if it has an odd number of rows or columns
    magI = magI(Rect(0, 0, magI.cols&-2, magI.rows&-2));
    // rearrange the quadrants so that the origin is at the image center
    int cx = magI.cols / 2;
    int cy = magI.rows / 2;
    Mat q0(magI, Rect(0, 0, cx, cy)); // top-left quadrant as an ROI
    Mat q1(magI, Rect(cx, 0, cx, cy)); // top-right
    Mat q2(magI, Rect(0, cy, cx, cy)); // bottom-left
    Mat q3(magI, Rect(cx, cy, cx, cy)); // bottom-right
    // swap the top-left and bottom-right quadrants
    Mat tmp;
    q0.copyTo(tmp);
    q3.copyTo(q0);
    tmp.copyTo(q3);
    // swap the top-right and bottom-left quadrants
    q1.copyTo(tmp);
    q2.copyTo(q1);
    tmp.copyTo(q2);
    // normalize to floating-point values in 0-1 so the matrix can be displayed
    normalize(magI, magI, 0, 1, CV_MINMAX);
    imshow("输入图像", I);
    imshow("频谱图", magI);
    waitKey(0);
}

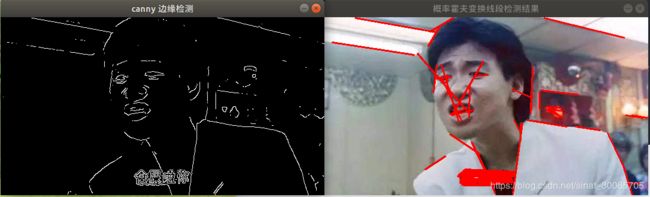
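The spectrum shown above is the log-scaled magnitude of the DFT. The same two steps can be tried on a one-dimensional signal with a direct O(n²) DFT, as in this plain C++ sketch; the function names are illustrative, and cv::dft uses a fast algorithm rather than this double loop.

```cpp
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

// Magnitude of each DFT coefficient of a real signal (direct evaluation).
std::vector<double> dft_magnitude(const std::vector<double>& x) {
    const std::size_t n = x.size();
    const double pi = std::acos(-1.0);
    std::vector<double> mag(n);
    for (std::size_t k = 0; k < n; ++k) {
        std::complex<double> sum(0.0, 0.0);
        for (std::size_t t = 0; t < n; ++t) {
            const double angle = -2.0 * pi * static_cast<double>(k * t) / static_cast<double>(n);
            sum += x[t] * std::complex<double>(std::cos(angle), std::sin(angle));
        }
        mag[k] = std::abs(sum);
    }
    return mag;
}

// log(1 + magnitude), the same compression applied before display.
std::vector<double> log_scale(const std::vector<double>& mag) {
    std::vector<double> out(mag.size());
    for (std::size_t i = 0; i < mag.size(); ++i) out[i] = std::log(1.0 + mag[i]);
    return out;
}
```

A constant signal puts all of its energy into coefficient 0 (the DC term), while a signal alternating between +1 and -1 puts it into the highest frequency, which is the kind of structure the spectrum image makes visible.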

24. Hough transform

void use_test()
{
    cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", -1); // load the image unchanged
    cv::Mat img1;
    cv::Canny(img, img1,240,240,3);
    cv::imshow("canny 边缘检测", img1);
    std::vector<cv::Vec4i> linepoints; // each line comes back as 2 endpoints (4 values), so cv::Point cannot be used
    cv::HoughLinesP(img1, linepoints, 1, CV_PI/180, 20, 20, 20); // probabilistic Hough transform
    cout << linepoints.size() << endl;

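    for (size_t i = 0; i < linepoints.size(); i++)
    {
        // draw each detected segment between its two endpoints
        cv::line(img, cv::Point(linepoints[i][0], linepoints[i][1]), cv::Point(linepoints[i][2], linepoints[i][3]), cv::Scalar(0, 0, 255), 2);
    }
    cv::imshow("hough", img);
    cv::waitKey(0);
}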

25. Image segmentation

(1) Flood fill

void use_test()

{

cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", -1); // load the image unchanged

// the seed point strongly affects the flood-fill result

cv::Rect floodfill;

cv::Mat flood = img;

cv::floodFill(flood, cv::Point2f(100, 100), cv::Scalar(0,0,255), &floodfill, cv::Scalar(7,7,7), cv::Scalar(7,7,7));

cv::imshow("漫水填冲分割图像", flood);

cv::waitKey(0);

}

(2) Watershed algorithm

// The value returned by mat.type() has to be decoded with the function below to see which CV type it is

string type2str(int type) {

string r;

uchar depth = type & CV_MAT_DEPTH_MASK;

uchar chans = 1 + (type >> CV_CN_SHIFT);

switch ( depth ) {

case CV_8U: r = "8U"; break;

case CV_8S: r = "8S"; break;

case CV_16U: r = "16U"; break;

case CV_16S: r = "16S"; break;

case CV_32S: r = "32S"; break;

case CV_32F: r = "32F"; break;

case CV_64F: r = "64F"; break;

default: r = "User"; break;

}

r += "C";

r += (chans+'0');

return r;

}

void use_test30()
{
    cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", -1); // load the image unchanged
    cv::Mat water;
    cv::cvtColor(img,water,CV_BGR2GRAY);
    Canny(water,water,80,150);
    std::vector<std::vector<cv::Point>> contours;
    std::vector<cv::Vec4i> hierarchy;
    cv::findContours(water,contours,hierarchy,cv::RETR_TREE,cv::CHAIN_APPROX_SIMPLE,cv::Point());
    cout << contours.size() << endl;
    cv::Mat imageContours=cv::Mat::zeros(img.size(),CV_8UC1); // contours
    cv::Mat mask(img.size(), CV_32SC1, cv::Scalar(0)); // marks which regions are connected
    int index = 0;
    int compCount = 0;
    for( ; index >= 0; index = hierarchy[index][0], compCount++ )
    {
        // label the markers: number the contour of each region, which amounts to placing seed points; there are as many seeds as contours
        drawContours(mask, contours, index, cv::Scalar::all(compCount+1), 1, 8, hierarchy);
        drawContours(imageContours,contours,index,cv::Scalar(255),1,8,hierarchy);
    }
    cv::Mat mask1 = mask.clone(); // CV_32SC1 markers passed to watershed
    cv::convertScaleAbs(mask,mask);
    cout << type2str(img.type()) << ", " << type2str(mask.type()) << endl;
    cv::watershed(img, mask1);
    cv::convertScaleAbs(mask1,mask1);
    cv::imshow("分水岭算法", mask1);
    cv::waitKey(0);
}

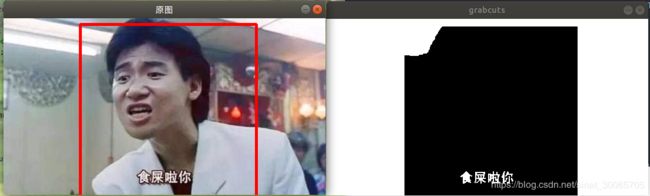

(3) GrabCut algorithm

void use_test31()
{
    cv::Rect rect(130, 10, 300, 300);
    cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg");
    cv::Mat drawmat = img.clone(); // clone so the rectangle is not drawn onto the image being segmented
    cv::rectangle(drawmat, rect, cv::Scalar(0,0,255),3);
    cv::imshow("原图", drawmat);
    // result holds one label per pixel: GC_BGD = 0 (background), GC_FGD = 1 (foreground), GC_PR_BGD = 2 (probable background), GC_PR_FGD = 3 (probable foreground); bmat and fmat are temporary arrays the algorithm uses internally
    cv::Mat result, bmat, fmat;
    cv::grabCut(img, result, rect, bmat, fmat, 1, cv::GC_INIT_WITH_RECT);
    // keep only the pixels labelled probable foreground; result becomes a 0/255 mask
    cv::compare(result, cv::GC_PR_FGD, result, cv::CMP_EQ);
    cv::imshow("抓取图像", result);
    // output image on a white background
    cv::Mat img1(img.size(), CV_8UC3, cv::Scalar(255, 255, 255));
    // copy the masked region of img into img1
    img.copyTo(img1, result);
    imshow("grabcuts", img1);
    cv::waitKey(0);
}

(4) Mean-shift segmentation

void use_test32()

{

cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg");

cv::Mat result, bmat, fmat;

cv::pyrMeanShiftFiltering(img, result,60.0,60.0,1);

cv::Mat mask(img.rows+2, img.cols+2, CV_8UC1, cv::Scalar(0));

cv::RNG rng = cv::theRNG();

for( int y = 0; y < result.rows; y++ )

{

for( int x = 0; x < result.cols; x++ )

{

if( mask.at<uchar>(y+1, x+1) == 0 )

{

cv::Scalar newVal( rng(256), rng(256), rng(256) );

floodFill( result, mask, cv::Point(x,y), newVal, 0, cv::Scalar(2), cv::Scalar(2));

}

}

}

cv::imshow("mean-shift算法分割", result);

cv::waitKey(0);

}

26. Building a histogram in OpenCV

void use_test33()

{

cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", 0);

cv::Mat hist;

int channels = 0;

int histSize[] = { 256 };

float midRanges[] = { 0, 256 };

const float *ranges[] = { midRanges };

calcHist(&img, 1, &channels, cv::Mat(), hist, 1, histSize, ranges, true, false);

cv::Mat drawImage = cv::Mat(cv::Size(256, 256), CV_8UC3, cv::Scalar(0));

double g_dHistMaxValue;

minMaxLoc(hist, 0, &g_dHistMaxValue, 0, 0);

// scale the pixel counts to fit within the height of the drawing

for (int i = 0; i < 256; i++)

{

int value = cvRound(hist.at<float>(i) * 256 * 0.9 / g_dHistMaxValue);

line(drawImage, cv::Point(i, drawImage.rows - 1), cv::Point(i, drawImage.rows - 1 - value), cv::Scalar(0, 0, 255));

}

imshow("hist", drawImage);

cv::waitKey(0);

}

27. Histogram matching

(1) Correlation

(2) Chi-square test

(3) Intersection

(4) Bhattacharyya distance

void use_test34()

{

cv::Mat src1Image,src1HalfImage,src2Image,src3Image;

cv::Mat src1HsvImage,src1HalfHsvImage,src2HsvImage,src3HsvImage;

src1Image = cv::imread("/home/wz/Documents/test/test_opencv3/HandIndoorColor.jpg");

src2Image = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg");

src3Image = cv::imread("/home/wz/Documents/test/test_opencv3/faces.png");

src1HalfImage = cv::Mat(src1Image, cv::Range(src1Image.rows/2,src1Image.rows-1),cv::Range(0,src1Image.cols-1));

cvtColor(src1Image, src1HsvImage, CV_RGB2HSV);

cvtColor(src2Image, src2HsvImage, CV_RGB2HSV);

cvtColor(src3Image, src3HsvImage, CV_RGB2HSV);

cvtColor(src1HalfImage, src1HalfHsvImage, CV_RGB2HSV);

int hbins = 50,sbins = 60;

int histSize[] = {hbins,sbins};

int channels[] = {0,1};

float hRange[] = {0,256};

float sRange[] = {0,180};

const float* ranges[] = {hRange,sRange};

cv::MatND src1Hist,src2Hist,src3Hist,srcHalfHist;

calcHist(&src1HsvImage, 1, channels, cv::Mat(), src1Hist, 2, histSize, ranges,true,false);

normalize(src1Hist, src1Hist, 0,1, cv::NORM_MINMAX,-1,cv::Mat());

calcHist(&src2HsvImage, 1, channels, cv::Mat(), src2Hist, 2, histSize, ranges,true,false);

normalize(src2Hist, src2Hist, 0,1, cv::NORM_MINMAX,-1,cv::Mat());

calcHist(&src3HsvImage, 1, channels, cv::Mat(), src3Hist, 2, histSize, ranges,true,false);

normalize(src3Hist, src3Hist, 0,1, cv::NORM_MINMAX,-1,cv::Mat());

calcHist(&src1HalfHsvImage, 1, channels, cv::Mat(), srcHalfHist, 2, histSize, ranges,true,false);

normalize(srcHalfHist, srcHalfHist, 0,1, cv::NORM_MINMAX,-1,cv::Mat());

// imshow("src1 image", src1Image);

// imshow("src2 image", src2Image);

// imshow("src3 image", src3Image);

// imshow("src1Half image", src1HalfImage);

cv::moveWindow("src1 image", 0, 0);

cv::moveWindow("src2 image", src1Image.cols, 0);

cv::moveWindow("src3 image", src2Image.cols+src1Image.cols, 0);

cv::moveWindow("src1 half image", src1Image.cols+src2Image.cols+src3Image.cols, 0);

    for (int i = 0; i < 4; i++) {
        double compareResultA = compareHist(src1Hist, src1Hist, i);
        double compareResultB = compareHist(src1Hist, src2Hist, i);
        double compareResultC = compareHist(src1Hist, src3Hist, i);
        double compareResultD = compareHist(src1Hist, srcHalfHist, i);
        switch (i)
        {
        case 0: printf("Comparison method: correlation\n"); break;
        case 1: printf("Comparison method: chi-square\n"); break;
        case 2: printf("Comparison method: intersection\n"); break;
        case 3: printf("Comparison method: Bhattacharyya distance\n"); break;
        default: continue;
        }
        printf("Image 1 vs image 1 = %.3f \nImage 1 vs image 2 = %.3f \nImage 1 vs image 3 = %.3f \nImage 1 vs half of image 1 = %.3f \n",compareResultA,compareResultB,compareResultC,compareResultD);
        printf("**********************************\n");
    }
    cv::waitKey(0);
}

Output:

Comparison method: correlation

Image 1 vs image 1 = 1.000

Image 1 vs image 2 = 0.071

Image 1 vs image 3 = 0.125

Image 1 vs half of image 1 = 0.868

**********************************

Comparison method: chi-square

Image 1 vs image 1 = 0.000

Image 1 vs image 2 = 1525.752

Image 1 vs image 3 = 1551.695

Image 1 vs half of image 1 = 22.076

**********************************

Comparison method: intersection

Image 1 vs image 1 = 62.821

Image 1 vs image 2 = 11.722

Image 1 vs image 3 = 11.329

Image 1 vs half of image 1 = 38.612

**********************************

Comparison method: Bhattacharyya distance

Image 1 vs image 1 = 0.000

Image 1 vs image 2 = 0.702

Image 1 vs image 3 = 0.679

Image 1 vs half of image 1 = 0.387

**********************************
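The four comparison methods are simple sums over histogram bins. The plain C++ sketch below follows the formulas given in the OpenCV documentation for compareHist (correlation, chi-square with division by the first histogram, intersection, and Bhattacharyya distance); the function names and the handling of degenerate inputs are assumptions made for this example.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Hist = std::vector<double>;

static double bin_sum(const Hist& h) {
    double s = 0.0;
    for (double v : h) s += v;
    return s;
}

// 1 for identical shapes, -1 for opposite, near 0 for unrelated.
double correlation(const Hist& a, const Hist& b) {
    assert(a.size() == b.size() && !a.empty());
    const double ma = bin_sum(a) / a.size(), mb = bin_sum(b) / b.size();
    double num = 0.0, da = 0.0, db = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        num += (a[i] - ma) * (b[i] - mb);
        da += (a[i] - ma) * (a[i] - ma);
        db += (b[i] - mb) * (b[i] - mb);
    }
    const double denom = std::sqrt(da * db);
    return denom > 0.0 ? num / denom : 1.0;  // flat histograms treated as a match (assumption)
}

// 0 for identical histograms, growing with the difference.
double chi_square(const Hist& a, const Hist& b) {
    assert(a.size() == b.size());
    double d = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i)
        if (a[i] != 0.0) d += (a[i] - b[i]) * (a[i] - b[i]) / a[i];
    return d;
}

// Sum of bin-wise minima; larger means more overlap.
double intersection(const Hist& a, const Hist& b) {
    assert(a.size() == b.size());
    double s = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) s += std::min(a[i], b[i]);
    return s;
}

// 0 for identical histograms, 1 for no overlap.
double bhattacharyya(const Hist& a, const Hist& b) {
    assert(a.size() == b.size());
    double overlap = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) overlap += std::sqrt(a[i] * b[i]);
    const double norm = std::sqrt(bin_sum(a) * bin_sum(b));
    if (norm == 0.0) return 1.0;  // empty histograms: no overlap (assumption)
    return std::sqrt(std::max(0.0, 1.0 - overlap / norm));
}
```

This matches the pattern in the output above: for a histogram compared with itself, correlation is 1, chi-square and Bhattacharyya distance are 0, and intersection equals the sum of the bins.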

28. Template matching in OpenCV

Find the part of one image that is most similar to a template image.

void use_test35()
{
    // load the image to search and the template
    cv::Mat img1 = cv::imread("/home/wz/Documents/test/test_opencv3/adrian.jpg");
    cv::Mat img2 = cv::imread("/home/wz/Documents/test/test_opencv3/BlueCup.jpg");
    cv::imshow("匹配模板",img2);
    cv::imshow("待匹配数据",img1);
    // result map; matchTemplate allocates it with the correct size and type
    cv::Mat dstImg;
    // run the match using squared differences
    cv::matchTemplate(img1, img2, dstImg, cv::TM_SQDIFF);
    // get the minimum and maximum matching scores and their positions with minMaxLoc;
    // with squared-difference matching smaller is better, so the minimum position is the best match
    cv::Point minPoint;
    cv::Point maxPoint;
    double minVal = 0;
    double maxVal = 0;
    cv::minMaxLoc(dstImg, &minVal, &maxVal, &minPoint, &maxPoint);
    // draw the match
    cv::rectangle(img1, minPoint, cv::Point(minPoint.x + img2.cols, minPoint.y + img2.rows), cv::Scalar(0,255,0), 2, 8);

cv::imshow("匹配后的图像", img1);

cv::waitKey(0);

}
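Squared-difference matching amounts to sliding the template over the image and summing squared pixel differences at every position. A minimal plain C++ sketch on small integer grids follows; the Image alias and the function name match_sqdiff are illustrative, and cv::matchTemplate computes the same kind of score map far more efficiently.

```cpp
#include <cassert>
#include <cstddef>
#include <limits>
#include <utility>
#include <vector>

using Image = std::vector<std::vector<int>>;

// Returns (row, col) of the top-left corner where the template fits best,
// i.e. where the sum of squared differences is smallest.
std::pair<std::size_t, std::size_t> match_sqdiff(const Image& image, const Image& templ) {
    assert(!image.empty() && !templ.empty());
    const std::size_t H = image.size(), W = image[0].size();
    const std::size_t h = templ.size(), w = templ[0].size();
    assert(h <= H && w <= W);
    long long best = std::numeric_limits<long long>::max();
    std::pair<std::size_t, std::size_t> best_pos{0, 0};
    for (std::size_t y = 0; y + h <= H; ++y)
        for (std::size_t x = 0; x + w <= W; ++x) {
            long long score = 0;
            for (std::size_t i = 0; i < h; ++i)
                for (std::size_t j = 0; j < w; ++j) {
                    const long long d = image[y + i][x + j] - templ[i][j];
                    score += d * d;
                }
            if (score < best) {  // smaller is better for squared differences
                best = score;
                best_pos = {y, x};
            }
        }
    return best_pos;
}
```

The number of candidate positions is (H - h + 1) by (W - w + 1), which is also the size of the result map that matchTemplate returns.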

29. Contour extraction, connected components, polygon approximation of contours, convex hull of contours

void use_test36()
{
    cv::Mat img = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", -1);
    cv::Mat drawimg = img.clone(); // color copy to draw on
    cv::imshow("原始图像", img);
    cv::cvtColor(img,img,CV_BGR2GRAY);
    // preprocessing: Gaussian denoising
    cv::GaussianBlur(img,img,cv::Size(3,3),0);
    // preprocessing: thresholding
    cv::threshold(img, img, 200, 255, cv::THRESH_BINARY);
    cv::imshow("数据预处理结果", img);
    std::vector<std::vector<cv::Point>> contours;
    std::vector<cv::Vec4i> hierarchy;
    findContours(img,contours,hierarchy,cv::RETR_TREE,cv::CHAIN_APPROX_SIMPLE,cv::Point());
    cout << contours.size() << ", " << hierarchy.size() << endl;
    cv::drawContours(drawimg, contours, -1, cv::Scalar(0,0,200), 1, 8, hierarchy);
    cv::imshow("画出轮廓", drawimg);
    // find the connected components
    cv::Mat connectedimg, stats, centroids,img_color;
    int i, nccomps = cv::connectedComponentsWithStats (
        img, connectedimg,
        stats, centroids
    );
    cout << "Total Connected Components Detected: " << nccomps << endl;
    // draw the connected components
    std::vector<cv::Vec3b> colors(nccomps+1);
    colors[0] = cv::Vec3b(0,0,0); // background pixels remain black.
    for( i = 1; i < nccomps; i++ ) {
        colors[i] = cv::Vec3b(rand()%256, rand()%256, rand()%256);
        if( stats.at<int>(i, cv::CC_STAT_AREA) < 200 )
            colors[i] = cv::Vec3b(0,0,0); // small regions are painted with black too.
    }
    img_color = cv::Mat::zeros(img.size(), CV_8UC3);
    for( int y = 0; y < img_color.rows; y++ )
        for( int x = 0; x < img_color.cols; x++ )
        {
            int label = connectedimg.at<int>(y, x);
            CV_Assert(0 <= label && label <= nccomps);
            img_color.at<cv::Vec3b>(y, x) = colors[label];
        }
    cv::imshow("填充连通区域", img_color);

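    // polygon approximation of the contours
    std::vector<std::vector<cv::Point>> bj(contours.size());
    for (size_t i = 0; i < contours.size(); i++)
        cv::approxPolyDP(contours[i], bj[i], 5, true); // epsilon of 5 pixels is an illustrative value
    // convex hull of the contours
    std::vector<std::vector<cv::Point>> tb(contours.size());
    for (size_t i = 0; i < contours.size(); i++)
        cv::convexHull(contours[i], tb[i]);
    cv::waitKey(0);
}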

30. Contour matching between images

void use_test37()

{

cv::Mat img1 = cv::imread("/home/wz/Documents/test/test_opencv3/HandIndoorColor.jpg", 0);

cv::Mat img2 = cv::imread("/home/wz/Documents/test/test_opencv3/HandOutdoorSunColor.jpg", 0);

cv::Mat img3 = cv::imread("/home/wz/Documents/test/test_opencv3/test.jpg", 0);

cv::imshow("待匹配数据1",img1);

cv::imshow("待匹配数据2",img2);

cv::imshow("待匹配数据3",img3);

// matching with Hu invariant moments; the smaller the value, the more similar the shapes

double matchRate1 = cv::matchShapes(img1,img2,cv::CONTOURS_MATCH_I3, 0);

double matchRate2 = cv::matchShapes(img1,img3,cv::CONTOURS_MATCH_I3, 0);

cout << "图1 vs 图2匹配结果为: " << matchRate1 << endl;

cout << "图1 vs 图3匹配结果为: " << matchRate2 << endl;

cv::waitKey(0);

}

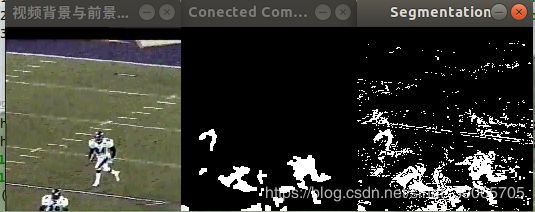

31. Separating background from foreground in OpenCV

#include <opencv2/opencv.hpp>

#include <iostream>

#include <vector>

using namespace std;

// polygons will be simplified using DP algorithm with 'epsilon' a fixed

// fraction of the polygon's length. This number is that divisor.

//

#define DP_EPSILON_DENOMINATOR 20.0

// How many iterations of erosion and/or dilation there should be

//

#define CVCLOSE_ITR 1

void findConnectedComponents(

cv::Mat& mask, // Is a grayscale (8-bit depth) "raw" mask image

// that will be cleaned up

int poly1_hull0, // If set, approximate connected component by

// (DEFAULT: 1) polygon, or else convex hull (0)

float perimScale, // Len = (width+height)/perimScale. If contour

// len < this, delete that contour (DEFAULT: 4)

vector<cv::Rect>& bbs, // Ref to bounding box rectangle return vector

vector<cv::Point>& centers // Ref to contour centers return vector

) {

bbs.clear();

centers.clear();

// CLEAN UP RAW MASK

//

cv::morphologyEx(

mask, mask, cv::MORPH_OPEN, cv::Mat(), cv::Point(-1,-1), CVCLOSE_ITR);

cv::morphologyEx(

mask, mask, cv::MORPH_CLOSE, cv::Mat(), cv::Point(-1,-1), CVCLOSE_ITR);

// FIND CONTOURS AROUND ONLY BIGGER REGIONS

//

vector< vector<cv::Point> > contours_all; // all contours found

vector< vector<cv::Point> > contours;

// just the ones we want to keep

cv::findContours( mask, contours_all, cv::RETR_EXTERNAL,

cv::CHAIN_APPROX_SIMPLE);

for( vector< vector<cv::Point> >::iterator c = contours_all.begin();

c != contours_all.end(); ++c) {

// length of this contour

//

int len = cv::arcLength( *c, true );

// length threshold a fraction of image perimeter

//

double q = (mask.rows + mask.cols) / DP_EPSILON_DENOMINATOR;

if( len >= q ) { // If the contour is long enough to keep...

vector<cv::Point> c_new;

if( poly1_hull0 ) {

// If the caller wants results as reduced polygons...

cv::approxPolyDP( *c, c_new, len/200.0, true );

} else {

// Convex Hull of the segmentation

cv::convexHull( *c, c_new );

}

contours.push_back(c_new );

}

}

// Just some convenience variables

const cv::Scalar CVX_WHITE(0xff,0xff,0xff);

const cv::Scalar CVX_BLACK(0x00,0x00,0x00);

// CALC CENTER OF MASS AND/OR BOUNDING RECTANGLES

//

int idx = 0;

cv::Moments moments;

cv::Mat scratch = mask.clone();

for(vector< vector<cv::Point> >::iterator c = contours.begin();

c != contours.end(); c++, idx++) {

cv::drawContours( scratch, contours, idx, CVX_WHITE, cv::FILLED);

// Find the center of each contour

//

moments = cv::moments( scratch, true );

cv::Point p;

p.x = (int)( moments.m10 / moments.m00 );

p.y = (int)( moments.m01 / moments.m00 );

centers.push_back(p);

bbs.push_back( cv::boundingRect(*c) );

scratch.setTo( 0 );

}

// PAINT THE FOUND REGIONS BACK INTO THE IMAGE

//

mask.setTo( 0 );

cv::drawContours( mask, contours, -1, CVX_WHITE, cv::FILLED );

}

////////////////////////////////////////////////////////////////////////

////////// Use previous example_15-04 and clean up its images //////////

////////////////////////////////////////////////////////////////////////

#define CHANNELS 3 //Always 3 because yuv

int cbBounds[CHANNELS]; // IF pixel is within this bound outside of codebook, learn it, else form new code

int minMod[CHANNELS]; // If pixel is lower than a codebook by this amount, it's matched

int maxMod[CHANNELS]; // If pixel is high than a codebook by this amount, it's matched

//The variable t counts the number of points we’ve accumulated since the start or the last

//clear operation. Here’s how the actual codebook elements are described:

//

class CodeElement {

public:

uchar learnHigh[CHANNELS]; //High side threshold for learning

uchar learnLow[CHANNELS]; //Low side threshold for learning

uchar max[CHANNELS]; //High side of box boundary

uchar min[CHANNELS]; //Low side of box boundary

int t_last_update; //Allow us to kill stale entries

int stale; //max negative run (longest period of inactivity)

CodeElement() {

for(int i = 0; i < CHANNELS; i++)

learnHigh[i] = learnLow[i] = max[i] = min[i] = 0;

t_last_update = stale = 0;

}

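CodeElement& operator=( const CodeElement& ce ) {

for(int i=0; i<CHANNELS; i++ ) {

learnHigh[i] = ce.learnHigh[i];

learnLow[i] = ce.learnLow[i];

min[i] = ce.min[i];

max[i] = ce.max[i];

}

t_last_update = ce.t_last_update;

stale = ce.stale;

return *this;

}

};

// One CodeBook is needed for each pixel of the video image

class CodeBook : public vector<CodeElement> {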

public:

int t; //Count of every image learned on

// count every access

CodeBook() { t=0; }

// Default is an empty book

CodeBook( int n ) : vector<CodeElement>(n) { t=0; } // Construct book of size n

};

// Updates the codebook entry with a new data point

// Note: cbBounds must be of length equal to numChannels

//

//

int updateCodebook( // return CodeBook index

const cv::Vec3b& p, // incoming YUV pixel

CodeBook& c, // CodeBook for the pixel

int* cbBounds, // Bounds for codebook (usually: {10,10,10})

int numChannels // Number of color channels we're learning

) {

if(c.size() == 0)

c.t = 0;

c.t += 1; //Record learning event

//SET HIGH AND LOW BOUNDS

int high[3], low[3], n;

for( n=0; n<numChannels; n++ ) {

high[n] = p[n] + *(cbBounds+n);

if( high[n] > 255 ) high[n] = 255;

low[n] = p[n] - *(cbBounds+n);

if( low[n] < 0) low[n] = 0;

}

// SEE IF THIS FITS AN EXISTING CODEWORD

//

int i;

int matchChannel;

for( i=0; i p[n] )

c[i].min[n] = p[n];

}

break;

}

}

// OVERHEAD TO TRACK POTENTIAL STALE ENTRIES

//

for( int s=0; s low[n] ) c[i].learnLow[n] -= 1;

}

return c.size();

}

// During learning, after you've learned for some period of time,

// periodically call this to clear out stale codebook entries

//

int foo = 0;

int clearStaleEntries(

// return number of entries cleared

CodeBook &c

// Codebook to clean up

){

int staleThresh = c.t>>1;

int *keep = new int[c.size()];

int keepCnt = 0;

// SEE WHICH CODEBOOK ENTRIES ARE TOO STALE

//

int foogo2 = 0;

for( int i=0; i staleThresh)

keep[i] = 0; // Mark for destruction

else

{

keep[i] = 1; // Mark to keep

keepCnt += 1;

}

}

// move the entries we want to keep to the front of the vector and then

// truncate to the correct length once all of the good stuff is saved.

//

int k = 0;

int numCleared = 0;

for( int ii=0; ii minMod[3], maxMod[3]. There is one min and

// one max threshold per channel.

//

uchar backgroundDiff( // return 0 => background, 255 => foreground

const cv::Vec3b& p, // Pixel (YUV)

CodeBook& c, // Codebook

int numChannels, // Number of channels we are testing

int* minMod_, // Add this (possibly negative) number onto max level

// when determining whether new pixel is foreground

int* maxMod_ // Subtract this (possibly negative) number from min

// level when determining whether new pixel is

// foreground

) {

int matchChannel;

// SEE IF THIS FITS AN EXISTING CODEWORD

//

int i;

for( i=0; i= c.size() ) //No match with codebook => foreground

return 255;

return 0; //Else background

}
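The learn-then-test idea behind the codebook can be tried without OpenCV. The sketch below is a deliberately simplified, single-channel version: `Range1`, `learn` and `classify` are hypothetical names, and the slowly adapting `learnLow`/`learnHigh` bounds and the stale-entry bookkeeping of the listing above are left out.

```cpp
#include <cassert>
#include <vector>

// Hypothetical one-channel code element: the range of values seen so far.
struct Range1 {
    int min;
    int max;
};

// Learning step: widen a range that lies within `bound` of the value,
// or start a new range when no existing one is close enough.
void learn(std::vector<Range1>& book, int value, int bound) {
    assert(bound >= 0);
    for (Range1& r : book) {
        if (r.min - bound <= value && value <= r.max + bound) {
            if (value < r.min) r.min = value;
            if (value > r.max) r.max = value;
            return;
        }
    }
    book.push_back({value, value});
}

// Test step: 0 => background (covered by some range), 255 => foreground.
int classify(const std::vector<Range1>& book, int value, int minMod, int maxMod) {
    for (const Range1& r : book) {
        if (r.min - minMod <= value && value <= r.max + maxMod) return 0;
    }
    return 255;
}
```

In the full example one such codebook is kept per pixel, which is why `codebooks` is resized to `row*col` entries.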

///////////////////////////////////////////////////////////////////////////////////////////////////

/////////////////// This part adds a "main" to run the above code. ////////////////////////////////

///////////////////////////////////////////////////////////////////////////////////////////////////

// Just make a convenience class (assumes image will not change size in video);

class CbBackgroudDiff {

public:

cv::Mat Iyuv; //Will hold the yuv converted image

cv::Mat mask; //Will hold the background difference mask

vector<CodeBook> codebooks; //Will hold a CodeBook for each pixel

int row, col, image_length; //How many pixels are in the image

//Constructor

void init(cv::Mat &I_from_video) {

vector<int> v(3,10);

set_global_vecs(cbBounds, v);

v[0] = 6; v[1] = 20; v[2] = 8; //Just some decent defaults for low side

set_global_vecs(minMod, v);

v[0] = 70; v[1] = 20; v[2] = 6; //Decent defaults for high side

set_global_vecs(maxMod, v);

Iyuv.create(I_from_video.size(), I_from_video.type());

mask.create(I_from_video.size(), CV_8UC1);

row = I_from_video.rows;

col = I_from_video.cols;

image_length = row*col;

cout << "(row,col,len) = (" << row << ", " << col << ", " << image_length << ")" << endl;

codebooks.resize(image_length);

}

CbBackgroudDiff(cv::Mat &I_from_video) {

init(I_from_video);

}

CbBackgroudDiff(){};

//Convert to YUV

void convert_to_yuv(cv::Mat &Irgb)

{

cvtColor(Irgb, Iyuv, cv::COLOR_BGR2YUV);

}

int size_check(cv::Mat &I) { //Check that image doesn't change size, return -1 if size doesn't match, else 0

int ret = 0;

if((row != I.rows) || (col != I.cols)) {

cerr << "ERROR: Size changed! old[" << row << ", " << col << "], now [" << I.rows << ", " << I.cols << "]!" << endl;

ret = -1;

}

return ret;

}

//Utilities for setting globals

void set_global_vecs(int *globalvec, vector<int> &vec) {

if(vec.size() != CHANNELS) {

cerr << "Input vec[" << vec.size() << "] should equal CHANNELS [" << CHANNELS << "]" << endl;

vec.resize(CHANNELS, 10);

}

int i = 0;

for (vector<int>::iterator it = vec.begin(); it != vec.end(); ++it, ++i) {

globalvec[i] = *it;

}

}

//Background operations

int updateCodebookBackground(cv::Mat &Irgb) { //Learn codebook, -1 if error, else total # of codes

convert_to_yuv(Irgb);

if(size_check(Irgb))

return -1;

int total_codebooks = 0;

cv::Mat_<cv::Vec3b>::iterator Iit = Iyuv.begin<cv::Vec3b>(), IitEnd = Iyuv.end<cv::Vec3b>();

vector<CodeBook>::iterator Cit = codebooks.begin(), CitEnd = codebooks.end();

for(; Iit != IitEnd; ++Iit,++Cit) {

total_codebooks += updateCodebook(*Iit,*Cit,cbBounds,CHANNELS);

}

if(Cit != CitEnd)

cerr << "ERROR: Cit != CitEnd in updateCodeBackground(...) " << endl;

return(total_codebooks);

}

int clearStaleEntriesBackground() { //Clean out stuff that hasn't been updated for a long time

int total_cleared = 0;

vector<CodeBook>::iterator Cit = codebooks.begin(), CitEnd = codebooks.end();

for(; Cit != CitEnd; ++Cit) {

total_cleared += clearStaleEntries(*Cit);

}

return(total_cleared);

}

int backgroundDiffBackground(cv::Mat &Irgb) { //Take the background difference of the image

convert_to_yuv(Irgb);

if(size_check(Irgb))

return -1;

cv::Mat_<cv::Vec3b>::iterator Iit = Iyuv.begin<cv::Vec3b>(), IitEnd = Iyuv.end<cv::Vec3b>();

vector<CodeBook>::iterator Cit = codebooks.begin(), CitEnd = codebooks.end();

cv::Mat_<uchar>::iterator Mit = mask.begin<uchar>(), MitEnd = mask.end<uchar>();

for(; Iit != IitEnd; ++Iit,++Cit,++Mit) {

*Mit = backgroundDiff(*Iit,*Cit,CHANNELS,minMod,maxMod);

}

if((Cit != CitEnd)||(Mit != MitEnd)){

cerr << "ERROR: Cit != CitEnd and/or Mit != MitEnd in backgroundDiffBackground(...) " << endl;

return -1;

}

return 0;

}

}; // end CbBackgroudDiff

//Adjusting the distance you have to be on the low side (minMod) or high side (maxMod) of a codebook

//to be considered as recognized/background

//

void adjustThresholds(char* charstr, cv::Mat &Irgb, CbBackgroudDiff &bgd) {

int key = 1;

int y = 1,u = 0,v = 0, index = 0, thresh = 0;

if(thresh)

cout << "yuv[" << y << "][" << u << "][" << v << "] maxMod active" << endl;

else

cout << "yuv[" << y << "][" << u << "][" << v << "] minMod active" << endl;

cout << "minMod[" << minMod[0] << "][" << minMod[1] << "][" << minMod[2] << "]" << endl;

cout << "maxMod[" << maxMod[0] << "][" << maxMod[1] << "][" << maxMod[2] << "]" << endl;

while((key = cv::waitKey()) != 27 && key != 'Q' && key != 'q') // Esc or Q or q to exit

{

if(thresh)

cout << "yuv[" << y << "][" << u << "][" << v << "] maxMod active" << endl;

else

cout << "yuv[" << y << "][" << u << "][" << v << "] minMod active" << endl;

cout << "minMod[" << minMod[0] << "][" << minMod[1] << "][" << minMod[2] << "]" << endl;

cout << "maxMod[" << maxMod[0] << "][" << maxMod[1] << "][" << maxMod[2] << "]" << endl;

if(key == 'y') { y = 1; u = 0; v = 0; index = 0;}

if(key == 'u') { y = 0; u = 1; v = 0; index = 1;}

if(key == 'v') { y = 0; u = 0; v = 1; index = 2;}

if(key == 'l') { thresh = 0;} //minMod

if(key == 'h') { thresh = 1;} //maxMod

if(key == '.') { //Up

if(thresh == 0) { minMod[index] += 4;}

if(thresh == 1) { maxMod[index] += 4;}

}

if(key == ',') { //Down

if(thresh == 0) { minMod[index] -= 4;}

if(thresh == 1) { maxMod[index] -= 4;}

}

cout << "y,u,v for channel; l for minMod, h for maxMod threshold; , for down, . for up; esc or q to quit;" << endl;

bgd.backgroundDiffBackground(Irgb);

cv::imshow(charstr, bgd.mask);

}

}

////////////////////////////////////////////////////////////////

vector<cv::Rect> bbs; // Ref to bounding box rectangle return vector

vector<cv::Point> centers; // Ref to contour centers return vector

void use_test100()

{

cap.open("/home/wz/Documents/test/test_opencv3/test.avi");

int number_to_train_on = 50;

cv::Mat image;

CbBackgroudDiff bgd;

// FIRST PROCESSING LOOP (TRAINING):

//

int frame_count = 0;

int key;

bool first_frame = true;

cout << "Total frames to train on = " << number_to_train_on << endl;

char seg[] = "Segmentation";

while(1) {

cout << "frame#: " << frame_count;

cap >> image;

if( !image.data ) exit(1); // Something went wrong, abort

if(frame_count == 0) { bgd.init(image);}

cout << ", Codebooks: " << bgd.updateCodebookBackground(image) << endl;

cv::imshow( "Video background/foreground segmentation", image );

frame_count++;

if( (key = cv::waitKey(7)) == 27 || key == 'q' || key == 'Q' || frame_count >= number_to_train_on) break; //Allow early exit on esc, q or Q

}

// We have accumulated our training, now create the models

//

cout << "Created the background model" << endl;

cout << "Total entries cleared = " << bgd.clearStaleEntriesBackground() << endl;

cout << "Press a key to start background differencing, 'a' to set thresholds, esc or q or Q to quit" << endl;

// SECOND PROCESSING LOOP (TESTING):

//

cv::namedWindow( seg, cv::WINDOW_AUTOSIZE );

cv::namedWindow("Conected Components", cv::WINDOW_AUTOSIZE);

while((key = cv::waitKey()) != 27 && key != 'q' && key != 'Q' ) { // esc, 'q' or 'Q' to exit

cap >> image;

if( !image.data ) exit(0);

cout << frame_count++ << " 'a' to adjust thresholds" << endl;

if(key == 'a') {

cout << "Adjusting thresholds" << endl;

cout << "y,u,v for channel; l for minMod, h for maxMod threshold; , for down, . for up; esc or q to quit;" << endl;

adjustThresholds(seg,image,bgd);

}

else {

if(bgd.backgroundDiffBackground(image)) {

cerr << "ERROR, bdg.backgroundDiffBackground(...) failed" << endl;

exit(-1);

}

}

cv::imshow("Segmentation",bgd.mask);

findConnectedComponents(bgd.mask, 1, 4, bbs, centers);

cv::imshow("Conected Components", bgd.mask);

}

}

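32. Corner points, keypoints and feature points: how the concepts differ

(1) Corner point: a corner is an extremum, i.e. a point where some property is especially pronounced: an isolated point where that property reaches its maximum or minimum strength, or the end point of a line segment. For a single image, the circled regions in the figure are corners; they are the junctions of an object's contour lines.

(2) Keypoint: a more abstract concept. In image processing it generally means any point that matters for the problem being analyzed. Edges are an important cue when extracting keypoints, but never the only one: for a given object, keypoints should express some characteristic of the object rather than merely lie on an edge. Any point that helps with the specific problem can be called a keypoint.

(3) Feature point: a point or patch that can be represented in the same, or at least a very similar, invariant form in other images of the same scene or object. In other words, if several pictures of the same object or scene are taken from different viewpoints and the same locations can be recognized as identical, those points or patches are feature points. Feature points include corners but are not limited to them.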

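33. Corner matching

Corner matching means finding correspondences between feature pixels in two images in order to determine how the two images are positioned relative to each other. The figure shows corner matching between two images taken from different viewpoints; once the matches are found they can be passed on to later processing. Corner matching consists of three steps:

(1) Detector: find the most recognizable pixels (corners) in the two images to be matched, such as edge points of richly textured objects. Detection methods include SIFT, Harris and FAST.

(2) Descriptor: describe each detected corner with mathematical features such as gradient histograms or local random binary features. Descriptor methods include neighborhood template matching, the SIFT descriptor and the ORB descriptor.

(3) Matching: use the descriptors of the corners to decide which corners correspond across the two images. Common methods include brute-force matching and KD-trees.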
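Step (3) can be illustrated without any library. The sketch below assumes 32-bit binary descriptors (real ORB descriptors are 256 bits) and matches each query descriptor to the train descriptor with the smallest Hamming distance; `hamming` and `bruteForceMatch` are hypothetical helper names, not OpenCV functions.

```cpp
#include <bitset>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hamming distance between two 32-bit binary descriptors.
int hamming(std::uint32_t a, std::uint32_t b) {
    return static_cast<int>(std::bitset<32>(a ^ b).count());
}

// For every query descriptor, return the index of the closest train descriptor.
std::vector<int> bruteForceMatch(const std::vector<std::uint32_t>& query,
                                 const std::vector<std::uint32_t>& train) {
    assert(!train.empty());
    std::vector<int> best(query.size(), 0);
    for (std::size_t q = 0; q < query.size(); ++q) {
        int bestDist = 33; // larger than any possible 32-bit distance
        for (std::size_t t = 0; t < train.size(); ++t) {
            int d = hamming(query[q], train[t]);
            if (d < bestDist) {
                bestDist = d;
                best[q] = static_cast<int>(t);
            }
        }
    }
    return best;
}
```

The cost is proportional to the product of the two descriptor counts, which is the reason tree-based methods such as KD-trees are used for large sets of floating-point descriptors.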

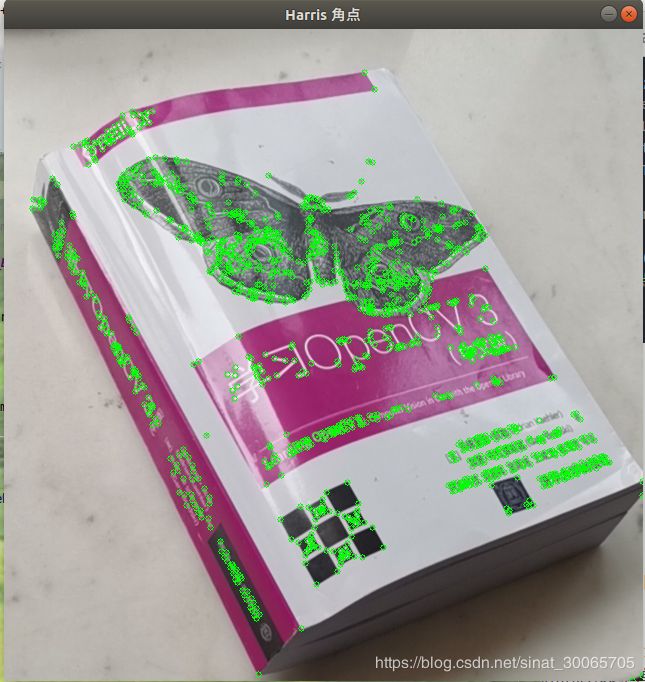

34. Corner (feature point) detection and matching in OpenCV

(1) Harris corner detection

void drawCornerOnImage(cv::Mat& image,const cv::Mat&binary)

{

cv::Mat_<uchar>::const_iterator it=binary.begin<uchar>();

cv::Mat_<uchar>::const_iterator itd=binary.end<uchar>();

for(int i=0;it!=itd;it++,i++)

{

if(*it)

circle(image,cv::Point(i%image.cols,i/image.cols),3,cv::Scalar(0,255,0),1);

}

}

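// Harris corners

// OpenCV's Harris corner detector is cornerHarris(). Its output is a floating-point image in which

// a higher value means a more likely corner, so the response has to be thresholded.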

void use_test38()

{

cv::Mat img=cv::imread("/home/wz/Documents/test/test_opencv3/test2.jpg");

cv::Mat gray;

cvtColor(img,gray,cv::COLOR_BGR2GRAY);

cv::Mat cornerStrength;

cornerHarris(gray,cornerStrength,3,3,0.01);

double maxStrength;

double minStrength;

// Find the minimum and maximum values of the response image

minMaxLoc(cornerStrength,&minStrength,&maxStrength);

cv::Mat dilated;

cv::Mat locaMax;

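// Dilate the response image so that all local maxima can be located

dilate(cornerStrength,dilated,cv::Mat());

// compare() produces a binary image that is 255 where the two inputs are equal; pixels unchanged by the dilation are local maxima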

compare(cornerStrength,dilated,locaMax,cv::CMP_EQ);

cv::Mat cornerMap;

double qualityLevel=0.01;

double th=qualityLevel*maxStrength; // threshold as a fraction of the strongest response

threshold(cornerStrength,cornerMap,th,255,cv::THRESH_BINARY);

cornerMap.convertTo(cornerMap,CV_8U);

// Per-pixel AND: keep points that are both above the threshold and local maxima

bitwise_and(cornerMap,locaMax,cornerMap);

drawCornerOnImage(img,cornerMap);

imshow("Harris corners",img);

cv::waitKey(0);

}
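The value that the example thresholds is the Harris response R = det(M) - k * trace(M)^2, where M is the 2x2 matrix of summed gradient products over a window. The following is a minimal sketch of that formula only; the function name is hypothetical and the per-pixel gradients of the window are assumed to be given.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Harris response of one window, given the x and y gradients of its pixels.
double harrisResponse(const std::vector<double>& ix,
                      const std::vector<double>& iy,
                      double k) {
    assert(ix.size() == iy.size());
    double sxx = 0.0, syy = 0.0, sxy = 0.0;
    for (std::size_t i = 0; i < ix.size(); ++i) {
        sxx += ix[i] * ix[i];
        syy += iy[i] * iy[i];
        sxy += ix[i] * iy[i];
    }
    double det = sxx * syy - sxy * sxy; // product of the two eigenvalues
    double trace = sxx + syy;           // sum of the two eigenvalues
    return det - k * trace * trace;
}
```

A window with strong gradients in two directions gives a positive response (corner), gradients in a single direction give a negative one (edge), and a flat window gives zero.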

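(2) FAST feature point detection

// FAST feature points

// The Harris detector is computationally expensive and too slow for large, complex recognition or matching tasks.

// OpenCV provides the FastFeatureDetector class for detecting corners quickly.

// FAST does not literally mean "fast"; it stands for Features from Accelerated Segment Test, although the algorithm is indeed efficient.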

void use_test39()

{

cv::Mat img=cv::imread("/home/wz/Documents/test/test_opencv3/test2.jpg");

vector<cv::KeyPoint> keypoints;

// The form "FastFeatureDetector fast(40);" found online does not work in OpenCV 3

cv::Ptr<cv::FastFeatureDetector> fast = cv::FastFeatureDetector::create(20);

fast->detect(img, keypoints);

drawKeypoints(img, keypoints, img, cv::Scalar::all(-1), cv::DrawMatchesFlags::DEFAULT);

imshow("FAST feature points", img);

cv::waitKey(0);

}

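(3) SURF feature point detection

// Scale-invariant SURF features; requires building the opencv_contrib modules

// SURF is a scale-invariant feature similar to SIFT. Its advantage is that it is faster than SIFT and can often reach real-time rates in practice.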

#include "opencv2/xfeatures2d.hpp"

void use_test40()

{

cv::Mat img=cv::imread("/home/wz/Documents/test/test_opencv3/test2.jpg");

vector<cv::KeyPoint> keypoints;

cv::Ptr<cv::Feature2D> f2d = cv::xfeatures2d::SURF::create();

f2d->detect(img,keypoints);

drawKeypoints(img,keypoints,img,cv::Scalar(255,0,0),cv::DrawMatchesFlags::DRAW_RICH_KEYPOINTS);

imshow("Scale-invariant SURF features",img);

cv::waitKey(0);

}

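35. Optical-flow image tracking

Three assumptions:

Brightness constancy: the brightness of a given point does not change over time. This is the basic assumption of optical flow (every variant must satisfy it) and it yields the basic optical-flow equation;

Small motion: this must also hold. The position must not change drastically over time, so that intensity can be differentiated with respect to position (in other words, only for small motion can the intensity change per unit displacement between consecutive frames approximate the partial derivative of intensity with respect to position). This assumption is likewise indispensable;

Spatial coherence: neighboring points in the scene project to neighboring points in the image, and neighboring points share the same velocity. This assumption is specific to the Lucas-Kanade method: the basic optical-flow equation gives only one constraint, while the velocities in x and y are two unknowns. Assuming that the neighborhood of a feature point moves in the same way gives n simultaneous equations for the x and y velocities (n is the number of points in the neighborhood, including the feature point itself).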
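The third assumption turns into a small least-squares problem: each pixel of the neighborhood contributes one equation Ix*u + Iy*v = -It, and the stacked system is solved through its 2x2 normal equations. The sketch below shows a single such solve on given derivative values; `Flow` and `solveLucasKanade` are hypothetical names, and the pyramid and iterative refinement used by a full tracker are omitted.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Flow {
    double u;  // velocity in x
    double v;  // velocity in y
    bool ok;   // false when the system is (near) singular, e.g. the aperture problem
};

// ix, iy: spatial derivatives; it: temporal derivative, one entry per window pixel.
Flow solveLucasKanade(const std::vector<double>& ix,
                      const std::vector<double>& iy,
                      const std::vector<double>& it) {
    assert(ix.size() == iy.size() && iy.size() == it.size());
    double sxx = 0.0, sxy = 0.0, syy = 0.0, sxt = 0.0, syt = 0.0;
    for (std::size_t i = 0; i < ix.size(); ++i) {
        sxx += ix[i] * ix[i];
        sxy += ix[i] * iy[i];
        syy += iy[i] * iy[i];
        sxt += ix[i] * it[i];
        syt += iy[i] * it[i];
    }
    double det = sxx * syy - sxy * sxy;
    if (std::fabs(det) < 1e-12) return {0.0, 0.0, false};
    // Solve [sxx sxy; sxy syy] [u v]^T = -[sxt syt]^T with the explicit 2x2 inverse.
    double u = (-syy * sxt + sxy * syt) / det;
    double v = ( sxy * sxt - sxx * syt) / det;
    return {u, v, true};
}
```

When all gradients in the window point in the same direction the determinant vanishes and no unique velocity exists, which is why well-textured corners are preferred as points to track.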

36. Optical-flow tracking with OpenCV

#include <iostream>

#include <vector>

#include <opencv2/opencv.hpp>

static const int MAX_CORNERS = 1000;

using std::cout;

using std::endl;

using std::vector;

void use_test101()

{

cv::Mat imgA = cv::imread("/home/wz/Documents/test/test_opencv3/example_16-01-imgA.png", cv::IMREAD_GRAYSCALE);

cv::Mat imgB = cv::imread("/home/wz/Documents/test/test_opencv3/example_16-01-imgB.png", cv::IMREAD_GRAYSCALE);

cv::Size img_sz = imgA.size();

int win_size = 10;

cv::Mat imgC = cv::imread("/home/wz/Documents/test/test_opencv3/example_16-01-imgB.png", cv::IMREAD_UNCHANGED);

// The first thing we need to do is get the features

// we want to track.

//

vector< cv::Point2f > cornersA, cornersB;

const int MAX_CORNERS = 500;

cv::goodFeaturesToTrack(

imgA, // Image to track

cornersA, // Vector of detected corners (output)

MAX_CORNERS, // Keep up to this many corners

0.01, // Quality level (percent of maximum)

5, // Min distance between corners

cv::noArray(), // Mask

3, // Block size

false, // true: Harris, false: Shi-Tomasi

0.04 // method specific parameter

);

cv::cornerSubPix(

imgA, // Input image

cornersA, // Vector of corners (input and output)

cv::Size(win_size, win_size), // Half side length of search window

cv::Size(-1, -1), // Half side length of dead zone (-1=none)

cv::TermCriteria(

cv::TermCriteria::MAX_ITER | cv::TermCriteria::EPS,

20, // Maximum number of iterations

0.03 // Minimum change per iteration

)

);

// Call the Lucas Kanade algorithm

//

vector<uchar> features_found;

cv::calcOpticalFlowPyrLK(

imgA, // Previous image

imgB, // Next image

cornersA, // Previous set of corners (from imgA)

cornersB, // Next set of corners (from imgB)

features_found, // Output vector, each is 1 for tracked

cv::noArray(), // Output vector, lists errors (optional)

cv::Size(win_size * 2 + 1, win_size * 2 + 1), // Search window size

5, // Maximum pyramid level to construct

cv::TermCriteria(

cv::TermCriteria::MAX_ITER | cv::TermCriteria::EPS,

20, // Maximum number of iterations

0.3 // Minimum change per iteration

)

);

// Now make some image of what we are looking at:

// Note that if you want to track cornersB further, i.e.

// pass them as input to the next calcOpticalFlowPyrLK,

// you would need to "compress" the vector, i.e., exclude points for which

// features_found[i] == false.

for (int i = 0; i < static_cast<int>(cornersA.size()); ++i) {

if (!features_found[i]) {

continue;

}

line(

imgC, // Draw onto this image

cornersA[i], // Starting here

cornersB[i], // Ending here

cv::Scalar(0, 255, 0), // This color

1, // This many pixels wide

cv::LINE_AA // Draw line in this style

);

}

cv::imshow("ImageA", imgA);

cv::imshow("ImageB", imgB);

cv::imshow("LK Optical Flow Example", imgC);

cv::waitKey(0);

}

37. The Kalman filter and two ways to use it

// Example 17-1. Kalman filter example code

#include <cmath>

#include <iostream>

#include <opencv2/opencv.hpp>

using std::cout;

using std::endl;

#define phi2xy(mat) \

cv::Point(cvRound(img.cols / 2 + img.cols / 3 * cos(mat.at<float>(0))), \

cvRound(img.rows / 2 - img.cols / 3 * sin(mat.at<float>(0))))

void use_test102()

{

// Initialize, create Kalman filter object, window, random number

// generator etc.

//

cv::Mat img(500, 500, CV_8UC3);

cv::KalmanFilter kalman(2, 1, 0);

// state is (phi, delta_phi) - angle and angular velocity

// Initialize with random guess.

//

cv::Mat x_k(2, 1, CV_32F);

randn(x_k, 0.0, 0.1);

// process noise

//

cv::Mat w_k(2, 1, CV_32F);

// measurements, only one parameter for angle

//

cv::Mat z_k = cv::Mat::zeros(1, 1, CV_32F);

// Transition matrix 'F' describes relationship between

// model parameters at step k and at step k+1 (this is

// the "dynamics" in our model).

//

float F[] = {1, 1, 0, 1};

kalman.transitionMatrix = cv::Mat(2, 2, CV_32F, F).clone();

// Initialize other Kalman filter parameters.

//

cv::setIdentity(kalman.measurementMatrix, cv::Scalar(1));

cv::setIdentity(kalman.processNoiseCov, cv::Scalar(1e-5));

cv::setIdentity(kalman.measurementNoiseCov, cv::Scalar(1e-1));

cv::setIdentity(kalman.errorCovPost, cv::Scalar(1));

// choose random initial state

//

randn(kalman.statePost, 0.0, 0.1);

for (;;) {

// predict point position

//

cv::Mat y_k = kalman.predict();

// generate measurement (z_k)

//

cv::randn(z_k, 0.0,

sqrt(static_cast<double>(kalman.measurementNoiseCov.at<float>(0, 0))));

z_k = kalman.measurementMatrix*x_k + z_k;

// plot points (e.g., convert to planar co-ordinates and draw)

//

img = cv::Scalar::all(0);

cv::circle(img, phi2xy(z_k), 4, cv::Scalar(128, 255, 255)); // observed

cv::circle(img, phi2xy(y_k), 4, cv::Scalar(255, 255, 255), 2); // predicted

cv::circle(img, phi2xy(x_k), 4, cv::Scalar(0, 0, 255)); // actual

cv::imshow("Kalman", img);

// adjust Kalman filter state

//

kalman.correct(z_k);

// Apply the transition matrix 'F' (e.g., step time forward)

// and also apply the "process" noise w_k

//

cv::randn(w_k, 0.0, sqrt(static_cast<double>(kalman.processNoiseCov.at<float>(0, 0))));

x_k = kalman.transitionMatrix*x_k + w_k;

// exit if user hits 'Esc'

if ((cv::waitKey(100) & 255) == 27) {

break;

}

}

// return 0;

}
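What `predict()` and `correct()` do for this particular model can be written out by hand. The sketch below is a standard-library re-implementation under the same assumptions as the example (state = angle and angular velocity, transition F = [1 1; 0 1], measurement H = [1 0], process noise q*I, measurement noise r); the struct `Kalman2` is hypothetical and is not how cv::KalmanFilter is implemented internally.

```cpp
#include <cassert>
#include <cmath>

struct Kalman2 {
    double x[2];     // state estimate: [angle, angular velocity]
    double P[2][2];  // estimate error covariance
    double q;        // process noise variance (diagonal of Q)
    double r;        // measurement noise variance

    Kalman2(double processVar, double measurementVar) : q(processVar), r(measurementVar) {
        assert(processVar >= 0.0 && measurementVar > 0.0);
        x[0] = x[1] = 0.0;
        P[0][0] = P[1][1] = 1.0;
        P[0][1] = P[1][0] = 0.0;
    }

    // x = F x,  P = F P F^T + Q  with F = [1 1; 0 1]
    void predict() {
        x[0] += x[1];
        double p00 = P[0][0] + P[0][1] + P[1][0] + P[1][1] + q;
        double p01 = P[0][1] + P[1][1];
        double p10 = P[1][0] + P[1][1];
        double p11 = P[1][1] + q;
        P[0][0] = p00; P[0][1] = p01; P[1][0] = p10; P[1][1] = p11;
    }

    // K = P H^T / (H P H^T + r),  x += K (z - H x),  P = (I - K H) P  with H = [1 0]
    void correct(double z) {
        double s = P[0][0] + r;
        double k0 = P[0][0] / s;
        double k1 = P[1][0] / s;
        double innovation = z - x[0];
        x[0] += k0 * innovation;
        x[1] += k1 * innovation;
        double p00 = (1.0 - k0) * P[0][0];
        double p01 = (1.0 - k0) * P[0][1];
        double p10 = P[1][0] - k1 * P[0][0];
        double p11 = P[1][1] - k1 * P[0][1];
        P[0][0] = p00; P[0][1] = p01; P[1][0] = p10; P[1][1] = p11;
    }
};
```

Calling `predict()` and then `correct(z)` once per frame mirrors the loop in the example; only the angle is measured, and the angular velocity is inferred through the off-diagonal covariance terms.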

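38. OpenCV implementation of optical-flow tracking combined with Kalman filtering

(example to be added)

39. Camera calibration principles

(see https://blog.csdn.net/baidu_38172402/article/details/81949447 )

40. Camera calibration with OpenCV

#include <ctime>

#include <iostream>

#include <vector>

#include <opencv2/opencv.hpp>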

using std::vector;

using std::cout;

using std::cerr;

using std::endl;

void use_test104()

{

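int board_w = 9; // inner corners per row

int board_h = 6; // inner corners per column

int n_boards = 15; // number of board views to collect

int delay = 500; // not used below

float image_sf = 0.5f; // scale factor applied before corner detection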

int board_n = board_w * board_h;

cv::Size board_sz = cv::Size(board_w, board_h);

cv::VideoCapture capture(0);

// ALLOCATE STORAGE

//

vector<vector<cv::Point2f> > image_points;

vector<vector<cv::Point3f> > object_points;

// Capture corner views: loop until we've got n_boards successful

// captures (all corners on the board are found).

//

double last_captured_timestamp = 0;

cv::Size image_size;

while (image_points.size() < (size_t)n_boards) {

cv::Mat image0, image;

capture >> image0;

image_size = image0.size();

cv::resize(image0, image, cv::Size(), image_sf, image_sf, cv::INTER_LINEAR);

// Find the board

//

vector<cv::Point2f> corners;

bool found = cv::findChessboardCorners(image, board_sz, corners);

// Draw it

//

drawChessboardCorners(image, board_sz, corners, found);

// If we got a good board, add it to our data

//

double timestamp = static_cast<double>(clock()) / CLOCKS_PER_SEC;

if (found && timestamp - last_captured_timestamp > 1) {

last_captured_timestamp = timestamp;

image ^= cv::Scalar::all(255);

cv::Mat mcorners(corners); // do not copy the data

mcorners *= (1.0 / image_sf); // scale the corner coordinates

image_points.push_back(corners);

object_points.push_back(vector<cv::Point3f>());

vector<cv::Point3f> &opts = object_points.back();

opts.resize(board_n);

for (int j = 0; j < board_n; j++) {

opts[j] = cv::Point3f(static_cast<float>(j / board_w),

static_cast<float>(j % board_w), 0.0f);

}

cout << "Collected our " << static_cast<int>(image_points.size())

<< " of " << n_boards << " needed chessboard images\n" << endl;

}

cv::imshow("Calibration", image);

cv::waitKey(1);

}

// END COLLECTION WHILE LOOP.

cv::destroyWindow("Calibration");

cout << "\n\n*** CALIBRATING THE CAMERA...\n" << endl;

// CALIBRATE THE CAMERA!

//

cv::Mat intrinsic_matrix, distortion_coeffs;

double err = cv::calibrateCamera(

object_points, image_points, image_size, intrinsic_matrix,

distortion_coeffs, cv::noArray(), cv::noArray(),

cv::CALIB_ZERO_TANGENT_DIST | cv::CALIB_FIX_PRINCIPAL_POINT);

// SAVE THE INTRINSICS AND DISTORTIONS

cout << " *** DONE!\n\nReprojection error is " << err

<< "\nStoring intrinsics.xml\n\n";

cv::FileStorage fs("intrinsics.xml", cv::FileStorage::WRITE);

fs << "image_width" << image_size.width << "image_height" << image_size.height

<< "camera_matrix" << intrinsic_matrix << "distortion_coefficients"

<< distortion_coeffs;

fs.release();

// EXAMPLE OF LOADING THESE MATRICES BACK IN:

fs.open("intrinsics.xml", cv::FileStorage::READ);

cout << "\nimage width: " << static_cast<int>(fs["image_width"]);

cout << "\nimage height: " << static_cast<int>(fs["image_height"]);

cv::Mat intrinsic_matrix_loaded, distortion_coeffs_loaded;

fs["camera_matrix"] >> intrinsic_matrix_loaded;

fs["distortion_coefficients"] >> distortion_coeffs_loaded;

cout << "\nintrinsic matrix:" << intrinsic_matrix_loaded;

cout << "\ndistortion coefficients: " << distortion_coeffs_loaded << endl;

// Build the undistort map which we will use for all

// subsequent frames.

//

cv::Mat map1, map2;

cv::initUndistortRectifyMap(intrinsic_matrix_loaded, distortion_coeffs_loaded,

cv::Mat(), intrinsic_matrix_loaded, image_size,

CV_16SC2, map1, map2);

// Just run the camera to the screen, now showing the raw and

// the undistorted image.

//

for (;;) {

cv::Mat image, image0;

capture >> image0;

if (image0.empty()) {

break;

}

cv::remap(image0, image, map1, map2, cv::INTER_LINEAR,

cv::BORDER_CONSTANT, cv::Scalar());

cv::imshow("Undistorted", image);

if ((cv::waitKey(30) & 255) == 27) {

break;

}

}

}
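To make the two calibration outputs concrete: `intrinsic_matrix` holds fx, fy, cx, cy and `distortion_coeffs` holds the lens distortion terms. The sketch below applies a pinhole model with only the first two radial terms (k1, k2); tangential terms are left out, in line with the CALIB_ZERO_TANGENT_DIST flag used above, and k3 is dropped as a simplifying assumption. `Pixel` and `projectPoint` are hypothetical names.

```cpp
#include <cassert>
#include <cmath>

struct Pixel {
    double u;
    double v;
};

// Project a 3-D point given in the camera frame to pixel coordinates.
Pixel projectPoint(double X, double Y, double Z,
                   double fx, double fy, double cx, double cy,
                   double k1, double k2) {
    assert(Z > 0.0);                 // the point must be in front of the camera
    double x = X / Z;                // normalized image coordinates
    double y = Y / Z;
    double r2 = x * x + y * y;       // squared distance from the optical axis
    double radial = 1.0 + k1 * r2 + k2 * r2 * r2;
    return {fx * x * radial + cx, fy * y * radial + cy};
}
```

Undistortion, as done with `initUndistortRectifyMap` and `remap` above, amounts to inverting this mapping for every pixel of the output image.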

41. ROC curve

(see https://www.jianshu.com/p/2ca96fce7e81)
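As a small illustration of how an ROC curve is built: sort the samples by classifier score, lower the threshold one sample at a time, and record the false-positive and true-positive rates after each step. The sketch assumes distinct scores and labels of 0 or 1; `RocPoint`, `rocCurve` and `areaUnderCurve` are hypothetical names.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <utility>
#include <vector>

struct RocPoint {
    double fpr;  // false-positive rate
    double tpr;  // true-positive rate
};

// samples: (score, label) pairs with label 1 = positive, 0 = negative.
std::vector<RocPoint> rocCurve(std::vector<std::pair<double, int> > samples) {
    std::sort(samples.begin(), samples.end(),
              [](const std::pair<double, int>& a, const std::pair<double, int>& b) {
                  return a.first > b.first;  // highest score first
              });
    int pos = 0, neg = 0;
    for (std::size_t i = 0; i < samples.size(); ++i) {
        if (samples[i].second == 1) ++pos; else ++neg;
    }
    assert(pos > 0 && neg > 0);
    std::vector<RocPoint> curve;
    curve.push_back({0.0, 0.0});
    int tp = 0, fp = 0;
    for (std::size_t i = 0; i < samples.size(); ++i) {
        if (samples[i].second == 1) ++tp; else ++fp;
        curve.push_back({static_cast<double>(fp) / neg, static_cast<double>(tp) / pos});
    }
    return curve;
}

// Area under the curve by the trapezoid rule.
double areaUnderCurve(const std::vector<RocPoint>& curve) {
    double area = 0.0;
    for (std::size_t i = 1; i < curve.size(); ++i) {
        area += (curve[i].fpr - curve[i - 1].fpr) * (curve[i].tpr + curve[i - 1].tpr) / 2.0;
    }
    return area;
}
```

An area of 1 means every positive sample scores higher than every negative one, while 0.5 corresponds to random ranking.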

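42. Classic machine learning algorithms in OpenCV

(1) k-means clustering

(2) Random forest classification

(3) SVM classification

(4) k-nearest-neighbor classification

(5) Boosting classification

(6) Decision tree classification

//Example 21-1. Creating and training a decision tree

#include <cstdlib>

#include <iostream>

#include <opencv2/opencv.hpp>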

using namespace std;

using namespace cv;

void use_test103()

{

// Path to the mushroom data set (hard-coded here).

//

const char *csv_file_name = "/home/wz/Documents/test/test_opencv3/mushroom/agaricus-lepiota.data";

cout << "OpenCV Version: " << CV_VERSION << endl;

// Read in the CSV file that we were given.

//

cv::Ptr<cv::ml::TrainData> data_set =

cv::ml::TrainData::loadFromCSV(csv_file_name, // Input file name

0, // Header lines (ignore this many)

0, // Responses are (start) at this column

1, // Inputs start at this column

"cat[0-22]" // All 23 columns are categorical

);

// Use defaults for delimiter (',') and missch ('?')

// Verify that we read in what we think.

//

int n_samples = data_set->getNSamples();

if (n_samples == 0) {

cerr << "Could not read file: " << csv_file_name << endl;

exit(-1);

} else {

cout << "Read " << n_samples << " samples from " << csv_file_name << endl;

}

// Split the data, so that 90% is train data

//

data_set->setTrainTestSplitRatio(0.90, false);

int n_train_samples = data_set->getNTrainSamples();

int n_test_samples = data_set->getNTestSamples();

cout << "Found " << n_train_samples << " Train Samples, and "

<< n_test_samples << " Test Samples" << endl;

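// Create the classifier. RTrees (random forest) derives from DTrees,

// so the DTrees setters used below apply to it as well.

//

cv::Ptr<cv::ml::RTrees> dtree = cv::ml::RTrees::create();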

// set parameters

//

// These are the parameters from the old mushrooms.cpp code

// Set up priors to penalize "poisonous" 10x as much as "edible"

//

float _priors[] = {1.0, 10.0};

cv::Mat priors(1, 2, CV_32F, _priors);

dtree->setMaxDepth(8);

dtree->setMinSampleCount(10);

dtree->setRegressionAccuracy(0.01f);

dtree->setUseSurrogates(false /* true */);

dtree->setMaxCategories(15);

dtree->setCVFolds(0 /*10*/); // nonzero causes core dump

dtree->setUse1SERule(true);

dtree->setTruncatePrunedTree(true);

// dtree->setPriors( priors );

dtree->setPriors(cv::Mat()); // ignore priors for now...

// Now train the model

// NB: we are only using the "train" part of the data set

//

dtree->train(data_set);

// Having successfully trained the data, we should be able

// to calculate the error on both the training data, as well

// as the test data that we held out.

//

cv::Mat results;

float train_performance = dtree->calcError(data_set,

false, // use train data

results // cv::noArray()

);

std::vector<cv::String> names;

data_set->getNames(names);

Mat flags = data_set->getVarSymbolFlags();

// Compute some statistics on our own:

//

{

cv::Mat expected_responses = data_set->getResponses();

int good = 0, bad = 0, total = 0;

for (int i = 0; i < data_set->getNTrainSamples(); ++i) {

float received = results.at<float>(i, 0);

float expected = expected_responses.at<float>(i, 0);

cv::String r_str = names[(int)received];

cv::String e_str = names[(int)expected];

cout << "Expected: " << e_str << ", got: " << r_str << endl;

if (received == expected)

good++;

else

bad++;

total++;

}

cout << "Correct answers: " <<(float(good)/total) <<" % " << endl;

cout << "Incorrect answers: " << (float(bad) / total) << "%"

<< endl;

}

float test_performance = dtree->calcError(data_set,

true, // use test data

results // cv::noArray()

);

cout << "Performance on training data: " << train_performance << "%" << endl;

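cout << "Performance on test data: " << test_performance << "%" << endl;

}

Run results:

Correct answers: 0.999179 %

Incorrect answers: 0.000820569%

Performance on training data: 0.0820569%

Performance on test data: 0 %

The "Correct answers" and "Incorrect answers" figures are fractions rather than percentages (0.999179 corresponds to 99.9179%), because the code divides by total without multiplying by 100; the two "Performance" figures come from calcError(), which reports the error rate in percent.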

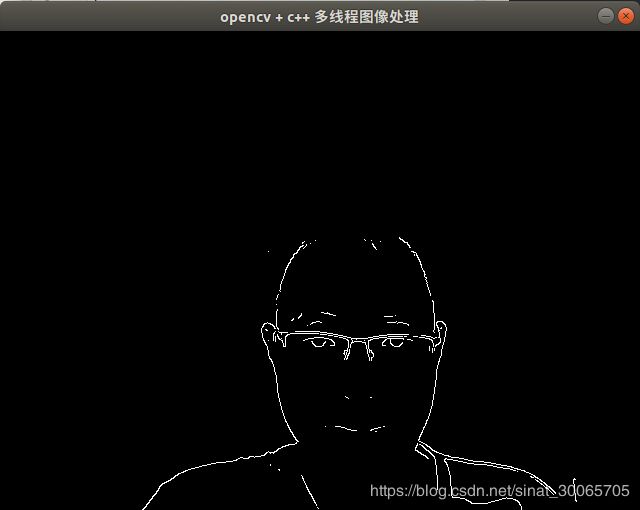
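Item (1) of the list above, k-means clustering, has no listing in these notes. As a rough illustration of the algorithm itself (not of OpenCV's cv::kmeans API), here is a one-dimensional Lloyd iteration with caller-supplied initial centers; `kmeans1d` is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Alternate between assigning points to the nearest center and
// moving each center to the mean of its assigned points.
std::vector<double> kmeans1d(const std::vector<double>& data,
                             std::vector<double> centers,
                             int iterations) {
    assert(!centers.empty());
    for (int it = 0; it < iterations; ++it) {
        std::vector<double> sum(centers.size(), 0.0);
        std::vector<int> count(centers.size(), 0);
        for (std::size_t i = 0; i < data.size(); ++i) {
            std::size_t best = 0;
            for (std::size_t c = 1; c < centers.size(); ++c) {
                if (std::fabs(data[i] - centers[c]) < std::fabs(data[i] - centers[best])) {
                    best = c;
                }
            }
            sum[best] += data[i];
            ++count[best];
        }
        for (std::size_t c = 0; c < centers.size(); ++c) {
            if (count[c] > 0) centers[c] = sum[c] / count[c];  // empty clusters keep their center
        }
    }
    return centers;
}
```

The result depends on the initial centers, which is why practical implementations restart several times or use a seeding scheme such as k-means++.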

43. Multithreaded OpenCV image processing in C++

main.cpp

#include "threadclass.hpp"

#include <iostream>

#include <thread>

#include <unistd.h>

using namespace std;

int main() {

cout << "Main thread started: " << endl;

cv::Mat result;

cv::namedWindow("opencv + c++ 多线程图像处理", cv::WINDOW_AUTOSIZE);

ThreadClass opencvthread;

thread t1(&ThreadClass::thread1, &opencvthread);

sleep(1);

thread t2(&ThreadClass::thread2, &opencvthread);

sleep(1);

thread t3(&ThreadClass::thread3, &opencvthread);

t1.join();

t2.join();

t3.join();

return 0;

}

threadclass.hpp

//

// Created by wz on 2020/6/1.

//

#ifndef THREADCLASS_THREADCLASS_HPP

#define THREADCLASS_THREADCLASS_HPP

#include <opencv2/opencv.hpp>

#include <mutex>

#include <condition_variable>

using namespace std;

class ThreadClass

{

private:

cv::VideoCapture cap;

cv::Mat rawdata;

cv::Mat data;

bool start;

bool readyfor_t2;

mutex mtx;

condition_variable cv;

public:

ThreadClass();

~ThreadClass();

void thread1(); // read frames from the camera

void thread2(); // Canny edge detection

void thread3(); // display the edge-detection result

cv::Mat getresult(); // lets the main thread fetch the result

};

#endif //THREADCLASS_THREADCLASS_HPP
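The class pairs a mutex with a condition variable and a boolean flag so that two threads take strict turns. The same handoff pattern can be isolated in a few lines of standard C++; in this sketch `runHandoff` is a hypothetical function and squaring an integer stands in for processing a frame.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <thread>
#include <vector>

// A producer and a consumer alternate strictly, one item at a time.
std::vector<int> runHandoff(int items) {
    std::mutex mtx;
    std::condition_variable cond;
    bool ready = false;  // true while `slot` holds an item that has not been consumed
    int slot = 0;
    std::vector<int> consumed;

    std::thread producer([&] {
        for (int i = 0; i < items; ++i) {
            std::unique_lock<std::mutex> lock(mtx);
            cond.wait(lock, [&] { return !ready; });  // wait until the slot is free
            slot = i * i;                             // stand-in for "process a frame"
            ready = true;
            cond.notify_all();
        }
    });

    std::thread consumer([&] {
        for (int i = 0; i < items; ++i) {
            std::unique_lock<std::mutex> lock(mtx);
            cond.wait(lock, [&] { return ready; });   // wait until an item is available
            consumed.push_back(slot);
            ready = false;
            cond.notify_all();
        }
    });

    producer.join();
    consumer.join();
    return consumed;
}
```

The predicate form of `wait` re-checks the flag after every wakeup, which has the same effect as the explicit `while` loops around `wait` in the class. Every access to the shared data happens while the mutex is held.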

threadclass.cpp

// Thread synchronization and communication with a condition variable and a mutex

// Created by wz on 2020/6/1.

#include "threadclass.hpp"

#include <cstdio>

#include <iostream>

#include <mutex>

using namespace std;

ThreadClass::ThreadClass()

{

start = false;

readyfor_t2 = true;

}

ThreadClass::~ThreadClass()

{

rawdata.release();

data.release();

cap.release();

}

void ThreadClass::thread1()

{

cout << "Thread 1 started!" << endl;

cap.open(0);

if(cap.isOpened()) {printf("Camera opened successfully!\n");}

for(;;)

{

cap >> rawdata;

start = true;

}

start = false;

}

void ThreadClass::thread2()

{

cout << "Thread 2 started!" << endl;

while(start)

{

unique_lock<mutex> lock(mtx);

while(!readyfor_t2)

{

cv.wait(lock);

}

rawdata.copyTo(data);

cv::cvtColor(data,data,cv::COLOR_BGR2GRAY);

cv::Canny(data,data, 200,200,3);

readyfor_t2 = false;

cv.notify_all();

}

}

void ThreadClass::thread3()

{

cout << "Thread 3 started!" << endl;

while(start)

{

unique_lock<mutex> lock(mtx);

while(readyfor_t2)

{

cv.wait(lock);

}

if(!data.empty()) {

cv::imshow("opencv + c++ 多线程图像处理", data);

cv::waitKey(1);

}

readyfor_t2 = true;

cv.notify_all();

}

}

cv::Mat ThreadClass::getresult()

{

return data;

}

Run results: