An earlier post showed how to build a high-availability cluster with Heartbeat; this one builds a high-availability cluster with corosync.

The lab topology is as follows:

HA1------HA2

IP plan:

VIP:192.168.1.80

HA1:192.168.1.78

HA2:192.168.1.151

I. Environment initialization:

Set the hostname of each HA node, configure /etc/hosts, and set up passwordless access between the two HA nodes:

#vim /etc/hosts //configure on both HA1 and HA2

192.168.1.78 www.luowei.com www

192.168.1.151 wap.luowei.com wap

#hostname www.luowei.com //on HA1

#hostname wap.luowei.com //on HA2
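Both nodes rely on these names, so it can be worth confirming the mapping before going further. The sketch below is only an illustration: `hosts_ip` is a hypothetical helper (not a standard tool) that looks a name up in a hosts-format file, and the demo runs against a temporary copy of the two entries above rather than the real /etc/hosts.

```shell
# hosts_ip NAME [FILE]: hypothetical helper, not a standard tool.
# Prints the IP that a hosts-format file assigns to NAME (canonical name or alias).
hosts_ip() {
    awk -v name="$1" '
        { sub(/#.*/, "") }    # strip comments before looking at fields
        {
            for (i = 2; i <= NF; i++)
                if ($i == name) { print $1; exit }
        }
    ' "${2:-/etc/hosts}"
}

# Demo on a temporary file holding the two entries from this post.
tmp=$(mktemp)
printf '%s\n' \
    '192.168.1.78 www.luowei.com www' \
    '192.168.1.151 wap.luowei.com wap' > "$tmp"

hosts_ip www "$tmp"             # 192.168.1.78
hosts_ip wap.luowei.com "$tmp"  # 192.168.1.151
rm -f "$tmp"
```

On a real node the second argument can be omitted, in which case the helper reads /etc/hosts.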

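Generate an SSH key pair so that the hosts can reach each other without a password:

#ssh-keygen -t rsa //press Enter at every prompt

#ssh-copy-id -i ~/.ssh/id_rsa.pub root@wap //enter the password when prompted; do the same on HA2, replacing root@wap with root@www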

Install httpd on both HA nodes:

#yum install httpd -y

Add a test page on each HA node:

#echo "HA1:www.luowei.com" >/var/www/html/index.html //on HA1

#echo "HA2:wap.luowei.com" >/var/www/html/index.html //on HA2

Make sure httpd does not start automatically on either node, because the cluster will start and stop it:

#chkconfig httpd off

II. Configure the HA nodes and start the service

Install the corosync packages listed below:

[root@www corosync]# ls

cluster-glue-1.0.6-1.6.el5.i386.rpm

cluster-glue-libs-1.0.6-1.6.el5.i386.rpm

corosync-1.2.7-1.1.el5.i386.rpm

corosynclib-1.2.7-1.1.el5.i386.rpm

heartbeat-3.0.3-2.3.el5.i386.rpm

heartbeat-libs-3.0.3-2.3.el5.i386.rpm

libesmtp-1.0.4-5.el5.i386.rpm

openais-1.1.3-1.6.el5.i386.rpm

openaislib-1.1.3-1.6.el5.i386.rpm

pacemaker-1.0.11-1.2.el5.i386.rpm

pacemaker-libs-1.0.11-1.2.el5.i386.rpm

perl-TimeDate-1.16-5.el5.noarch.rpm

resource-agents-1.0.4-1.1.el5.i386.rpm

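Because the packages depend on one another, install them all with yum so the dependencies are resolved:

#yum localinstall *.rpm --nogpgcheck -y

#cd /etc/corosync/

#cp corosync.conf.example corosync.conf

#vim corosync.conf

bindnetaddr: 192.168.1.0 //the network address of the cluster's subnet; my nodes are on the 192.168.1.0 network, so it is 192.168.1.0

secauth: on //enable authentication so that nodes from other clusters cannot join this one

Then add the following: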

service {

ver: 0

name: pacemaker

}

aisexec {

user: root

group: root

}

Save and exit.
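The bindnetaddr value is a network address, that is, the node's IP ANDed with its netmask. The sketch below shows that calculation; it assumes a netmask of 255.255.255.0, which the post does not state, and `network_addr` is a hypothetical helper rather than anything shipped with corosync.

```shell
# network_addr IP NETMASK: hypothetical helper, not part of corosync.
# ANDs each octet of an IPv4 address with the matching netmask octet.
# The body runs in a subshell so the IFS change does not leak out.
network_addr() (
    IFS=.
    set -- $1 $2    # splits both dotted quads into $1..$8
    echo "$(( $1 & $5 )).$(( $2 & $6 )).$(( $3 & $7 )).$(( $4 & $8 ))"
)

# Assumed netmask 255.255.255.0; both nodes must land on the same network.
network_addr 192.168.1.78 255.255.255.0     # 192.168.1.0
network_addr 192.168.1.151 255.255.255.0    # 192.168.1.0
```

If the two nodes gave different results, they would not be on the same subnet and a single bindnetaddr value could not serve both.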

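The cluster nodes authenticate each other, so generate the authkey file:

#corosync-keygen //the generated file is placed in /etc/corosync/

The configuration file writes a log, so create the directory it refers to:

#mkdir /var/log/cluster

HA2 needs the same corosync.conf, the same authkey and the same log directory before corosync is started on it.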

The configuration is complete, so the corosync service can now be started:

[root@www corosync]# /etc/init.d/corosync start

Starting Corosync Cluster Engine (corosync): [ OK ]

It started successfully.

Next, check the logs for any remaining problems.

1. Check that the corosync engine started properly:

[root@www corosync]# grep -e "Corosync Cluster Engine" -e "configuration file" /var/log/messages

Sep 14 03:46:37 station78 corosync[17916]: [MAIN ] Corosync Cluster Engine ('1.2.7'): started and ready to provide service.

Sep 14 03:46:37 station78 corosync[17916]: [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

Sep 14 03:47:44 station78 corosync[17916]: [MAIN ] Corosync Cluster Engine exiting with status 0 at main.c:170.

Sep 14 03:47:44 station78 corosync[18015]: [MAIN ] Corosync Cluster Engine ('1.2.7'): started and ready to provide service.

Sep 14 03:47:44 station78 corosync[18015]: [MAIN ] Successfully read main configuration file '/etc/corosync/corosync.conf'.

2. Check that the initial membership notifications were sent:

[root@www corosync]# grep TOTEM /var/log/messages

Sep 14 03:46:37 station78 corosync[17916]: [TOTEM ] Initializing transport (UDP/IP).

Sep 14 03:46:37 station78 corosync[17916]: [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).

Sep 14 03:46:37 station78 corosync[17916]: [TOTEM ] The network interface [192.168.1.78] is now up.

Sep 14 03:46:38 station78 corosync[17916]: [TOTEM ] Process pause detected for 681 ms, flushing membership messages.

Sep 14 03:46:38 station78 corosync[17916]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

Sep 14 03:47:44 station78 corosync[18015]: [TOTEM ] Initializing transport (UDP/IP).

Sep 14 03:47:44 station78 corosync[18015]: [TOTEM ] Initializing transmit/receive security: libtomcrypt SOBER128/SHA1HMAC (mode 0).

Sep 14 03:47:45 station78 corosync[18015]: [TOTEM ] The network interface [192.168.1.78] is now up.

Sep 14 03:47:45 station78 corosync[18015]: [TOTEM ] Process pause detected for 513 ms, flushing membership messages.

Sep 14 03:47:45 station78 corosync[18015]: [TOTEM ] A processor joined or left the membership and a new membership was formed.

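3. Check whether any errors occurred during startup (the unpack_resources errors are filtered out because they come from STONITH, which is dealt with later):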

[root@www corosync]# grep ERROR: /var/log/messages | grep -v unpack_resources

[root@www corosync]#

4. Check that pacemaker started properly:

[root@www corosync]# grep pcmk_startup /var/log/messages

Sep 14 03:46:38 station78 corosync[17916]: [pcmk ] info: pcmk_startup: CRM: Initialized

Sep 14 03:46:38 station78 corosync[17916]: [pcmk ] Logging: Initialized pcmk_startup

Sep 14 03:46:38 station78 corosync[17916]: [pcmk ] info: pcmk_startup: Maximum core file size is: 4294967295

Sep 14 03:46:38 station78 corosync[17916]: [pcmk ] info: pcmk_startup: Service: 9

Sep 14 03:46:38 station78 corosync[17916]: [pcmk ] info: pcmk_startup: Local hostname: www.luowei.com

Sep 14 03:47:45 station78 corosync[18015]: [pcmk ] info: pcmk_startup: CRM: Initialized

Sep 14 03:47:45 station78 corosync[18015]: [pcmk ] Logging: Initialized pcmk_startup

Sep 14 03:47:45 station78 corosync[18015]: [pcmk ] info: pcmk_startup: Maximum core file size is: 4294967295

Sep 14 03:47:45 station78 corosync[18015]: [pcmk ] info: pcmk_startup: Service: 9

Sep 14 03:47:45 station78 corosync[18015]: [pcmk ] info: pcmk_startup: Local hostname: www.luowei.com
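The four checks above can be bundled into one pass over the log. This is a rough sketch, not something from the original setup: `check_corosync_log` is a hypothetical wrapper, the match strings are taken from the log lines shown above, and the demo runs on a temporary file holding three of those lines instead of the real /var/log/messages.

```shell
# check_corosync_log [LOGFILE]: hypothetical wrapper around the four greps above.
# Prints one line per check and returns non-zero if any check fails.
check_corosync_log() {
    ccl_log=${1:-/var/log/messages}
    ccl_rc=0

    if grep -q "Corosync Cluster Engine.*started and ready" "$ccl_log"; then
        echo "engine: started"
    else
        echo "engine: NOT started"; ccl_rc=1
    fi

    if grep -q "TOTEM.*new membership was formed" "$ccl_log"; then
        echo "membership: formed"
    else
        echo "membership: NOT formed"; ccl_rc=1
    fi

    # Same filter as step 3: unpack_resources errors are ignored.
    if grep "ERROR:" "$ccl_log" | grep -v unpack_resources | grep -q .; then
        echo "errors: found"; ccl_rc=1
    else
        echo "errors: none"
    fi

    if grep -q "pcmk_startup: CRM: Initialized" "$ccl_log"; then
        echo "pacemaker: initialized"
    else
        echo "pacemaker: NOT initialized"; ccl_rc=1
    fi

    return "$ccl_rc"
}

# Demo on a temporary file holding three lines copied from the output above.
tmp=$(mktemp)
cat > "$tmp" <<'EOF'
Sep 14 03:47:44 station78 corosync[18015]: [MAIN ] Corosync Cluster Engine ('1.2.7'): started and ready to provide service.
Sep 14 03:47:45 station78 corosync[18015]: [TOTEM ] A processor joined or left the membership and a new membership was formed.
Sep 14 03:47:45 station78 corosync[18015]: [pcmk ] info: pcmk_startup: CRM: Initialized
EOF
check_corosync_log "$tmp"
rm -f "$tmp"
```

On a node, calling the function with no argument reads /var/log/messages, and the return code makes it usable in a script that should stop when a check fails.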

The output above shows that everything is working. Next, start corosync on HA2:

[root@www corosync]# ssh wap -- '/etc/init.d/corosync start'

Starting Corosync Cluster Engine (corosync): [ OK ]

It started successfully.

Verify:

# crm status

Online: [ www.luowei.com wap.luowei.com ] //both nodes are online
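For a scripted version of this check, the Online line can be counted instead of read by eye. A minimal sketch, assuming the `crm status` output keeps the `Online: [ ... ]` layout shown above; `count_online` is a hypothetical helper, and the demo feeds it that sample line rather than a live cluster.

```shell
# count_online: hypothetical helper. Reads `crm status` text on stdin and prints
# how many node names sit between the brackets of the first "Online:" line.
count_online() {
    awk '
        /^Online:/ {
            n = NF - 3            # fields minus "Online:", "[" and "]"
            if (n < 0) n = 0
            print n
            found = 1
            exit
        }
        END { if (!found) print 0 }
    '
}

# Demo with the line shown above; on a live node this would be: crm status | count_online
printf 'Online: [ www.luowei.com wap.luowei.com ]\n' | count_online   # 2
```

A result of 2 matches the two-node plan; anything lower means a node has not joined.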

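IV. Configure the cluster's working properties

1. Disable STONITH

Pacemaker enables STONITH by default, but this cluster has no STONITH device, so the default configuration cannot be used yet. The following command confirms this: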

[root@www ~]# crm_verify -L

crm_verify[20031]: 2011/09/14_04:36:38 ERROR: unpack_resources: Resource start-up disabled since no STONITH resources have been defined

crm_verify[20031]: 2011/09/14_04:36:38 ERROR: unpack_resources: Either configure some or disable STONITH with the stonith-enabled option

crm_verify[20031]: 2011/09/14_04:36:38 ERROR: unpack_resources: NOTE: Clusters with shared data need STONITH to ensure data integrity

#crm configure property stonith-enabled=false //disable STONITH

Then check the result:

#crm configure show

node wap.luowei.com

node www.luowei.com

property $id="cib-bootstrap-options" \

dc-version="1.0.11-1554a83db0d3c3e546cfd3aaff6af1184f79ee87" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

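stonith-enabled="false" //STONITH is now disabled

2. This is a two-node cluster, so quorum is ignored; otherwise the surviving node would lose quorum when its peer fails and would stop its resources:

#crm configure property no-quorum-policy=ignore

3. Configure the resources. This cluster serves httpd, so two resources are added:

#crm configure primitive webip ocf:heartbeat:IPaddr params ip=192.168.1.80 //add the VIP for httpd

#crm configure primitive webserver lsb:httpd //add the httpd service

[root@www ~]# crm configure show //view the configuration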

node wap.luowei.com

node www.luowei.com

primitive webip ocf:heartbeat:IPaddr \

params ip="192.168.1.80"

primitive webserver lsb:httpd

property $id="cib-bootstrap-options" \

dc-version="1.0.11-1554a83db0d3c3e546cfd3aaff6af1184f79ee87" \

cluster-infrastructure="openais" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

V. Testing:

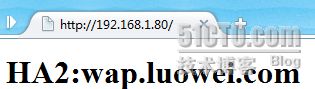

Enter http://192.168.1.80 in a browser and the corresponding page appears:

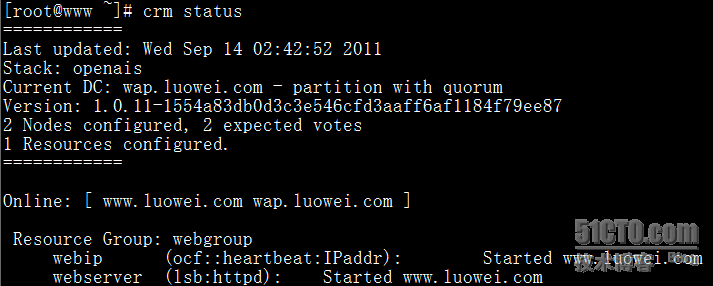

Now look at the state of the resources:

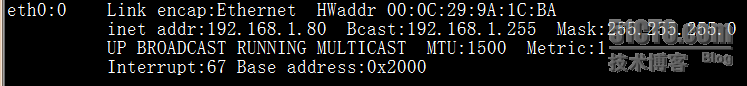

This shows that the resources are currently on HA1. Running ifconfig on HA1 shows an eth0:0 interface, as in the figure below:

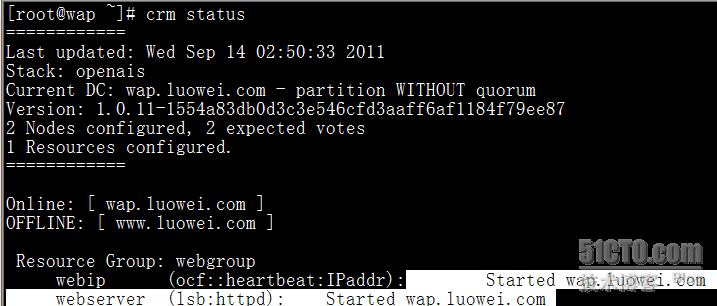

Next, from HA2, I stop corosync on HA1 and then watch how the resources move:

[root@wap ~]# ssh www -- '/etc/init.d/corosync stop'

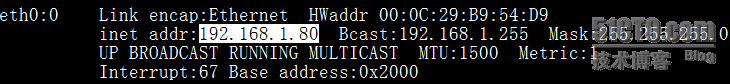

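The resources have moved to HA2, and ifconfig on HA2 shows that the VIP has moved there as well.

Now enter http://192.168.1.80 in the browser again; the page should now come from HA2.

The experiment is complete, and failover works as intended.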
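The browser check after failover can also be done from a shell by polling until the page from the other node shows up. The sketch below is illustrative only: `wait_for` is a hypothetical helper, the demo uses echo in place of a real HTTP request so it runs without a cluster, and the curl line in the comment assumes curl is installed.

```shell
# wait_for TEXT TRIES CMD...: hypothetical helper. Runs CMD once per second until
# its output contains TEXT, giving up after TRIES attempts.
wait_for() {
    wf_text=$1
    wf_tries=$2
    shift 2
    while [ "$wf_tries" -gt 0 ]; do
        if "$@" 2>/dev/null | grep -qF -- "$wf_text"; then
            return 0
        fi
        wf_tries=$((wf_tries - 1))
        # Pause between attempts, but not after the last one.
        if [ "$wf_tries" -gt 0 ]; then sleep 1; fi
    done
    return 1
}

# Offline demo: echo stands in for the HTTP request.
wait_for "HA2:wap.luowei.com" 3 echo "HA2:wap.luowei.com" && echo "served by HA2"

# On the cluster this might be (curl assumed to be installed):
#   wait_for "HA2:wap.luowei.com" 30 curl -s http://192.168.1.80
```

Matching on the test page text works here because each node's index.html names the node that serves it.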