canal series: HA mode configuration

1. Machine preparation

- zookeeper address: 192.168.134.128:2181;

- mysql address: 192.168.134.129:3306;

- machines running canal: 192.168.134.131, 192.168.134.132.

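For Zookeeper installation and configuration, see: Zookeeper series: installation on Linux

For Mysql installation and configuration, see: MySQL series: server installation and configuration

For canal installation and configuration, see: canal series: installation and configuration on Linux (quick start)

For Zookeeper cluster installation and configuration, see: Zookeeper series: cluster installation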

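2. Following the deployment and configuration guide, complete the configuration on each machine separately. The instance name used in this demonstration is example

a. Edit canal.properties and add the zookeeper configuration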

canal.zkServers=192.168.134.128:2181

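canal.instance.global.spring.xml = classpath:spring/default-instance.xml

b. Create the example directory and edit instance.properties

# use 132 on the other machine; the slaveId only has to be unique
canal.instance.mysql.slaveId = 131

canal.instance.master.address = 192.168.134.129:3306

Note: the instance directory must have exactly the same name on both machines, because HA mode manages instances by instance name. Both machines must also use the default-instance.xml configuration.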
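The two constraints above (a unique slaveId per server, the same MySQL master on both) can be checked before startup. The following is a minimal sketch, not part of canal: the class `HaInstanceConfigCheck` and its methods are hypothetical names, and requiring an identical master address on both servers is an assumption drawn from this walkthrough. It relies only on `java.util.Properties`, which treats `#` as a comment only at the start of a line, so a trailing `## ...` remark on the same line as a value becomes part of that value.

```java
import java.io.IOException;
import java.io.StringReader;
import java.util.Properties;

/** Hypothetical helper (not a canal class): sanity-checks two instance.properties files for HA. */
final class HaInstanceConfigCheck {

    /** Parses instance.properties content using the standard java.util.Properties rules. */
    static Properties load(String content) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(content));
        } catch (IOException e) {
            throw new IllegalStateException("unreadable properties content", e);
        }
        return props;
    }

    /** Returns null when the pair looks consistent, otherwise a description of the first problem found. */
    static String check(Properties a, Properties b) {
        String idA = a.getProperty("canal.instance.mysql.slaveId", "").trim();
        String idB = b.getProperty("canal.instance.mysql.slaveId", "").trim();
        // A trailing inline comment ends up inside the value and is caught here.
        if (!idA.matches("\\d+") || !idB.matches("\\d+")) {
            return "slaveId must be a plain number";
        }
        if (idA.equals(idB)) {
            return "slaveId must differ between the two servers";
        }
        String masterA = a.getProperty("canal.instance.master.address", "").trim();
        String masterB = b.getProperty("canal.instance.master.address", "").trim();
        // Assumption of this sketch: both servers read from the same master.
        if (masterA.isEmpty() || !masterA.equals(masterB)) {
            return "both servers must point at the same master address";
        }
        return null;
    }

    public static void main(String[] args) {
        String master = "canal.instance.master.address = 192.168.134.129:3306\n";
        Properties first = load("canal.instance.mysql.slaveId = 131\n" + master);
        Properties second = load("canal.instance.mysql.slaveId = 132\n" + master);
        String problem = check(first, second);
        System.out.println(problem == null ? "configuration looks consistent" : problem);
    }
}
```

In practice the two strings would be read from conf/example/instance.properties on each machine; the sketch uses inline strings only to stay self-contained.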

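3. Start canal on both machines

After startup, check logs/example/example.log. The startup-success log appears on only one of the two machines.

In my case the machine that started successfully is 192.168.134.131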

2017-12-13 16:05:45.421 [main] INFO c.a.o.c.i.spring.support.PropertyPlaceholderConfigurer - Loading properties file from class path resource [example/instance.properties]

2017-12-13 16:05:45.669 [main] WARN org.springframework.beans.TypeConverterDelegate - PropertyEditor [com.sun.beans.editors.EnumEditor] found through deprecated global PropertyEditorManager fallback - consider using a more isolated form of registration, e.g. on the BeanWrapper/BeanFactory!

2017-12-13 16:05:45.878 [main] INFO c.a.otter.canal.instance.spring.CanalInstanceWithSpring - start CannalInstance for 1-example

2017-12-13 16:05:45.932 [main] INFO c.a.otter.canal.instance.spring.CanalInstanceWithSpring - start successful....

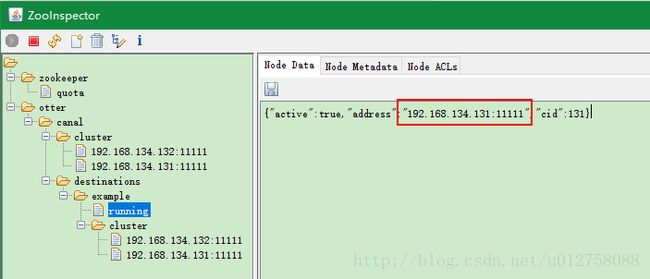

The node information in zookeeper also shows that the node currently working is 192.168.134.131:11111
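To make the lookup concrete, here is a minimal sketch of reading the active server address out of a running-node payload. It assumes the payload has the JSON shape shown later in this article for the graceful switch (`active`, `address`, `cid`); the class `RunningNodeInfo` is a hypothetical name, and the regex-based parsing is a stand-in for a real JSON library, not how canal itself reads the node.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical value holder for the content of a running node (not a canal class). */
final class RunningNodeInfo {
    private static final Pattern ACTIVE = Pattern.compile("\"active\"\\s*:\\s*(true|false)");
    private static final Pattern ADDRESS = Pattern.compile("\"address\"\\s*:\\s*\"([^\"]+)\"");

    final boolean active;
    final String address;

    private RunningNodeInfo(boolean active, String address) {
        this.active = active;
        this.address = address;
    }

    /** Extracts the "active" flag and the "address" field from the payload. */
    static RunningNodeInfo parse(String json) {
        Matcher active = ACTIVE.matcher(json);
        Matcher address = ADDRESS.matcher(json);
        if (!active.find() || !address.find()) {
            throw new IllegalArgumentException("not a running-node payload: " + json);
        }
        return new RunningNodeInfo(Boolean.parseBoolean(active.group(1)), address.group(1));
    }

    /** Host part of "host:port". */
    String host() {
        return address.substring(0, address.lastIndexOf(':'));
    }

    /** Port part of "host:port". */
    int port() {
        return Integer.parseInt(address.substring(address.lastIndexOf(':') + 1));
    }

    public static void main(String[] args) {
        // Example payload modeled on this walkthrough; the real content comes from zookeeper.
        RunningNodeInfo info =
            parse("{\"active\":true,\"address\":\"192.168.134.131:11111\",\"cid\":1}");
        System.out.println(info.host() + " port " + info.port() + " active=" + info.active);
    }
}
```

The payload would normally come from `get /otter/canal/destinations/example/running` in the zookeeper shell.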

4. Connect a client and consume data

For how to use the canal client, see: canal series: canal client, "consuming messages"

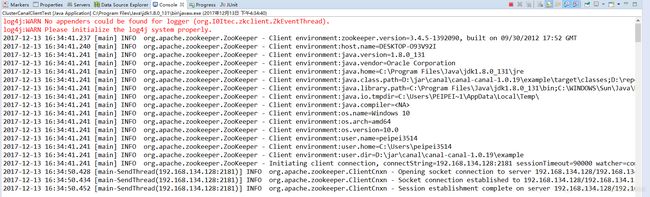

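a. You can specify the zookeeper address and the instance name directly. The canal client automatically reads the running node in zookeeper to find the server currently in service, then connects to it: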

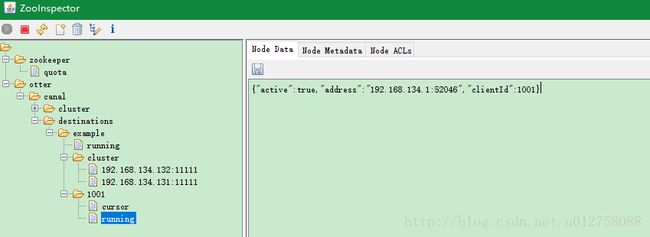

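CanalConnector connector = CanalConnectors.newClusterConnector("192.168.134.128:2181", destination, "", "");

b. Once the connection is established, the canal server records information about the canal client currently at work, such as the client IP and the connection port (as you may have noticed, the canal client can support HA as well)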

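[zk: localhost:2181(CONNECTED) 0] get /otter/canal/destinations/example/1001/running

{"active":true,"address":"192.168.134.1:52046","clientId":1001}

c. After data has been consumed successfully, the canal server records in zookeeper the binlog position of the last successful consumption (the next time you restart the client, consumption resumes from this position)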

[zk: localhost:2181(CONNECTED) 0] get /otter/canal/destinations/example/1001/cursor

{"@type":"com.alibaba.otter.canal.protocol.position.LogPosition","identity":{"slaveId":-1,"sourceAddress":{"address":"192.168.134.129","port":3306}},"postion":{"included":false,"journalName":"mysql-binlog.000004","position":889,"timestamp":1513154103000}}

5. Restart the canal server

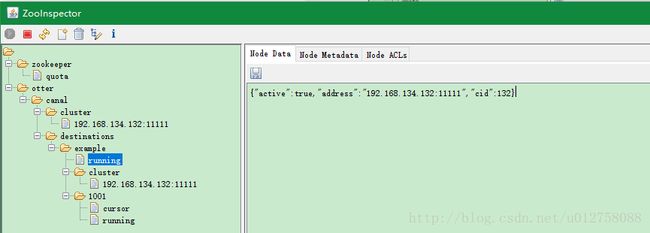

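Stop the canal server on 192.168.134.131, which is the one currently working. 192.168.134.132 then immediately starts the example instance and takes over the data service.

At the same time, the client follows the canal server switch: it reads the latest address from zookeeper, connects to the new canal server, and continues consuming data. The whole process is automatic.

Scenarios that trigger automatic HA switching (valid for both server and client HA modes)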

1. Normal scenarios

a. Shutting down the canal server normally (this releases all resources of the instance, including deleting the running node)

b. Graceful switch

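Operation: update the content of the instance's running node and set "active" to false. When the server that owns the running node receives the change, it releases the running node on its own and gives up control without its JVM exiting, hence gracefully.

{"active":false,"address":"10.20.144.22:11111","cid":1}

2. Abnormal scenarios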

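a. The JVM of the canal server crashes. The running node (an EPHEMERAL node) is released once the corresponding zookeeper session expires.

ps. The session timeout is bounded by 20 times the tickTime defined in the zookeeper configuration file, so with an unmodified zookeeper configuration (tickTime=2000) it is 40 seconds.

b. The network of the canal server suffers a brief interruption, so zookeeper considers the session expired and releases the running node, while the JVM of the canal server has not exited (a suspended state, a very special case).

ps. To protect a canal server in this suspended state and avoid redistributing instances because of a momentary loss of the running node, the following policy is applied: after a canal server sees the running node released, it waits for a delay before trying to acquire it, whereas the original owner of the running node does not wait and therefore gets priority. This keeps resources from being released needlessly during a suspension. The delay currently defaults to 5 seconds, so the protection window for the suspended state is 5 seconds.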
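The two timings above can be combined into a rough estimate of how long a standby server may need to take over after a crash. This is a back-of-the-envelope sketch, not canal code: `FailoverWindow` and its methods are hypothetical names, it assumes zookeeper's default bounds of 2x and 20x tickTime for the negotiated session timeout, and it treats session expiry plus the 5 second delay as an approximate upper bound.

```java
/** Hypothetical arithmetic helper (not a canal or zookeeper class). */
final class FailoverWindow {

    /**
     * Session timeout the zookeeper server would grant, assuming the default
     * bounds of 2 * tickTime (minimum) and 20 * tickTime (maximum).
     */
    static long negotiatedSessionTimeoutMs(long requestedMs, long tickTimeMs) {
        long min = 2 * tickTimeMs;
        long max = 20 * tickTimeMs;
        return Math.max(min, Math.min(max, requestedMs));
    }

    /**
     * Rough upper bound for takeover after a crash: the ephemeral running node
     * disappears when the session expires, then the other server waits for the
     * delay before acquiring it.
     */
    static long worstCaseTakeoverMs(long sessionTimeoutMs, long acquireDelayMs) {
        return sessionTimeoutMs + acquireDelayMs;
    }

    public static void main(String[] args) {
        long tickTimeMs = 2000; // tickTime=2000, as in an unmodified zookeeper configuration
        long session = negotiatedSessionTimeoutMs(60_000, tickTimeMs); // capped at 40000 ms
        long takeover = worstCaseTakeoverMs(session, 5_000);           // 45000 ms
        System.out.println("session timeout ms: " + session + ", takeover bound ms: " + takeover);
    }
}
```

A shorter requested session timeout shortens crash detection, at the cost of more false expirations during brief network interruptions.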

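mysql multi-node parsing configuration (automatic switching of the parse source)

1. mysql machine preparation

Prepare two mysql machines configured in M-M (master-master) mode, for example with the addresses 192.168.134.129:3306 and 192.168.134.130:3306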

[mysqld]

xxxxx ## other regular master/slave settings

log_slave_updates=true ## this setting must be enabled

2. canal instance configuration

# position info

canal.instance.master.address = 192.168.134.129:3306

canal.instance.master.journal.name =

canal.instance.master.position =

canal.instance.master.timestamp =

canal.instance.standby.address = 192.168.134.130:3306

canal.instance.standby.journal.name =

canal.instance.standby.position =

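canal.instance.standby.timestamp =

3. Detecting config

# heartbeat checking must be enabled
canal.instance.detecting.enable = true

# heartbeat SQL; a query such as select 1 can be used instead
canal.instance.detecting.sql = insert into retl.xdual values(1,now()) on duplicate key update x=now()

# heartbeat check interval
canal.instance.detecting.interval.time = 3

# failure-count threshold for the heartbeat check; once it is reached, the mysql connection is switched, for example to the standby machine, and binlog consumption continues there
canal.instance.detecting.retry.threshold = 3

# whether to switch between master and standby once the heartbeat failure threshold is reached
canal.instance.detecting.heartbeatHaEnable = true

Note: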

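- Fill in the master/standby addresses and their respective starting binlog positions. The configuration currently supports only one standby;

- Condition for a master/standby switch: (heartbeatHaEnable = true) && (failure count >= retry.threshold);

- Why heartbeatHaEnable is a separate switch: the heartbeat SQL is sometimes enabled only so that the client can check whether the canal server is working. If the heartbeat statements arrive on schedule, the whole canal server is working normally.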

4. Start & test

For example, stop mysql on one machine with /etc/init.d/mysql stop. After roughly interval.time * retry.threshold, canal switches to the standby machine
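The switch condition and the timing described above can be sketched as a small failure counter. This is an illustration of the rule as stated in this article, not canal's implementation: `HeartbeatSwitchSketch` is a hypothetical class, and resetting the counter after a successful heartbeat (so that only consecutive failures count) is an assumption of the sketch.

```java
/** Hypothetical model of the heartbeat rule described in this article (not canal code). */
final class HeartbeatSwitchSketch {
    private final boolean heartbeatHaEnable;
    private final int retryThreshold;
    private int failures;

    HeartbeatSwitchSketch(boolean heartbeatHaEnable, int retryThreshold) {
        this.heartbeatHaEnable = heartbeatHaEnable;
        this.retryThreshold = retryThreshold;
    }

    /** Records one heartbeat result; returns true when a master/standby switch should happen. */
    boolean onHeartbeat(boolean success) {
        if (success) {
            failures = 0; // assumption: only consecutive failures count
            return false;
        }
        failures++;
        // Condition from the article: (heartbeatHaEnable = true) && (failure count >= retry.threshold)
        if (heartbeatHaEnable && failures >= retryThreshold) {
            failures = 0;
            return true;
        }
        return false;
    }

    /** Approximate seconds until the switch: interval.time * retry.threshold. */
    static int approxSecondsToSwitch(int intervalSeconds, int retryThreshold) {
        return intervalSeconds * retryThreshold;
    }

    public static void main(String[] args) {
        HeartbeatSwitchSketch detector = new HeartbeatSwitchSketch(true, 3);
        for (int attempt = 1; attempt <= 3; attempt++) {
            System.out.println("failure " + attempt + " -> switch: " + detector.onHeartbeat(false));
        }
        System.out.println("approx seconds: " + approxSecondsToSwitch(3, 3));
    }
}
```

With the values used in this article (interval.time = 3, retry.threshold = 3) the estimate comes to about 9 seconds.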