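Concepts:

Puppet is an open-source automated deployment tool written in Ruby.

It is testable and gives a strongly consistent deployment experience.

A rich set of resource types covers the full lifecycle of system configuration.

Built-in programming constructs let you implement custom tasks.

It provides resource references and a notification mechanism.

The Puppet server defines the resource types each client node needs. After a client joins the deployment environment, it identifies itself by hostname (among other details) and requests its resources from the server over HTTPS. The server compiles a catalog and returns it to the client. The client uses the catalog to define and apply its system resources, then reports the result back to the server.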

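Planning:

172.16.43.200 master.king.com (puppet server preinstalled)

172.16.43.1 slave1.king.com (puppet agent preinstalled, nothing else)

Following the architecture from the earlier parts, this time we demonstrate the automated installation of slave1.king.com. The installation and configuration for the other nodes are uploaded as attachments.

Deployment items for slave1.king.com:

-> puppet agent package (preinstalled by cobbler)

-> hosts file (automated by puppet)

-> bind package and configuration files (automated by puppet)

-> haproxy package and configuration files (automated by puppet)

-> keepalived package and configuration files

Local testing phase (master.king.com)

Write the modules on the master node, then move on to the master/slave configuration once they pass testing.

# Install puppet

yum -y install puppet-2.7.23-1.el6.noarch.rpm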

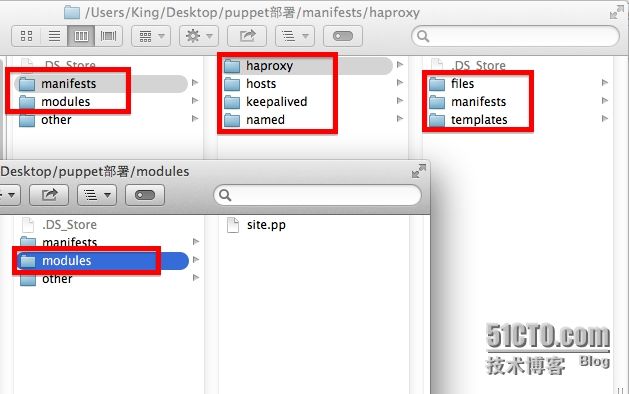

1. Create the following directories under /etc/puppet

mkdir -pv /etc/puppet/modules/{haproxy,hosts,keepalived,named}/{files,manifests,templates}

mkdir -pv /etc/puppet/manifests

2. Edit /etc/puppet/modules/hosts/files/hosts (hosts)

# To deploy the architecture from the previous four parts, every node has to be automated, so each configuration file and its corresponding software must be installed and configured

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

172.16.43.1 slave1.king.com

172.16.43.2 slave2.king.com

172.16.43.3 slave3.king.com

172.16.43.4 slave4.king.com

172.16.43.3 imgs1.king.com

172.16.43.3 imgs2.king.com

172.16.43.3 text1.king.com

172.16.43.3 text2.king.com

172.16.43.3 dynamic1.king.com

172.16.43.4 dynamic2.king.com

172.16.43.200 master.king.com

172.16.43.6 server.king.com

172.16.43.5 proxy.king.com

Edit /etc/puppet/modules/hosts/manifests/init.pp, which is the module definition for hosts

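# init.pp for hosts

# Defines the class for the module named hosts

# hosts needs no package; in the master/slave model the hosts file is simply copied to a path on the slave

# so the only resource type defined here is file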

class hosts {

file { "hosts" :

ensure => file,

source => "puppet:///modules/hosts/hosts",

path => "/etc/hosts"

}

}
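The class above does not state who owns /etc/hosts or what its permissions are. A minimal sketch of a stricter variant follows, assuming you want those pinned explicitly. The owner, group, and mode values are assumptions and do not come from the original module.

```puppet
# Sketch of a stricter variant of the hosts class (attribute values are assumptions)
class hosts {
  file { "hosts":
    ensure => file,
    path   => "/etc/hosts",
    source => "puppet:///modules/hosts/hosts",
    owner  => "root",   # assumed owner
    group  => "root",   # assumed group
    mode   => "0644",   # assumed mode: readable by all, writable by root only
  }
}
```

The puppet:///modules/hosts/hosts URI is resolved against the module path, so the source file has to sit in the files directory of the hosts module.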

3. The remaining modules follow the same pattern as step 2: each consists of configuration files plus a module definition file (named)

// named.conf

options {

// listen-on port 53 { 127.0.0.1; };

// listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

// memstatistics-file "/var/named/data/named_mem_stats.txt";

allow-query { 172.16.0.0/16; };

recursion yes;

dnssec-enable yes;

dnssec-validation yes;

dnssec-lookaside auto;

/* Path to ISC DLV key */

bindkeys-file "/etc/named.iscdlv.key";

managed-keys-directory "/var/named/dynamic";

};

logging {

channel default_debug {

file "data/named.run";

severity dynamic;

};

};

zone "." IN {

type hint;

file "named.ca";

};

include "/etc/named.rfc1912.zones";

// named.rfc1912.zones:

zone "localhost.localdomain" IN {

type master;

file "named.localhost";

allow-update { none; };

};

zone "localhost" IN {

type master;

file "named.localhost";

allow-update { none; };

};

zone "1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.ip6.arpa" IN {

type master;

file "named.loopback";

allow-update { none; };

};

zone "1.0.0.127.in-addr.arpa" IN {

type master;

file "named.loopback";

allow-update { none; };

};

zone "0.in-addr.arpa" IN {

type master;

file "named.empty";

allow-update { none; };

};

zone "king.com" IN {

type master;

file "king.com.zone";

};

# king.com.zone

$TTL 600

@ IN SOA dns.king.com. adminmail.king.com. (

  2014050401

  1H

  5M

  3D

  12H )

  IN NS dns

dns IN A 172.16.43.1

www IN A 172.16.43.88

www IN A 172.16.43.188

# init.pp for named

# Defines the named module; the DNS package is called bind, so the package resource installs bind from the yum repository

class named {

package { "bind":

ensure => present,

name => "bind",

provider => yum,

}

# Before named can run, its zone files must be in place with the right owner and group

file { "/etc/named.conf":

ensure => file,

require => Package["bind"],

source => "puppet:///modules/named/named.conf",

owner => named,

group => named,

}

file { "/etc/named.rfc1912.zones":

ensure => file,

require => Package["bind"],

source => "puppet:///modules/named/named.rfc1912.zones",

owner => named,

group => named,

}

file { "/var/named/king.com.zone":

ensure => file,

require => Package["bind"],

source => "puppet:///modules/named/king.com.zone",

owner => named,

group => named,

}

# Once the package resource has installed bind, the service must be started and enabled at boot; this depends on the bind package already being installed

service { "named":

ensure => true,

require => Package["bind"],

enable => true,

}

}
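The named class starts the service once the package is present, but nothing restarts it when one of the managed files changes later. One way to wire that up is the subscribe metaparameter on the service. This is a sketch, not the author's method.

```puppet
# Sketch: replacement for the service resource inside class named.
# subscribe makes Puppet restart the service whenever a listed file changes,
# and it also orders the service after those files.
service { "named":
  ensure    => running,
  enable    => true,
  require   => Package["bind"],
  subscribe => [
    File["/etc/named.conf"],
    File["/etc/named.rfc1912.zones"],
    File["/var/named/king.com.zone"],
  ],
}
```

This is the notification mechanism in its simplest form: resources are referenced by type and title (File["..."]), and a change to one triggers a refresh of another.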

4. haproxy configuration

# /etc/haproxy/haproxy.cfg

global

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 30000

listen stats

mode http

bind 0.0.0.0:8080

stats enable

stats hide-version

stats uri /haproxyadmin?stats

stats realm Haproxy\ Statistics

stats auth admin:admin

stats admin if TRUE

frontend http-in

bind *:80

mode http

log global

option httpclose

option logasap

option dontlognull

capture request header Host len 20

capture request header Referer len 60

acl img_static path_beg -i /images /imgs

acl img_static path_end -i .jpg .jpeg .gif .png

acl text_static path_beg -i / /static /js /css

acl text_static path_end -i .html .shtml .js .css

use_backend img_servers if img_static

use_backend text_servers if text_static

default_backend dynamic_servers

backend img_servers

balance roundrobin

server imgsrv1 imgs1.king.com:6081 check maxconn 4000

server imgsrv2 imgs2.king.com:6081 check maxconn 4000

backend text_servers

balance roundrobin

server textsrv1 text1.king.com:6081 check maxconn 4000

server textsrv2 text2.king.com:6081 check maxconn 4000

backend dynamic_servers

balance roundrobin

server websrv1 dynamic1.king.com:80 check maxconn 1000

server websrv2 dynamic2.king.com:80 check maxconn 1000

# init.pp for haproxy

class haproxy {

package { "haproxy":

ensure => present,

name => "haproxy",

provider => yum,

}

file { "/etc/haproxy/haproxy.cfg":

ensure => file,

require => Package["haproxy"],

source => "puppet:///modules/haproxy/haproxy.cfg",

}

service { "haproxy":

ensure => true,

require => Package["haproxy"],

enable => true,

}

}
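The same ordering (package, then configuration, then service) can also be written with chaining arrows, where -> means "apply before" and ~> additionally sends a refresh. The sketch below assumes the three resources are declared without their require attributes. It is an alternative form, not what the original module uses.

```puppet
# Sketch: class haproxy rewritten with chaining arrows instead of require
class haproxy {
  package { "haproxy":
    ensure => present,
  }

  file { "/etc/haproxy/haproxy.cfg":
    ensure => file,
    source => "puppet:///modules/haproxy/haproxy.cfg",
  }

  service { "haproxy":
    ensure => running,
    enable => true,
  }

  # Install the package first, then the config; a config change refreshes the service
  Package["haproxy"] -> File["/etc/haproxy/haproxy.cfg"] ~> Service["haproxy"]
}
```

Keeping the relationships on one line makes the whole dependency chain visible at a glance, which helps as a module grows.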

5. keepalived configuration

# /etc/keepalived/keepalived.conf

global_defs {

notification_email {

root@localhost

}

notification_email_from keepadmin@localhost

smtp_connect_timeout 3

smtp_server 127.0.0.1

router_id LVS_DEVEL_KING

}

vrrp_script chk_haproxy {

script "/etc/keepalived/chk_haproxy.sh"

interval 2

weight 2

}

vrrp_instance VI_1 {

interface eth0

state MASTER # BACKUP for slave routers

priority 100 # 99 for BACKUP

virtual_router_id 173

garp_master_delay 1

authentication {

auth_type PASS

auth_pass king1

}

track_interface {

eth0

}

virtual_ipaddress {

172.16.43.88/16 dev eth0

}

track_script {

chk_haproxy

}

}

vrrp_instance VI_2 {

interface eth0

state BACKUP # MASTER for slave routers

priority 99 # 100 for MASTER

virtual_router_id 174

garp_master_delay 1

authentication {

auth_type PASS

auth_pass king2

}

track_interface {

eth0

}

virtual_ipaddress {

172.16.43.188/16 dev eth0

}

}

# init.pp for keepalived

class keepalived {

package { "keepalived":

ensure => present,

name => "keepalived",

provider => yum,

}

file { "/etc/keepalived/keepalived.conf":

ensure => file,

require => Package["keepalived"],

source => "puppet:///modules/keepalived/keepalived.conf",

notify => Exec["reload"],

}

service { "keepalived":

ensure => true,

require => Package["keepalived"],

enable => true,

}

exec { "reload":

command => "/sbin/service keepalived reload",

subscribe => File["/etc/keepalived/keepalived.conf"],

path => "/bin:/sbin:/usr/bin:/usr/sbin",

refreshonly => true,

require => Service["keepalived"],

}

}
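The keepalived.conf above calls /etc/keepalived/chk_haproxy.sh through vrrp_script, but the module as shown does not ship that script. Below is a sketch of a file resource that could be added inside class keepalived. The source path assumes a chk_haproxy.sh placed in the module's files directory, which the original does not show.

```puppet
# Sketch: add inside class keepalived (the script under files/ is an assumption)
file { "/etc/keepalived/chk_haproxy.sh":
  ensure  => file,
  owner   => "root",
  group   => "root",
  mode    => "0755",                 # the check script must be executable
  source  => "puppet:///modules/keepalived/chk_haproxy.sh",
  require => Package["keepalived"],  # /etc/keepalived exists once the package is installed
  before  => Service["keepalived"],  # script in place before the daemon starts
}
```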

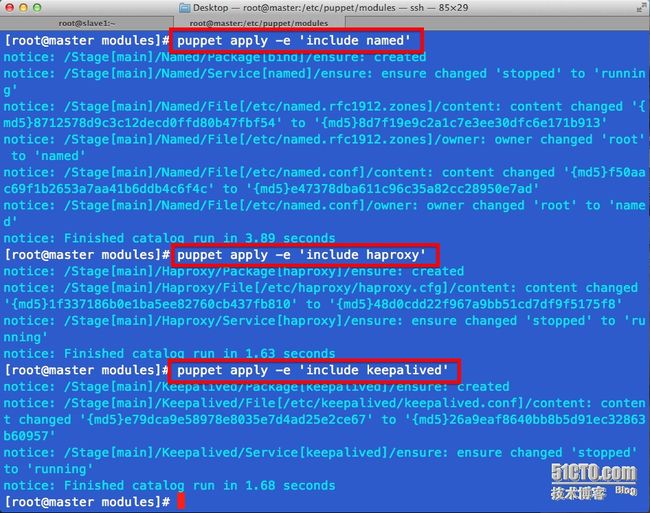

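6. With everything defined, move on to testing

# At first, none of the packages are installed

# Use puppet apply -e on the master node to compare /etc/hosts before and after applying the hosts module

# Test the remaining modules in turn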

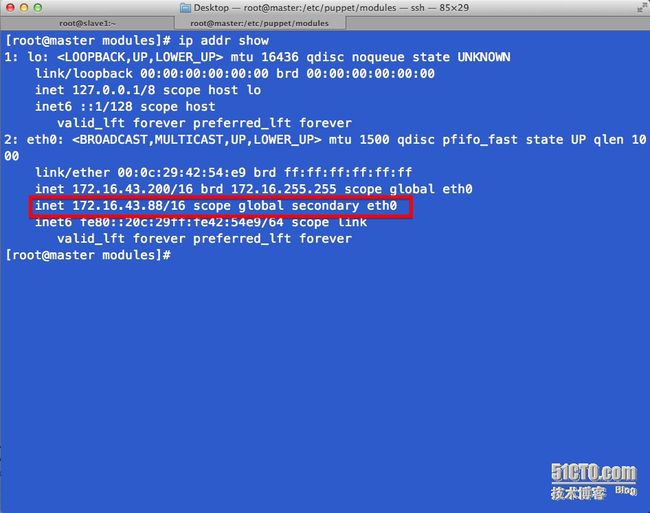

# Check the result of starting keepalived: the VRRP addresses defined in the configuration file are now present

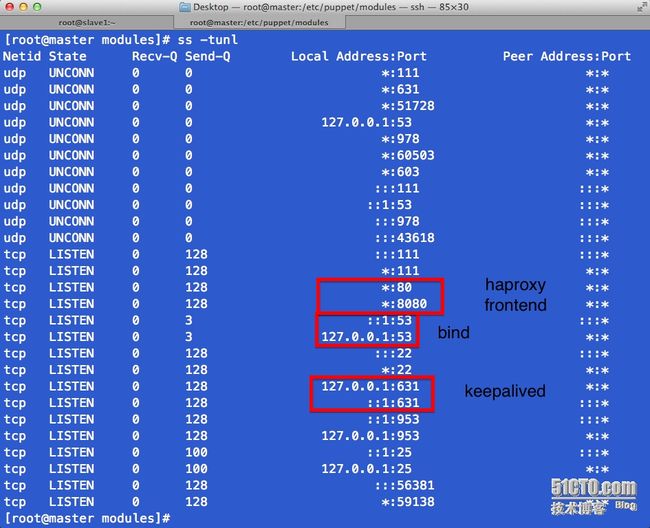

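# Check that all service ports are listening

# With local testing clean, wipe the earlier contents of slave1.king.com and let puppet deploy the software and configuration that node needs

Master/slave testing

puppet master:

1. Install and configure puppet-server, then start the service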

yum -y install puppet-server-2.7.23-1.el6.noarch.rpm

puppet master --genconfig >> /etc/puppet/puppet.conf

service puppetmaster start

puppet agent:

1. Install and configure the puppet client, then start the service

yum -y install puppet-2.7.23-1.el6.noarch.rpm

vim /etc/puppet/puppet.conf, and add server=master.king.com under [agent]

service puppet start

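# Once the master and slave services are running, slave1 sends a request, and the master looks in site.pp to find which modules slave1.king.com should get

# /etc/puppet/manifests/site.pp

# This says slave1 will get the named, keepalived, haproxy, and hosts modules

# The modules written earlier, and the nodes defined here, can also use inheritance, much like classes in object-oriented programming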

node 'slave1.king.com' {

include named

include keepalived

include haproxy

include hosts

}
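The inheritance mentioned in the comments can be sketched for nodes as follows, as an alternative to the definition above. The node name basenode and the way the modules are split between the two nodes are assumptions for illustration only.

```puppet
# Sketch: shared settings live in a base node (the name basenode is hypothetical)
node basenode {
  include hosts
}

# slave1 inherits the hosts module from basenode and adds its own modules
node 'slave1.king.com' inherits basenode {
  include named
  include keepalived
  include haproxy
}
```

Node inheritance works in the 2.7 series installed here but was removed in later major Puppet releases, so treat this as specific to this version.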

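# After the master/slave services start, the agent sends its request to the server named in its configuration file; at this point the server must sign the agent's certificate request

# so that communication between them is secured

Signing certificates:

master:

puppet cert list # list all pending client certificate requests

puppet cert sign --all # sign all requests, after which clients and server can communicate

# After a short wait, these modules are deployed automatically on slave1.king.com; this can also be triggered manually

# On slave1.king.com

puppet agent -t

# This manually requests the catalog from the server and applies it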

# VRRP information after deployment: only the slave1 node has been deployed so far, so it holds both VRRP IP addresses; see the keepalived configuration file

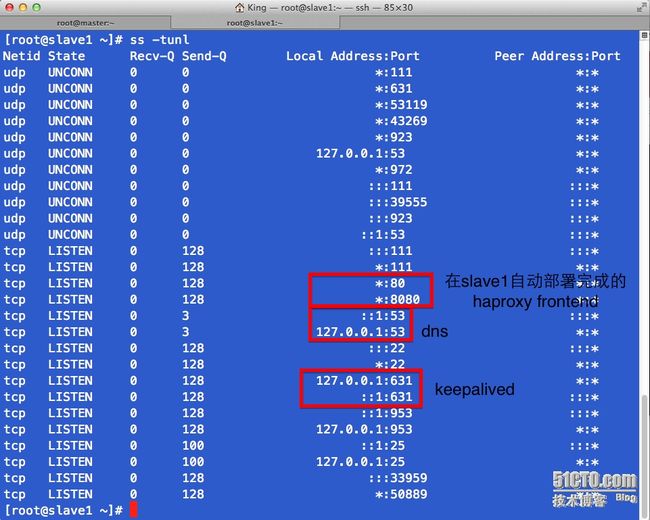

# Service port information on slave1 after the automated deployment