Grouped Convolution in PyTorch

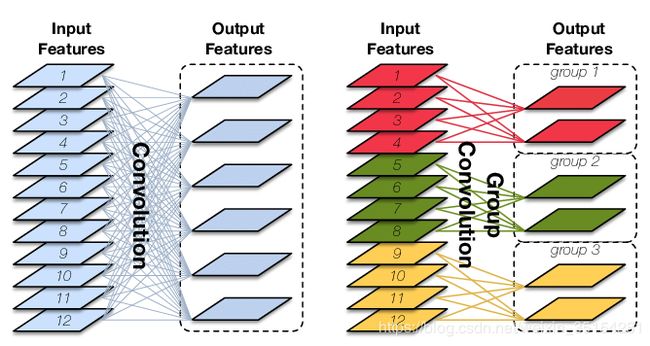

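Grouped convolution

Recommended: read the reference links at the bottom of this post first.

Standard convolution

Note: a standard convolution is the special case of grouped convolution in which the number of groups is 1.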

    import torch.nn as nn
    from torchsummary import summary  # assumed source of summary(); the post does not show its imports


    class Conv(nn.Module):
        def __init__(self, in_ch, out_ch, groups):
            super(Conv, self).__init__()
            # With groups=1 this is an ordinary 3x3 convolution
            self.conv = nn.Conv2d(
                in_channels=in_ch,
                out_channels=out_ch,
                kernel_size=3,
                stride=1,
                padding=1,
                groups=groups
            )

        def forward(self, x):
            out = self.conv(x)
            return out


    if __name__ == "__main__":
        net = Conv(64, 256, 1)
        summary(net, (64, 224, 224), 1, "cpu")

Output:

    ----------------------------------------------------------------
            Layer (type)               Output Shape         Param #
    ================================================================
                Conv2d-1         [1, 256, 224, 224]         147,712
    ================================================================
    Total params: 147,712
    Trainable params: 147,712
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 12.25
    Forward/backward pass size (MB): 98.00
    Params size (MB): 0.56
    Estimated Total Size (MB): 110.81
    ----------------------------------------------------------------
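
The memory figures in the summary can be reproduced by hand. The following is a minimal sketch, assuming float32 tensors (4 bytes per element), megabytes counted as 1024² bytes, and a forward/backward estimate that counts every layer output twice (once for activations, once for gradients). These assumptions are inferred from the numbers above rather than from the summary tool's documentation, and `size_mb` is a hypothetical helper.

```python
from math import prod

BYTES_PER_FLOAT32 = 4   # assumption: all tensors are float32
MIB = 1024 ** 2         # assumption: "MB" means 1024 * 1024 bytes


def size_mb(num_elements):
    """Size in MB of a float32 tensor with the given number of elements."""
    return num_elements * BYTES_PER_FLOAT32 / MIB


input_shape = (1, 64, 224, 224)              # batch, channels, height, width
layer_output_shapes = [(1, 256, 224, 224)]   # one entry per layer in the summary
total_params = 147_712

input_mb = size_mb(prod(input_shape))
# Factor 2: each layer output is counted once for forward and once for backward
fwd_bwd_mb = 2 * sum(size_mb(prod(shape)) for shape in layer_output_shapes)
params_mb = size_mb(total_params)
total_mb = input_mb + fwd_bwd_mb + params_mb

print(f"{input_mb:.2f} {fwd_bwd_mb:.2f} {params_mb:.2f} {total_mb:.2f}")
# prints: 12.25 98.00 0.56 110.81
```

Most of the estimated total comes from activations, not parameters, which is why changing the number of groups barely moves the total size even when the parameter count drops sharply.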

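Grouped convolution

Note: the number of groups can be set by hand. In theory, the parameter count drops to 1/G of the standard convolution's, where G is the number of groups.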

    import torch.nn as nn
    from torchsummary import summary  # assumed source of summary(); the post does not show its imports


    class Group(nn.Module):
        def __init__(self, in_ch, out_ch, groups):
            super(Group, self).__init__()
            # in_ch and out_ch must both be divisible by groups
            self.conv = nn.Conv2d(
                in_channels=in_ch,
                out_channels=out_ch,
                kernel_size=3,
                stride=1,
                padding=1,
                groups=groups
            )

        def forward(self, x):
            out = self.conv(x)
            return out


    if __name__ == "__main__":
        net = Group(64, 256, 8)
        summary(net, (64, 224, 224), 1, "cpu")

Output:

    ----------------------------------------------------------------
            Layer (type)               Output Shape         Param #
    ================================================================
                Conv2d-1         [1, 256, 224, 224]          18,688
    ================================================================
    Total params: 18,688
    Trainable params: 18,688
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 12.25
    Forward/backward pass size (MB): 98.00
    Params size (MB): 0.07
    Estimated Total Size (MB): 110.32
    ----------------------------------------------------------------

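Parameter reduction

    # In theory the parameter count drops to 1/G of the original
    print("Theoretical: {}\nActual: {}".format(1/8, 18688/147712))

Output:

    Theoretical: 0.125
    Actual: 0.1265164644714038

The actual ratio is slightly above 1/G because the 256 bias parameters are not divided by G; only the weights are.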
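
The two parameter counts can also be derived without building a model. The sketch below is a plain-Python illustration, assuming a square kernel; `conv2d_param_count` is a hypothetical helper, not a PyTorch function. It counts `out_ch * (in_ch / groups) * k * k` weights plus one bias per output channel.

```python
def conv2d_param_count(in_ch, out_ch, kernel_size, groups=1, bias=True):
    """Parameter count of a square-kernel 2D convolution.

    Each output channel only sees in_ch // groups input channels,
    so the weights shrink by a factor of groups; the biases do not.
    """
    if in_ch % groups or out_ch % groups:
        raise ValueError("in_ch and out_ch must both be divisible by groups")
    weights = out_ch * (in_ch // groups) * kernel_size * kernel_size
    biases = out_ch if bias else 0
    return weights + biases


standard = conv2d_param_count(64, 256, 3, groups=1)   # 147456 weights + 256 biases
grouped = conv2d_param_count(64, 256, 3, groups=8)    # 18432 weights + 256 biases

ratio_with_bias = grouped / standard
ratio_weights_only = (
    conv2d_param_count(64, 256, 3, groups=8, bias=False)
    / conv2d_param_count(64, 256, 3, groups=1, bias=False)
)

print(standard, grouped)      # prints: 147712 18688
print(ratio_weights_only)     # prints: 0.125
```

With the bias excluded, the ratio is exactly 1/8, matching the theoretical value for G = 8.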

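Depthwise separable convolution

Note: the depthwise step sets the number of groups equal to the number of input channels; a 1×1 pointwise convolution then produces the requested number of output channels.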

    import torch.nn as nn
    from torchsummary import summary  # assumed source of summary(); the post does not show its imports


    class DepthwiseSeparableConv(nn.Module):
        def __init__(self, in_ch, out_ch):
            super(DepthwiseSeparableConv, self).__init__()
            # Depthwise step: the number of groups equals the number of channels
            self.depth_conv = nn.Conv2d(
                in_channels=in_ch,
                out_channels=in_ch,
                kernel_size=3,
                stride=1,
                padding=1,
                groups=in_ch
            )
            # Pointwise step: a 1x1 convolution produces the requested output channels
            self.point_conv = nn.Conv2d(
                in_channels=in_ch,
                out_channels=out_ch,
                kernel_size=1,
                stride=1,
                padding=0,
                groups=1
            )

        def forward(self, x):
            out = self.depth_conv(x)
            out = self.point_conv(out)
            return out


    if __name__ == "__main__":
        net = DepthwiseSeparableConv(64, 256)
        summary(net, (64, 224, 224), 1, "cpu")

Output:

    ----------------------------------------------------------------
            Layer (type)               Output Shape         Param #
    ================================================================
                Conv2d-1          [1, 64, 224, 224]             640
                Conv2d-2         [1, 256, 224, 224]          16,640
    ================================================================
    Total params: 17,280
    Trainable params: 17,280
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 12.25
    Forward/backward pass size (MB): 122.50
    Params size (MB): 0.07
    Estimated Total Size (MB): 134.82
    ----------------------------------------------------------------
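
The totals above can be cross-checked directly against PyTorch without a summary tool. This is a minimal sketch, assuming only `torch` is installed; `count_params` is a hypothetical helper that sums `numel()` over trainable parameters, and the layers mirror the 64-to-256-channel, 3×3 configuration used in this post.

```python
import torch.nn as nn


def count_params(module):
    """Number of trainable parameters in a module."""
    return sum(p.numel() for p in module.parameters() if p.requires_grad)


# Standard 3x3 convolution, 64 -> 256 channels
standard = nn.Conv2d(64, 256, kernel_size=3, padding=1)

# Depthwise separable equivalent: per-channel 3x3, then 1x1 channel mixing
depthwise = nn.Conv2d(64, 64, kernel_size=3, padding=1, groups=64)
pointwise = nn.Conv2d(64, 256, kernel_size=1)
separable = nn.Sequential(depthwise, pointwise)

standard_params = count_params(standard)     # 64*256*9 + 256
separable_params = count_params(separable)   # (64*9 + 64) + (64*256 + 256)

print(standard_params, separable_params)     # prints: 147712 17280
```

For this configuration the depthwise separable version needs roughly 12% of the parameters of the standard convolution, slightly fewer than the G = 8 grouped convolution.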

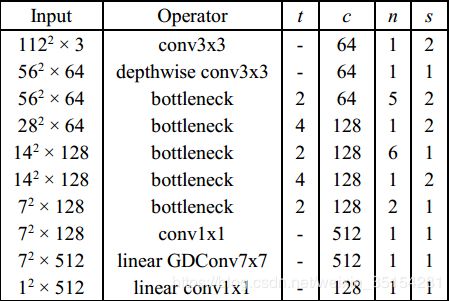

Use in a network (MobileFaceNet)

    import torch.nn as nn
    from torchsummary import summary  # assumed source of summary(); the post does not show its imports


    class DepthwiseSeparableConv(nn.Module):
        def __init__(self, in_planes, out_planes, kernel_size, padding, bias=False):
            super(DepthwiseSeparableConv, self).__init__()
            # Depthwise convolution: one filter per input channel
            self.depthwise = nn.Conv2d(in_planes, in_planes,
                                       kernel_size=kernel_size, padding=padding,
                                       groups=in_planes, bias=bias)
            # Pointwise 1x1 convolution mixes information across channels
            self.pointwise = nn.Conv2d(in_planes, out_planes,
                                       kernel_size=1, bias=bias)
            self.bn1 = nn.BatchNorm2d(in_planes)
            self.bn2 = nn.BatchNorm2d(out_planes)
            self.relu = nn.ReLU()

        def forward(self, x):
            x = self.depthwise(x)
            x = self.bn1(x)
            x = self.relu(x)
            x = self.pointwise(x)
            x = self.bn2(x)
            x = self.relu(x)
            return x


    if __name__ == "__main__":
        net = DepthwiseSeparableConv(64, 256, 3, 0)
        summary(net, (64, 224, 224), 1, "cpu")

Output:

    ----------------------------------------------------------------
            Layer (type)               Output Shape         Param #
    ================================================================
                Conv2d-1          [1, 64, 222, 222]             576
           BatchNorm2d-2          [1, 64, 222, 222]             128
                  ReLU-3          [1, 64, 222, 222]               0
                Conv2d-4         [1, 256, 222, 222]          16,384
           BatchNorm2d-5         [1, 256, 222, 222]             512
                  ReLU-6         [1, 256, 222, 222]               0
    ================================================================
    Total params: 17,600
    Trainable params: 17,600
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 12.25
    Forward/backward pass size (MB): 360.97
    Params size (MB): 0.07
    Estimated Total Size (MB): 373.28
    ----------------------------------------------------------------
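
The output here is 222×222 instead of 224×224 because this example passes padding=0 with a 3×3 kernel. A small sketch of the output-size formula given in the PyTorch `Conv2d` documentation makes this explicit; `conv_output_size` is a hypothetical helper name, and it handles one spatial dimension at a time.

```python
def conv_output_size(in_size, kernel_size, padding=0, stride=1, dilation=1):
    """Output length along one spatial dimension of a convolution."""
    return (in_size + 2 * padding - dilation * (kernel_size - 1) - 1) // stride + 1


same_size = conv_output_size(224, kernel_size=3, padding=1)      # padded 3x3 keeps the size
shrunk = conv_output_size(224, kernel_size=3, padding=0)         # unpadded 3x3 loses 2 pixels
global_size = conv_output_size(224, kernel_size=224, padding=0)  # kernel covers the whole input

print(same_size, shrunk, global_size)   # prints: 224 222 1
```

Setting padding to kernel_size // 2 for an odd kernel with stride 1 keeps the spatial size unchanged.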

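Global depthwise convolution

Note: the number of groups equals the number of input channels, and the kernel size equals the spatial size of the input, so each channel is reduced to a single 1×1 value.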

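    import torch.nn as nn
    from torchsummary import summary  # assumed source of summary(); the post does not show its imports


    class GDConv(nn.Module):
        def __init__(self, in_planes, out_planes, kernel_size, padding, bias=False):
            super(GDConv, self).__init__()
            # kernel_size should equal the input's spatial size so the output is 1x1
            self.depthwise = nn.Conv2d(in_planes, out_planes,
                                       kernel_size=kernel_size, padding=padding,
                                       groups=in_planes, bias=bias)
            # Normalize over the convolution's output channels
            self.bn = nn.BatchNorm2d(out_planes)

        def forward(self, x):
            x = self.depthwise(x)
            x = self.bn(x)
            return x


    if __name__ == "__main__":
        net = GDConv(64, 64, 224, 0)
        summary(net, (64, 224, 224), 1, "cpu")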

Output:

    ----------------------------------------------------------------
            Layer (type)               Output Shape         Param #
    ================================================================
                Conv2d-1              [1, 64, 1, 1]       3,211,264
           BatchNorm2d-2              [1, 64, 1, 1]             128
    ================================================================
    Total params: 3,211,392
    Trainable params: 3,211,392
    Non-trainable params: 0
    ----------------------------------------------------------------
    Input size (MB): 12.25
    Forward/backward pass size (MB): 0.00
    Params size (MB): 12.25
    Estimated Total Size (MB): 24.50
    ----------------------------------------------------------------
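
One way to read a global depthwise convolution is as a learned, per-channel weighted sum over all spatial positions. The sketch below checks that interpretation numerically. It uses small illustrative sizes (8 channels, 7×7 input) chosen here for speed, not the 64×224×224 case above, and it omits bias and batch norm.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

channels, size = 8, 7   # illustrative sizes, chosen for this sketch only
gdconv = nn.Conv2d(channels, channels, kernel_size=size, padding=0,
                   groups=channels, bias=False)

x = torch.randn(2, channels, size, size)
out = gdconv(x)   # shape: (2, channels, 1, 1)

# gdconv.weight has shape (channels, 1, size, size). Dropping the singleton
# dimension lets it broadcast against x for a per-channel weighted sum.
manual = (x * gdconv.weight.squeeze(1)).sum(dim=(2, 3))   # shape: (2, channels)

matches = torch.allclose(out.flatten(1), manual, atol=1e-4)
print(tuple(out.shape), matches)
```

Unlike global average pooling, which weights every position equally, each channel here learns its own size × size weight map, which is why the parameter count grows with the input's spatial size.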

PyTorch parameter notes

groups – Number of blocked connections from input channels to output channels. Both in_channels and out_channels must be divisible by groups.

- At groups=1, all inputs are convolved to all outputs.

- At groups=2, the operation becomes equivalent to having two conv layers side by side, each seeing half the input channels, and producing half the output channels, and both subsequently concatenated.

- At groups=in_channels, each input channel is convolved with its own set of filters, of size ⌊out_channels / in_channels⌋.
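
The groups=2 case described above can be checked numerically. This is a minimal sketch with small illustrative sizes (4 input channels, 6 output channels) chosen here: it copies each half of a grouped layer's weights into two independent convolutions, runs each on half of the input channels, and concatenates the results.

```python
import torch
import torch.nn as nn

torch.manual_seed(0)

grouped = nn.Conv2d(4, 6, kernel_size=3, padding=1, groups=2)
# Weight shape is (out_channels, in_channels // groups, kH, kW) = (6, 2, 3, 3)

# Two ordinary convolutions, each seeing half the inputs and producing half the outputs
conv_a = nn.Conv2d(2, 3, kernel_size=3, padding=1)
conv_b = nn.Conv2d(2, 3, kernel_size=3, padding=1)
with torch.no_grad():
    conv_a.weight.copy_(grouped.weight[:3])   # filters of the first group
    conv_a.bias.copy_(grouped.bias[:3])
    conv_b.weight.copy_(grouped.weight[3:])   # filters of the second group
    conv_b.bias.copy_(grouped.bias[3:])

x = torch.randn(1, 4, 8, 8)
side_by_side = torch.cat([conv_a(x[:, :2]), conv_b(x[:, 2:])], dim=1)

equivalent = torch.allclose(grouped(x), side_by_side, atol=1e-5)
print(tuple(grouped.weight.shape), equivalent)
```

The same slicing generalizes to any G: output channels are split into G contiguous blocks, and block i is computed only from the i-th contiguous block of input channels.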

References

Source of the grouped convolution figure

Group Convolution, Depthwise Convolution, and Global Depthwise Convolution

Understanding Depthwise Separable Convolution and Group Convolution: how they relate, with a PyTorch implementation

MobileFaceNet