[Python Industry Analysis] Collecting BOSS Zhipin (BOSS直聘) Job Postings: Scraping with WebDriver

Preface

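There are many ways to scrape web data. Earlier I scraped page data with the requests module, attaching the browser's cookies to each request. So can we simply drive a real browser to get the data? Of course we can.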

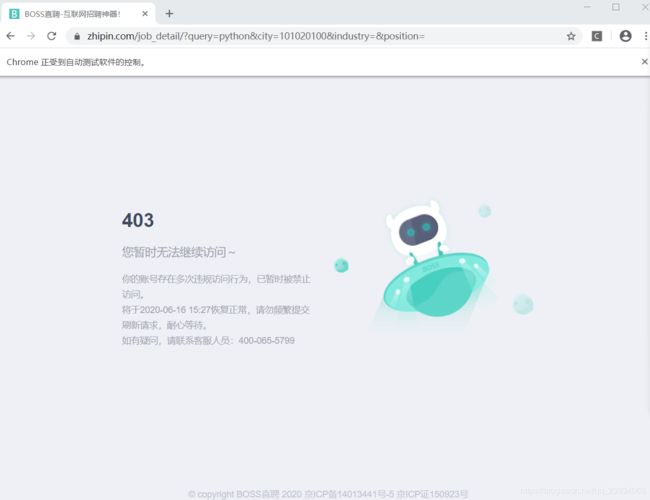

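BOSS does restrict automated-testing tools like this. Even so, this approach can still scrape more data than the previous version of the crawler. BOSS's anti-scraping measures: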

Modules that can control a browser

WebDriver

Import the browser driver and use the get method to open a page in the browser, for example:

```python
from selenium import webdriver


def mac():
    driver = webdriver.Chrome()      # open Chrome
    # driver = webdriver.Firefox()   # open Firefox
    # driver = webdriver.Ie()        # open Internet Explorer
    driver.implicitly_wait(5)        # wait up to 5 seconds when locating elements
    driver.get("http://www.baidu.com")


if __name__ == "__main__":
    mac()
```

chromedriver.exe can be downloaded from http://chromedriver.storage.googleapis.com/index.html

When choosing a version, check which version of Chrome is installed. The driver version must match it.
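In practice, "matching" usually means the same major version, which is the part before the first dot. Below is a minimal sketch of that check. It assumes the same-major-version rule holds for your ChromeDriver release. The helper names `major_version` and `driver_matches_browser` are hypothetical. With a running Chrome session, the two version strings can typically be read from `driver.capabilities["browserVersion"]` and `driver.capabilities["chrome"]["chromedriverVersion"]`.

```python
import re


def major_version(version: str) -> int:
    """Return the leading (major) number of a dotted version string.

    Accepts "114.0.5735.90" as well as the longer form ChromeDriver
    reports, e.g. "114.0.5735.90 (some-build-hash)".
    """
    match = re.match(r"\s*(\d+)\.", version)
    if match is None:
        raise ValueError(f"not a dotted version string: {version!r}")
    return int(match.group(1))


def driver_matches_browser(browser_version: str, driver_version: str) -> bool:
    """Assumed rule: Chrome and ChromeDriver are compatible when their major versions are equal."""
    return major_version(browser_version) == major_version(driver_version)


if __name__ == "__main__":
    print(driver_matches_browser("114.0.5735.199", "114.0.5735.90 (abc)"))  # True
    print(driver_matches_browser("120.0.6099.71", "114.0.5735.90"))         # False
```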

webbrowser

You can also open a browser with webbrowser, a module in Python's standard library, for example:

```python
import webbrowser

# webbrowser.open() takes a URL and shows it in the system's default browser
webbrowser.open("http://www.baidu.com")
```

Splinter

Splinter depends on three packages: Cython, lxml, and selenium. Install all three before installing Splinter. Download links:

1)http://download.csdn.net/detail/feisan/4301293

2)http://code.google.com/p/pythonxy/wiki/AdditionalPlugins#Installation_no

3)http://pypi.python.org/pypi/selenium/2.25.0#downloads

4)http://splinter.cobrateam.info/

```python
# coding=utf-8
import time

from splinter import Browser


def splinter(url):
    browser = Browser()
    # open the 126 mail login page
    browser.visit(url)
    # wait for the page elements to load
    time.sleep(5)
    # fill in the account and password
    browser.find_by_id('idInput').fill('xxxxxx')
    browser.find_by_id('pwdInput').fill('xxxxx')
    # click the login button
    browser.find_by_id('loginBtn').click()
    time.sleep(8)
    # close the browser window
    browser.quit()


if __name__ == '__main__':
    website = 'http://www.126.com'
    splinter(website)
```

This time we use WebDriver.

Common WebDriver methods

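The following are available:

- `get`: request a web page
- `find_element`: find an element
- `find_element_by_xx`: look up elements by id, name, class_name, and so on. These helpers were removed in Selenium 4.3; use `find_element(By.ID, ...)`, `find_element(By.NAME, ...)`, etc. instead.
- `send_keys`: type text into an input
- `click`: click a button, link, etc.
- `text`: get an element's text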
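Since Selenium 4.3, every lookup goes through `find_element(by, value)` or `find_elements(by, value)`. The `By` constants are plain strings; for example, `By.CLASS_NAME == "class name"`. The sketch below records that mapping without importing Selenium, so it runs anywhere. `legacy_to_by` is a hypothetical helper for translating old code; it is not part of Selenium.

```python
from __future__ import annotations

# Locator-strategy strings behind Selenium 4's By constants
# (By.ID == "id", By.CLASS_NAME == "class name", ...).
LEGACY_SUFFIX_TO_BY = {
    "id": "id",
    "name": "name",
    "class_name": "class name",
    "tag_name": "tag name",
    "css_selector": "css selector",
    "xpath": "xpath",
    "link_text": "link text",
    "partial_link_text": "partial link text",
}


def legacy_to_by(method: str) -> tuple[str, str]:
    """Hypothetical helper: map "find_element_by_name" to ("find_element", "name")."""
    for prefix in ("find_elements_by_", "find_element_by_"):
        if method.startswith(prefix):
            suffix = method[len(prefix):]
            # strip the trailing "_by_" to get the Selenium 4 method name
            return prefix[: -len("_by_")], LEGACY_SUFFIX_TO_BY[suffix]
    raise ValueError(f"not a legacy find_element(s)_by_* name: {method!r}")
```

For example, `legacy_to_by("find_element_by_name")` gives `("find_element", "name")`. So `driver.find_element_by_name("query")` becomes `driver.find_element(By.NAME, "query")`.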

The crawler

```python
# -*- coding: utf-8 -*-
import time

from bs4 import BeautifulSoup as bs
from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.common.by import By

HOST = "https://www.zhipin.com"


def extract_data(content):
    """Process the job list on one result page (a BeautifulSoup object)."""
    job_list = []
    for item in content.find_all(class_="job-primary"):
        # title block: job name, detail link, data-jid / data-lid ids, area
        job_title = item.find("div", attrs={"class": "job-title"})
        job_name = job_title.a.attrs["title"]
        job_href = job_title.a.attrs["href"]
        data_jid = job_title.a["data-jid"]
        data_lid = job_title.a["data-lid"]
        job_area = job_title.find(class_="job-area").text

        # salary, required experience and degree
        job_limit = item.find(class_="job-limit")
        salary = job_limit.span.text
        exp = job_limit.p.contents[0]
        degree = job_limit.p.contents[2]

        # company name, industry, funding stage and size
        company = item.find(class_="info-company")
        company_name = company.h3.a.text
        company_type = company.p.a.text
        stage = company.p.contents[2]
        scale = company.p.contents[4]

        info_desc = item.find(class_="info-desc").text
        tags = [t.text for t in item.find_all(class_="tag-item")]
        job_list.append([job_area, company_type, company_name, data_jid, data_lid, job_name, stage, scale,
                         job_href, salary, exp, degree, info_desc, "、".join(tags)])
    return job_list


def get_job_text(driver):
    """
    Each element's .text also returns the page data, but it is harder to parse.
    """
    time.sleep(5)
    job_list = driver.find_elements(By.CLASS_NAME, "job-primary")
    for job in job_list:
        print(job.text)


def main(host):
    chrome_driver = "chromedriver.exe"
    # Selenium 4 takes the driver path through a Service object
    driver = webdriver.Chrome(service=Service(chrome_driver))
    driver.get(host)

    # find the search box and type the query
    driver.find_element(By.NAME, "query").send_keys("python")
    # click the search button
    driver.find_element(By.CLASS_NAME, "btn-search").click()

    job_list = []
    while True:
        time.sleep(5)  # give the page time to render
        content = driver.execute_script("return document.documentElement.outerHTML")
        content = bs(content, "html.parser")
        job_list += extract_data(content)

        next_page = content.find(class_="next")
        # assumption: on the last page the "next" link carries a "disabled" class
        if next_page and "disabled" not in next_page.get("class", []):
            driver.find_element(By.CLASS_NAME, "next").click()
        else:
            break

    driver.quit()
    return job_list


if __name__ == "__main__":
    jobs = main(HOST)
    print(len(jobs))
```
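The `while` loop in `main` stops only when no usable "next" link is found. If the site keeps showing one, the crawler keeps clicking indefinitely. Below is a minimal sketch of the same loop with a hard page cap. It assumes the sleep/parse/click steps are wrapped in a single callable. `crawl_all_pages`, `fetch_page`, and the default of 30 pages are hypothetical choices, not values taken from BOSS.

```python
from typing import Callable, List, Tuple

Row = list
# hypothetical callable: fetch_page(page_index) -> (rows on that page, whether a next page exists)
FetchPage = Callable[[int], Tuple[List[Row], bool]]


def crawl_all_pages(fetch_page: FetchPage, max_pages: int = 30) -> List[Row]:
    """Collect rows page by page; stop when there is no next page
    or after max_pages pages (an arbitrary safety cap), whichever comes first."""
    rows: List[Row] = []
    for page_index in range(max_pages):
        page_rows, has_next = fetch_page(page_index)
        rows.extend(page_rows)
        if not has_next:
            break
    return rows


if __name__ == "__main__":
    # fake site with three pages of two rows each, standing in for the browser
    pages = [[["job-a"], ["job-b"]], [["job-c"], ["job-d"]], [["job-e"], ["job-f"]]]

    def fake_fetch(i):
        return pages[i], i + 1 < len(pages)

    print(len(crawl_all_pages(fake_fetch)))               # 6
    print(len(crawl_all_pages(fake_fetch, max_pages=2)))  # 4
```

In `main`, `fetch_page` would do the following:

1. Sleep.
2. Parse `document.documentElement.outerHTML` with `extract_data`.
3. Click the next link when one is present.
4. Return the rows together with that flag.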