Scrapy crawler: configuration notes for IP proxies, User-Agent rotation, and connecting to MySQL

Configuring MySQL for a Scrapy crawler (pymysql)

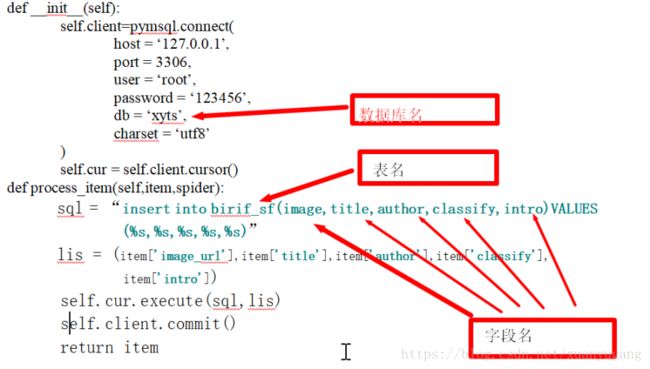

# Open pipelines.py
# First, import pymysql
import pymysql


# The class name must match the entry in ITEM_PIPELINES (settings.py)
class SqkXsPipeline(object):
    def __init__(self):
        # Connect to the local MySQL server
        self.client = pymysql.connect(
            host='127.0.0.1',
            port=3306,
            user='root',
            password='123456',
            db='xyts',
            charset='utf8'
        )
        self.cur = self.client.cursor()

    def process_item(self, item, spider):
        # Parameterized insert: pymysql fills in each %s placeholder safely
        sql = "insert into birif_sf(image,title,author,classify,intro) VALUES (%s,%s,%s,%s,%s)"
        lis = (item['image_url'], item['title'], item['author'], item['classify'],
               item['intro'])
        self.cur.execute(sql, lis)
        self.client.commit()
        return item
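The pipeline above opens its connection in `__init__` and never closes it. A minimal sketch of one alternative, assuming Scrapy's `open_spider` and `close_spider` pipeline hooks are used for the connection lifecycle. The class name `MySQLPipelineSketch` and the `connect` parameter are hypothetical; `connect` exists only so the sketch can be exercised without a running database.

```python
class MySQLPipelineSketch:
    """Opens the connection when the spider starts and closes it when it ends."""

    INSERT_SQL = (
        "INSERT INTO birif_sf (image, title, author, classify, intro) "
        "VALUES (%s, %s, %s, %s, %s)"
    )

    def __init__(self, connect=None):
        # `connect` is a hypothetical injection point; when it is None the
        # sketch falls back to pymysql.connect in open_spider().
        self._connect = connect
        self.client = None
        self.cur = None

    def open_spider(self, spider):
        connect = self._connect
        if connect is None:
            import pymysql  # imported lazily so the sketch loads without it
            connect = pymysql.connect
        self.client = connect(host="127.0.0.1", port=3306, user="root",
                              password="123456", db="xyts", charset="utf8")
        self.cur = self.client.cursor()

    def process_item(self, item, spider):
        row = (item["image_url"], item["title"], item["author"],
               item["classify"], item["intro"])
        try:
            self.cur.execute(self.INSERT_SQL, row)
            self.client.commit()
        except Exception:
            self.client.rollback()  # undo the failed insert, then re-raise
            raise
        return item

    def close_spider(self, spider):
        # Release the cursor and the connection once the crawl is finished.
        self.cur.close()
        self.client.close()
```

Rolling back on failure keeps a single bad row from leaving the connection in a half-finished transaction; whether to re-raise or drop the item instead is a design choice left open here.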

# Notes for the figure below:

IP proxy configuration

Create a .py file named proxymiddlewares.py

import random


class ProxyMiddleware(object):
    # Candidate proxies in host:port form
    proxy_list = [
        '117.43.1.128:808',
        '122.114.31.177:808',
        '61.135.217.7:80',
        '122.72.18.35:80',
        '122.72.18.34:80',
        '123.139.56.238:9999',
        '110.52.8.14:53281',
        '139.224.80.139:3128'
    ]

    def process_request(self, request, spider):
        # Pick a random proxy for each outgoing request
        pro_dir = random.choice(self.proxy_list)
        print("USE PROXY -> " + pro_dir)
        request.meta['proxy'] = 'http://' + pro_dir
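The selection step can be tried outside a crawl. The following is a minimal sketch, assuming a stand-in request object that only has a `meta` dict; `RandomProxySketch`, `to_proxy_url`, and the `rng` parameter are hypothetical names introduced for illustration, and only two addresses from the list above are reused.

```python
import random
from types import SimpleNamespace

PROXY_LIST = ["117.43.1.128:808", "61.135.217.7:80"]


def to_proxy_url(address, scheme="http"):
    """Return a full proxy URL, adding the scheme only if it is missing."""
    if "://" in address:
        return address
    return f"{scheme}://{address}"


class RandomProxySketch:
    def __init__(self, proxies, rng=None):
        self.proxies = list(proxies)
        # A seedable generator makes the choice reproducible when needed.
        self.rng = rng or random.Random()

    def process_request(self, request, spider):
        address = self.rng.choice(self.proxies)
        request.meta["proxy"] = to_proxy_url(address)
        # Returning None tells Scrapy to keep processing the request.
        return None


# Stand-in for a scrapy Request: only the `meta` dict is needed here.
fake_request = SimpleNamespace(meta={})
RandomProxySketch(PROXY_LIST).process_request(fake_request, spider=None)
print(fake_request.meta["proxy"])
```

Scrapy's built-in HttpProxyMiddleware reads `request.meta['proxy']`, so setting that key is all a custom middleware has to do.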

Next, create another .py file named useragent.py

# Open useragent.py

# Import the required modules

import logging
import random

from scrapy.downloadermiddlewares.useragent import UserAgentMiddleware

# scrapy.log is deprecated and absent from recent Scrapy releases;
# the standard logging module is used instead
logger = logging.getLogger(__name__)


class UserAgent(UserAgentMiddleware):
    # Pool of User-Agent strings to rotate through
    user_agent_list = [
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "
        "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
        "Mozilla/5.0 (X11; CrOS i686 2268.111.0) AppleWebKit/536.11 "
        "(KHTML, like Gecko) Chrome/20.0.1132.57 Safari/536.11",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.6 "
        "(KHTML, like Gecko) Chrome/20.0.1092.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.6 "
        "(KHTML, like Gecko) Chrome/20.0.1090.0 Safari/536.6",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/537.1 "
        "(KHTML, like Gecko) Chrome/19.77.34.5 Safari/537.1",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 "
        "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.0) AppleWebKit/536.5 "
        "(KHTML, like Gecko) Chrome/19.0.1084.36 Safari/536.5",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 5.1) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_8_0) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1063.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1062.0 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.1 Safari/536.3",
        "Mozilla/5.0 (Windows NT 6.2) AppleWebKit/536.3 "
        "(KHTML, like Gecko) Chrome/19.0.1061.0 Safari/536.3",
        "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/535.24 "
        "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24",
        "Mozilla/5.0 (Windows NT 6.2; WOW64) AppleWebKit/535.24 "
        "(KHTML, like Gecko) Chrome/19.0.1055.1 Safari/535.24"
    ]

    def __init__(self, user_agent=''):
        self.user_agent = user_agent

    def process_request(self, request, spider):
        # Pick a random User-Agent for each outgoing request
        ua = random.choice(self.user_agent_list)
        if ua:
            logger.debug('Current UserAgent: ' + ua)
            request.headers.setdefault('User-Agent', ua)
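The middleware relies on `setdefault`, which only writes the header when it is not already present. A minimal sketch of that behaviour, assuming a plain `dict` in place of Scrapy's headers object (which, unlike a plain dict, is case-insensitive); `pick_user_agent` is a hypothetical helper and the list reuses two strings from the pool above.

```python
import random

USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.1 "
    "(KHTML, like Gecko) Chrome/22.0.1207.1 Safari/537.1",
    "Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/536.5 "
    "(KHTML, like Gecko) Chrome/19.0.1084.9 Safari/536.5",
]


def pick_user_agent(headers, agents, rng=random):
    """Set a random User-Agent unless one is already present; return the one in effect."""
    ua = rng.choice(agents)
    headers.setdefault("User-Agent", ua)
    return headers["User-Agent"]


# A fresh header dict receives a randomly chosen value.
fresh = {}
first = pick_user_agent(fresh, USER_AGENTS)

# A header that is already set is left untouched.
preset = {"User-Agent": "my-crawler/1.0"}
kept = pick_user_agent(preset, USER_AGENTS)
print(first)
print(kept)
```

If every request should get a rotated value even when a header was set explicitly on the request, plain assignment (`headers["User-Agent"] = ua`) would be the variant to use instead.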

After creating the two files above, edit settings.py

# Override the default request headers

DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'zh-CN,zh;q=0.8',
    'Host': 'ip84.com',
    'Referer': 'http://ip84.com/',
    'X-XHR-Referer': 'http://ip84.com/'
}

# Enable the two custom middlewares and disable Scrapy's built-in User-Agent middleware

DOWNLOADER_MIDDLEWARES = {
    'sqk_xs.useragent.UserAgent': 1,
    'sqk_xs.proxymiddlewares.ProxyMiddleware': 100,
    'scrapy.downloadermiddlewares.useragent.UserAgentMiddleware': None,
}
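The numbers in this setting control ordering: Scrapy calls `process_request` on downloader middlewares in increasing order of their values, and an entry set to `None` is disabled. A minimal sketch of that rule, assuming only the entries from this settings file (Scrapy also merges in its built-in middlewares, which are ignored here); `request_order` is a hypothetical helper, not part of Scrapy.

```python
DOWNLOADER_MIDDLEWARES = {
    "sqk_xs.useragent.UserAgent": 1,
    "sqk_xs.proxymiddlewares.ProxyMiddleware": 100,
    "scrapy.downloadermiddlewares.useragent.UserAgentMiddleware": None,
}


def request_order(middlewares):
    """Return enabled middleware paths in the order process_request would run."""
    enabled = {path: order for path, order in middlewares.items()
               if order is not None}  # None means "disabled"
    return sorted(enabled, key=enabled.get)


for path in request_order(DOWNLOADER_MIDDLEWARES):
    print(path)
# prints:
# sqk_xs.useragent.UserAgent
# sqk_xs.proxymiddlewares.ProxyMiddleware
```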

# Configure the item pipeline

ITEM_PIPELINES = {
    'sqk_xs.pipelines.SqkXsPipeline': 300,
}

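# Starting the Scrapy project:

# Create a .py file named start.py

# Open start.py

# Import the module

from scrapy.cmdline import execute

if __name__ == '__main__':
    # Equivalent to running "scrapy crawl qidian" on the command line
    execute('scrapy crawl qidian'.split())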
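`execute()` takes the same argument list a shell would pass to the `scrapy` command, which is why the string is split on whitespace. A minimal sketch of building that list explicitly, assuming the spider name `qidian` from above; `build_argv` is a hypothetical helper, the file name `items.json` is only an example, and `execute()` itself is not called here so the snippet runs without a Scrapy project.

```python
def build_argv(spider_name, output=None):
    """Build the argument list for `scrapy crawl`, optionally with an output file."""
    argv = ["scrapy", "crawl", spider_name]
    if output:
        # -o is the crawl command's option for exporting scraped items to a file.
        argv += ["-o", output]
    return argv


print(build_argv("qidian"))
# prints: ['scrapy', 'crawl', 'qidian']
print(build_argv("qidian", output="items.json"))
# prints: ['scrapy', 'crawl', 'qidian', '-o', 'items.json']
```

Passing a list rather than splitting a string avoids surprises when an argument, such as an output path, contains spaces.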