Building the Wheel Yourself: DenseNet

My own attempt:

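import torch
from torch import nn
import torch.nn.functional as F

class denseNet(nn.Module):
    def __init__(self, input_dim=10, output_dim=10):
        super(denseNet, self).__init__()
        self.bn1 = nn.BatchNorm2d(input_dim)
        self.conv1_1 = nn.Conv2d(input_dim, output_dim, kernel_size=1, stride=1, bias=False)
        # The 3x3 conv receives the 1x1 conv's output, so it needs a BatchNorm sized for output_dim
        self.bn2 = nn.BatchNorm2d(output_dim)
        # stride=1 with padding=1 keeps H and W unchanged, which the concatenation below requires
        self.conv3_3 = nn.Conv2d(output_dim, output_dim, kernel_size=3, stride=1, padding=1)

    def forward(self, x):
        org_x = x
        x = self.conv1_1(F.relu(self.bn1(x)))
        x = self.conv3_3(F.relu(self.bn2(x)))
        # Concatenate the new features with the input along the channel dimension
        return torch.cat((x, org_x), 1)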

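This only implements a single forward pass; the functional modules (the convolution block, the dense block, and the transition layer) are not separated into independent pieces.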

Textbook implementation:

import time
import torch
from torch import nn, optim
import torch.nn.functional as F

import sys
sys.path.append("..")
import d2lzh_pytorch as d2l

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Building the dense block
def conv_block(in_channels, out_channels):
    blk = nn.Sequential(nn.BatchNorm2d(in_channels),
                        nn.ReLU(),
                        nn.Conv2d(in_channels, out_channels, kernel_size=3, padding=1))
    return blk

class DenseBlock(nn.Module):
    def __init__(self, num_convs, in_channels, out_channels):
        super(DenseBlock, self).__init__()
        net = []
        for i in range(num_convs):
            # Each conv_block sees the original input plus all previous outputs
            in_c = in_channels + i * out_channels
            net.append(conv_block(in_c, out_channels))
        self.net = nn.ModuleList(net)
        self.out_channels = in_channels + num_convs * out_channels  # compute the number of output channels

    def forward(self, X):
        # For a repetitive process, build the layers once at initialization,
        # then pass the input through them in order inside forward
        for blk in self.net:
            Y = blk(X)
            X = torch.cat((X, Y), dim=1)  # concatenate input and output along the channel dimension
        return X
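
The channel bookkeeping in `DenseBlock` can be checked with plain arithmetic before building any layers. The sketch below is only an illustration: `dense_block_channels` is a hypothetical helper, not part of the textbook code, and it simply mirrors the expression `in_c = in_channels + i * out_channels`.

```python
def dense_block_channels(num_convs, in_channels, out_channels):
    """Return (input channels seen by each conv_block, final output channels).

    Hypothetical helper for illustration; mirrors the arithmetic in DenseBlock.
    """
    per_conv_inputs = [in_channels + i * out_channels for i in range(num_convs)]
    total_out = in_channels + num_convs * out_channels
    return per_conv_inputs, total_out


inputs, total = dense_block_channels(num_convs=2, in_channels=3, out_channels=10)
print(inputs, total)  # [3, 13] 23
```

With 2 convolution blocks, 3 input channels, and 10 channels added per block, the block ends with 3 + 2 * 10 = 23 channels, which is why `out_channels` here plays the role of the growth rate.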

# Transition layer: changes the channel dimension and halves H and W
def transition_block(in_channels, out_channels):
    blk = nn.Sequential(
        nn.BatchNorm2d(in_channels),
        nn.ReLU(),
        nn.Conv2d(in_channels, out_channels, kernel_size=1),
        nn.AvgPool2d(kernel_size=2, stride=2))
    return blk
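
To make the effect of the transition layer concrete, here is a rough shape calculation in plain Python. Both function names are hypothetical, and the sketch assumes the standard output-size formula for convolution and pooling layers, floor((size + 2 * padding - kernel) / stride) + 1.

```python
def conv_out_size(size, kernel_size, stride=1, padding=0):
    """Standard output-size formula for a conv or pooling layer."""
    return (size + 2 * padding - kernel_size) // stride + 1


def transition_output_shape(in_shape, out_channels):
    """Shape after a 1x1 conv (channels change) and a 2x2 average pool with stride 2."""
    batch, _, height, width = in_shape
    # The 1x1 convolution keeps H and W; only the pooling layer shrinks them.
    height = conv_out_size(conv_out_size(height, 1), 2, stride=2)
    width = conv_out_size(conv_out_size(width, 1), 2, stride=2)
    return (batch, out_channels, height, width)


print(transition_output_shape((4, 23, 8, 8), 10))  # (4, 10, 4, 4)
```

The channel count is set entirely by the 1x1 convolution, while the halving of height and width comes from the average pooling.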

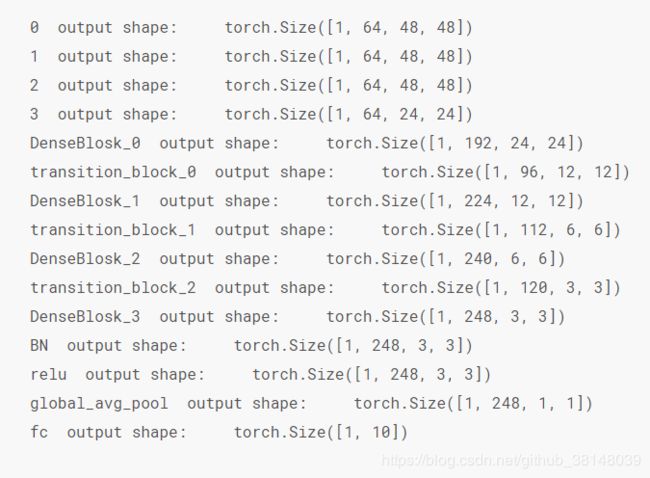

net = nn.Sequential(
    nn.Conv2d(1, 64, kernel_size=7, stride=2, padding=3),
    nn.BatchNorm2d(64),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=3, stride=2, padding=1))

num_channels, growth_rate = 64, 32  # num_channels is the current number of channels
num_convs_in_dense_blocks = [4, 4, 4, 4]

for i, num_convs in enumerate(num_convs_in_dense_blocks):
    DB = DenseBlock(num_convs, num_channels, growth_rate)
    net.add_module("DenseBlosk_%d" % i, DB)
    # Number of output channels of the dense block just added
    num_channels = DB.out_channels
    # Between dense blocks, add a transition layer that halves the number of channels
    if i != len(num_convs_in_dense_blocks) - 1:
        net.add_module("transition_block_%d" % i, transition_block(num_channels, num_channels // 2))
        num_channels = num_channels // 2

net.add_module("BN", nn.BatchNorm2d(num_channels))
net.add_module("relu", nn.ReLU())
net.add_module("global_avg_pool", d2l.GlobalAvgPool2d())  # GlobalAvgPool2d output: (Batch, num_channels, 1, 1)
net.add_module("fc", nn.Sequential(d2l.FlattenLayer(), nn.Linear(num_channels, 10)))

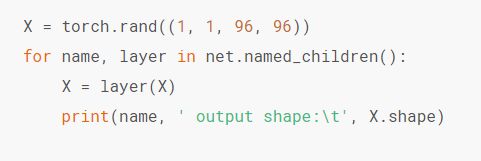
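
The channel and spatial sizes through the whole network can also be traced by hand. The sketch below repeats the arithmetic of the construction loop without creating any layers. The 96x96 input size is an assumption made for illustration, and `trace_densenet` and `conv_out_size` are hypothetical helper names, not part of the textbook code.

```python
def conv_out_size(size, kernel_size, stride=1, padding=0):
    """Standard output-size formula for a conv or pooling layer."""
    return (size + 2 * padding - kernel_size) // stride + 1


def trace_densenet(input_size=96, num_channels=64, growth_rate=32,
                   num_convs_in_dense_blocks=(4, 4, 4, 4)):
    """Return a list of (stage name, channels, height/width) tuples."""
    # Stem: 7x7 conv (stride 2, padding 3), then 3x3 max pool (stride 2, padding 1)
    size = conv_out_size(input_size, 7, stride=2, padding=3)
    size = conv_out_size(size, 3, stride=2, padding=1)
    trace = [("stem", num_channels, size)]
    last = len(num_convs_in_dense_blocks) - 1
    for i, num_convs in enumerate(num_convs_in_dense_blocks):
        # A dense block adds growth_rate channels per conv and keeps H, W
        num_channels += num_convs * growth_rate
        trace.append(("dense_block_%d" % i, num_channels, size))
        if i != last:
            # A transition layer halves both the channels and H, W
            num_channels //= 2
            size = conv_out_size(size, 2, stride=2)
            trace.append(("transition_block_%d" % i, num_channels, size))
    return trace


for name, channels, size in trace_densenet():
    print(name, channels, size)
```

Under these assumptions the last dense block produces 248 channels, which is the value `num_channels` holds when the final `nn.BatchNorm2d` and `nn.Linear(num_channels, 10)` layers are created.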

Checking the model's parameter settings:

Reference:

https://tangshusen.me/Dive-into-DL-PyTorch/#/chapter05_CNN/5.12_densenet