ViBe Algorithm: A C++ Implementation

The ViBe algorithm

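ViBe stands for Visual Background Extractor. According to its authors it performs very well, even outperforming GMM (Gaussian mixture model) background subtraction. We analyze it from three angles: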

(1) What is the model and how does it behave?

(2) How is the model initialized?

(3) How is the model updated over time?

How the model works

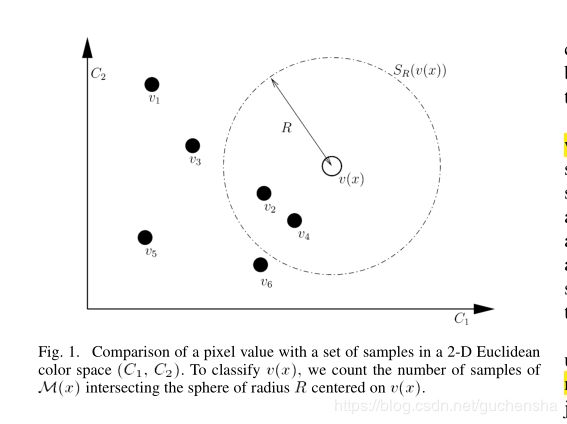

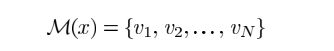

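Foreground extraction can be treated as a classification problem. To keep a single outlier from having a large effect on the result, each pixel is given a set of sample values instead of a single value. The set contains past values of the pixel and values of its neighboring pixels. Each new pixel value is compared against this set to decide whether it belongs to the background. The comparison works as follows.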

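Let $M(x) = \{v_1, v_2, \dots, v_N\}$ be the sample set of pixel $x$, holding $N$ sample values, and let $v(x)$ be the value of pixel $x$ in the current frame.

Draw a sphere $S_R(v(x))$ of radius $R$ centered on $v(x)$ (for grayscale values this is just an interval of half-width $R$ around $v(x)$). Pixel $x$ is classified as background if at least $\#_{\min}$ samples of $M(x)$ fall inside this sphere:

$$\#\{S_R(v(x)) \cap \{v_1, v_2, \dots, v_N\}\} \ge \#_{\min}$$

The paper uses $N = 20$, $R = 20$ and $\#_{\min} = 2$; these are the values of `numberOfSamples`, `matchingThreshold` and `matchingNumber` set in `libvibeModelNew` below.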

Initialization

ViBe's initialization is unusual in that a single frame is enough to initialize the model, whereas GMM needs a sequence of frames.

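Because a pixel and its neighbors tend to have similar values, each sample in the model is filled with the value of a pixel picked at random from the pixel's neighborhood.

This process can be written as:

$$M^0(x) = \{\, v^0(y) \mid y \in N_G(x) \,\}$$

where the superscript $0$ refers to the first frame ($t = 0$), $N_G(x)$ is a spatial neighborhood of $x$, and the locations $y$ are drawn at random with a uniform law.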

In practice this approach works well. Its only drawback is that moving objects present in the first frame can produce ghosts.

Update strategy

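Keeping the background model up to date in real time is essential: the update has to cope with lighting changes and classify newly appearing objects correctly. The problems to solve are deciding which samples should be kept or replaced as new frames arrive, and how long they should be kept.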

Update strategies generally fall into two kinds: conservative and blind.

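Conservative update: a pixel classified as foreground is never used to update the background model, so it never gets a chance to be reclassified as background. If a region is wrongly detected as a moving object at the start, it will remain foreground indefinitely (a deadlock).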

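Blind update: not prone to deadlocks. Samples are added to the background model whether or not they were classified as background. The drawback is that slowly moving objects end up absorbed into the background.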

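Each background pixel has a 1/φ probability of updating its own model samples, and also a 1/φ probability of updating the model samples of one of its neighbors. Updating the neighbors exploits the spatial propagation of pixel values: the background model gradually spreads outward, which also helps ghost regions to be recognized faster. In addition, when a pixel's foreground count reaches a threshold, it is turned into background and has a 1/φ probability of updating its own model samples.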

1) Memoryless update

Each time a pixel's background model is to be updated, the new pixel value replaces one randomly chosen sample in that pixel's sample set.

2) Time subsampling

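The model is not updated on every frame; instead it is updated at a given rate. When a pixel is classified as background, it updates its background model with probability 1/rate, where rate is the time subsampling factor (the φ above), typically set to 16.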

3) Spatial neighborhood update

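For a pixel that is to be updated, the background model of one of its neighbors is chosen at random, and that model is updated with the new pixel value.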

Code

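```cpp
// Driver program: read a video, run ViBe on each grayscale frame, show the mask
#include <iostream>
#include <opencv2/opencv.hpp>
#include "BackgroundSubtract.h"

using namespace std;

int main(int argc, char* argv[])
{
    cv::VideoCapture cap("D://test//video//PETS2009_sample_1.avi");
    if (!cap.isOpened())
    {
        cout << "Failed to open the video file" << endl;
        return -1;
    }

    cv::Mat image, imageGray, foregroundGray;
    BackgroundSubtract subtractor;

    cap >> image;
    if (image.empty()) return -1;
    cv::cvtColor(image, imageGray, cv::COLOR_BGR2GRAY);
    imageGray.copyTo(foregroundGray);   // allocate the mask with the frame's size and type
    subtractor.init(imageGray);         // single-frame initialization

    while (!image.empty())
    {
        subtractor.subtract(imageGray, foregroundGray);
        cv::imshow("foreground", foregroundGray);

        cap >> image;
        if (image.empty()) break;       // end of video: cvtColor would throw on an empty frame
        cv::cvtColor(image, imageGray, cv::COLOR_BGR2GRAY);

        int c = cv::waitKey(20);
        if (c == 27) break;             // Esc quits
    }
    return 0;
}
```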

```cpp
// VIBE.cpp: ViBe model creation, initialization, update and release
#include "VIBE.h"

// Next value from the precomputed random table (advances rndPos cyclically)
static unsigned int nextRnd()
{
    rndPos = (rndPos + 1) % rndSize;
    return rnd[rndPos];
}

// Fill a table of `size` pseudo-random numbers, seeded with the current time
void initRnd(unsigned int size)
{
    srand((unsigned int)time(0));
    free(rnd);                      // drop a previous table, if any (free(NULL) is a no-op)
    rndSize = size;
    rnd = (unsigned int*)calloc(rndSize, sizeof(unsigned int));
    for (unsigned int i = 0; i < rndSize; i++)
        rnd[i] = (unsigned int)rand();
    rndPos = 0;
}

unsigned int freeRnd()
{
    free(rnd);
    rnd = NULL;
    rndSize = 0;
    return 0;
}

// Allocate a model with the parameters recommended in the ViBe paper
vibeModel *libvibeModelNew()
{
    vibeModel *model = (vibeModel*)calloc(1, sizeof(vibeModel));
    if (model)
    {
        model->numberOfSamples = 20;    // N
        model->matchingThreshold = 20;  // R
        model->matchingNumber = 2;      // #min
        model->updateFactor = 16;       // phi
        initRnd(65536);
    }
    return model;
}

// Value of a random pixel in the 3x3 neighborhood of (x, y), clamped to the image
unsigned char getRandPixel(const unsigned char *image_data, const unsigned int width, const unsigned int height, const unsigned int stride, const unsigned int x, const unsigned int y)
{
    const int neighborRange = 1;
    // Offsets drawn uniformly from [-neighborRange, +neighborRange] on each axis
    int dx = (int)x - neighborRange + (int)(nextRnd() % (2 * neighborRange + 1));
    int dy = (int)y - neighborRange + (int)(nextRnd() % (2 * neighborRange + 1));
    if (dx < 0) dx = 0;
    if (dx >= (int)width) dx = (int)width - 1;
    if (dy < 0) dy = 0;
    if (dy >= (int)height) dy = (int)height - 1;
    return image_data[dx + dy * stride];
}

// Single-frame initialization: sample 0 is the pixel itself, the others are
// random picks from its neighborhood
int32_t libvibeModelInit(vibeModel *model, const unsigned char *image_data, const unsigned int width, const unsigned int height, const unsigned int stride)
{
    if (!model || !image_data || !width || !height || !stride || (stride < width))
        return 1;

    model->width = width;
    model->height = height;
    model->stride = stride;

    // One sample set per pixel (calloc zeroes the pointers, so a partial failure is safe to free)
    model->pixels = (pixel*)calloc(width * height, sizeof(pixel));
    if (!model->pixels) return 1;
    for (unsigned int i = 0; i < width * height; i++)
    {
        model->pixels[i].numberOfSamples = model->numberOfSamples;
        model->pixels[i].sizeOfSample = 1;
        model->pixels[i].samples = (unsigned char*)calloc(model->numberOfSamples, sizeof(unsigned char));
        if (!model->pixels[i].samples) return 1;
    }

    unsigned int n = 0;
    for (unsigned int j = 0; j < height; j++)
        for (unsigned int i = 0; i < width; i++, n++)
        {
            model->pixels[n].samples[0] = image_data[i + j * stride];
            for (unsigned int k = 1; k < model->numberOfSamples; k++)
                model->pixels[n].samples[k] = getRandPixel(image_data, width, height, stride, i, j);
        }
    return 0;
}

// Classify each pixel (0 = background, 255 = foreground) and update the model
int32_t libvibeModelUpdate(vibeModel *model, const unsigned char *image_data, unsigned char *segmentation_map)
{
    if (!model || !image_data || !segmentation_map) return 1;
    if (model->stride < model->width) return 1;

    // 8-connected neighbor offsets: right, bottom-right, bottom, bottom-left,
    // left, top-left, top, top-right
    static const int nbDx[8] = { 1, 1, 0, -1, -1, -1,  0,  1 };
    static const int nbDy[8] = { 0, 1, 1,  1,  0, -1, -1, -1 };
    const int width = (int)model->width;
    const int height = (int)model->height;

    for (int j = 0; j < height; j++)
    {
        for (int i = 0; i < width; i++)
        {
            const unsigned int n = i + j * model->stride;  // index into image and mask
            pixel *px = &model->pixels[i + j * width];     // index into the model

            // Count samples closer than R to the current value, stopping at #min
            unsigned int matchingCounter = 0;
            for (unsigned int t = 0; t < px->numberOfSamples && matchingCounter < model->matchingNumber; t++)
                if (abs((int)image_data[n] - (int)px->samples[t]) < (int)model->matchingThreshold)
                    matchingCounter++;

            if (matchingCounter < model->matchingNumber)
            {
                segmentation_map[n] = 255;  // foreground: conservative, no update
                continue;
            }
            segmentation_map[n] = 0;        // background

            // Time subsampling: update with probability 1/updateFactor
            if (nextRnd() % model->updateFactor) continue;

            // Memoryless update of this pixel: replace a random sample
            px->samples[nextRnd() % px->numberOfSamples] = image_data[n];

            // Spatial propagation: replace a random sample of a random neighbor,
            // clamping the neighbor to the image at the border
            const unsigned int k = nextRnd() % 8;
            int ni = i + nbDx[k];
            int nj = j + nbDy[k];
            if (ni < 0) ni = 0;
            if (ni >= width) ni = width - 1;
            if (nj < 0) nj = 0;
            if (nj >= height) nj = height - 1;
            pixel *nb = &model->pixels[ni + nj * width];
            nb->samples[nextRnd() % nb->numberOfSamples] = image_data[n];
        }
    }
    return 0;
}

int32_t libvibeModelFree(vibeModel *model)
{
    if (!model) return 1;
    if (model->pixels)
    {
        for (unsigned int i = 0; i < model->width * model->height; i++)
            free(model->pixels[i].samples);
        free(model->pixels);
    }
    free(model);  // the struct itself was calloc'd in libvibeModelNew
    freeRnd();
    return 0;
}
```

```cpp
// BackgroundSubtract.cpp: thin OpenCV wrapper around the C-style ViBe functions
#include "BackgroundSubtract.h"

BackgroundSubtract::BackgroundSubtract()
{
    model = libvibeModelNew();
}

BackgroundSubtract::~BackgroundSubtract()
{
    libvibeModelFree(model);
}

void BackgroundSubtract::init(cv::Mat &image)
{
    CV_Assert(image.type() == CV_8UC1);  // the model works on 8-bit grayscale frames
    int32_t width = image.size().width;
    int32_t height = image.size().height;
    int32_t stride = image.channels() * image.size().width;
    uint8_t *image_data = (uint8_t*)image.data;
    libvibeModelInit(model, image_data, width, height, stride);
}

void BackgroundSubtract::subtract(const cv::Mat &image, cv::Mat &foreground)
{
    CV_Assert(image.type() == CV_8UC1 && foreground.type() == CV_8UC1 && foreground.size() == image.size());
    uint8_t *image_data = (uint8_t*)image.data;
    uint8_t *segmentation_map = (uint8_t*)foreground.data;

    // Classify every pixel (0 = background, 255 = foreground) and update the model
    libvibeModelUpdate(model, image_data, segmentation_map);

    // Optional post-processing (disabled): erosion then dilation to remove small noise
    /*cv::Mat erodeElement = cv::getStructuringElement(cv::MORPH_RECT, cv::Size(2, 2), cv::Point(-1, -1));
    cv::Mat dilateElement = cv::getStructuringElement(cv::MORPH_RECT, cv::Size(2, 2), cv::Point(-1, -1));
    cv::erode(foreground, foreground, erodeElement, cv::Point(-1, -1), 1);
    cv::dilate(foreground, foreground, dilateElement, cv::Point(-1, -1), 2);*/
}
```

```cpp
// BackgroundSubtract.h
#ifndef _BACKGROUND_SUBTRACT_H_
#define _BACKGROUND_SUBTRACT_H_

#include "opencv2/opencv.hpp"
#include "VIBE.h"

class BackgroundSubtract
{
public:
    BackgroundSubtract();
    ~BackgroundSubtract();
    void init(cv::Mat &image);
    void subtract(const cv::Mat &image, cv::Mat &foreground);

private:
    vibeModel_t *model;
};

#endif
```

```cpp
// VIBE.h
#ifndef __CV_VIBE_H__
#define __CV_VIBE_H__

#include <stdio.h>
#include <stdlib.h>
#include <stdint.h>
#include <time.h>

// Sample set of one pixel
typedef struct
{
    unsigned char *samples;         // numberOfSamples grayscale values
    unsigned int numberOfSamples;
    unsigned int sizeOfSample;      // bytes per sample (1 for grayscale)
} pixel;

typedef struct
{
    pixel *pixels;                  // width*height sample sets, row-major
    unsigned int width;
    unsigned int height;
    unsigned int stride;            // bytes per image row
    unsigned int numberOfSamples;   // N
    unsigned int matchingThreshold; // R
    unsigned int matchingNumber;    // #min
    unsigned int updateFactor;      // phi (time subsampling factor)
} vibeModel;

typedef vibeModel vibeModel_t;

// Table of precomputed random numbers. Because these are `static` in a header,
// every .cpp file that includes it gets its own copy; only VIBE.cpp uses them.
static unsigned int *rnd;
static unsigned int rndSize;
static unsigned int rndPos;

vibeModel *libvibeModelNew();
unsigned char getRandPixel(const unsigned char *image_data, const unsigned int width, const unsigned int height, const unsigned int stride, const unsigned int x, const unsigned int y);
int32_t libvibeModelInit(vibeModel *model, const unsigned char *image_data, const unsigned int width, const unsigned int height, const unsigned int stride);
int32_t libvibeModelUpdate(vibeModel *model, const unsigned char *image_data, unsigned char *segmentation_map);
int32_t libvibeModelFree(vibeModel *model);
void initRnd(unsigned int size);
unsigned int freeRnd();

#endif /*__CV_VIBE_H__*/
```

References

Olivier Barnich and Marc Van Droogenbroeck, "ViBe: A Universal Background Subtraction Algorithm for Video Sequences," IEEE Transactions on Image Processing, 2011.