Learn Storm with Me, Part 1: Basic Components and a Distributed wordCount

Original post: http://blog.csdn.net/hongkangwl/article/details/71056362. Please do not repost.

Structure of a Storm topology

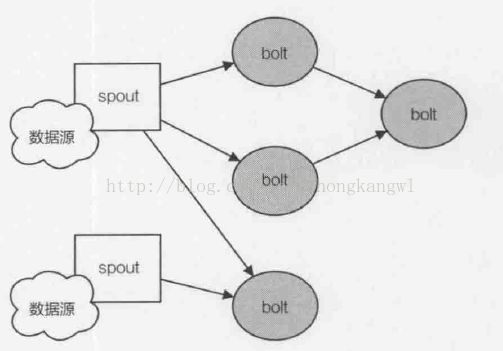

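A distributed computation in Storm is called a topology. It is made up of three parts: streams, spouts (data sources), and bolts (processing units). The stream from a spout flows into the first-level bolts according to some rule (whether it is grouped, and so on; this concept is covered later), and the output of those bolts flows into the next level of bolts in the same way, as shown in the figure. The biggest difference between Storm and offline batch frameworks such as Hadoop is that a Hadoop job ends once it has read its files or data from HDFS or HBase and finished processing them, whereas a Storm topology keeps running once it is submitted, until the program fails internally or it is forcibly killed.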

Stream

Storm's core data structure is the tuple: a list of one or more key-value pairs, somewhat like a Python tuple. A stream is simply a sequence of tuples, and the tuple is the atomic unit of a stream.
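To make the idea concrete, here is a minimal plain-Java sketch of a tuple as an ordered list of named values, and of a stream as a sequence of such tuples. `SimpleTuple` and `TupleStreamSketch` are hypothetical classes written for this illustration; they only model the concept and are not Storm's `Tuple` API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for illustration only; not Storm's Tuple class.
final class SimpleTuple {
    private final List<String> fields; // field names, in declaration order
    private final List<Object> values; // one value per field

    SimpleTuple(List<String> fields, List<Object> values) {
        if (fields.size() != values.size()) {
            throw new IllegalArgumentException("each field needs exactly one value");
        }
        this.fields = new ArrayList<>(fields);
        this.values = new ArrayList<>(values);
    }

    // Look a value up by its field name.
    Object getValueByField(String field) {
        int i = fields.indexOf(field);
        if (i < 0) {
            throw new IllegalArgumentException("unknown field: " + field);
        }
        return values.get(i);
    }
}

class TupleStreamSketch {
    // A stream modeled as an ordered sequence of tuples sharing the same fields.
    static List<SimpleTuple> sampleStream() {
        List<String> fields = Arrays.asList("word", "count");
        List<SimpleTuple> stream = new ArrayList<>();
        stream.add(new SimpleTuple(fields, Arrays.<Object>asList("dog", 1L)));
        stream.add(new SimpleTuple(fields, Arrays.<Object>asList("fleas", 2L)));
        return stream;
    }

    public static void main(String[] args) {
        for (SimpleTuple t : sampleStream()) {
            System.out.println(t.getValueByField("word") + " -> " + t.getValueByField("count"));
        }
    }
}
```

Looking values up by field name mirrors how the bolts later in this post read their input with `getStringByField`.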

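Spout (data source)

A spout is the data entry point of a Storm topology. Like a tap, it continuously collects external data, converts it into tuples, and emits those tuples as a stream to the bolts that receive them. When you write the code you will find that a spout's main job is collecting data. Possible data sources include:

- Messages from a distributed message queue: for example, Flume collects application logs and sends them to Kafka, and the spout reads from Kafka. The open-source storm-kafka tool appears to exist for exactly this purpose, consuming Kafka data from Storm;

- Click events generated by consumers on a shopping site's pages or app, used for real-time recommendation and the like;

- Readings collected from sensors, and so on.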
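The "tap" behaviour can be sketched without any Storm dependency. In the hypothetical `CyclingSource` below, each call to `nextTuple()` hands exactly one record to a collector and then moves on, wrapping around so the source never runs dry. The class name, the `Consumer`-based collector, and the `emitN` helper are assumptions made for this illustration, not Storm's spout API.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical model of a spout for illustration; it does not use Storm's API.
class CyclingSource {
    private final String[] records;
    private final Consumer<String> collector; // receives every emitted record
    private int index = 0;

    CyclingSource(String[] records, Consumer<String> collector) {
        this.records = records.clone();
        this.collector = collector;
    }

    // Emit one record per call and wrap around at the end of the array.
    void nextTuple() {
        collector.accept(records[index]);
        index = (index + 1) % records.length;
    }

    // Helper for the demo: call nextTuple() n times and gather what was emitted.
    static List<String> emitN(String[] records, int n) {
        List<String> emitted = new ArrayList<>();
        CyclingSource source = new CyclingSource(records, emitted::add);
        for (int i = 0; i < n; i++) {
            source.nextTuple();
        }
        return emitted;
    }

    public static void main(String[] args) {
        System.out.println(emitN(new String[] {"a", "b"}, 5));
    }
}
```

In a real topology the framework, not a loop in user code, decides when `nextTuple()` is called; the loop in `emitN` only stands in for that.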

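Bolt (processing unit)

A bolt is where most of the computation logic lives. It takes the output of one or more spouts or upstream bolts as input, processes the data, and then creates one or more streams. A bolt passively receives the streams emitted by spouts or other bolts and can filter, add fields, join and aggregate, compute, read from and write to databases, update caches, send messages downstream, and so on. Chained into a network of stream transformations, bolts deliver the functionality the user needs.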
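As a rough illustration of two of the operations listed above, the sketch below models a "filter" step and an "add a field" step as plain Java methods over lists. `BoltStagesSketch` and its method names are hypothetical, and the lists stand in for streams; real bolts receive tuples one at a time through Storm's API.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Plain-Java model of two bolt-like stages; it does not use Storm's API.
class BoltStagesSketch {
    // "Filter" stage: drop records that are empty or only whitespace.
    static List<String> filterBlank(List<String> input) {
        List<String> out = new ArrayList<>();
        for (String s : input) {
            if (!s.trim().isEmpty()) {
                out.add(s);
            }
        }
        return out;
    }

    // "Add a field" stage: turn each record into a (text, length) pair.
    static List<Object[]> addLength(List<String> input) {
        List<Object[]> out = new ArrayList<>();
        for (String s : input) {
            out.add(new Object[] {s, s.length()});
        }
        return out;
    }

    public static void main(String[] args) {
        // The output of one stage feeds the next, as with chained bolts.
        List<String> kept = filterBlank(Arrays.asList("a", "", " ", "bc"));
        for (Object[] pair : addLength(kept)) {
            System.out.println(pair[0] + " has length " + pair[1]);
        }
    }
}
```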

Distributed real-time wordCount

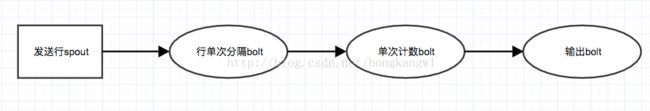

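Basic topology

Here the spout sends lines of words, one line at a time, to the sentence-splitting bolt, which sends the individual words on to the word-count bolt. The word-count bolt keeps a count for each word and sends the results to the report bolt, which prints them.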
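Before looking at the Storm code, it may help to see the same data flow as a single-process sketch. The class below is a plain-Java model of the three stages (split, count, report) and is not part of the original project; `WordCountFlowSketch` and its method names are made up for this illustration.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Plain-Java model of the topology's data flow; it does not use Storm's API.
class WordCountFlowSketch {
    // Stage 1 (split bolt): one sentence in, one word out per token.
    static List<String> split(String sentence) {
        return Arrays.asList(sentence.split(" "));
    }

    // Stage 2 (count bolt): keep a running total per word.
    static Map<String, Long> count(List<String> sentences) {
        Map<String, Long> counts = new HashMap<>();
        for (String sentence : sentences) {
            for (String word : split(sentence)) {
                counts.merge(word, 1L, Long::sum);
            }
        }
        return counts;
    }

    // Stage 3 (report bolt): print the totals.
    public static void main(String[] args) {
        Map<String, Long> counts =
                count(Arrays.asList("my dog has fleas", "the dog ate my homework"));
        System.out.println(counts);
    }
}
```

The Storm version differs mainly in that each stage runs as its own component, possibly on different machines, and the input never ends.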

Code

Sentence spout

import org.apache.storm.wanglu.selfstudy.util.Utils;

import java.util.Map;

import backtype.storm.spout.SpoutOutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichSpout;
import backtype.storm.tuple.Fields;
import backtype.storm.tuple.Values;

/**
 * Created by wanglu on 2017/5/1.
 */
public class SentenceSpout extends BaseRichSpout {
    private SpoutOutputCollector collector;
    private String[] sentences = {
        "my dog has fleas",
        "i like cold beverages",
        "the dog ate my homework",
        "don't have a cow man",
        "i don't think i like fleas"
    };
    // Position in the sentence array, kept per instance so that several
    // spout instances in one JVM cannot push each other out of bounds.
    private int index = 0;

    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        // Each emitted tuple has a single field named "sentence"
        declarer.declare(new Fields("sentence"));
    }

    @Override
    public void open(Map config, TopologyContext context,
                     SpoutOutputCollector collector) {
        this.collector = collector;
    }

    @Override
    public void nextTuple() {
        // Emit the current sentence, then advance and wrap around at the end
        this.collector.emit(new Values(sentences[index]));
        index = (index + 1) % sentences.length;
        // Utils is the author's own helper class; pause 1 ms between emits
        Utils.waitForMillis(1);
    }
}

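Sentence-splitting bolt

import java.util.Map;

import backtype.storm.task.OutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichBolt;
import backtype.storm.tuple.Fields;
import backtype.storm.tuple.Tuple;
import backtype.storm.tuple.Values;

public class SplitSentenceBolt extends BaseRichBolt {
    private OutputCollector collector;

    @Override
    public void prepare(Map config, TopologyContext context, OutputCollector collector) {
        this.collector = collector;
    }

    @Override
    public void execute(Tuple tuple) {
        // Split the incoming sentence on spaces and emit one tuple per word
        String sentence = tuple.getStringByField("sentence");
        String[] words = sentence.split(" ");
        for (String word : words) {
            this.collector.emit(new Values(word));
        }
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        declarer.declare(new Fields("word"));
    }
}

Word-count bolt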

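import java.util.HashMap;
import java.util.Map;

import backtype.storm.task.OutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichBolt;
import backtype.storm.tuple.Fields;
import backtype.storm.tuple.Tuple;
import backtype.storm.tuple.Values;

public class WordCountBolt extends BaseRichBolt {
    private OutputCollector collector = null;
    // Typed, per-instance map; a raw HashMap would not compile with the
    // Long assignment in execute()
    private Map<String, Long> counts = null;

    @Override
    public void prepare(Map config, TopologyContext context,
                        OutputCollector collector) {
        this.collector = collector;
        this.counts = new HashMap<String, Long>();
    }

    @Override
    public void execute(Tuple tuple) {
        String word = tuple.getStringByField("word");
        Long count = this.counts.get(word);
        if (count == null) {
            count = 0L;
        }
        count++;
        this.counts.put(word, count);
        // Emit the word together with its running total
        this.collector.emit(new Values(word, count));
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        declarer.declare(new Fields("word", "count"));
    }
}

Report bolt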

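import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// fastjson and slf4j, inferred from the calls used below
import com.alibaba.fastjson.JSONObject;
import com.alibaba.fastjson.serializer.SerializerFeature;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import backtype.storm.task.OutputCollector;
import backtype.storm.task.TopologyContext;
import backtype.storm.topology.OutputFieldsDeclarer;
import backtype.storm.topology.base.BaseRichBolt;
import backtype.storm.tuple.Tuple;

public class ReportBolt extends BaseRichBolt {
    private static final Logger logger = LoggerFactory.getLogger(ReportBolt.class);
    private Map<String, Long> counts = null;

    @Override
    public void prepare(Map config, TopologyContext context, OutputCollector collector) {
        this.counts = new HashMap<String, Long>();
    }

    @Override
    public void execute(Tuple tuple) {
        // Remember the latest running total for each word
        String word = tuple.getStringByField("word");
        Long count = tuple.getLongByField("count");
        this.counts.put(word, count);
    }

    @Override
    public void declareOutputFields(OutputFieldsDeclarer declarer) {
        // this bolt does not emit anything
    }

    @Override
    public void cleanup() {
        logger.warn("--- FINAL COUNTS ---");
        // The sorted key list is not used by the log line below,
        // which serializes the map in its own order
        List<String> keys = new ArrayList<String>();
        keys.addAll(this.counts.keySet());
        Collections.sort(keys);
        logger.warn("ReportBolt print final result is: " + JSONObject.toJSONString(counts, SerializerFeature.WriteMapNullValue));
        logger.warn("FINISH ReportBolt");
    }
}

Topology setup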

import backtype.storm.Config;
import backtype.storm.topology.TopologyBuilder;
import backtype.storm.tuple.Fields;

// JStormHelper is a helper class whose source is not shown in this post
public class WordCountTopology {
    private static final String SENTENCE_SPOUT_ID = "sentence-spout";
    private static final String SPLIT_BOLT_ID = "split-bolt";
    private static final String COUNT_BOLT_ID = "count-bolt";
    private static final String REPORT_BOLT_ID = "report-bolt";
    private static final String TOPOLOGY_NAME = "word-count-topology";

    public static void main(String[] args) throws Exception {
        SentenceSpout spout = new SentenceSpout();
        SplitSentenceBolt splitBolt = new SplitSentenceBolt();
        WordCountBolt countBolt = new WordCountBolt();
        ReportBolt reportBolt = new ReportBolt();

        TopologyBuilder builder = new TopologyBuilder();
        builder.setSpout(SENTENCE_SPOUT_ID, spout);
        // SentenceSpout --> SplitSentenceBolt
        builder.setBolt(SPLIT_BOLT_ID, splitBolt)
                .shuffleGrouping(SENTENCE_SPOUT_ID);
        // SplitSentenceBolt --> WordCountBolt
        builder.setBolt(COUNT_BOLT_ID, countBolt)
                .fieldsGrouping(SPLIT_BOLT_ID, new Fields("word"));
        // WordCountBolt --> ReportBolt
        builder.setBolt(REPORT_BOLT_ID, reportBolt)
                .globalGrouping(COUNT_BOLT_ID);

        Config conf = JStormHelper.getConfig(null);
        conf.setDebug(true);
        // Run in local mode rather than submitting to a cluster
        boolean isLocal = true;
        JStormHelper.runTopology(builder.createTopology(), TOPOLOGY_NAME, conf, 10,
                new JStormHelper.CheckAckedFail(conf), isLocal);
    }
}
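The `fieldsGrouping` call on the `word` field matters for correctness: it routes tuples by the value of that field, so every occurrence of a given word reaches the same `WordCountBolt` task and its counter lives in one place. The sketch below models that idea with a hash of the word modulo the number of tasks. The class `FieldsGroupingSketch`, the method `taskFor`, and the hashing scheme itself are assumptions for illustration; Storm's own partitioning code may differ in detail.

```java
// Illustrative model of routing by field value; not Storm's implementation.
class FieldsGroupingSketch {
    // Pick a task index in [0, numTasks) from the word alone, so equal
    // words always map to the same task.
    static int taskFor(String word, int numTasks) {
        return Math.floorMod(word.hashCode(), numTasks);
    }

    public static void main(String[] args) {
        String[] words = {"dog", "fleas", "dog", "my", "fleas"};
        for (String word : words) {
            System.out.println(word + " -> task " + taskFor(word, 4));
        }
    }
}
```

With `shuffleGrouping` instead, the same word could land on different counting tasks, and each task would hold only a partial count.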

Output

2017-05-01 17:00:02.475 WARN org.apache.storm.wanglu.selfstudy.example.wordCount.v1.ReportBolt - --- FINAL COUNTS ---

2017-05-01 17:00:02.477 ERROR com.alibaba.jstorm.task.execute.BaseExecutors - TaskStatus isn't shutdown, but calling shutdown, illegal state!

2017-05-01 17:00:02.650 ERROR com.alibaba.jstorm.task.master.TopologyMaster - No handler of __master_task_heartbeat

2017-05-01 17:00:02.660 ERROR com.alibaba.jstorm.task.master.TopologyMaster - No handler of __master_task_heartbeat

2017-05-01 17:00:02.684 ERROR com.alibaba.jstorm.task.master.TopologyMaster - No handler of __master_task_heartbeat

2017-05-01 17:00:02.695 ERROR com.alibaba.jstorm.task.master.TopologyMaster - No handler of __master_task_heartbeat

2017-05-01 17:00:02.710 ERROR com.alibaba.jstorm.task.master.TopologyMaster - No handler of __master_task_heartbeat

2017-05-01 17:00:02.728 ERROR com.alibaba.jstorm.task.master.TopologyMaster - No handler of __master_task_heartbeat

2017-05-01 17:00:02.765 ERROR com.alibaba.jstorm.task.master.TopologyMaster - No handler of __master_task_heartbeat

2017-05-01 17:00:02.816 WARN org.apache.storm.wanglu.selfstudy.example.wordCount.v1.ReportBolt - ReportBolt print final result is: {"a":12389,"think":12389,"beverages":12390,"like":24779,"homework":12389,"don't":24778,"i":37168,"cold":12390,"cow":12389,"my":24779,"the":12389,"ate":12389,"have":12389,"has":12390,"man":12389,"dog":24779,"fleas":24779}

2017-05-01 17:00:02.817 WARN org.apache.storm.wanglu.selfstudy.example.wordCount.v1.ReportBolt - FINISH ReportBolt

The log shows that when the program ends, ReportBolt's cleanup method is called and prints the complete word counts.

Nice!
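The final counts can be sanity-checked against the input. In one pass over the five sentences, "i" occurs three times, "like" twice, and "a" once, so their totals should stand in roughly a 3 : 2 : 1 ratio. The small sketch below does that arithmetic with the numbers taken from the log; `CountRatioCheck` and `roundedRatio` are hypothetical names used only for this check.

```java
// Cross-check of the logged totals; the numbers are copied from the log output.
class CountRatioCheck {
    // Ratio of a word's total to a baseline total, rounded to the nearest integer.
    static long roundedRatio(long count, long baseline) {
        return Math.round((double) count / baseline);
    }

    public static void main(String[] args) {
        long a = 12389L;    // "a" occurs once per pass
        long like = 24779L; // "like" occurs twice per pass
        long i = 37168L;    // "i" occurs three times per pass
        System.out.println("like / a = " + roundedRatio(like, a));
        System.out.println("i / a = " + roundedRatio(i, a));
    }
}
```

The totals are not exact multiples of each other because the topology was stopped partway through a pass over the sentences.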

Code on GitHub

org.apache.storm.wanglu.selfstudy.example.wordCount.v1

That's it for part one.