HA 高可用集群搭建(详细教程)

hadoop的高可用HA集群搭建(详细)

- 准备工作及zookeeper集群搭建

- 进行hadoop配置基本文件

- hadoop 环境变量配置

- 格式化hdfs

- 脚本文件

- 启动及运行等服务命令

- 四台机器需全部启动journalnode:

- 格式化c01的namenode

- 启动c01的namenode

- 将c02的namenode进行数据同步

- 然后将c01进行格式化zkfc

- 关闭dfs

- 然后启动dfs

- 再将c03和c04的resourcemanager启动

- 去网页查看效果

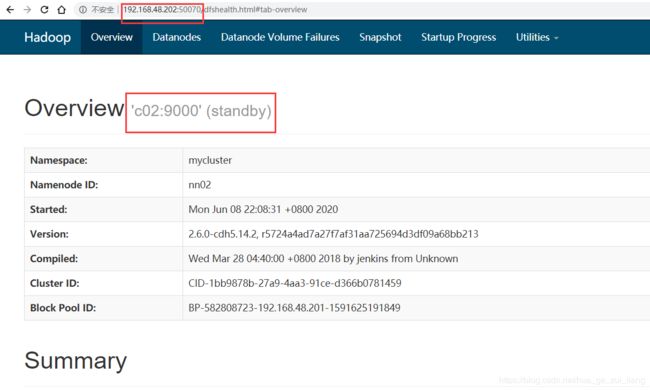

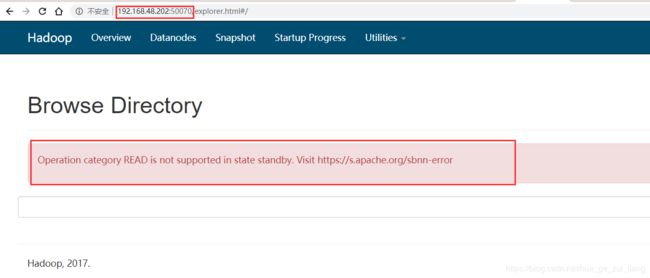

- 查看namenode

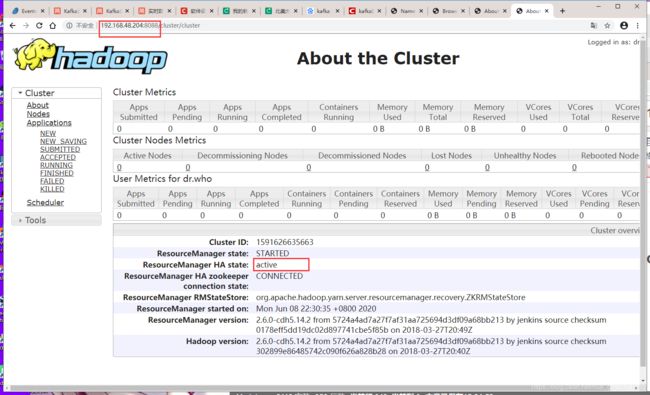

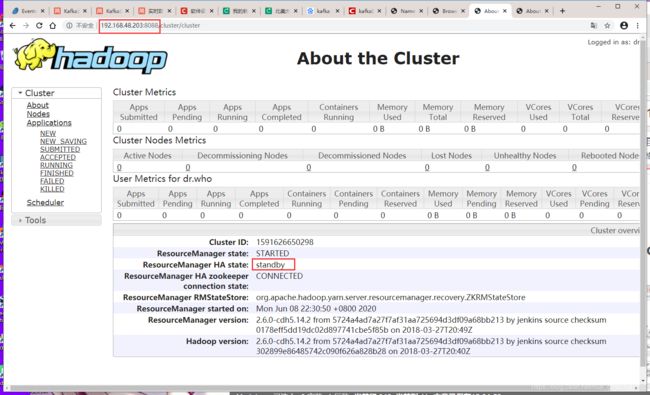

- 查看resourcemanager

本文以两台namenode(c01,c02)及两台resourcemanager(c03,c04)为例:

准备工作及zookeeper集群搭建

这些步骤点击此处去详细了解并配置

进行hadoop配置基本文件

需要配置如下文件:

hadoop-env.sh

mapred-env.sh

yarn-env.sh

slaves

mapred-site.xml

hadoop-env.sh

[root@c01 hadoop]# vi hadoop-env.sh

export JAVA_HOME=/opt/bigdata/jdk180

mapred-env.sh

[root@c01 hadoop]# vi mapred-env.sh

export JAVA_HOME=/opt/bigdata/jdk180

yarn-env.sh

[root@c01 hadoop]# vi yarn-env.sh

export JAVA_HOME=/opt/bigdata/jdk180

slaves

[root@c01 hadoop]# vi ./slaves

c01

c02

c03

c04

mapred-site.xml

[root@c01 hadoop]# cp mapred-site.xml.template mapred-site.xml

[root@c01 hadoop]# vi mapred-site.xml

mapreduce.framework.name

yarn

mapreduce.jobhistory.address

c01:10020

mapreduce.jobhistory.webapp.address

c01:19888

core-site.xml

[root@c01 hadoop]# vi ./core-site.xml

fs.defaultFS

hdfs://mycluster

hadoop.tmp.dir

/opt/bigdata/hadoop260/hadoopdata

dfs.journalnode.edits.dir

/opt/bigdata/hadoop260/hadoopdata/jn

hadoop.proxyuser.root.hosts

*

hadoop.proxyuser.root.groups

*

ha.zookeeper.quorum

c01:2181,c02:2181,c03:2181,c04:2181

hdfs-site.xml

[root@c01 hadoop]# vi ./hdfs-site.xml

dfs.replication

1

dfs.nameservices

mycluster

dfs.ha.namenodes.mycluster

nn01,nn02

dfs.namenode.rpc-address.mycluster.nn01

c01:9000

dfs.namenode.http-address.mycluster.nn01

c01:50070

dfs.namenode.rpc-address.mycluster.nn02

c02:9000

dfs.namenode.http-address.mycluster.nn02

c02:50070

dfs.namenode.shared.edits.dir

qjournal://c01:8485;c02:8485;c03:8485;c04:8485/mycluster

dfs.journalnode.edits.dir

/opt/bigdata/hadoop260/data/journaldata

dfs.ha.automatic-failover.enabled

true

dfs.client.failover.proxy.provider.mycluster

org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider

dfs.permissions.enable

false

dfs.ha.fencing.methods

shell(/bin/true)

dfs.ha.fencing.ssh.private-key-files

/root/.ssh/id_rsa

dfs.ha.fencing.ssh.connect-timeout

30000

yarn-site.xml

[root@c01 hadoop]# vi ./yarn-site.xml

yarn.resourcemanager.ha.enabled

true

yarn.resourcemanager.cluster-id

jyarn

yarn.resourcemanager.ha.rm-ids

rm1,rm2

yarn.resourcemanager.hostname.rm1

c03

yarn.resourcemanager.hostname.rm2

c04

yarn.resourcemanager.recovery.enabled

true

yarn.resourcemanager.store.class

org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore

yarn.resourcemanager.zk-address

c01:2181,c02:2181,c03:2181,c04:2181

yarn.nodemanager.aux-services

mapreduce_shuffle

yarn.nodemanager.aux-services.mapreduce.shuffle.class

org.apache.hadoop.mapred.ShuffleHandler

yarn.log-aggregation-enable

true

yarn.log-aggregation.retain-seconds

604800

hadoop 环境变量配置

[root@c01 hadoop]# vi /etc/profile.d/env.sh

export HADOOP_HOME=/opt/bigdata/hadoop260

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

[root@c01 hadoop]# source /etc/profile.d/env.sh

格式化hdfs

[root@c01 hadoop]# hadoop namenode -format

然后使用以下命令去执行脚本,拷贝到每台机器上

[root@c01 hadoop260]# xrsync ./etc/hadoop/

脚本文件

启动及运行等服务命令

通过脚本文件启动各个机器上的zookeeper

[root@c01 hadoop260]# zkop.sh start

四台机器需全部启动journalnode:

[root@c01 hadoop260]# ./sbin/hadoop-daemon.sh start journalnode

[root@c02 hadoop260]# ./sbin/hadoop-daemon.sh start journalnode

[root@c03 hadoop260]# ./sbin/hadoop-daemon.sh start journalnode

[root@c04 hadoop260]# ./sbin/hadoop-daemon.sh start journalnode

格式化c01的namenode

[root@c01 hadoop260]# ./bin/hdfs namenode -format

启动c01的namenode

[root@c01 hadoop260]# ./sbin/hadoop-daemon.sh start namenode

将c02的namenode进行数据同步

[root@c02 hadoop260]# ./bin/hdfs namenode -bootstrapStandby

然后将c01进行格式化zkfc

[root@c01 hadoop260]# ./bin/hdfs zkfc -formatZK

关闭dfs

[root@c01 hadoop260]# ./sbin/stop-dfs.sh

然后启动dfs

[root@c01 hadoop260]# ./sbin/start-dfs.sh

再将c03和c04的resourcemanager启动

[root@c03 hadoop260]# ./sbin/start-yarn.sh

[root@c04 hadoop260]# ./sbin/start-yarn.sh

通过showjps.sh 脚本查看全部机器的jps:

[root@c01 hadoop260]# showjps.sh jps

去网页查看效果

查看namenode

查看resourcemanager

可以看到resourcemanager也是一台active,一台standby。(阁下可以通过kill 掉其中一个namenode 或者 resourcemanager进程来查看他们的namenode以及resourcemanager的状态改变!)

ps:至此,HA高可用集群搭建完毕!

ps:望多多支持,后续更新中。。。