Running LSD-SLAM on Windows

Please credit the source when reposting: https://blog.csdn.net/huaweijian0324/article/details/80547782

My school project is mostly finished and my internship starts soon, so I'm taking some time to write up the Windows port of LSD-SLAM I did a while ago.

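LSD-SLAM is a large-scale, semi-dense, monocular visual SLAM system. I won't introduce it in detail here; personally I think it is a very elegant algorithm. If you want to understand it in depth, see lancelot_vim's blog:

https://blog.csdn.net/lancelot_vim/article/details/51706832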

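LSD-SLAM itself runs directly under ROS, but ROS only handles input and output. The core algorithm has already been extracted into a standalone project by a developer abroad (without point cloud display):

https://github.com/williammc/lsd_slam

If your connection is poor, you can download it here instead:

https://download.csdn.net/download/huaweijian0324/10257778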

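The first step is setting up the LSD-SLAM build environment on Windows. When I started I was completely lost: the setup is tedious because it depends on a large number of libraries. Special thanks to YOY_ here; I followed his blog step by step:

https://blog.csdn.net/ouyangying123/article/details/70861654

You will probably hit compile errors along the way. It took me a long time to figure out that the cause was the wrong version of the G2O library, whose API had changed. Use the older version of G2O, which you can download here:

https://download.csdn.net/download/huaweijian0324/10257773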

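At this point the program should run, but it only shows the original image and the depth map. To see the 3D point cloud you have to draw it yourself; the viewer code in the ROS version of LSD-SLAM is a good reference.

One thing needs special attention: in refreshPC() you must call glewInit(), otherwise the program throws a runtime exception. This one cost me a lot of time.

Here is the key code: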

#include "KeyFrameDisplay.h"

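// The header name on the original bare "#include" line was lost; the standard headers below are the ones this file needs.

// <GL/glew.h> is also required for glewInit() and is assumed here to be included by KeyFrameDisplay.h.

#include <cstdio>   // printf

#include <cstdlib>  // rand

#include <cstring>  // memcpy

#include <fstream>  // std::ofstream

#include "lsd_slam/util/settings.h"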

#include "opencv2/opencv.hpp"

KeyFrameDisplay::KeyFrameDisplay()

{

originalInput = 0;

id = 0;

vertexBufferIdValid = false;

glBuffersValid = false;

camToWorld = Sophus::Sim3f();

width = height = 0;

my_scaledTH = my_absTH = 0;

totalPoints = displayedPoints = 0;

}

KeyFrameDisplay::~KeyFrameDisplay()

{

if (vertexBufferIdValid)

{

glDeleteBuffers(1, &vertexBufferId);

vertexBufferIdValid = false;

}

if (originalInput != 0)

delete[] originalInput;

}

void KeyFrameDisplay::setFrom(lsd_slam::KeyFrameMessage* msg)

{

// copy over campose.

memcpy(camToWorld.data(), msg->camToWorld.data(), 7 * sizeof(float));

fx = msg->fx;

fy = msg->fy;

cx = msg->cx;

cy = msg->cy;

fxi = 1 / fx;

fyi = 1 / fy;

cxi = -cx / fx;

cyi = -cy / fy;

width = msg->width;

height = msg->height;

id = msg->id;

time = msg->time;

if (originalInput != 0)

delete[] originalInput;

originalInput = 0;

if (msg->pointcloud.size() != width*height*sizeof(InputPointDense))

{

if (msg->pointcloud.size() != 0)

{

printf("WARNING: PC with points, but number of points not right! (is %lu, should be %lu*%dx%d=%lu)\n",

(unsigned long)msg->pointcloud.size(), (unsigned long)sizeof(InputPointDense), width, height, (unsigned long)(width*height*sizeof(InputPointDense)));

}

}

else

{

originalInput = new InputPointDense[width*height];

memcpy(originalInput, msg->pointcloud.data(), width*height*sizeof(InputPointDense));

}

glBuffersValid = false;

}

void KeyFrameDisplay::refreshPC()

{

bool paramsStillGood = my_scaledTH == scaledDepthVarTH &&

my_absTH == absDepthVarTH &&

my_scale*1.2 > camToWorld.scale() &&

my_scale < camToWorld.scale()*1.2 &&

my_minNearSupport == minNearSupport &&

my_sparsifyFactor == sparsifyFactor;

if (glBuffersValid && (paramsStillGood || numRefreshedAlready > 10)) return;

numRefreshedAlready++;

glBuffersValid = true;

// delete old vertex buffer

if (vertexBufferIdValid)

{

glDeleteBuffers(1, &vertexBufferId);

vertexBufferIdValid = false;

}

// if there are no vertices, done!

if (originalInput == 0)

return;

// make data

MyVertex* tmpBuffer = new MyVertex[width*height];

my_scaledTH = scaledDepthVarTH;

my_absTH = absDepthVarTH;

my_scale = camToWorld.scale();

my_minNearSupport = minNearSupport;

my_sparsifyFactor = sparsifyFactor;

// data is directly in ros message, in correct format.

vertexBufferNumPoints = 0;

int total = 0, displayed = 0;

for (int y = 1; y < height - 1; y++)

for (int x = 1; x < width - 1; x++)

{

if (originalInput[x + y*width].idepth <= 0) continue;

total++;

if (my_sparsifyFactor > 1 && rand() % my_sparsifyFactor != 0) continue;

float depth = 1 / originalInput[x + y*width].idepth;

//float depth = -(1 / originalInput[x + y*width].idepth);

float depth4 = depth*depth; depth4 *= depth4;

if (originalInput[x + y*width].idepth_var * depth4 > my_scaledTH)

continue;

if (originalInput[x + y*width].idepth_var * depth4 * my_scale*my_scale > my_absTH)

continue;

if (my_minNearSupport > 1)

{

int nearSupport = 0;

for (int dx = -1; dx < 2; dx++)

for (int dy = -1; dy < 2; dy++)

{

int idx = x + dx + (y + dy)*width;

if (originalInput[idx].idepth > 0)

{

float diff = originalInput[idx].idepth - 1.0f / depth;

if (diff*diff < 2 * originalInput[x + y*width].idepth_var)

nearSupport++;

}

}

if (nearSupport < my_minNearSupport)

continue;

}

tmpBuffer[vertexBufferNumPoints].point[0] = (x*fxi + cxi) * depth;

tmpBuffer[vertexBufferNumPoints].point[1] = (y*fyi + cyi) * depth;

tmpBuffer[vertexBufferNumPoints].point[2] = depth;

tmpBuffer[vertexBufferNumPoints].color[3] = 100;

tmpBuffer[vertexBufferNumPoints].color[2] = originalInput[x + y*width].color[0];

tmpBuffer[vertexBufferNumPoints].color[1] = originalInput[x + y*width].color[1];

tmpBuffer[vertexBufferNumPoints].color[0] = originalInput[x + y*width].color[2];

vertexBufferNumPoints++;

displayed++;

}

totalPoints = total;

displayedPoints = displayed;

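// Without glewInit() the buffer calls below fail at runtime, because GLEW has not loaded the OpenGL function pointers yet.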

glewInit();

vertexBufferId = 0;

glGenBuffers(1, &vertexBufferId);

glBindBuffer(GL_ARRAY_BUFFER, vertexBufferId); // for vertex coordinates

//glBindBuffer(GL_ELEMENT_ARRAY_BUFFER, vertexBufferId); // for vertex coordinates

glBufferData(GL_ARRAY_BUFFER, sizeof(MyVertex) * vertexBufferNumPoints, tmpBuffer, GL_STATIC_DRAW);

vertexBufferIdValid = true;

if (!keepInMemory)

{

delete[] originalInput;

originalInput = 0;

}

delete[] tmpBuffer;

}

void KeyFrameDisplay::drawCam(float lineWidth, float* color)

{

if(width == 0)

return;

glPushMatrix();

Sophus::Matrix4f m = camToWorld.matrix();

glMultMatrixf((GLfloat*)m.data());

if(color == 0)

glColor3f(1,0,0);

else

glColor3f(color[0],color[1],color[2]);

glLineWidth(lineWidth);

glBegin(GL_LINES);

glVertex3f(0,0,0);

glVertex3f(0.05*(0-cx)/fx,0.05*(0-cy)/fy,0.05);

glVertex3f(0,0,0);

glVertex3f(0.05*(0-cx)/fx,0.05*(height-1-cy)/fy,0.05);

glVertex3f(0,0,0);

glVertex3f(0.05*(width-1-cx)/fx,0.05*(height-1-cy)/fy,0.05);

glVertex3f(0,0,0);

glVertex3f(0.05*(width-1-cx)/fx,0.05*(0-cy)/fy,0.05);

glVertex3f(0.05*(width-1-cx)/fx,0.05*(0-cy)/fy,0.05);

glVertex3f(0.05*(width-1-cx)/fx,0.05*(height-1-cy)/fy,0.05);

glVertex3f(0.05*(width-1-cx)/fx,0.05*(height-1-cy)/fy,0.05);

glVertex3f(0.05*(0-cx)/fx,0.05*(height-1-cy)/fy,0.05);

glVertex3f(0.05*(0-cx)/fx,0.05*(height-1-cy)/fy,0.05);

glVertex3f(0.05*(0-cx)/fx,0.05*(0-cy)/fy,0.05);

glVertex3f(0.05*(0-cx)/fx,0.05*(0-cy)/fy,0.05);

glVertex3f(0.05*(width-1-cx)/fx,0.05*(0-cy)/fy,0.05);

glEnd();

glPopMatrix();

}

int KeyFrameDisplay::flushPC(std::ofstream* f)

{

MyVertex* tmpBuffer = new MyVertex[width*height];

int num = 0;

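for(int y=1;y<height-1;y++)

for(int x=1;x<width-1;x++)

{

if(originalInput[x+y*width].idepth <= 0) continue;

if(my_sparsifyFactor > 1 && rand()%my_sparsifyFactor != 0) continue;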

float depth = 1 / originalInput[x+y*width].idepth;

float depth4 = depth*depth; depth4*= depth4;

if(originalInput[x+y*width].idepth_var * depth4 > my_scaledTH)

continue;

if(originalInput[x+y*width].idepth_var * depth4 * my_scale*my_scale > my_absTH)

continue;

if(my_minNearSupport > 1)

{

int nearSupport = 0;

for(int dx=-1;dx<2;dx++)

for(int dy=-1;dy<2;dy++)

{

int idx = x+dx+(y+dy)*width;

if(originalInput[idx].idepth > 0)

{

float diff = originalInput[idx].idepth - 1.0f / depth;

if(diff*diff < 2*originalInput[x+y*width].idepth_var)

nearSupport++;

}

}

if(nearSupport < my_minNearSupport)

continue;

}

Sophus::Vector3f pt = camToWorld * (Sophus::Vector3f((x*fxi + cxi), (y*fyi + cyi), 1) * depth);

tmpBuffer[num].point[0] = pt[0];

tmpBuffer[num].point[1] = pt[1];

tmpBuffer[num].point[2] = pt[2];

tmpBuffer[num].color[3] = 100;

tmpBuffer[num].color[2] = originalInput[x+y*width].color[0];

tmpBuffer[num].color[1] = originalInput[x+y*width].color[1];

tmpBuffer[num].color[0] = originalInput[x+y*width].color[2];

num++;

}

for(int i=0;i<num;i++)

{

f->write((const char *)tmpBuffer[i].point,3*sizeof(float));

float color = tmpBuffer[i].color[0] / 255.0;

f->write((const char *)&color,sizeof(float));

}

// *f << tmpBuffer[i].point[0] << " " << tmpBuffer[i].point[1] << " " << tmpBuffer[i].point[2] << " " << (tmpBuffer[i].color[0] / 255.0) << "\n";

delete[] tmpBuffer; // allocated with new[], so it must be released with delete[]

printf("Done flushing frame %d (%d points)!\n", this->id, num);

return num;

}

void KeyFrameDisplay::drawPC(float pointSize, float alpha)

{

refreshPC();

if(!vertexBufferIdValid)

{

return;

}

GLfloat LightColor[] = {1, 1, 1, 1};

if(alpha < 1)

{

glEnable(GL_BLEND);

glBlendFunc(GL_SRC_ALPHA, GL_ONE_MINUS_SRC_ALPHA);

LightColor[0] = LightColor[1] = 0;

glEnable(GL_LIGHTING);

glDisable(GL_LIGHT1);

glLightfv (GL_LIGHT0, GL_AMBIENT, LightColor);

}

else

{

glDisable(GL_LIGHTING);

}

glPushMatrix();

Sophus::Matrix4f m = camToWorld.matrix();

glMultMatrixf((GLfloat*)m.data());

glPointSize(pointSize);

glBindBuffer(GL_ARRAY_BUFFER, vertexBufferId);

glVertexPointer(3, GL_FLOAT, sizeof(MyVertex), 0);

glColorPointer(4, GL_UNSIGNED_BYTE, sizeof(MyVertex), (const void*) (3*sizeof(float)));

glEnableClientState(GL_VERTEX_ARRAY);

glEnableClientState(GL_COLOR_ARRAY);

glDrawArrays(GL_POINTS, 0, vertexBufferNumPoints);

glDisableClientState(GL_COLOR_ARRAY);

glDisableClientState(GL_VERTEX_ARRAY);

glPopMatrix();

if(alpha < 1)

{

glDisable(GL_BLEND);

glDisable(GL_LIGHTING);

LightColor[2] = LightColor[1] = LightColor[0] = 1;

glLightfv (GL_LIGHT0, GL_AMBIENT_AND_DIFFUSE, LightColor);

}

}

然后再插上一个单目摄像头,就可以愉快地运行了,我用的摄像头是罗技C270型号。

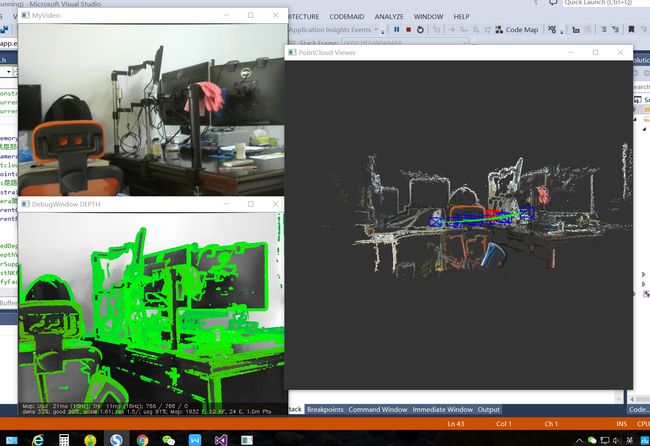

下面附上一张在VS2013下运行的效果图:

还是很炫的吧~

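After that I wanted to turn the raw point cloud into an RGB point cloud, since a colored point cloud would look even better. Following a post by a developer abroad, the main idea is to add an overloaded Frame constructor that also takes the RGB data.

Key code: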

Frame::Frame(int id, int width, int height, const Eigen::Matrix3f& K, double timestamp, const unsigned char* image, const unsigned char* rgbImage)

{

initialize(id, width, height, K, timestamp);

data.image[0] = FrameMemory::getInstance().getFloatBuffer(data.width[0] * data.height[0]);

data.imageRGB[0] = FrameMemory::getInstance().getFloatBuffer(data.width[0] * data.height[0] * 3);

float* maxPt = data.image[0] + data.width[0] * data.height[0];

float* maxPtRGB = data.imageRGB[0] + data.width[0] * data.height[0] * 3;

for (float* pt = data.image[0]; pt < maxPt; pt++)

{

*pt = *image;

image++;

}

for (float* pt = data.imageRGB[0]; pt < maxPtRGB; pt++)

{

*pt = *rgbImage;

rgbImage++;

}

data.imageValid[0] = true;

privateFrameAllocCount++;

if (enablePrintDebugInfo && printMemoryDebugInfo)

printf("ALLOCATED frame %d, now there are %d\n", this->id(), privateFrameAllocCount);

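}

Important:

Be sure to release the added RGB data in the Frame destructor; otherwise every frame leaks its RGB buffer, which adds up to a serious memory leak.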
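The ownership rule can be sketched in isolation as follows. This is only an illustration under stated assumptions: `BufferPool` and `RgbFrame` are hypothetical stand-ins invented for this example, not LSD-SLAM's FrameMemory or Frame classes. The point is simply that every buffer acquired in the constructor needs a matching release in the destructor.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical buffer pool that counts how many buffers are still handed out.
class BufferPool {
public:
    float* acquire(std::size_t count) {
        ++outstanding_;
        return new float[count];
    }

    void release(float* buffer) {
        assert(buffer != nullptr);
        delete[] buffer;
        --outstanding_;
    }

    int outstanding() const { return outstanding_; }

private:
    int outstanding_ = 0;
};

// Hypothetical frame that owns a grayscale buffer and an RGB buffer.
class RgbFrame {
public:
    RgbFrame(BufferPool& pool, int width, int height)
        : pool_(pool),
          gray_(pool.acquire(static_cast<std::size_t>(width) * height)),
          rgb_(pool.acquire(static_cast<std::size_t>(width) * height * 3)) {}

    ~RgbFrame() {
        pool_.release(gray_);
        pool_.release(rgb_);  // omitting this leaks one RGB buffer per frame
    }

    // Copying would release the same buffers twice, so it is disabled.
    RgbFrame(const RgbFrame&) = delete;
    RgbFrame& operator=(const RgbFrame&) = delete;

private:
    BufferPool& pool_;
    float* gray_;
    float* rgb_;
};
```

Creating an `RgbFrame` raises the pool's outstanding count to 2, and it drops back to 0 once the frame goes out of scope. A counter like this is a cheap way to confirm that the destructor really returns the extra buffer.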

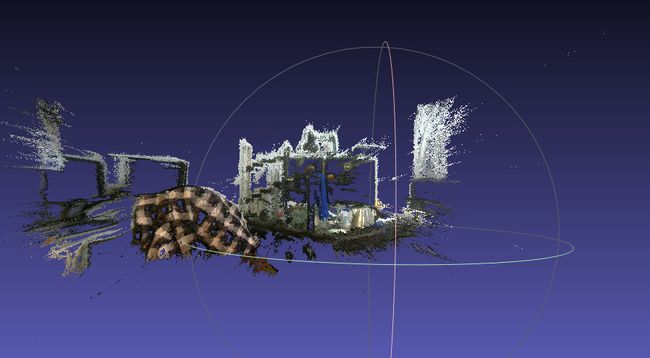

下面附上一张改进后的rgb点云效果图:

是不是看起来又更炫了一点呢?

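The point cloud can be saved locally as a .ply file and opened directly in MeshLab.

Here is a rendering of a .ply file I saved a long time ago (that's a set of pajamas hanging on the chair):

Pretty three-dimensional, right? Y(^_^)Y~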

OK, that's all for now. If I got anything wrong, corrections are very welcome~