一步一步学linux操作系统: 13 进程调度二_主动调度

进程调度可分为主动调度与抢占式调度

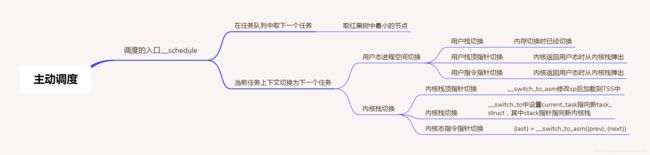

主动调度

进程运行中,发现里面有一条指令 sleep,或者在等待某个 I/O 事件,就要主动让出 CPU

两个例子代码片段

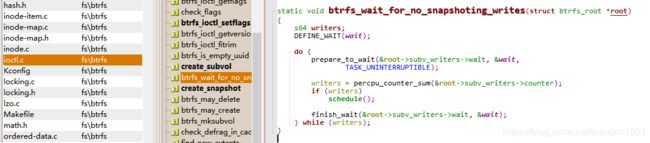

等待一个写入

写入块设备的一个典型场景,写入需要一段时间,这段时间用不上 CPU,需要主动让给其他进程

\fs\btrfs\ioctl.c

static void btrfs_wait_for_no_snapshoting_writes(struct btrfs_root *root)

{

s64 writers;

DEFINE_WAIT(wait);

do {

prepare_to_wait(&root->subv_writers->wait, &wait,

TASK_UNINTERRUPTIBLE);

writers = percpu_counter_sum(&root->subv_writers->counter);

if (writers)

schedule();

finish_wait(&root->subv_writers->wait, &wait);

} while (writers);

}

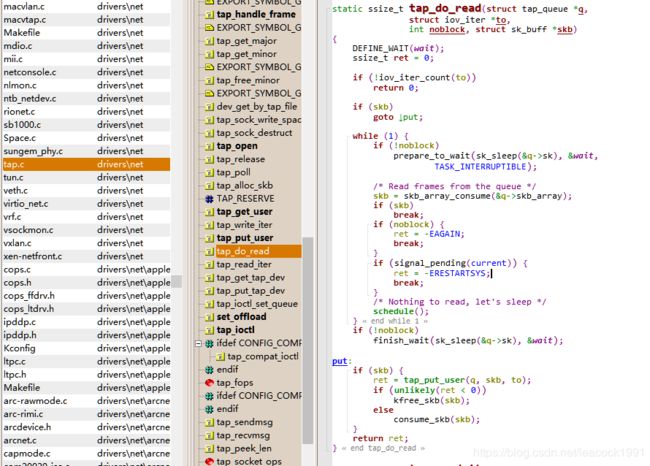

从 Tap 网络设备等待一个读取

Tap 网络设备是虚拟机使用的网络设备,当没有数据到来的时候,它也需要等待,所以也会选择把 CPU 让给其他进程

\drivers\net\tap.c

static ssize_t tap_do_read(struct tap_queue *q,

struct iov_iter *to,

int noblock, struct sk_buff *skb)

{

DEFINE_WAIT(wait);

ssize_t ret = 0;

if (!iov_iter_count(to))

return 0;

if (skb)

goto put;

while (1) {

if (!noblock)

prepare_to_wait(sk_sleep(&q->sk), &wait,

TASK_INTERRUPTIBLE);

/* Read frames from the queue */

skb = skb_array_consume(&q->skb_array);

if (skb)

break;

if (noblock) {

ret = -EAGAIN;

break;

}

if (signal_pending(current)) {

ret = -ERESTARTSYS;

break;

}

/* Nothing to read, let's sleep */

schedule();

}

if (!noblock)

finish_wait(sk_sleep(&q->sk), &wait);

put:

if (skb) {

ret = tap_put_user(q, skb, to);

if (unlikely(ret < 0))

kfree_skb(skb);

else

consume_skb(skb);

}

return ret;

}

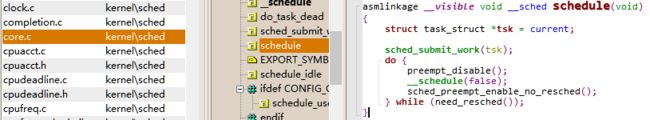

schedule 函数

计算机在操作外部设备的时候,往往需要让出 CPU,就像上面两段代码一样,选择调用 schedule() 函数。

\kernel\sched\core.c

asmlinkage __visible void __sched schedule(void)

{

struct task_struct *tsk = current;

sched_submit_work(tsk);

do {

preempt_disable();

__schedule(false);

sched_preempt_enable_no_resched();

} while (need_resched());

}

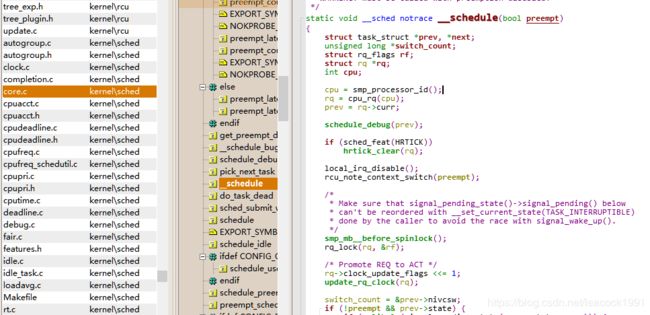

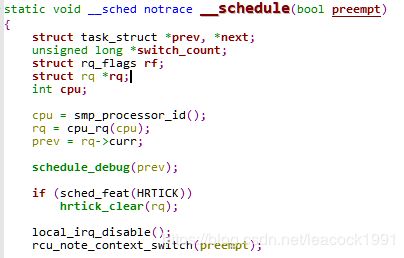

__schedule 函数

\kernel\sched\core.c

/*

* __schedule() is the main scheduler function.

*

* The main means of driving the scheduler and thus entering this function are:

*

* 1. Explicit blocking: mutex, semaphore, waitqueue, etc.

*

* 2. TIF_NEED_RESCHED flag is checked on interrupt and userspace return

* paths. For example, see arch/x86/entry_64.S.

*

* To drive preemption between tasks, the scheduler sets the flag in timer

* interrupt handler scheduler_tick().

*

* 3. Wakeups don't really cause entry into schedule(). They add a

* task to the run-queue and that's it.

*

* Now, if the new task added to the run-queue preempts the current

* task, then the wakeup sets TIF_NEED_RESCHED and schedule() gets

* called on the nearest possible occasion:

*

* - If the kernel is preemptible (CONFIG_PREEMPT=y):

*

* - in syscall or exception context, at the next outmost

* preempt_enable(). (this might be as soon as the wake_up()'s

* spin_unlock()!)

*

* - in IRQ context, return from interrupt-handler to

* preemptible context

*

* - If the kernel is not preemptible (CONFIG_PREEMPT is not set)

* then at the next:

*

* - cond_resched() call

* - explicit schedule() call

* - return from syscall or exception to user-space

* - return from interrupt-handler to user-space

*

* WARNING: must be called with preemption disabled!

*/

static void __sched notrace __schedule(bool preempt)

{

struct task_struct *prev, *next;

unsigned long *switch_count;

struct rq_flags rf;

struct rq *rq;

int cpu;

cpu = smp_processor_id();

rq = cpu_rq(cpu);

prev = rq->curr;

schedule_debug(prev);

if (sched_feat(HRTICK))

hrtick_clear(rq);

local_irq_disable();

rcu_note_context_switch(preempt);

/*

* Make sure that signal_pending_state()->signal_pending() below

* can't be reordered with __set_current_state(TASK_INTERRUPTIBLE)

* done by the caller to avoid the race with signal_wake_up().

*/

smp_mb__before_spinlock();

rq_lock(rq, &rf);

/* Promote REQ to ACT */

rq->clock_update_flags <<= 1;

update_rq_clock(rq);

switch_count = &prev->nivcsw;

if (!preempt && prev->state) {

if (unlikely(signal_pending_state(prev->state, prev))) {

prev->state = TASK_RUNNING;

} else {

deactivate_task(rq, prev, DEQUEUE_SLEEP | DEQUEUE_NOCLOCK);

prev->on_rq = 0;

if (prev->in_iowait) {

atomic_inc(&rq->nr_iowait);

delayacct_blkio_start();

}

/*

* If a worker went to sleep, notify and ask workqueue

* whether it wants to wake up a task to maintain

* concurrency.

*/

if (prev->flags & PF_WQ_WORKER) {

struct task_struct *to_wakeup;

to_wakeup = wq_worker_sleeping(prev);

if (to_wakeup)

try_to_wake_up_local(to_wakeup, &rf);

}

}

switch_count = &prev->nvcsw;

}

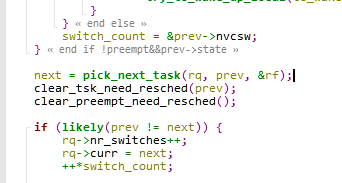

next = pick_next_task(rq, prev, &rf);

clear_tsk_need_resched(prev);

clear_preempt_need_resched();

if (likely(prev != next)) {

rq->nr_switches++;

rq->curr = next;

++*switch_count;

trace_sched_switch(preempt, prev, next);

/* Also unlocks the rq: */

rq = context_switch(rq, prev, next, &rf);

} else {

rq->clock_update_flags &= ~(RQCF_ACT_SKIP|RQCF_REQ_SKIP);

rq_unlock_irq(rq, &rf);

}

balance_callback(rq);

}

主要步骤如下

第一步, 在当前的 CPU 上,取出任务队列 rq

static void __sched notrace __schedule(bool preempt)

{

struct task_struct *prev, *next;

unsigned long *switch_count;

struct rq_flags rf;

struct rq *rq;

int cpu;

cpu = smp_processor_id();

rq = cpu_rq(cpu);

prev = rq->curr;

......

task_struct *prev 指向这个 CPU 的任务队列上面正在运行的那个进程 curr,prev是因为一旦它被切换下来,那它就成了前任了

第二步, 获取下一个任务,task_struct *next 指向下一个任务,这就是继任

next = pick_next_task(rq, prev, &rf);

clear_tsk_need_resched(prev);

clear_preempt_need_resched();

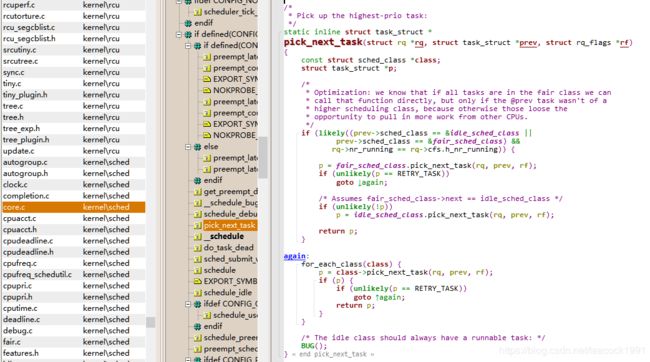

pick_next_task 的实现

\kernel\sched\core.c

/*

* Pick up the highest-prio task:

*/

static inline struct task_struct *

pick_next_task(struct rq *rq, struct task_struct *prev, struct rq_flags *rf)

{

const struct sched_class *class;

struct task_struct *p;

/*

* Optimization: we know that if all tasks are in the fair class we can

* call that function directly, but only if the @prev task wasn't of a

* higher scheduling class, because otherwise those loose the

* opportunity to pull in more work from other CPUs.

*/

if (likely((prev->sched_class == &idle_sched_class ||

prev->sched_class == &fair_sched_class) &&

rq->nr_running == rq->cfs.h_nr_running)) {

p = fair_sched_class.pick_next_task(rq, prev, rf);

if (unlikely(p == RETRY_TASK))

goto again;

/* Assumes fair_sched_class->next == idle_sched_class */

if (unlikely(!p))

p = idle_sched_class.pick_next_task(rq, prev, rf);

return p;

}

again:

for_each_class(class) {

p = class->pick_next_task(rq, prev, rf);

if (p) {

if (unlikely(p == RETRY_TASK))

goto again;

return p;

}

}

/* The idle class should always have a runnable task: */

BUG();

}

again 这里就是 12进程调度一 中介绍的依次调用调度类,但是这里有了一个优化,因为大部分进程是普通进程,所以大部分情况下会调用上面的逻辑,调用的就是 fair_sched_class.pick_next_task。

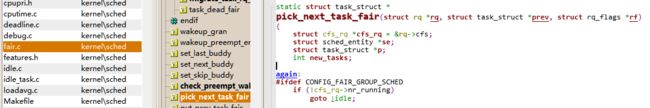

由 12进程调度一可知fair_sched_class中调用的是 pick_next_task_fair

\kernel\sched\fair.c

static struct task_struct *

pick_next_task_fair(struct rq *rq, struct task_struct *prev, struct rq_flags *rf)

{

struct cfs_rq *cfs_rq = &rq->cfs;

struct sched_entity *se;

struct task_struct *p;

int new_tasks;

....

对于 CFS 调度类,取出相应的队列 cfs_rq,这就是 12进程调度一介绍的那棵红黑树。

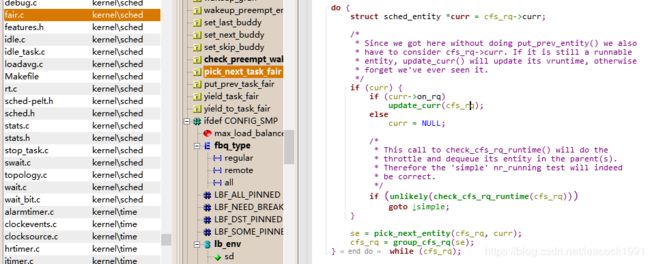

pick_next_task_fair函数中

struct sched_entity *curr = cfs_rq->curr;

if (curr) {

if (curr->on_rq)

update_curr(cfs_rq);

else

curr = NULL;

......

}

se = pick_next_entity(cfs_rq, curr);

取出当前正在运行的任务 curr,如果依然是可运行的状态,也即处于进程就绪状态,则调用 update_curr 更新 vruntime

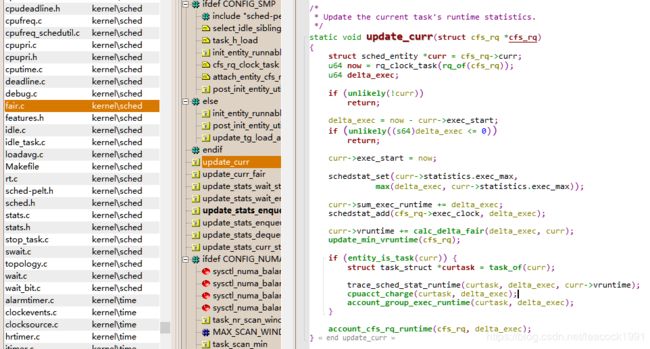

update_curr 函数,计算并更新vruntime,虚拟运行时间 vruntime += 实际运行时间 delta_exec * NICE_0_LOAD/ 权重

\kernel\sched\fair.c

/*

* Update the current task's runtime statistics.

*/

static void update_curr(struct cfs_rq *cfs_rq)

{

struct sched_entity *curr = cfs_rq->curr;

u64 now = rq_clock_task(rq_of(cfs_rq));

u64 delta_exec;

if (unlikely(!curr))

return;

delta_exec = now - curr->exec_start;

if (unlikely((s64)delta_exec <= 0))

return;

curr->exec_start = now;

schedstat_set(curr->statistics.exec_max,

max(delta_exec, curr->statistics.exec_max));

curr->sum_exec_runtime += delta_exec;

schedstat_add(cfs_rq->exec_clock, delta_exec);

curr->vruntime += calc_delta_fair(delta_exec, curr);

update_min_vruntime(cfs_rq);

if (entity_is_task(curr)) {

struct task_struct *curtask = task_of(curr);

trace_sched_stat_runtime(curtask, delta_exec, curr->vruntime);

cpuacct_charge(curtask, delta_exec);

account_group_exec_runtime(curtask, delta_exec);

}

account_cfs_rq_runtime(cfs_rq, delta_exec);

}

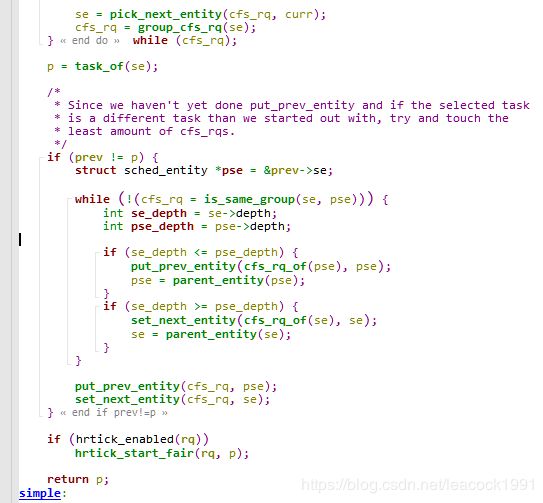

pick_next_entity 从红黑树里面,取最左边的一个节点,也就是下一个调度实体

pick_next_task_fair函数中

p = task_of(se);

if (prev != p) {

struct sched_entity *pse = &prev->se;

......

put_prev_entity(cfs_rq, pse);

set_next_entity(cfs_rq, se);

}

return p

task_of 得到下一个调度实体对应的 task_struct,如果发现继任和前任不一样,这就说明有一个更需要运行的进程了,就需要更新红黑树了。

前面 vruntime 更新过了,put_prev_entity 放回红黑树,然后 set_next_entity 将继任者设为当前任务。

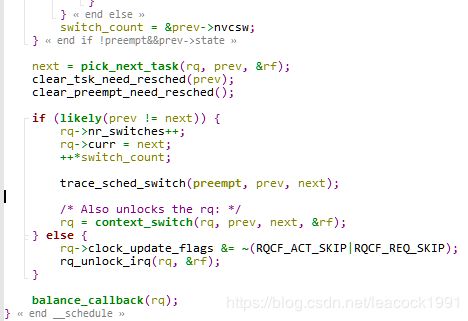

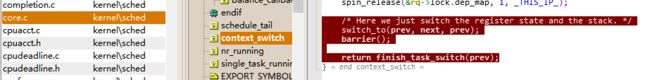

第三步,当选出的继任者和前任不同,就要进行上下文切换,继任者进程正式进入运行

上面 static void __sched notrace __schedule(bool preempt)函数中

if (likely(prev != next)) {

rq->nr_switches++;

rq->curr = next;

++*switch_count;

trace_sched_switch(preempt, prev, next);

/* Also unlocks the rq: */

rq = context_switch(rq, prev, next, &rf);

} else {

rq->clock_update_flags &= ~(RQCF_ACT_SKIP|RQCF_REQ_SKIP);

rq_unlock_irq(rq, &rf);

}

进程上下文切换

上面 static void __sched notrace __schedule(bool preempt)函数中的 context_switch

上下文切换主要干两件事情,一是切换进程空间,也即虚拟内存;二是切换寄存器和 CPU 上下文。

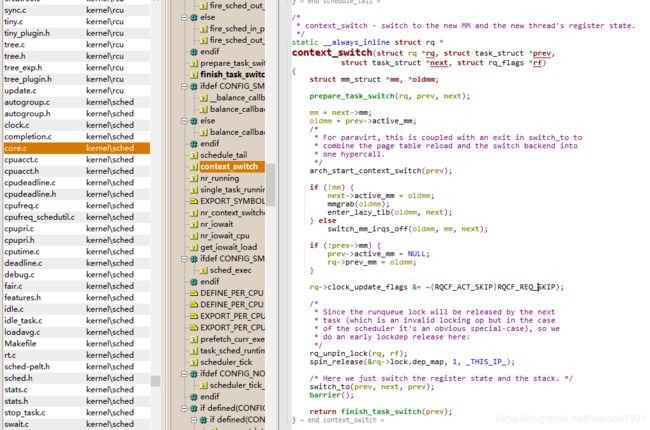

context_switch 的实现

\kernel\sched\core.c

/*

* context_switch - switch to the new MM and the new thread's register state.

*/

static __always_inline struct rq *

context_switch(struct rq *rq, struct task_struct *prev,

struct task_struct *next, struct rq_flags *rf)

{

struct mm_struct *mm, *oldmm;

prepare_task_switch(rq, prev, next);

mm = next->mm;

oldmm = prev->active_mm;

/*

* For paravirt, this is coupled with an exit in switch_to to

* combine the page table reload and the switch backend into

* one hypercall.

*/

arch_start_context_switch(prev);

if (!mm) {

next->active_mm = oldmm;

mmgrab(oldmm);

enter_lazy_tlb(oldmm, next);

} else

switch_mm_irqs_off(oldmm, mm, next);

if (!prev->mm) {

prev->active_mm = NULL;

rq->prev_mm = oldmm;

}

rq->clock_update_flags &= ~(RQCF_ACT_SKIP|RQCF_REQ_SKIP);

/*

* Since the runqueue lock will be released by the next

* task (which is an invalid locking op but in the case

* of the scheduler it's an obvious special-case), so we

* do an early lockdep release here:

*/

rq_unpin_lock(rq, rf);

spin_release(&rq->lock.dep_map, 1, _THIS_IP_);

/* Here we just switch the register state and the stack. */

switch_to(prev, next, prev);

barrier();

return finish_task_switch(prev);

}

首先是内存空间的切换

见 context_switch 的实现 中函数

涉及内存管理的内容比较多,在后续内存管理介绍

接着 switch_to 这就是寄存器和栈的切换

它调用到了 __switch_to_asm,是一段汇编代码,主要用于栈的切换

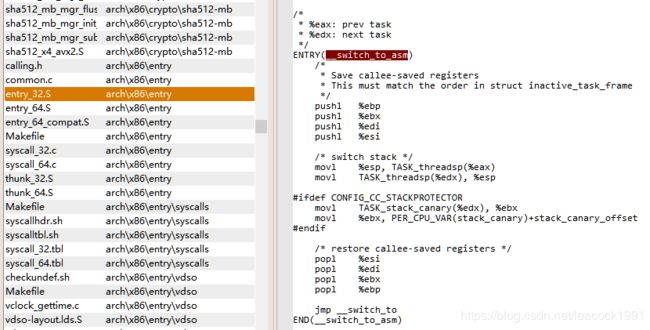

32 位操作系统

切换的是栈顶指针 esp

\arch\x86\entry\entry_32.S

/*

* %eax: prev task

* %edx: next task

*/

ENTRY(__switch_to_asm)

/*

* Save callee-saved registers

* This must match the order in struct inactive_task_frame

*/

pushl %ebp

pushl %ebx

pushl %edi

pushl %esi

/* switch stack */

movl %esp, TASK_threadsp(%eax)

movl TASK_threadsp(%edx), %esp

#ifdef CONFIG_CC_STACKPROTECTOR

movl TASK_stack_canary(%edx), %ebx

movl %ebx, PER_CPU_VAR(stack_canary)+stack_canary_offset

#endif

/* restore callee-saved registers */

popl %esi

popl %edi

popl %ebx

popl %ebp

jmp __switch_to

END(__switch_to_asm)

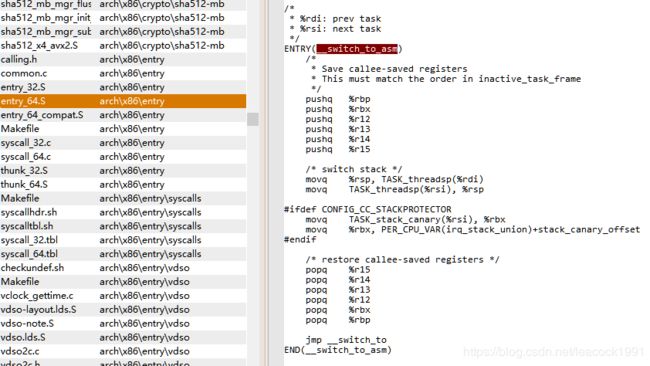

切换的是栈顶指针 rsp

\arch\x86\entry\entry_64.S

/*

* %rdi: prev task

* %rsi: next task

*/

ENTRY(__switch_to_asm)

/*

* Save callee-saved registers

* This must match the order in inactive_task_frame

*/

pushq %rbp

pushq %rbx

pushq %r12

pushq %r13

pushq %r14

pushq %r15

/* switch stack */

movq %rsp, TASK_threadsp(%rdi)

movq TASK_threadsp(%rsi), %rsp

#ifdef CONFIG_CC_STACKPROTECTOR

movq TASK_stack_canary(%rsi), %rbx

movq %rbx, PER_CPU_VAR(irq_stack_union)+stack_canary_offset

#endif

/* restore callee-saved registers */

popq %r15

popq %r14

popq %r13

popq %r12

popq %rbx

popq %rbp

jmp __switch_to

END(__switch_to_asm)

最终,都返回了 __switch_to 这个函数

__switch_to 这个函数源码位置

- 32位在 arch/x86/kernel/process_32.c

- 64位在 arch/x86/kernel/process_64.c

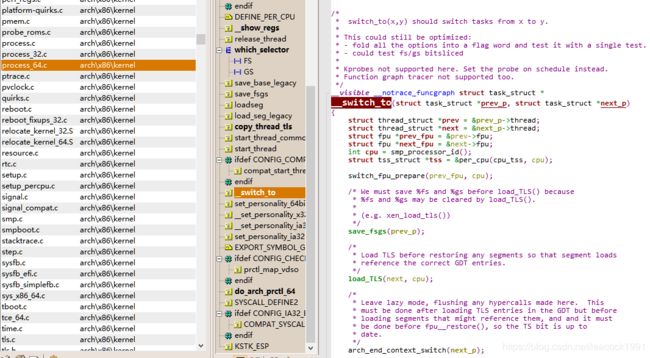

对于 32 位和 64 位操作系统虽然有不同的实现,但里面做的事情是差不多的,以64 位操作系统为例

arch/x86/kernel/process_64.c

__visible __notrace_funcgraph struct task_struct *

__switch_to(struct task_struct *prev_p, struct task_struct *next_p)

{

struct thread_struct *prev = &prev_p->thread;

struct thread_struct *next = &next_p->thread;

......

int cpu = smp_processor_id();

struct tss_struct *tss = &per_cpu(cpu_tss, cpu);

......

load_TLS(next, cpu);

......

this_cpu_write(current_task, next_p);

/* Reload esp0 and ss1. This changes current_thread_info(). */

load_sp0(tss, next);

......

return prev_p;

}

在 x86 体系结构中,提供了一种以硬件的方式进行进程切换的模式,对于每个进程,x86 希望在内存里面维护一个 TSS(Task State Segment,任务状态段)结构。这里面有所有的寄存器。

寄存器 TR(Task Register,任务寄存器)

一个特殊的寄存器 TR(Task Register,任务寄存器)指向某个进程的 TSS,更改 TR 的值,将会触发硬件保存 CPU 所有寄存器的值到当前进程的 TSS 中,然后从新进程的 TSS 中读出所有寄存器值,加载到 CPU 对应的寄存器中。

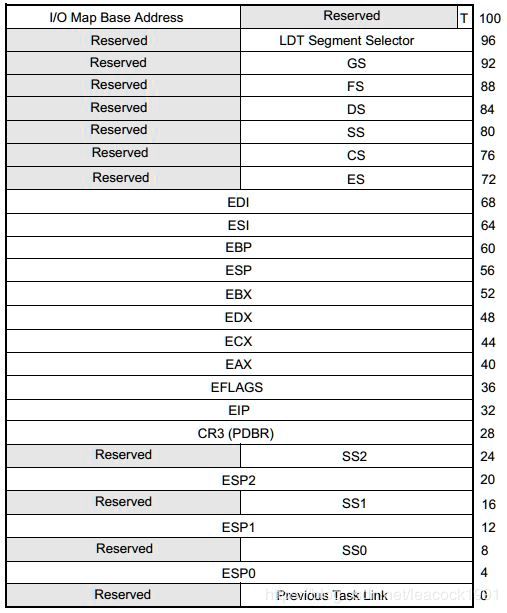

32 位的 TSS 结构

图片来自 Intel® 64 and IA-32 Architectures Software Developer’s Manual Combined Volumes

做进程切换的时候,没必要每个寄存器都切换,如果这样每个进程一个 TSS,需要全量保存,全量切换,动作太大了,所以没有必要全部切换

Linux 操作系统是如何切换的呢?

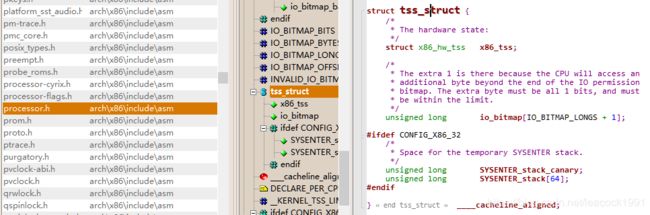

系统初始化会调用 cpu_init(init/main.c中start_kernel(void)函数 系统初始化->trap_init ->cpu_init),这里面会给每一个 CPU 关联一个 TSS,然后将 TR 指向这个 TSS,然后在操作系统的运行过程中,TR 就不切换了,永远指向这个 TSS。TSS 用数据结构 tss_struct 表示,在 x86_hw_tss (/arch/x86/include/asm/processor.h)中可以看到和上图相应的结构

cpu_init函数实现

\arch\x86\kernel\cpu\common.c

void cpu_init(void)

{

int cpu = smp_processor_id();

struct task_struct *curr = current;

struct tss_struct *t = &per_cpu(cpu_tss, cpu);

......

load_sp0(t, thread);

set_tss_desc(cpu, t);

load_TR_desc();

......

}

数据结构 tss_struct

\arch\x86\include\asm\processor.h

struct tss_struct {

/*

* The hardware state:

*/

struct x86_hw_tss x86_tss;

/*

* The extra 1 is there because the CPU will access an

* additional byte beyond the end of the IO permission

* bitmap. The extra byte must be all 1 bits, and must

* be within the limit.

*/

unsigned long io_bitmap[IO_BITMAP_LONGS + 1];

#ifdef CONFIG_X86_32

/*

* Space for the temporary SYSENTER stack.

*/

unsigned long SYSENTER_stack_canary;

unsigned long SYSENTER_stack[64];

#endif

} ____cacheline_aligned;

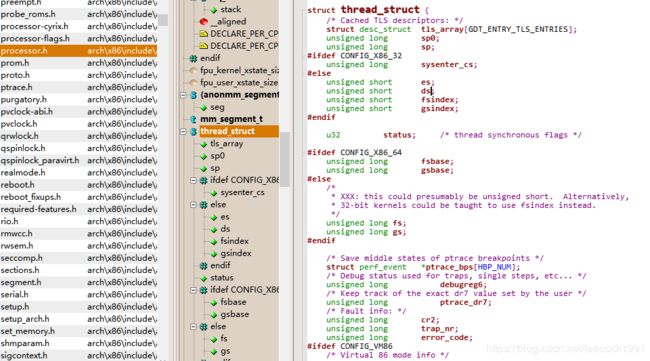

在 Linux 中,真的参与进程切换的寄存器很少,主要的就是栈顶寄存器,于是,在 task_struct 里面,还有一个成员变量 thread。这里面保留了要切换进程的时候需要修改的寄存器。

/* CPU-specific state of this task: */

struct thread_struct thread;

\arch\x86\include\asm\processor.h

struct thread_struct {

/* Cached TLS descriptors: */

struct desc_struct tls_array[GDT_ENTRY_TLS_ENTRIES];

unsigned long sp0;

unsigned long sp;

#ifdef CONFIG_X86_32

unsigned long sysenter_cs;

#else

unsigned short es;

unsigned short ds;

unsigned short fsindex;

unsigned short gsindex;

#endif

u32 status; /* thread synchronous flags */

#ifdef CONFIG_X86_64

unsigned long fsbase;

unsigned long gsbase;

......

所谓的进程切换,就是将某个进程的 thread_struct 里面的寄存器的值,写入到 CPU 的 TR 指向的 tss_struct,对于 CPU 来讲,这就算是完成了切换。

指令指针的保存与恢复

盘点进程切换中的用户栈和内核栈以及相关指针

从进程 A 切换到进程 B

用户栈

在切换内存空间的时候,用户栈已切换。每个进程的用户栈都是独立的,都在内存空间里面

内核栈

在 __switch_to 里面切换了,也就是将 current_task 指向当前的 task_struct。里面的 void *stack 指针,指向的就是当前的内核栈。

内核栈的栈顶指针

在 __switch_to_asm 里面已经切换了栈顶指针,并且将栈顶指针在 __switch_to 加载到了 TSS 里面。

用户栈的栈顶指针

如果当前在内核里面的话,它是在内核栈顶部的 pt_regs 结构里面,当从内核返回用户态运行的时候,pt_regs 里面有所有当时在用户态的时候运行的上下文信息得以恢复

指令指针寄存器

指令指针寄存器指向下一条指令

进程的调度都最终会调用到 __schedule 函数,假设进程 A 在用户态是要写一个文件

写文件需要通过系统调用到达内核态,在这个切换的过程中,用户态的指令指针寄存器是保存在 pt_regs 里面的

到了内核态,一步一步执行中发现写文件需要等待,于是就调用 __schedule 函数进行主动调度,这个时候,进程 A 在内核态的指令指针是指向 __schedule 了,A 进程的内核栈会保存这个 __schedule 的调用,而且知道这是从 btrfs_wait_for_no_snapshoting_writes 这个函数里面进去的

__schedule 里面经过层层调用,到达了 context_switch 的最后三行指令

\kernel\sched\core.c

/* Here we just switch the register state and the stack. */

switch_to(prev, next, prev);

barrier();

return finish_task_switch(prev);

barrier 语句是一个编译器指令,用于保证 switch_to 和 finish_task_switch的执行顺序,不会因为编译阶段优化而改变

当进程 A 在内核里面执行 switch_to 的时候,内核态的指令指针也是指向这一行的

但是在switch_to 里面,将寄存器和栈都切换到成了进程 B 的,唯一没有变的就是指令指针寄存器。

当 switch_to 返回的时候,指令指针寄存器指向了下一条语句 finish_task_switch

但这个时候的 finish_task_switch 已经不是进程 A 的 finish_task_switch 了,而是进程 B 的 finish_task_switch 了。

进程的调度都最终会调用到 __schedule 函数,那么之前B 进程被别人切换走的时候,也是调用 __schedule,也是调用到 switch_to,B 进程之前的下一个指令也是 finish_task_switch,这次切换回来指令指针指到这里是没有错的。

从 finish_task_switch 完毕后,返回 __schedule 的调用了,返回到哪里呢?按照函数返回的原理,当然是从内核栈里面去找,由于这时内核栈已切换为B进程的内核栈,所以就不是返回A进程的 btrfs_wait_for_no_snapshoting_writes ,而是B进程之前被切换的那一时刻内核栈中的函数

假设,B 就是最前面例子里面调用 tap_do_read 读网卡的进程

之前调用 __schedule 的时候,是从 tap_do_read 这个函数调用进去的。那么B 进程的内核栈里面放的是 tap_do_read,于是,从A进程 __schedule 返回之后,是接着 tap_do_read 运行。最后B进程内核执行完毕返回用户态,同样恢复B进程的 pt_regs,恢复B进程用户态的指令指针寄存器,从B进程用户态接着运行。

参考资料:

趣谈Linux操作系统(极客时间)链接:

http://gk.link/a/10iXZ

欢迎大家来一起交流学习