Elasticsearch Search Engine Study Notes (3): Building a Site Search Engine with Django and Elasticsearch

This post builds on the previous two Elasticsearch study notes, because some configuration has to be changed. See those two posts for the details.

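1. Project background:

The project is built with Django. The user types a query on the front end, the back end uses Elasticsearch to search for relevant content, and the matching articles are sent back to the front end and shown to the user. That is the whole search feature.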

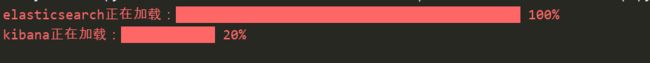

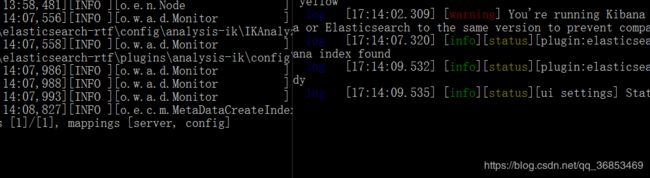

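2. Start ES and Kibana with the startup commands prepared earlier

3. Bulk-load our data into Elasticsearch

First read the data from the database. There is not much of it, so it is read in one go; a large table would have to be read in batches.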

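def getDataFromDB(self):
    '''
    Fetch the articles from the database.
    :return: all rows as a tuple of tuples (empty if nothing was found)
    '''
    query_sql1 = "select * from article order by id"
    self.conn.ping(reconnect=True)  # reconnect if the connection has dropped
    row_count = self.cur.execute(query_sql1)  # execute() returns the number of rows
    print(row_count)
    if row_count:
        return self.cur.fetchall()  # return every row from the table
    return ()  # nothing found: return an empty result rather than None

Next, bulk-write the fetched rows into ES. The first load is a bulk insert. Because the rows come straight from the database, the id field is unique. Writing each document to ES keyed by that id gives insert-or-update behavior, so there is no need to check first whether a document already exists in ES.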

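def dataInsertEs(self):
    '''
    Write the data into ES.
    :return:
    '''
    infoAll = self.getDataFromDB()
    es = Elasticsearch()  # local node; pass hosts explicitly if yours differs
    action = ({
        "_index": "blog",
        "_type": "article",  # mapping types are deprecated in ES 7 and removed in ES 8
        "_id": info[0],  # database id as document id, so a re-run updates instead of duplicating
        "_source": {
            "id": info[0],
            "title": info[1],
            "summary": info[2],
            "body": info[3],
            "img_link": info[4],
            "create_date": info[5],
            "update_date": info[6],
            "views": info[7],
            "slug": info[8],
            "author_id": info[9],
            "category_id": info[10],
            "loves": info[11]
        }
    } for info in infoAll)
    helpers.bulk(es, action)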
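To make the insert-or-update idea concrete, here is a minimal sketch of building bulk actions whose `_id` comes from the database id. The helper name `build_actions` and the three-column sample rows are made up for illustration (the real table has more columns). It only builds the dictionaries, so it runs without an ES node.

```python
def build_actions(rows, fields, index="blog"):
    """Yield one bulk action per row, keyed by the first column (the database id)."""
    for row in rows:
        yield {
            "_index": index,
            "_id": row[0],                      # same id on every run -> same document
            "_source": dict(zip(fields, row)),  # column name -> value
        }


# hypothetical sample rows: (id, title, body)
sample_fields = ("id", "title", "body")
sample_rows = [(1, "Hello ES", "first post"), (2, "Django search", "second post")]

actions = list(build_actions(sample_rows, sample_fields))
print(actions[0])
```

Passing the generator to `helpers.bulk(es, build_actions(rows, fields))` would index the rows. Because the `_id` is fixed, running it again overwrites each document rather than adding a copy.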

Complete code for reading the database and writing to ES:

# -*- coding: utf-8 -*-
'''
@Author :Jason
'''
from elasticsearch import Elasticsearch
from elasticsearch import helpers
import pymysql


class InsertDataIntoEs(object):
    '''
    Scheduled task that keeps ES in sync with the database
    '''
    def __init__(self):
        self.conn = pymysql.connect(
            # local test database; these are fake credentials
            host='localhost',
            port=3306,
            user='root',
            password='DF#%fdkj',
            db='blog',
            charset='utf8mb4'
        )
        self.cur = self.conn.cursor()

    def getDataFromDB(self):
        '''
        Fetch the articles from the database.
        :return: all rows as a tuple of tuples (empty if nothing was found)
        '''
        query_sql1 = "select * from jason_article order by id"
        self.conn.ping(reconnect=True)  # reconnect if the connection has dropped
        row_count = self.cur.execute(query_sql1)  # execute() returns the number of rows
        print(row_count)
        if row_count:
            InfoAll = self.cur.fetchall()
            print(InfoAll)
            return InfoAll  # return every row from the table
        return ()  # nothing found: return an empty result rather than None

    def dataInsertEs(self):
        '''
        Write the data into ES.
        :return:
        '''
        infoAll = self.getDataFromDB()
        es = Elasticsearch()  # local node; pass hosts explicitly if yours differs
        action = ({
            "_index": "blog",
            "_type": "article",  # mapping types are deprecated in ES 7 and removed in ES 8
            "_id": info[0],  # database id as document id, so a re-run updates instead of duplicating
            "_source": {
                "id": info[0],
                "title": info[1],
                "summary": info[2],
                "body": info[3],
                "img_link": info[4],
                "create_date": info[5],
                "update_date": info[6],
                "views": info[7],
                "slug": info[8],
                "author_id": info[9],
                "category_id": info[10],
                "loves": info[11]
            }
        } for info in infoAll)
        helpers.bulk(es, action)


if __name__ == "__main__":
    InsES = InsertDataIntoEs()
    InsES.dataInsertEs()

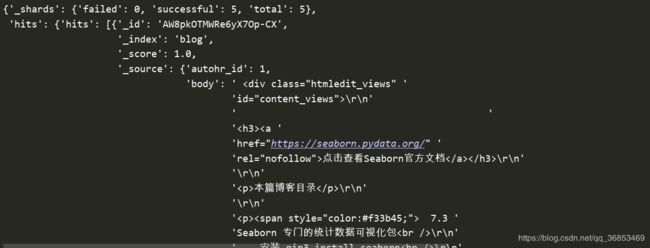
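The earlier remark that a large table should be read in batches can be sketched with the DB-API `fetchmany` call. This is only an illustration: `iter_rows` is a made-up helper name, and an in-memory SQLite table stands in for MySQL so the snippet runs anywhere. With pymysql the same loop applies, though as far as I know its default cursor still buffers the whole result on the client unless an unbuffered cursor such as `pymysql.cursors.SSCursor` is used.

```python
import sqlite3


def iter_rows(cursor, sql, batch_size=500):
    """Run the query and yield rows one by one, fetching batch_size rows at a time."""
    cursor.execute(sql)
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:  # an empty batch means the result set is exhausted
            break
        yield from batch


# demo data: a tiny stand-in table (assumption, not the blog's real schema)
conn = sqlite3.connect(":memory:")
conn.execute("create table article (id integer primary key, title text)")
conn.executemany(
    "insert into article (title) values (?)",
    [("post %d" % i,) for i in range(1, 6)],
)

rows = list(iter_rows(conn.cursor(), "select id, title from article order by id", batch_size=2))
print(len(rows), rows[0])
```

Since `helpers.bulk` accepts any iterable of actions, a generator like this could feed it directly without holding the whole table in a list.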

4. Check that the data was stored:

# -*- coding: utf-8 -*-
'''
@Author :Jason
'''
from elasticsearch import Elasticsearch
from pprint import pprint

# connect to the local ES node and search the blog index
es = Elasticsearch()
result = es.search(index='blog')
pprint(result)

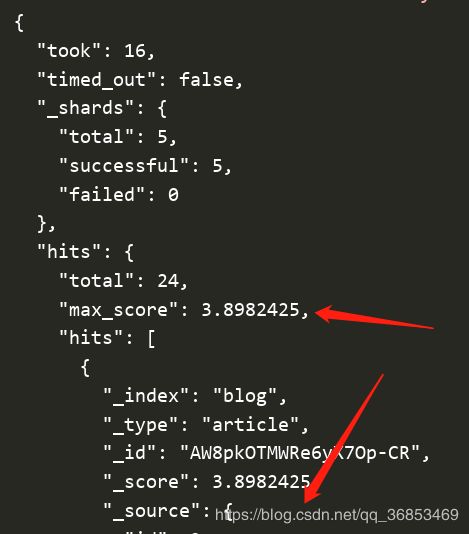

As the figure shows, the database data has been successfully stored in ES's data directory.
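Reading the raw response by eye gets tedious, so here is a small sketch that pulls the total and the titles out of a search response. The helper name `summarize_hits` and the sample dictionary are assumptions written by hand to mirror the response layout; they are not output from the blog's index.

```python
def summarize_hits(result):
    """Return (total, [(doc_id, score, title), ...]) from a search response dict."""
    hits = result["hits"]
    total = hits["total"]
    # ES 7+ reports the total as {"value": n, "relation": ...}; older versions use a plain int
    if isinstance(total, dict):
        total = total["value"]
    rows = [(h["_id"], h["_score"], h["_source"].get("title")) for h in hits["hits"]]
    return total, rows


# hand-written sample in the shape of an ES 7 response (illustrative only)
sample_result = {
    "hits": {
        "total": {"value": 2, "relation": "eq"},
        "hits": [
            {"_id": "1", "_score": 1.3, "_source": {"id": 1, "title": "Hello ES"}},
            {"_id": "2", "_score": 0.4, "_source": {"id": 2, "title": "Django search"}},
        ],
    }
}

total, rows = summarize_hits(sample_result)
print(total, rows)
```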

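5. Install the Chinese analyzer elasticsearch-analysis-ik, or use Python's jieba tokenizer instead

See the previous post for details. A tokenizer is needed because the searches involve Chinese text and irregular input.

To use jieba from Python, install it with the following command:

pip3 install jieba

Since the previous post used ik, here we use Python's jieba.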

6. Configure the route in Django:

path('search/', esSearch, name='esSearch'),

7. Implement the search logic in the esSearch view function in view.py:

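7.1 The URL may contain Chinese characters, which arrive percent-encoded, so we URL-decode it first

from urllib import parse

# decode percent-escapes (for example Chinese characters) in the requested path
url = parse.unquote(request.get_full_path())

7.2 Take the query string and segment it with cut_for_search, jieba's method designed for search-engine tokenization: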

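import re
from collections import Counter

import jieba

b = re.findall(r'/search/\?q=(.*)', str(url))  # extract with a regex rather than a route parameter
search_words_list = []
if b:
    # spaces in the query arrive as "+"; a literal "+" (sent as %2B) was already decoded by unquote, so it becomes a space too
    wordsList = b[0].replace("+", " ")
    # drop tokens shorter than 2 characters, treated as meaningless for now
    wordsList = [x for x in jieba.cut_for_search(wordsList) if len(x) >= 2]
    wordsList = Counter(wordsList).most_common(3)  # for long input keep only the three most frequent words
    for word in wordsList:
        search_words_list.append(word[0])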

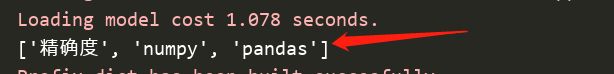

print(search_words_list)

The result: the query is segmented into the words we actually want to search for.
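For comparison, the decoding and filtering steps of 7.1 and 7.2 can be sketched with the standard library alone. This is an alternative to the regex approach, not what the view above does: `extract_query` and `pick_keywords` are hypothetical helper names, and the token list is written by hand in place of real jieba output.

```python
from collections import Counter
from urllib.parse import parse_qs, quote_plus, urlsplit


def extract_query(full_path, param="q"):
    """Return the decoded value of a query parameter, or '' if it is absent."""
    query_string = urlsplit(full_path).query
    # parse_qs turns '+' into spaces and decodes percent-escapes in one step
    values = parse_qs(query_string).get(param, [])
    return values[0] if values else ""


def pick_keywords(tokens, min_len=2, top_n=3):
    """Keep tokens of at least min_len characters and return the top_n most frequent."""
    kept = [t for t in tokens if len(t) >= min_len]
    return [word for word, _count in Counter(kept).most_common(top_n)]


# build an encoded path the way a browser form would send it
path = "/search/?q=" + quote_plus("django 搜索引擎")
query = extract_query(path)
print(query)

# hand-written tokens standing in for jieba.cut_for_search output
tokens = ["搜索", "引擎", "搜索", "的", "引擎", "搜索", "django"]
keywords = pick_keywords(tokens, top_n=2)
print(keywords)
```

One difference worth noting: here a literal plus sign sent as `%2B` survives as `+`, because the `+` to space conversion happens before percent-decoding.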

7.3 Pass the segmented word list to the ES query and search across the data (this is handled very crudely, as a plain fuzzy search):

# query documents whose title or body contains the keywords
body = {
    "query": {
        "multi_match": {
            "query": " ".join(search_words_list),  # keywords as one space-separated string
            "fields": ["title", "body"]
        }
    },
    "sort": {
        "_score": {  # sort by relevance score, highest first
            "order": "desc"
        }
    }
}

Or:

body = {
    "query": {
        "bool": {
            # one match clause per (keyword, field) pair
            "should": [
                {"match": {field: word}}
                for word in search_words_list
                for field in ("title", "body")
            ]
        }
    },
    "sort": {
        "_score": {  # sort by relevance score, highest first
            "order": "desc"
        }
    }
}

es = Elasticsearch()
result = es.search(index='blog', body=body)

Looking at the search results, they come back sorted by score in descending order.
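One case the query bodies above do not cover is an empty keyword list, for example when every token was filtered out. A minimal sketch of guarding against that, assuming a made-up helper `build_search_body` that returns `None` so the view can skip the ES call:

```python
def build_search_body(words, fields=("title", "body")):
    """Build a bool/should query body, or return None when there is nothing to search."""
    if not words:
        return None
    # one match clause per (keyword, field) pair
    should = [{"match": {field: word}} for word in words for field in fields]
    return {
        "query": {"bool": {"should": should}},
        "sort": {"_score": {"order": "desc"}},  # highest score first
    }


body = build_search_body(["django", "搜索"])
print(len(body["query"]["bool"]["should"]))
```

In the view this could be used as `body = build_search_body(search_words_list)`, rendering an empty result page when it returns `None`.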

7.4 Before returning the matched articles, highlight the keywords by wrapping them in styling:

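for info in result["hits"]["hits"]:
    source = info["_source"]  # the stored document fields live under _source
    for word in search_words_list:
        # str.replace returns a new string, so assign it back;
        # the <em> wrapper is an assumed placeholder, use whatever tag your template styles
        source["title"] = source["title"].replace(word, '<em>' + word + '</em>')
        source["body"] = source["body"].replace(word, '<em>' + word + '</em>')

Finally, just pass the template and the data to the front end.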
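Replacing keywords one after another can misfire when a later keyword matches inside markup inserted for an earlier one. Here is a hedged sketch of a single-pass variant: the `highlight` helper and the `<em>` tags are assumptions for illustration, and the text is HTML-escaped first so article content cannot inject markup.

```python
import html
import re


def highlight(text, words, pre_tag="<em>", post_tag="</em>"):
    """Escape text for HTML, then wrap every occurrence of any keyword in the tags."""
    escaped = html.escape(text)
    # longest keywords first so a longer keyword wins over a shorter one it contains
    ordered = sorted({html.escape(w) for w in words if w}, key=len, reverse=True)
    if not ordered:
        return escaped
    pattern = re.compile("|".join(re.escape(w) for w in ordered))
    # one pass over the text, so inserted tags are never matched again
    return pattern.sub(lambda m: pre_tag + m.group(0) + post_tag, escaped)


print(highlight("Django and Elasticsearch", ["Django", "Elasticsearch"]))
```

Elasticsearch can also return highlighted fragments itself through the `highlight` section of the search request, which may be worth a look if matching in Python becomes limiting.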