Implementing keepalived High Availability with SaltStack

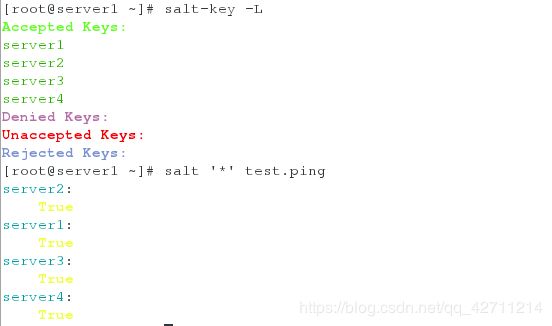

Lab environment (this walkthrough assumes SaltStack is already deployed):

OS: Red Hat Enterprise Linux 6

server1 172.25.45.1 salt-master keepalived

server2 172.25.45.2 salt-minion apache

server3 172.25.45.3 salt-minion nginx

server4 172.25.45.4 salt-minion keepalived

Add the LoadBalancer package repository to the yum configuration on server4:

[root@server4 ~]# vim /etc/yum.repos.d/rhel-source.repo

[rhel-source]

name=Red Hat Enterprise Linux $releasever - $basearch - Source

baseurl=http://172.25.45.250/rhel6.5

enabled=1

gpgcheck=1

gpgkey=file:///etc/pki/rpm-gpg/RPM-GPG-KEY-redhat-release

[saltsack]

name=saltsack

baseurl=http://172.25.45.250/rhel6

enabled=1

gpgcheck=0

[LoadBalancer]

name=LoadBalancer

baseurl=http://172.25.45.250/rhel6.5/LoadBalancer

gpgcheck=0

1. Install keepalived (built from source)

[root@server1 ~]# cd /srv/salt/

[root@server1 salt]# mkdir keepalived

[root@server1 salt]# cd keepalived/

[root@server1 keepalived]# mkdir files

[root@server1 keepalived]# cd files/

[root@server1 files]# mv /root/keepalived-2.0.6.tar.gz .

[root@server1 files]# ls

keepalived-2.0.6.tar.gz

[root@server1 files]# cd ..

[root@server1 keepalived]# vim install.sls

kp-install:

pkg.installed:

- pkgs:

- gcc:

- pcre-devel:

- openssl-devel

file.managed:

- name: /mnt/keepalived-2.0.6.tar.gz

- source: salt://keepalived/files/keepalived-2.0.6.tar.gz

cmd.run:

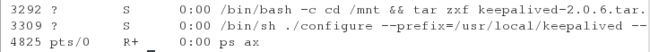

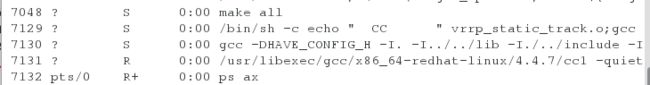

- name: cd /mnt && tar zxf keepalived-2.0.6.tar.gz && cd keepalived-2.0.6

&& ./configure --prefix=/usr/local/keepalived --with-init=SYSV &>/dev/null &&

make &>/dev/null && make install &>/dev/null

- creates: /usr/local/keepalived

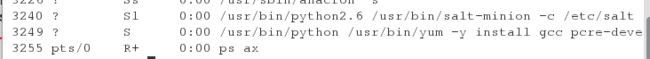

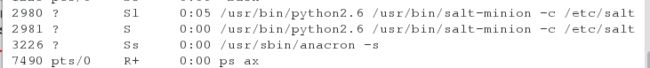
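The `creates` argument is what keeps the build step from running again on every push: Salt skips the `cmd.run` command once `/usr/local/keepalived` exists. The sketch below imitates that guard in plain shell. `run_unless_exists` is a hypothetical helper written for this illustration, not a Salt function, and it works in a temporary directory rather than touching the real install path.

```shell
#!/bin/sh
# Hypothetical helper imitating the effect of Salt's `creates` argument:
# run the given command only when the marker path does not exist yet.
run_unless_exists() {
    path=$1
    shift
    if [ -e "$path" ]; then
        echo "skipped: $path already exists"
    else
        "$@"
    fi
}

# Demonstration in a scratch directory (assumption: mktemp is available).
workdir=$(mktemp -d)
run_unless_exists "$workdir/done" touch "$workdir/done"   # first call runs touch
run_unless_exists "$workdir/done" touch "$workdir/done"   # second call is skipped
rm -rf "$workdir"
```

This is also why re-running `state.sls keepalived.install` after adding more states to the file does not recompile keepalived.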

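[root@server1 keepalived]# salt server4 state.sls keepalived.install # push

Watch the processes on server4 in real time while the state runs:

Installing the dependency packages

Running configure

Compiling

Compilation finished

Check on server4 that keepalived was installed successfully, then copy the init script and the configuration file from server4 to server1: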

[root@server4 ~]# cd /mnt/

[root@server4 mnt]# ls

keepalived-2.0.6 keepalived-2.0.6.tar.gz

[root@server4 mnt]# cd /usr/local/keepalived/

[root@server4 keepalived]# ls

bin etc sbin share

[root@server4 keepalived]# cd etc/rc.d/init.d/

[root@server4 init.d]# ls

keepalived

[root@server4 init.d]# scp keepalived server1:/srv/salt/keepalived/files/

[root@server4 init.d]# cd /usr/local/keepalived/etc/keepalived/

[root@server4 keepalived]# ls

keepalived.conf samples

[root@server4 keepalived]# scp keepalived.conf server1:/srv/salt/keepalived/files/

2. Create the symlinks

[root@server1 keepalived]# vim install.sls ## append the following to create the symlinks

/etc/keepalived:
  file.directory:
    - mode: 755

/etc/sysconfig/keepalived:
  file.symlink:
    - target: /usr/local/keepalived/etc/sysconfig/keepalived

/sbin/keepalived:
  file.symlink:
    - target: /usr/local/keepalived/sbin/keepalived

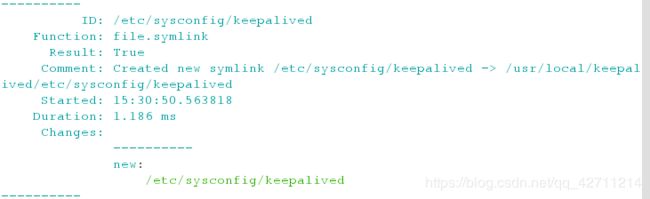

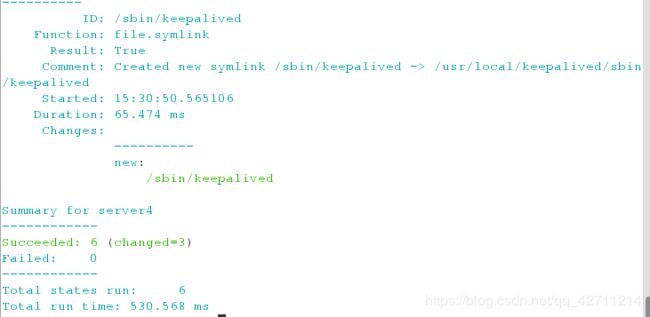

[root@server1 keepalived]# salt server4 state.sls keepalived.install # push

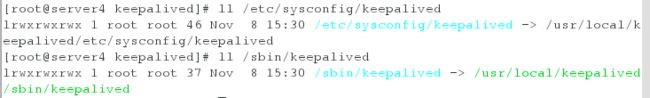
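Once the state has been applied, the two symlinks can also be checked with a small script instead of by eye. This is a minimal sketch meant to be run on server4; `check_link` is a hypothetical helper, and the paths are the ones declared in install.sls.

```shell
#!/bin/sh
# Hypothetical helper: succeed only if $1 is a symlink whose target is $2.
check_link() {
    [ -L "$1" ] && [ "$(readlink "$1")" = "$2" ]
}

# Link/target pairs as declared in install.sls.
for pair in \
    "/etc/sysconfig/keepalived /usr/local/keepalived/etc/sysconfig/keepalived" \
    "/sbin/keepalived /usr/local/keepalived/sbin/keepalived"
do
    # Split the pair into $1 (link) and $2 (expected target).
    set -- $pair
    if check_link "$1" "$2"; then
        echo "ok: $1 -> $2"
    else
        echo "missing or wrong target: $1"
    fi
done
```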

Verify on server4:

[root@server4 keepalived]# ll /etc/sysconfig/keepalived

[root@server4 keepalived]# ll /sbin/keepalived

3. Edit the keepalived configuration file

[root@server1 keepalived]# cd /srv/salt/keepalived/files/

[root@server1 files]# vim keepalived.conf ## delete everything after line 31, then edit the VIP and related settings

! Configuration File for keepalived

global_defs {
    notification_email {
        root@localhost
    }
    notification_email_from keepalived@localhost
    smtp_server 127.0.0.1
    smtp_connect_timeout 30
    router_id LVS_DEVEL
    vrrp_skip_check_adv_addr
    #vrrp_strict
    vrrp_garp_interval 0
    vrrp_gna_interval 0
}

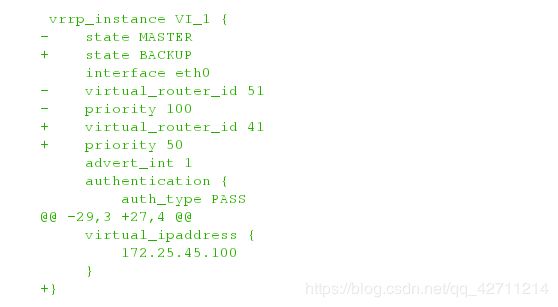

vrrp_instance VI_1 {
    state {{ STATE }}
    interface eth0
    virtual_router_id {{vrid}}
    advert_int 1
    priority {{priority}}
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        172.25.45.100
    }
}

4. Service state file (Jinja template)

[root@server1 files]# cd /srv/salt/keepalived/

[root@server1 keepalived]# ls

files install.sls

[root@server1 keepalived]# vim service.sls

include:
  - keepalived.install

/etc/keepalived/keepalived.conf:
  file.managed:
    - source: salt://keepalived/files/keepalived.conf
    - template: jinja
    - context:
        STATE: {{ pillar['state'] }}
        vrid: {{ pillar['vrid'] }}
        priority: {{ pillar['priority'] }}

kp-service:
  file.managed:
    - name: /etc/init.d/keepalived
    - source: salt://keepalived/files/keepalived
    - mode: 755
  service.running:
    - name: keepalived
    - reload: True
    - watch:
      - file: /etc/keepalived/keepalived.conf

5. Define the pillar variables

[root@server1 ~]# cd /srv/pillar/

[root@server1 pillar]# mkdir keepalived

[root@server1 pillar]# cd keepalived/

[root@server1 keepalived]# vim install.sls

{% if grains['fqdn'] == 'server1' %}

state: MASTER

vrid: 45

priority: 100

{% elif grains['fqdn'] == 'server4' %}

state: BACKUP

vrid: 45

priority: 50

{% endif %}

[root@server1 keepalived]# cd /srv/pillar/

[root@server1 pillar]# ls

keepalived top.sls web

[root@server1 pillar]# cd web/

[root@server1 web]# vim install.sls ## the pillar file from the earlier load-balancing setup

{% if grains['fqdn'] == 'server2' %}

webserver: apache

bind: 172.25.45.2

port: 80

{% elif grains['fqdn'] == 'server3' %}

webserver: nginx

{% endif %}

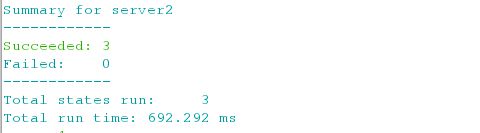

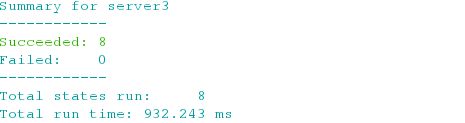

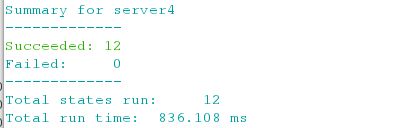
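To make the templating concrete, the sketch below imitates in plain shell what the Jinja renderer does to the three placeholders in keepalived.conf, using the keepalived pillar values defined above. `render_vrrp` is a hypothetical helper for illustration only. The real rendering is done by Salt on the minion when `file.managed` runs with `template: jinja`, and the authentication and virtual_ipaddress blocks are omitted here for brevity.

```shell
#!/bin/sh
# Hypothetical helper: substitute the three template placeholders the way
# the context in service.sls would. Illustration only, not Salt code.
# Arguments: $1 = STATE, $2 = vrid, $3 = priority
render_vrrp() {
    sed -e "s/{{ *STATE *}}/$1/" \
        -e "s/{{ *vrid *}}/$2/" \
        -e "s/{{ *priority *}}/$3/" <<'EOF'
vrrp_instance VI_1 {
    state {{ STATE }}
    interface eth0
    virtual_router_id {{vrid}}
    advert_int 1
    priority {{priority}}
}
EOF
}

# Values from the pillar file: server1 is MASTER, server4 is BACKUP.
echo "# server1"
render_vrrp MASTER 45 100
echo "# server4"
render_vrrp BACKUP 45 50
```

Both nodes share the same `virtual_router_id`, so they belong to the same VRRP group; only the state and priority differ.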

[root@server1 files]# salt server4 state.sls keepalived.service # push

6. Edit the pillar top file (base)

[root@server1 web]# cd /srv/pillar/

[root@server1 pillar]# vim top.sls

base:
  'server2':
    - web.install
  'server3':
    - web.install
  'server1':
    - keepalived.install
  'server4':
    - keepalived.install

7. Install mailx and edit the highstate top file

[root@server1 salt]# yum install -y mailx

[root@server1 pillar]# cd /srv/salt/

[root@server1 salt]# ls

apache _grains haproxy keepalived _modules nginx pkgs top.sls users

[root@server1 salt]# vim top.sls

base:

'server1':

- haproxy.install

- keepalived.service

'server4':

- haproxy.install

- keepalived.service

'server2':

- apache.install

'server3':

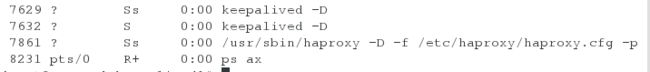

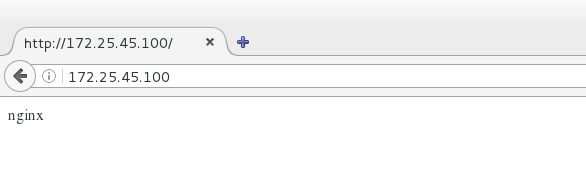

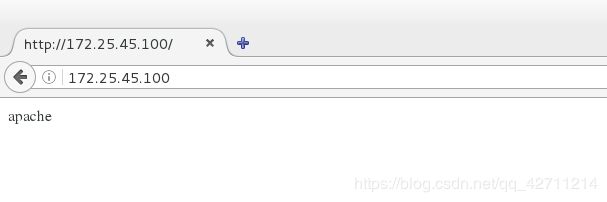

- nginx.service测试:

[root@server1 salt]# salt '*' state.highstate

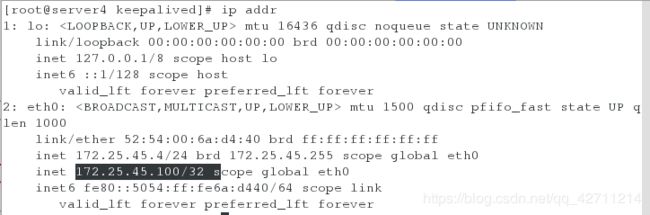

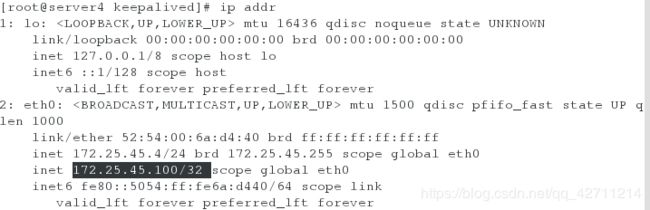

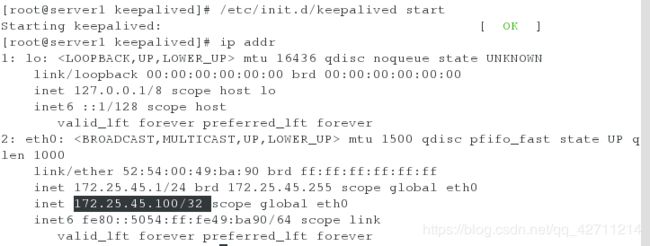

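[root@server1 salt]# ip addr

1) The highstate push succeeded

On server4:

When keepalived is stopped on server1, load balancing is unaffected: the VIP moves to server4, which is the high availability this setup is meant to provide.

Once the service is started again on server1, the VIP moves back to server1, because server1 has the higher priority (100 versus 50).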
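The failover behaviour described above can be sketched as a simple rule: among the nodes whose keepalived is running, the one with the highest priority holds the VIP. The shell function below models only that outcome. `vip_holder` is a hypothetical helper, it does not implement the VRRP protocol itself, and it assumes keepalived's default preemption is left enabled, as in the configuration shown earlier.

```shell
#!/bin/sh
# Hypothetical model: each argument is "name:priority" for a node whose
# keepalived is currently running. Prints the node with the highest
# priority, i.e. the one expected to hold the VIP.
vip_holder() {
    printf '%s\n' "$@" | sort -t: -k2,2nr | head -n 1 | cut -d: -f1
}

vip_holder server1:100 server4:50   # both running
vip_holder server4:50               # keepalived stopped on server1
vip_holder server1:100 server4:50   # keepalived started again on server1
```

With the priorities from the pillar file, the three calls print server1, server4 and server1 in that order, matching what `ip addr` shows on the two nodes during the test.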