elasticsearch 6.3.2 支持jdbc连接es 破解版

- 进去elasticsearch安装目录 找到x-pack-core-6.3.2.jar

cd /data/server/es/elasticsearch-6.3.2/modules/x-pack/x-pack-core

- 解压jar包,然后找到下面的两个class文件,使用luyten反编译

org/elasticsearch/xpack/core/XPackBuild.class

org/elasticsearch/license/LicenseVerifier.class

LicenseVerifier 中有两个静态方法,这就是验证授权文件是否有效的方法,我们把它修改为全部返回true.

XPackBuild 中 返回两个变量用来判断jar包是否被修改 如下写死即可

- 将修改后的java类上传到服务器

进入上传java类的目录下执行以下命令

cp /data/server/es/elasticsearch-6.3.2/modules/x-pack/x-pack-core/x-pack-core-6.3.2.jar .

jar -xvf x-pack-core-6.3.2.jar

rm x-pack-core-6.3.2.jar

javac -cp "/data/server/elasticsearch-6.3.2/modules/x-pack/x-pack-core/x-pack-core-6.3.2.jar" LicenseVerifier.java

javac -cp "/data/server/elasticsearch-6.3.2/lib/elasticsearch-core-6.3.2.jar" XPackBuild.java

mv XPackBuild.class xpack/org/elasticsearch/xpack/core/

mv LicenseVerifier.class xpack/org/elasticsearch/license/

jar -cvf x-pack-core-6.3.2.jar ./*

cp x-pack-core-6.3.2.jar /data/server/es/elasticsearch-6.3.2/modules/x-pack/x-pack-core/

7.更新license:

去官网申请免费license,会发邮件给你进行下载;

将下载的文件重命名为license.json,并做如下修改:

"type":"platinum" #白金版

"expiry_date_in_millis":2524579200999 #截止日期 2050

- 或者在服务器上通过curl 上传

Curl -XPUT http://10.3.4.128:9200/_xpack/license?acknowledge=true -H "Content-Type: application/json" -d @license.json

![]()

- 查看license 是否生效

curl -XGET 'http://10.3.4.128:9200/_license'

注意:

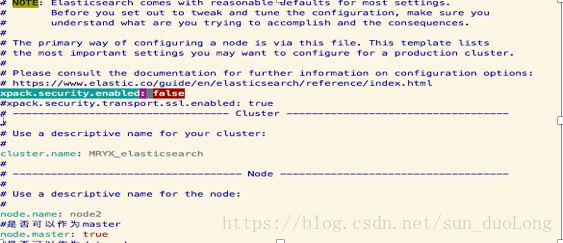

elasticsearch 6.3.2 中默认开启了安全验证,我们暂时修改配置文件以方便导入自己的文件

在elasticsearch.yml 中 添加一下配置

xpack.security.enabled: false

启动es 启动kibana

搞定~

- Below is an example that tests a JDBC connection to ES:

```java
package cn.missfresh;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataOutputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;
import java.util.Date;
import java.util.Properties;

/**
 * Created by Irving on 2018/8/21.
 */
public class JDBCReadEs {

    private static final String DRIVER = "org.elasticsearch.xpack.sql.jdbc.jdbc.JdbcDriver";
    private static final String ADDRESS = "jdbc:es://10.3.4.128:9200";

    public static void main(String[] args) throws Exception {
        // Expected arguments: <sql> <mode> <hdfs path>; mode 0 = write to HDFS, 1 = print to console
        if (args.length != 3 || !("0".equals(args[1]) || "1".equals(args[1]))) {
            System.out.println("Invalid arguments");
            System.out.println("arg 0: SQL statement");
            System.out.println("arg 1: 0 = write to the HDFS path, 1 = print to the console");
            System.out.println("arg 2: HDFS path");
            System.exit(-1);
        }

        System.setProperty("HADOOP_USER_NAME", "hadoop");
        // The original used the in-house cn.missfresh.Tools.TimeTools; java.util.Date keeps this self-contained
        System.out.println("Start time: " + new Date());
        long t1 = System.currentTimeMillis();

        // Load the ES SQL JDBC driver; fail fast instead of continuing with a null connection
        Class.forName(DRIVER);

        // try-with-resources closes the ResultSet, Statement and Connection even when the query fails
        try (Connection connection = DriverManager.getConnection(ADDRESS, new Properties());
             Statement statement = connection.createStatement();
             ResultSet rs = statement.executeQuery(args[0])) {

            int columnCount = rs.getMetaData().getColumnCount();
            System.out.println("Column count: " + columnCount);

            if ("0".equals(args[1])) {
                // Mode 0: write each row to HDFS as one tab-separated line
                try (FileSystem fs = getFileSystem();
                     FSDataOutputStream outputStream = fs.create(new Path(args[2]))) {
                    System.out.println("HDFS client ready, writing, please wait...");
                    while (rs.next()) {
                        outputStream.write((joinRow(rs, columnCount) + "\n").getBytes(StandardCharsets.UTF_8));
                    }
                }
                System.out.println("Write complete.");
            } else {
                // Mode 1: print each row to the console as one tab-separated line
                while (rs.next()) {
                    System.out.println(joinRow(rs, columnCount));
                }
            }
        }

        System.out.println("End time: " + new Date());
        long t2 = System.currentTimeMillis();
        System.out.println("Total run time: " + (t2 - t1) / 1000 + "s");
    }

    /**
     * Joins all columns of the current row with tabs.
     */
    private static String joinRow(ResultSet rs, int columnCount) throws SQLException {
        StringBuilder sb = new StringBuilder();
        for (int i = 1; i <= columnCount; i++) {
            if (i > 1) {
                sb.append('\t');
            }
            sb.append(rs.getString(i));
        }
        return sb.toString();
    }

    /**
     * Returns a client object for operating on HDFS.
     */
    public static FileSystem getFileSystem() throws Exception {
        Configuration config = new Configuration();
        config.set("dfs.support.append", "true");
        config.set("dfs.client.block.write.replace-datanode-on-failure.policy", "NEVER");
        config.set("dfs.client.block.write.replace-datanode-on-failure.enable", "true");
        config.set("fs.hdfs.impl", "org.apache.hadoop.hdfs.DistributedFileSystem");
        // Connect to the NameNode as user "hadoop"; errors propagate instead of returning null
        return FileSystem.get(new URI("hdfs://10.3.4.67:4007"), config, "hadoop");
    }
}
```
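In mode 0, each column is appended via `rs.getString(i)`, so a NULL column lands in the HDFS file as the literal text `null`. If that file is later read by Hive (an assumption; the original does not say what consumes it), Hive's default text-format NULL marker is `\N`. The following is a minimal, self-contained sketch of that substitution. The class `TsvRowSketch` and the method `toTsvLine` are hypothetical names, and the row is simulated with a `List` instead of a live `ResultSet`.

```java
import java.util.Arrays;
import java.util.List;

public class TsvRowSketch {

    // Assumption: the downstream reader is Hive, whose default text-format NULL marker is \N
    public static final String NULL_MARKER = "\\N";

    /** Joins column values with tabs, writing NULL_MARKER in place of null values. */
    public static String toTsvLine(List<String> columns) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < columns.size(); i++) {
            if (i > 0) {
                sb.append('\t');
            }
            String value = columns.get(i);
            sb.append(value == null ? NULL_MARKER : value);
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        // Simulates one ResultSet row whose second column is NULL
        System.out.println(toTsvLine(Arrays.asList("1001", null, "beijing")));
    }
}
```

In `JDBCReadEs`, the same null check would go inside the per-column loop. Values that themselves contain tabs or newlines would still break the layout and would need separate escaping.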

The pom file:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0"
         xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>cn.missfresh</groupId>
    <artifactId>RTG_JDBCReadEs</artifactId>
    <version>1.0-SNAPSHOT</version>

    <properties>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
        <scala.version>2.11.8</scala.version>
        <spark.version>2.2.1</spark.version>
        <hadoop.version>2.7.3</hadoop.version>
        <encoding>UTF-8</encoding>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
    </properties>

    <dependencies>
        <dependency>
            <groupId>org.scala-lang</groupId>
            <artifactId>scala-library</artifactId>
            <version>${scala.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-core_2.11</artifactId>
            <version>${spark.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-sql_2.11</artifactId>
            <version>${spark.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
        </dependency>
        <dependency>
            <groupId>com.alibaba</groupId>
            <artifactId>fastjson</artifactId>
            <version>1.2.39</version>
        </dependency>
        <dependency>
            <groupId>mysql</groupId>
            <artifactId>mysql-connector-java</artifactId>
            <version>5.1.38</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-streaming_2.11</artifactId>
            <version>${spark.version}</version>
        </dependency>
        <dependency>
            <groupId>org.apache.spark</groupId>
            <artifactId>spark-streaming-kafka-0-10_2.11</artifactId>
            <version>${spark.version}</version>
        </dependency>
        <dependency>
            <groupId>redis.clients</groupId>
            <artifactId>jedis</artifactId>
            <version>2.9.0</version>
        </dependency>
        <dependency>
            <groupId>org.mongodb.spark</groupId>
            <artifactId>mongo-spark-connector_2.11</artifactId>
            <version>2.2.0</version>
        </dependency>
        <dependency>
            <groupId>org.elasticsearch.plugin</groupId>
            <artifactId>jdbc</artifactId>
            <version>6.3.0</version>
        </dependency>
        <dependency>
            <groupId>mryx.elasticsearch</groupId>
            <artifactId>es-shaded-6.3.2</artifactId>
            <version>2.0-SNAPSHOT</version>
        </dependency>
        <dependency>
            <groupId>org.apache.directory.studio</groupId>
            <artifactId>org.apache.logging.log4j</artifactId>
            <version>1.2.16</version>
        </dependency>
        <dependency>
            <groupId>org.apache.logging.log4j</groupId>
            <artifactId>log4j-core</artifactId>
            <version>2.10.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.logging.log4j</groupId>
            <artifactId>log4j-api</artifactId>
            <version>2.10.0</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hive</groupId>
            <artifactId>hive-jdbc</artifactId>
            <version>1.1.0</version>
        </dependency>
    </dependencies>

    <repositories>
        <repository>
            <id>elastic.co</id>
            <url>https://artifacts.elastic.co/maven</url>
        </repository>
    </repositories>

    <build>
        <plugins>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <configuration>
                    <source>1.7</source>
                    <target>1.7</target>
                    <encoding>${project.build.sourceEncoding}</encoding>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-jar-plugin</artifactId>
                <configuration>
                    <classesDirectory>target/classes/</classesDirectory>
                    <archive>
                        <manifest>
                            <mainClass>com.missfresh.data.main.StartProvider</mainClass>
                            <useUniqueVersions>false</useUniqueVersions>
                            <addClasspath>true</addClasspath>
                            <classpathPrefix>lib/</classpathPrefix>
                        </manifest>
                        <manifestEntries>
                            <Class-Path>.</Class-Path>
                        </manifestEntries>
                    </archive>
                </configuration>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-dependency-plugin</artifactId>
                <executions>
                    <execution>
                        <id>copy-dependencies</id>
                        <phase>package</phase>
                        <goals>
                            <goal>copy-dependencies</goal>
                        </goals>
                        <configuration>
                            <type>jar</type>
                            <includeTypes>jar</includeTypes>
                            <outputDirectory>${project.build.directory}/lib</outputDirectory>
                        </configuration>
                    </execution>
                </executions>
            </plugin>
        </plugins>
    </build>
</project>
```

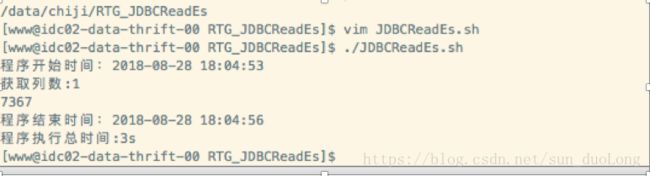

Test script:

```bash
#!/bin/bash
source /etc/profile

# Arguments: <sql> <mode: 0 = write to HDFS, 1 = print to console> <hdfs path>
java -cp \
/data/chiji/RTG_JDBCReadEs/RTG_JDBCReadEs-1.0-SNAPSHOT.jar:\
/data/chiji/RTG_JDBCReadEs/lib/elasticsearch-x-content-6.3.0.jar:\
/data/chiji/RTG_JDBCReadEs/lib/elasticsearch-6.3.0.jar:\
/data/chiji/RTG_JDBCReadEs/lib/elasticsearch-core-6.3.0.jar:\
/data/chiji/RTG_JDBCReadEs/lib/joda-time-2.9.9.jar:\
/data/chiji/RTG_JDBCReadEs/lib/lucene-core-7.3.1.jar:\
/data/chiji/RTG_JDBCReadEs/lib/jackson-core-2.8.10.jar:\
/data/chiji/RTG_JDBCReadEs/lib/org.apache.logging.log4j-1.2.16.jar:\
/data/chiji/RTG_JDBCReadEs/lib/log4j-core-2.10.0.jar:\
/data/chiji/RTG_JDBCReadEs/lib/log4j-api-2.10.0.jar \
cn.missfresh.JDBCReadEs \
"select count(*) from dw_test10" 1 /user/test08
```

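Note:

Part of the setup above relies on a shaded jar I built myself (`es-shaded-6.3.2` in the pom). You can add the dependencies yourself, or send me a private message if you need it.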