[Machine Learning Illustrated with Examples] Ensemble Learning: The AdaBoost Algorithm

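Three humble cobblers put together are a match for Zhuge Liang (a Chinese proverb: many ordinary minds combined can rival one master strategist).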

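Ensemble learning accomplishes a learning task by building and combining multiple learners. It first generates a set of individual learners and then combines them with some strategy.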

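The individual learners are usually produced from the training data by an existing learning algorithm, such as a decision tree or a neural network. As for the combination strategy, regression problems typically use (weighted) averaging, and classification problems typically use (weighted) voting. When training data is plentiful, a more powerful strategy is combination by learning, i.e., using another learner to combine the individual ones; the typical representative is Stacking.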
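For binary labels in $\{-1,+1\}$, weighted voting amounts to taking the sign of a weighted sum of the individual predictions, which is the same form as the AdaBoost model (1) below. A minimal sketch; `weighted_vote` is a hypothetical helper, and breaking ties toward $+1$ is an assumption of this sketch.

```python
import numpy as np


def weighted_vote(predictions, weights):
    """Combine binary predictions in {-1, +1} by weighted voting.

    predictions: shape (n_learners, n_samples)
    weights: shape (n_learners,), the vote weight of each learner
    """
    predictions = np.asarray(predictions, dtype=float)
    weights = np.asarray(weights, dtype=float)
    score = weights @ predictions  # weighted sum of votes for each sample
    return np.where(score >= 0, 1, -1)  # sign of the score; ties go to +1


# 3 learners x 2 samples (hypothetical predictions)
preds = [[1, -1],
         [-1, -1],
         [-1, 1]]
print(weighted_vote(preds, [0.9, 0.3, 0.3]))  # one strong learner outvotes two weak ones on sample 0
print(weighted_vote(preds, [1, 1, 1]))  # equal weights: plain majority voting
```

With equal weights this reduces to majority voting; the weighted version lets a more reliable learner overrule several weaker ones.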

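Depending on how the individual learners are generated, current ensemble methods fall roughly into two categories: sequential methods and parallel methods. In sequential methods, the individual learners depend strongly on one another and must be generated one after another; the representative is Boosting. In parallel methods, the individual learners have no strong dependence and can be generated simultaneously; the representatives are Bagging and Random Forest.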

This article introduces AdaBoost, the most classic member of the Boosting family.

AdaBoost

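AdaBoost can be derived in several ways. The most accessible derivation treats it as an additive model with the exponential loss function, optimized by the forward stagewise algorithm, as follows: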

Model and Loss Function

Suppose we are given a binary classification training set $\mathcal D=\{(\mathrm x_i,y_i)\mid i=1,2,\cdots,N\}$ with labels $y_i\in\{-1,+1\}$, and $K$ individual learners $f_k(\mathrm x)\in\{-1,+1\},\ k=1,2,\cdots,K$. The model is then:

$$f(\mathrm x)=\sum_{k=1}^{K}\alpha_kf_k(\mathrm x)\tag{1}$$

Here we use the exponential loss function:

$$E=\sum_{i=1}^{N}E_i=\sum_{i=1}^{N}\exp(-y_if(\mathrm x_i))\tag{2}$$
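One reason the exponential loss is a sensible choice: whenever $\mathrm{sign}(f(\mathrm x_i))\neq y_i$ we have $y_if(\mathrm x_i)\le 0$, hence $\exp(-y_if(\mathrm x_i))\ge 1$, so (2) upper-bounds the number of misclassified training samples. A short numerical illustration; the scores are hypothetical, and counting $f(\mathrm x)=0$ as a prediction of $+1$ is an assumption of this sketch.

```python
import numpy as np


def exp_loss(y, f):
    """Exponential loss (2): sum_i exp(-y_i * f(x_i))."""
    return float(np.sum(np.exp(-np.asarray(y) * np.asarray(f))))


def zero_one_loss(y, f):
    """Number of samples whose predicted sign disagrees with the label (f = 0 counts as +1)."""
    y, f = np.asarray(y), np.asarray(f)
    return int(np.sum(np.where(f >= 0, 1, -1) != y))


y = np.array([1, 1, -1, -1])
f = np.array([2.0, -0.5, -1.0, 0.3])  # hypothetical ensemble scores f(x_i)
print(exp_loss(y, f), zero_one_loss(y, f))  # the first number is never below the second
```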

Our goal is to choose the parameters $\alpha_k$ and $f_k(\mathrm x)$ that minimize this loss, i.e.

$$\min_{\alpha_k,f_k(\mathrm x)}\quad\sum_{i=1}^{N}\exp(-y_if(\mathrm x_i))\tag{3}$$

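In general, problem (3) is hard to solve directly. Instead, we can use the forward stagewise algorithm, which learns the individual learners $f_k(\mathrm x)$ one at a time, as follows: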

In the $t$-th iteration, assume that $\alpha_k$ and $f_k(\mathrm x)$ for $k=1,2,\cdots,t-1$ have already been learned. By (1), we then have

$$f(\mathrm x)=\sum_{k=1}^{t-1}\alpha_kf_k(\mathrm x)+\alpha_tf_t(\mathrm x)\tag{4}$$

By (2), the loss function at this point is:

$$\begin{aligned} E&=\sum_{i=1}^{N}\exp(-y_if(\mathrm x_i))=\sum_{i=1}^{N}\exp\left(-y_i\left[\sum_{k=1}^{t-1}\alpha_kf_k(\mathrm x_i)+\alpha_tf_t(\mathrm x_i)\right]\right)\\ &=\sum_{i=1}^{N}\exp\left(-y_i\sum_{k=1}^{t-1}\alpha_kf_k(\mathrm x_i)\right)\exp(-y_i\alpha_tf_t(\mathrm x_i))=\sum_{i=1}^{N}w_{ti}\exp(-y_i\alpha_tf_t(\mathrm x_i)) \end{aligned}\tag{5}$$

where $w_{ti}=\exp\left(-y_i\sum_{k=1}^{t-1}\alpha_kf_k(\mathrm x_i)\right)$.

Note that in (5) the weights $w_{ti}$ are fully determined by the first $t-1$ iterations. Hence, to minimize the current loss, we differentiate with respect to $\alpha_t$:

$$\frac{\partial E}{\partial\alpha_t}=\sum_{i=1}^{N}\frac{\partial E_i}{\partial\alpha_t}\tag{6}$$

where, since $y_if_t(\mathrm x_i)=1$ for a correctly classified sample and $-1$ otherwise,

$$\frac{\partial E_i}{\partial\alpha_t}= \begin{cases} -w_{ti}\exp(-\alpha_t), & \text{if } f_t(\mathrm x_i)=y_i\\ w_{ti}\exp(\alpha_t), & \text{if } f_t(\mathrm x_i)\neq y_i \end{cases}\tag{7}$$

Setting (6) equal to 0 gives:

$$\alpha_t=\frac{1}{2}\ln\frac{1-e_t}{e_t}\tag{8}$$

where the weighted classification error rate $e_t$ is:

$$e_t=\frac{\sum_{i=1}^{N}w_{ti}\mathbb I(f_t(\mathrm x_i)\neq y_i)}{\sum_{i=1}^{N}w_{ti}}\tag{9}$$
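The step from (6) to (8) is short enough to spell out. A minimal derivation using only (5) and (9): because $y_if_t(\mathrm x_i)=\pm1$, the loss splits into correctly and incorrectly classified samples,

$$E=e^{-\alpha_t}\sum_{i:\,f_t(\mathrm x_i)=y_i}w_{ti}+e^{\alpha_t}\sum_{i:\,f_t(\mathrm x_i)\neq y_i}w_{ti},$$

$$\frac{\partial E}{\partial\alpha_t}=0\;\Longrightarrow\;e^{2\alpha_t}=\frac{\sum_{i:\,f_t(\mathrm x_i)=y_i}w_{ti}}{\sum_{i:\,f_t(\mathrm x_i)\neq y_i}w_{ti}}=\frac{1-e_t}{e_t}\;\Longrightarrow\;\alpha_t=\frac{1}{2}\ln\frac{1-e_t}{e_t},$$

where the middle ratio becomes $(1-e_t)/e_t$ after dividing numerator and denominator by $\sum_{i=1}^{N}w_{ti}$. Since $E$ is a nonnegative combination of the convex functions $e^{-\alpha_t}$ and $e^{\alpha_t}$, this stationary point is the minimizer. Note also that $\alpha_t>0$ exactly when $e_t<1/2$, i.e., when $f_t$ does better than random guessing.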

By (1) (compare (4)), the partial sum after $t$ iterations satisfies:

$$\sum_{k=1}^{t}\alpha_kf_k(\mathrm x)=\sum_{k=1}^{t-1}\alpha_kf_k(\mathrm x)+\alpha_tf_t(\mathrm x)\tag{10}$$

From the definition of $w_{ti}$ in (5), it follows that

$$\begin{aligned} w_{t+1,i}&=\exp\left[-y_i\sum_{k=1}^{t}\alpha_kf_k(\mathrm x_i)\right]\\&=\exp\left[-y_i\sum_{k=1}^{t-1}\alpha_kf_k(\mathrm x_i)\right]\exp[-y_i\alpha_tf_t(\mathrm x_i)]\\&=w_{ti}\exp[-y_i\alpha_tf_t(\mathrm x_i)] \end{aligned}\tag{11}$$
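The recursion (11) can be checked numerically against the closed-form definition of $w_{t+1,i}$. A small sketch with randomly generated (hypothetical) labels, learner outputs and coefficients: starting from $w_{1,i}=1$, the value the empty sum in (5) gives, the product of the per-round factors should equal $\exp(-y_i\sum_k\alpha_kf_k(\mathrm x_i))$. Starting from $1/N$ instead, as in the example below, only rescales all weights by the same constant.

```python
import numpy as np

rng = np.random.default_rng(0)
N, T = 5, 4
y = rng.choice([-1, 1], size=N)  # labels y_i
F = rng.choice([-1, 1], size=(T, N))  # F[k, i] = f_k(x_i), hypothetical learner outputs
alpha = rng.uniform(0.1, 1.0, size=T)  # hypothetical coefficients alpha_k

w = np.ones(N)  # w_{1,i} = exp(0) = 1
for t in range(T):
    w = w * np.exp(-y * alpha[t] * F[t])  # recursion (11)

closed_form = np.exp(-y * (alpha @ F))  # exp(-y_i * sum_k alpha_k f_k(x_i))
print(np.allclose(w, closed_form))  # True
```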

We then normalize $w_{t+1,i},\ i=1,2,\cdots,N$. Because (9) is unchanged when all weights are rescaled by the same constant, normalization does not affect $e_t$ or $\alpha_t$; it only keeps the weights on a comparable scale:

$$\bar w_{t+1,i}=\frac{w_{t+1,i}}{\sum_{j=1}^{N}w_{t+1,j}}=\frac{w_{ti}\exp(-y_i\alpha_tf_t(\mathrm x_i))}{\sum_{j=1}^{N}w_{tj}\exp(-y_j\alpha_tf_t(\mathrm x_j))}\tag{12}$$

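From (9), $w_{ti}$ can be interpreted as the error weight of sample $\mathrm x_i$ in the $t$-th iteration: the larger $w_{ti}$, the more costly it is to misclassify $\mathrm x_i$, and so the more strongly the learner is pushed to classify it correctly.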
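By (11), each iteration multiplies the weight of a misclassified sample by $e^{\alpha_t}$ and that of a correctly classified sample by $e^{-\alpha_t}$. A known consequence of choosing $\alpha_t$ by (8) is that, under the normalized weights (12), $f_t$ itself has weighted error exactly $1/2$, so the next iteration is pushed toward a different learner. The sketch below checks this on hypothetical toy data; `weighted_error` and `update_weights` are illustrative helper names, not part of the original code.

```python
import numpy as np


def weighted_error(w, y, pred):
    """Weighted error rate (9)."""
    return w[pred != y].sum() / w.sum()


def update_weights(w, y, pred, alpha):
    """Normalized weight update (12)."""
    w_new = w * np.exp(-y * alpha * pred)
    return w_new / w_new.sum()


# Hypothetical toy data: 4 samples, the learner misclassifies only the third one.
y = np.array([1, -1, 1, 1])
pred = np.array([1, -1, -1, 1])
w = np.full(4, 0.25)

e = weighted_error(w, y, pred)  # 0.25
alpha = 0.5 * np.log((1 - e) / e)  # formula (8)
w_next = update_weights(w, y, pred, alpha)
print(np.round(w_next, 4))  # the misclassified sample now carries half of the total weight
print(weighted_error(w_next, y, pred))  # 0.5 up to floating-point rounding
```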

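This completes the quantities updated in the $t$-th iteration: the individual learner $f_t(\mathrm x)$, its weight $\alpha_t$, and the weights $\bar w_{t+1,i}$ needed to compute the error rate (9) in the next iteration. Note that the individual learner $f_t(\mathrm x)$ can be any common algorithm, such as a decision tree or a neural network; the initial weights can be set to $1/N$, which treats all samples equally.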

An Example

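The table below shows a training set of 10 samples. Assume each individual classifier is simply a threshold rule on $x$: it outputs one label when $x<v$ and the opposite label when $x\ge v$, where the threshold $v$ is to be learned.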

| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| y | 1 | 1 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | -1 |

Iteration $t=1$:

We set the initial weights to

$$w_{1i}=0.1,\quad i=1,2,\cdots,10\tag{13}$$

Then, using (9) and the assumed classifier

$$f_1(\mathrm x)= \begin{cases} 1, & x<v\\ -1, & x\ge v \end{cases}\tag{14}$$

we exhaustively search $v\in\{-0.5,0.5,1.5,\cdots,9.5\}$ and find that $v=2.5$ minimizes the error rate (9), giving:

$$e_1=0.3\tag{15}$$
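A minimal brute-force sketch of this search, assuming stumps of the form (14) together with their mirror image (labels swapped, as in (26) later). `best_stump` is a hypothetical helper name. Note that $v=8.5$ attains the same error $0.3$ in this iteration; like the source code at the end of the article, this sketch keeps the first minimizer it finds.

```python
import numpy as np


def best_stump(x, y, w, thresholds):
    """Brute-force search for the stump minimizing the weighted error (9).

    s = +1 means "predict 1 if x < v else -1";
    s = -1 means the mirrored rule "predict -1 if x < v else 1".
    Returns (v, s, e) for the first minimizer found.
    """
    best = None
    for s in (1, -1):
        for v in thresholds:
            pred = np.where(x < v, s, -s)
            e = w[pred != y].sum() / w.sum()
            if best is None or e < best[2]:
                best = (v, s, e)
    return best


x = np.arange(10)
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1])
w = np.full(10, 0.1)  # initial weights (13)
thresholds = np.arange(-0.5, 10.0, 1.0)  # -0.5, 0.5, ..., 9.5
v, s, e = best_stump(x, y, w, thresholds)
print(float(v), s, round(float(e), 4))  # 2.5 1 0.3
```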

By (8) and (15), we compute

$$\alpha_1=\frac{1}{2}\ln\frac{1-0.3}{0.3}=0.4236\tag{16}$$
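Formula (8) is a one-liner; the sketch below evaluates it for all three iterations of this example. The inputs $3/14$ and $2/11$ are the exact values behind $e_2=0.2143$ and $e_3=0.1818$ (the normalized weights in (19) are exactly $1/14$ and $1/6$), and the printed values agree with (16), (22) and (28) to four decimals. `alpha_from_error` is a hypothetical helper name.

```python
import math


def alpha_from_error(e):
    """Coefficient of a basic classifier, formula (8)."""
    return 0.5 * math.log((1 - e) / e)


# Weighted errors of the three iterations: e_1 = 0.3, e_2 = 3/14, e_3 = 2/11
for e in (0.3, 3 / 14, 2 / 11):
    print(round(alpha_from_error(e), 4))  # 0.4236, then 0.6496, then 0.752
```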

This gives the model after iteration $t=1$:

$$f(\mathrm x)=\alpha_1f_1(\mathrm x)=0.4236f_1(\mathrm x)\tag{17}$$

The classifier corresponding to (17) is:

$$\hat y=\mathrm{sign}(f(\mathrm x))= \begin{cases} 1, & \alpha_1f_1(\mathrm x)\ge 0\\ -1, & \alpha_1f_1(\mathrm x)<0 \end{cases}\tag{18}$$

Applying (18) to the training set gives (misclassified samples in red):

| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| y | 1 | 1 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | -1 |
| $\hat y$ | 1 | 1 | 1 | -1 | -1 | -1 | $\color{red}{-1}$ | $\color{red}{-1}$ | $\color{red}{-1}$ | -1 |

Finally, compute the weights for the next iteration using (12) (here the sample with index $i$ has $x=i-1$):

$$\begin{aligned} &w_{2,1}=w_{2,2}=w_{2,3}=w_{2,4}=w_{2,5}=w_{2,6}=w_{2,10}=0.0714\\ &w_{2,7}=w_{2,8}=w_{2,9}=0.1667 \end{aligned}\tag{19}$$
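The numbers in (19) can be reproduced in a few vectorized lines. A minimal sketch assuming the stump found above ($v=2.5$, label $1$ for $x<v$); the printed weights should match (19) after rounding to four decimals.

```python
import numpy as np

x = np.arange(10)
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1])
w1 = np.full(10, 0.1)  # weights (13)
pred = np.where(x < 2.5, 1, -1)  # f_1 from (14) with v = 2.5
e1 = w1[pred != y].sum() / w1.sum()  # error rate (9)
alpha1 = 0.5 * np.log((1 - e1) / e1)  # coefficient (8)

w2 = w1 * np.exp(-y * alpha1 * pred)  # unnormalized update (11)
w2 /= w2.sum()  # normalization (12)
print(np.round(w2, 4))
```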

Iteration $t=2$:

Using (9) and the assumed second classifier

$$f_2(\mathrm x)= \begin{cases} 1, & x<v\\ -1, & x\ge v \end{cases}\tag{20}$$

we exhaustively search $v\in\{-0.5,0.5,1.5,\cdots,9.5\}$ and find that $v=8.5$ minimizes the error rate (9), giving:

$$e_2=0.2143\tag{21}$$

By (8) and (21), we compute:

$$\alpha_2=0.6496\tag{22}$$

This gives the model after iteration $t=2$:

$$f(\mathrm x)=\alpha_1f_1(\mathrm x)+\alpha_2f_2(\mathrm x)=0.4236f_1(\mathrm x)+0.6496f_2(\mathrm x)\tag{23}$$

The classifier corresponding to (23) is:

$$\hat y=\mathrm{sign}(f(\mathrm x))= \begin{cases} 1, & \alpha_1f_1(\mathrm x)+\alpha_2f_2(\mathrm x)\ge 0\\ -1, & \alpha_1f_1(\mathrm x)+\alpha_2f_2(\mathrm x)<0 \end{cases}\tag{24}$$

Applying (24) gives (misclassified samples in red):

| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| y | 1 | 1 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | -1 |
| $\hat y$ | 1 | 1 | 1 | $\color{red}{1}$ | $\color{red}{1}$ | $\color{red}{1}$ | 1 | 1 | 1 | -1 |

Finally, compute the weights for the next iteration using (12):

$$\begin{aligned} &w_{3,1}=w_{3,2}=w_{3,3}=w_{3,10}=0.0455\\ &w_{3,4}=w_{3,5}=w_{3,6}=0.1667\\ &w_{3,7}=w_{3,8}=w_{3,9}=0.1061 \end{aligned}\tag{25}$$

Iteration $t=3$:

Using (9) and the assumed third classifier (note that its labels are reversed)

$$f_3(\mathrm x)= \begin{cases} -1, & x<v\\ 1, & x\ge v \end{cases}\tag{26}$$

we exhaustively search $v\in\{-0.5,0.5,1.5,\cdots,9.5\}$ and find that $v=5.5$ minimizes the error rate (9), giving:

$$e_3=0.1818\tag{27}$$

By (8) and (27), we compute:

$$\alpha_3=0.7520\tag{28}$$

This gives the model after iteration $t=3$:

$$f(\mathrm x)=\alpha_1f_1(\mathrm x)+\alpha_2f_2(\mathrm x)+\alpha_3f_3(\mathrm x)=0.4236f_1(\mathrm x)+0.6496f_2(\mathrm x)+0.7520f_3(\mathrm x)\tag{29}$$

The classifier corresponding to (29) is:

$$\hat y=\mathrm{sign}(f(\mathrm x))= \begin{cases} 1, & \alpha_1f_1(\mathrm x)+\alpha_2f_2(\mathrm x)+\alpha_3f_3(\mathrm x)\ge 0\\ -1, & \alpha_1f_1(\mathrm x)+\alpha_2f_2(\mathrm x)+\alpha_3f_3(\mathrm x)<0 \end{cases}\tag{30}$$

Applying (30) gives:

| x | 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 |
|---|---|---|---|---|---|---|---|---|---|---|
| y | 1 | 1 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | -1 |
| $\hat y$ | 1 | 1 | 1 | -1 | -1 | -1 | 1 | 1 | 1 | -1 |

Finally, compute the weights for the next iteration using (12):

$$\begin{aligned} &w_{4,1}=w_{4,2}=w_{4,3}=w_{4,10}=0.125\\ &w_{4,4}=w_{4,5}=w_{4,6}=0.102\\ &w_{4,7}=w_{4,8}=w_{4,9}=0.065 \end{aligned}\tag{31}$$

The table shows that classifier (30) already classifies all 10 samples correctly, so (31) is not actually needed; the final classifier is (30).
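A quick check of this claim, evaluating the final ensemble (29) with the three learned stumps and coefficients. The smallest absolute score is $0.3212$ (for $x=0,1,2$ and $x=9$), so no sample lies on the decision boundary.

```python
import numpy as np

x = np.arange(10)
y = np.array([1, 1, 1, -1, -1, -1, 1, 1, 1, -1])

f1 = np.where(x < 2.5, 1, -1)  # (14) with v = 2.5
f2 = np.where(x < 8.5, 1, -1)  # (20) with v = 8.5
f3 = np.where(x < 5.5, -1, 1)  # (26) with v = 5.5
score = 0.4236 * f1 + 0.6496 * f2 + 0.7520 * f3  # ensemble (29)
y_hat = np.where(score >= 0, 1, -1)  # classifier (30)

print(np.round(score, 4))
print(np.array_equal(y_hat, y))  # True
```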

Implementation

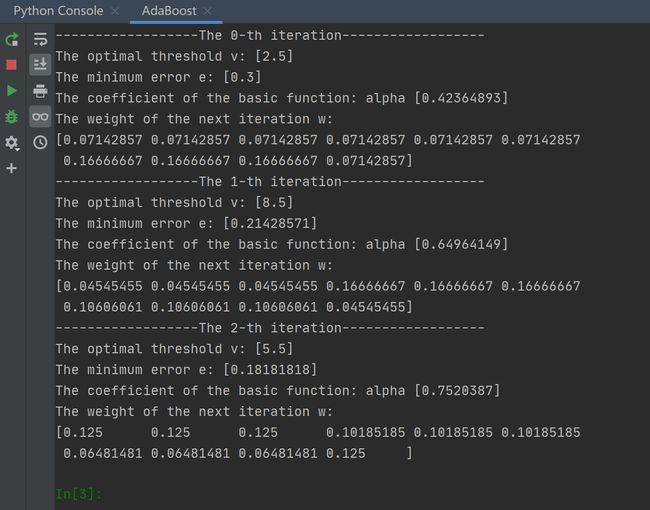

We implemented the algorithm for the example above in Python; its output matches the hand calculation, as shown in Figure 1:


The corresponding Python source code is as follows:

```python
# -*- encoding: utf-8 -*-
"""
@File   : AdaBoost.py
@Time   : 2020/6/17 22:02
@Author : tengweitw
"""

import numpy as np

train_x = range(10)
train_y = [1, 1, 1, -1, -1, -1, 1, 1, 1, -1]

N = len(train_x)  # number of training samples
K = 3  # number of basic classifiers

w = np.zeros((K + 1, N))  # w[k]: normalized sample weights used in iteration k
alpha = np.zeros(K)  # coefficient alpha_k of each basic classifier
v = np.zeros(K)  # threshold v_k of each basic classifier

# candidate thresholds for the brute-force search: -0.5, 0.5, ..., 9.5
values = np.linspace(-0.5, 9.5, 11)


# exhaustive search for the optimal threshold value
def Choose_values(values, w, train_x, train_y, N):
    # Note that there are two cases: y=1 if x<v (case 0), or y=-1 if x<v (case 1)
    def weighted_errors(label_below):
        errors = []
        for v in values:
            err = 0.0
            for i in range(N):
                pred = label_below if train_x[i] < v else -label_below
                if pred != train_y[i]:
                    # weights are normalized, so this sum is the error rate (9)
                    err += w[i]
            errors.append(err)
        return errors

    error1 = weighted_errors(1)  # case 0: y=1 if x<v, else y=-1
    error2 = weighted_errors(-1)  # case 1: y=-1 if x<v, else y=1
    err_min1, err_min2 = min(error1), min(error2)
    if err_min1 < err_min2:
        return error1.index(err_min1), err_min1, 0  # (index, error, flag)
    return error2.index(err_min2), err_min2, 1


for k in range(K):
    print('------------------The %d-th iteration------------------' % k)
    if k == 0:
        w[k] = 1.0 / N  # initialization: equal weights
    v_index, err, flag = Choose_values(values, w[k], train_x, train_y, N)
    v[k] = values[v_index]
    alpha[k] = np.log((1 - err) / err) / 2.0  # formula (8)
    print('The optimal threshold v:', v[k])
    print('The minimum error e:', err)
    print('The coefficient of the basic function: alpha', alpha[k])
    for i in range(N):
        f_t_x = 1 if train_x[i] < v[k] else -1
        if flag == 1:  # case 1: the stump's labels are flipped
            f_t_x = -f_t_x
        w[k + 1][i] = w[k][i] * np.exp(-train_y[i] * alpha[k] * f_t_x)  # formula (11)
    w[k + 1] /= w[k + 1].sum()  # normalization, formula (12)
    print('The weight of the next iteration w:')
    print(w[k + 1])
```