Hadoop Project Exercise

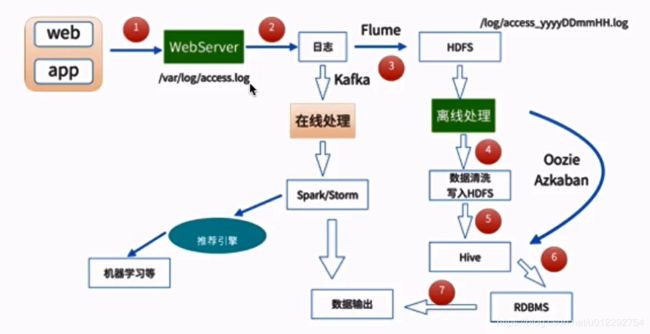

1 Data Processing

2 Counting Website Visits per Browser

2.1 Parsing Tool

User-agent strings are parsed with the open-source UserAgentParser library:

https://github.com/LeeKemp/UserAgentParser

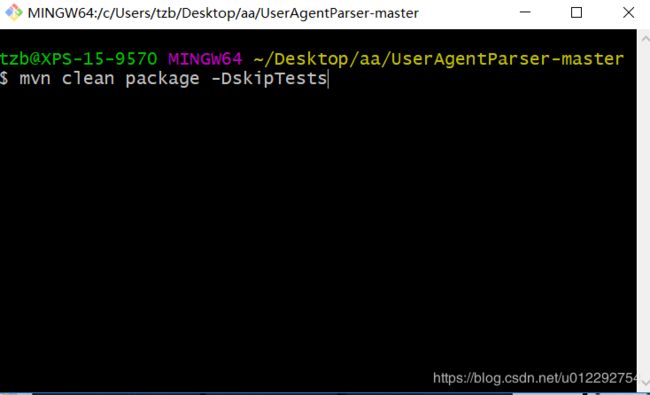

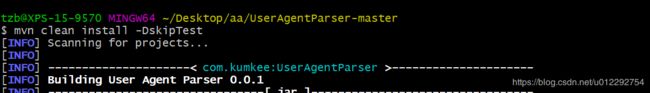

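After downloading the source, build it with Maven.

Then install it into the local Maven repository (for example with `mvn clean install`) so that other projects can depend on it: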

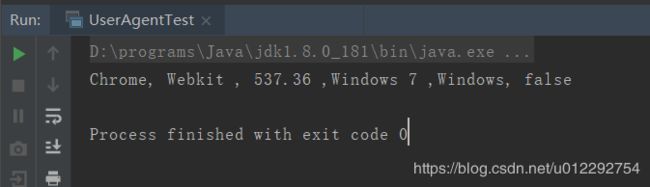

2.1.1 Testing the Parser

Add the dependency to the pom:

```xml
<dependency>
    <groupId>com.kumkee</groupId>
    <artifactId>UserAgentParser</artifactId>
    <version>0.0.1</version>
</dependency>
```

```java
package com.bzt.cn.hdfs.useragent;

import com.kumkee.userAgent.UserAgent;
import com.kumkee.userAgent.UserAgentParser;

public class UserAgentTest {

    public static void main(String[] args) {
        String source = "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36";
        UserAgentParser userAgentParser = new UserAgentParser();
        UserAgent agent = userAgentParser.parse(source);

        String browser = agent.getBrowser();
        String engine = agent.getEngine();
        String engineVersion = agent.getEngineVersion();
        String os = agent.getOs();
        String platform = agent.getPlatform();
        boolean isMobile = agent.isMobile();

        System.out.println(browser + ", " + engine + " , " + engineVersion + " ,"
                + os + " ," + platform + ", " + isMobile);
    }
}
```

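2.2 Extracting a Sample of the Data

Take the first 100 lines of the full log as a small sample for local testing: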

```shell
[hadoop@node1 ~]$ ll
total 2752
-rw-r--r--.  1 hadoop hadoop 2769741 Oct 17  2017 10000_access.log
-rw-r--r--.  1 hadoop hadoop      58 Oct 30 10:13 animal.txt
drwxrwxr-x. 11 hadoop hadoop     236 Oct 29 10:49 apps
drwxrwxr-x.  4 hadoop hadoop      30 Oct 25 21:59 elasticsearchData
drwxrwxr-x.  4 hadoop hadoop      28 Sep 14 19:02 hbase
drwxrwxr-x.  4 hadoop hadoop      32 Sep 14 14:44 hdfsdir
drwxrwxr-x.  3 hadoop hadoop      17 Oct 29 11:00 hdp2.6-cdh5.7-data
-rw-r--r--.  1 hadoop hadoop      46 Oct 29 22:33 hello.txt
drwxrwxrwx.  3 hadoop hadoop      18 Oct 24 21:45 kafkaData
-rw-rw-r--.  1 hadoop hadoop       0 Oct 24 21:58 log-cleaner.log
drwxrwxr-x.  5 hadoop hadoop     133 Oct 23 14:40 metastore_db
-rw-r--r--.  1 hadoop hadoop    6709 Oct 30 10:30 part.jar
drwxrwxr-x.  3 hadoop hadoop      63 Oct 24 21:21 zookeeperData
-rw-rw-r--.  1 hadoop hadoop   26108 Oct 25 17:54 zookeeper.out
[hadoop@node1 ~]$ head -n 100 10000_access.log > 100_access.log
[hadoop@node1 ~]$ wc -l 100_access.log
100 100_access.log
[hadoop@node1 ~]$
```
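The local test reads the sample from a Windows path, where `head` may not be available. On such a machine the same cut can be made in plain Java. The following is a minimal sketch. The class name `HeadSample` is hypothetical, and the file names are the ones from the session above. Reading and writing as ISO-8859-1 is a deliberate assumption: that charset maps every byte to one char, so any non-UTF-8 bytes in the log pass through unchanged.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.Stream;

class HeadSample {

    /**
     * Copies the first n lines of src to dst (like `head -n n src > dst`) and
     * returns the number of lines written. ISO-8859-1 maps each byte to one char,
     * so the log's bytes survive the round trip; line endings are rewritten with
     * the platform line separator.
     */
    static int head(Path src, Path dst, int n) throws IOException {
        List<String> lines;
        // Files.lines is lazy, so limit(n) stops reading after n lines
        try (Stream<String> stream = Files.lines(src, StandardCharsets.ISO_8859_1)) {
            lines = stream.limit(n).collect(Collectors.toList());
        }
        Files.write(dst, lines, StandardCharsets.ISO_8859_1);
        return lines.size();
    }

    public static void main(String[] args) throws IOException {
        int written = head(Paths.get("10000_access.log"), Paths.get("100_access.log"), 100);
        System.out.println(written + " lines written");
    }
}
```

If the source file is shorter than `n` lines, the whole file is copied and the return value reports how many lines that was.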

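A sample record:

```
183.162.52.7 - - [10/Nov/2016:00:01:02 +0800] "POST /api3/getadv HTTP/1.1" 200 813 "www.imooc.com" "-" cid=0&timestamp=1478707261865&uid=2871142&marking=androidbanner&secrect=a6e8e14701ffe9f6063934780d9e2e6d&token=f51e97d1cb1a9caac669ea8acc162b96 "mukewang/5.0.0 (Android 5.1.1; Xiaomi Redmi 3 Build/LMY47V),Network 2G/3G" "-" 10.100.134.244:80 200 0.027 0.027
```

The user agent (`mukewang/5.0.0 (Android 5.1.1; Xiaomi Redmi 3 Build/LMY47V),Network 2G/3G`) is the fourth double-quoted field. It therefore begins right after the 7th `"` on the line.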

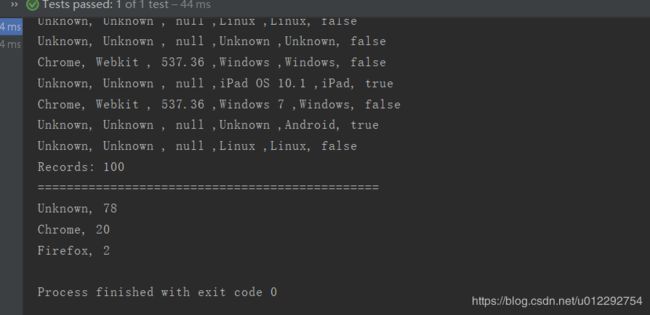
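The user agent is located by counting double quotes, so it helps to see that step on its own. The sketch below uses plain JDK Java, with no regex and no UserAgentParser. The names `UserAgentField`, `nthIndexOf` and `extract` are hypothetical. This version also cuts the field at the 8th quote, so only the user agent itself would be handed to a parser, without the trailing `"-"` and upstream address. A line with too few quotes yields an empty `Optional` instead of an exception.

```java
import java.util.Optional;

class UserAgentField {

    /** Index of the n-th (1-based) occurrence of ch in s, or -1 if there are fewer than n. */
    static int nthIndexOf(String s, char ch, int n) {
        int idx = -1;
        for (int k = 0; k < n; k++) {
            idx = s.indexOf(ch, idx + 1); // search just past the previous hit
            if (idx < 0) {
                return -1;
            }
        }
        return idx;
    }

    /** Text between the 7th and 8th double quote (the user-agent field), if both exist. */
    static Optional<String> extract(String line) {
        int start = nthIndexOf(line, '"', 7);
        int end = nthIndexOf(line, '"', 8);
        if (start < 0 || end < 0) {
            return Optional.empty();
        }
        return Optional.of(line.substring(start + 1, end));
    }

    public static void main(String[] args) {
        String line = "183.162.52.7 - - [10/Nov/2016:00:01:02 +0800] \"POST /api3/getadv HTTP/1.1\" 200 813 "
                + "\"www.imooc.com\" \"-\" cid=0&timestamp=1478707261865&uid=2871142&marking=androidbanner"
                + "&secrect=a6e8e14701ffe9f6063934780d9e2e6d&token=f51e97d1cb1a9caac669ea8acc162b96 "
                + "\"mukewang/5.0.0 (Android 5.1.1; Xiaomi Redmi 3 Build/LMY47V),Network 2G/3G\" \"-\" "
                + "10.100.134.244:80 200 0.027 0.027";
        System.out.println(extract(line).orElse("<no user agent>"));
    }
}
```

For the sample record, `main` prints `mukewang/5.0.0 (Android 5.1.1; Xiaomi Redmi 3 Build/LMY47V),Network 2G/3G`.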

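2.3 Local Test

Read the sample file line by line, parse the user agent of each record, and count the records per browser: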

```java
package com.bzt.cn.hdfs.useragent;

import com.kumkee.userAgent.UserAgent;
import com.kumkee.userAgent.UserAgentParser;
import org.apache.commons.lang.StringUtils;
import org.junit.Test;

import java.io.BufferedReader;
import java.io.FileInputStream;
import java.io.InputStreamReader;
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class UserAgentTest {

    @Test
    public void testReadFile() throws Exception {
        String path = "d://100_access.log";
        Map<String, Integer> browserMap = new HashMap<>();
        UserAgentParser userAgentParser = new UserAgentParser();
        int records = 0;

        // try-with-resources closes the file even if parsing throws
        try (BufferedReader reader = new BufferedReader(
                new InputStreamReader(new FileInputStream(path)))) {
            String line;
            while ((line = reader.readLine()) != null) { // read one line at a time
                records++;
                if (StringUtils.isBlank(line)) {
                    continue;
                }
                // the user agent starts right after the 7th double quote
                int quotePos = getCharacterPosition(line, "\"", 7);
                if (quotePos < 0) {
                    continue; // skip malformed lines with fewer than 7 quotes
                }
                String source = line.substring(quotePos + 1);

                UserAgent agent = userAgentParser.parse(source);
                String browser = agent.getBrowser();
                String engine = agent.getEngine();
                String engineVersion = agent.getEngineVersion();
                String os = agent.getOs();
                String platform = agent.getPlatform();
                boolean isMobile = agent.isMobile();

                // add 1 to this browser's count (starting at 1 for a new browser)
                browserMap.merge(browser, 1, Integer::sum);

                System.out.println(browser + ", " + engine + " , " + engineVersion + " ,"
                        + os + " ," + platform + ", " + isMobile);
            }
        }

        System.out.println("Records: " + records);
        System.out.println("===============================================");
        for (Map.Entry<String, Integer> entry : browserMap.entrySet()) {
            System.out.println(entry.getKey() + ", " + entry.getValue());
        }
    }

    /*
     * Tests the custom helper getCharacterPosition
     */
    @Test
    public void testGetCharacterPosition() {
        String value = "183.162.52.7 - - [10/Nov/2016:00:01:02 +0800] \"POST /api3/getadv HTTP/1.1\"";
        int index = getCharacterPosition(value, "\"", 2);
        System.out.println(index);
    }

    /*
     * Returns the position of the index-th (1-based) occurrence of operator in value,
     * or -1 if operator occurs fewer than index times.
     */
    private int getCharacterPosition(String value, String operator, int index) {
        // Pattern.quote matches operator literally instead of as a regex
        Matcher slashMatcher = Pattern.compile(Pattern.quote(operator)).matcher(value);
        int mIdx = 0;
        while (slashMatcher.find()) {
            mIdx++;
            if (mIdx == index) {
                return slashMatcher.start();
            }
        }
        return -1;
    }

    /**
     * Unit test for the parser on a single user-agent string
     */
    @Test
    public void testUserAgentParser() {
        String source = "Mozilla/5.0 (Windows NT 6.1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/45.0.2454.101 Safari/537.36";
        UserAgentParser userAgentParser = new UserAgentParser();
        UserAgent agent = userAgentParser.parse(source);

        String browser = agent.getBrowser();
        String engine = agent.getEngine();
        String engineVersion = agent.getEngineVersion();
        String os = agent.getOs();
        String platform = agent.getPlatform();
        boolean isMobile = agent.isMobile();

        System.out.println(browser + ", " + engine + " , " + engineVersion + " ,"
                + os + " ," + platform + ", " + isMobile);
    }
}
```
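The `HashMap` in the local test prints browsers in no particular order. Ordering the counts by frequency makes runs easier to compare. The following is a minimal JDK-only sketch. It assumes the browser names have already been collected from `agent.getBrowser()`. The class name `BrowserTally` and the placeholder `"unknown"` are hypothetical. The placeholder is needed because `Collectors.groupingBy` rejects null keys.

```java
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;
import java.util.stream.Collectors;

class BrowserTally {

    /**
     * Counts how often each browser name occurs and returns the counts ordered by
     * count (descending), ties broken by name (ascending). null names are counted
     * under the placeholder "unknown" because groupingBy does not accept null keys.
     */
    static LinkedHashMap<String, Long> tally(List<String> browsers) {
        Map<String, Long> counts = browsers.stream()
                .map(b -> b == null ? "unknown" : b)
                .collect(Collectors.groupingBy(Function.identity(), Collectors.counting()));

        // LinkedHashMap keeps the sorted insertion order when iterated
        return counts.entrySet().stream()
                .sorted(Map.Entry.<String, Long>comparingByValue().reversed()
                        .thenComparing(Map.Entry.<String, Long>comparingByKey()))
                .collect(Collectors.toMap(Map.Entry::getKey, Map.Entry::getValue,
                        (a, b) -> a, LinkedHashMap::new));
    }

    public static void main(String[] args) {
        List<String> browsers = Arrays.asList("Chrome", "Firefox", "Chrome", null, "Safari", "Chrome", "Firefox");
        // one "browser, count" line per entry, most frequent first
        tally(browsers).forEach((name, count) -> System.out.println(name + ", " + count));
    }
}
```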

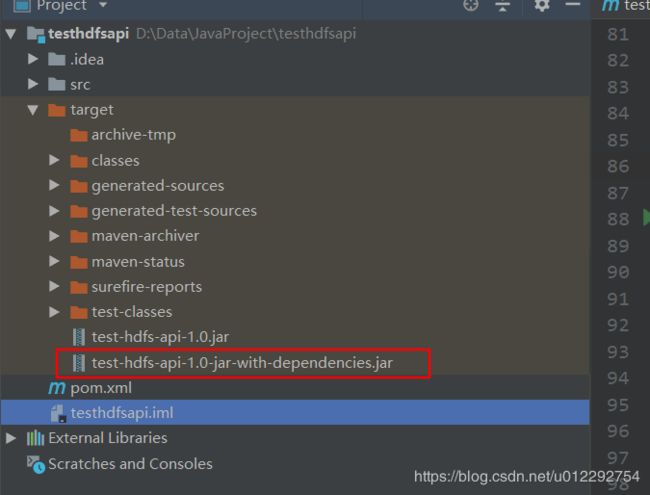

3 Implementing It with MapReduce

3.1 The pom File

```xml
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>

    <groupId>com.bzt.cn</groupId>
    <artifactId>test-hdfs-api</artifactId>
    <version>1.0</version>
    <name>test-hdfs-api</name>
    <url>http://www.example.com</url>

    <properties>
        <project.build.sourceEncoding>UTF-8</project.build.sourceEncoding>
        <maven.compiler.source>1.8</maven.compiler.source>
        <maven.compiler.target>1.8</maven.compiler.target>
        <hadoop.version>2.6.0-cdh5.7.0</hadoop.version>
    </properties>

    <repositories>
        <repository>
            <id>cloudera</id>
            <url>https://repository.cloudera.com/artifactory/cloudera-repos/</url>
        </repository>
    </repositories>

    <dependencies>
        <!-- provided: the cluster supplies Hadoop at runtime, so it is left out of the assembled jar -->
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>${hadoop.version}</version>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>com.kumkee</groupId>
            <artifactId>UserAgentParser</artifactId>
            <version>0.0.1</version>
        </dependency>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.11</version>
            <scope>test</scope>
        </dependency>
    </dependencies>

    <build>
        <plugins>
            <!-- builds a jar-with-dependencies that bundles UserAgentParser -->
            <plugin>
                <artifactId>maven-assembly-plugin</artifactId>
                <configuration>
                    <descriptorRefs>
                        <descriptorRef>jar-with-dependencies</descriptorRef>
                    </descriptorRefs>
                    <archive>
                        <manifest>
                            <mainClass>urlcount.BrowserCount</mainClass>
                        </manifest>
                    </archive>
                </configuration>
                <executions>
                    <execution>
                        <id>make-assembly</id>
                        <phase>package</phase>
                        <goals>
                            <goal>single</goal>
                        </goals>
                    </execution>
                </executions>
            </plugin>
            <plugin>
                <groupId>org.apache.maven.plugins</groupId>
                <artifactId>maven-compiler-plugin</artifactId>
                <version>3.7.0</version>
                <configuration>
                    <source>1.8</source>
                    <target>1.8</target>
                </configuration>
            </plugin>
            <plugin>
                <artifactId>maven-clean-plugin</artifactId>
                <version>3.0.0</version>
            </plugin>
            <plugin>
                <artifactId>maven-resources-plugin</artifactId>
                <version>3.0.2</version>
            </plugin>
            <plugin>
                <artifactId>maven-surefire-plugin</artifactId>
                <version>2.20.1</version>
            </plugin>
            <plugin>
                <artifactId>maven-jar-plugin</artifactId>
                <version>3.0.2</version>
            </plugin>
            <plugin>
                <artifactId>maven-install-plugin</artifactId>
                <version>2.5.2</version>
            </plugin>
            <plugin>
                <artifactId>maven-deploy-plugin</artifactId>
                <version>2.8.2</version>
            </plugin>
        </plugins>
    </build>
</project>
```

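3.2 Source Code

The mapper emits (browser, 1) for every log line, and the reducer sums these ones per browser.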

```java
package urlcount;

import com.kumkee.userAgent.UserAgent;
import com.kumkee.userAgent.UserAgentParser;
import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import java.io.IOException;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

public class BrowserCount {

    public static class MyMapper extends Mapper<LongWritable, Text, Text, LongWritable> {

        private final LongWritable one = new LongWritable(1);
        private UserAgentParser userAgentParser;

        @Override
        protected void setup(Context context) throws IOException, InterruptedException {
            userAgentParser = new UserAgentParser();
        }

        @Override
        protected void map(LongWritable key, Text value, Context context) throws IOException, InterruptedException {
            // one log line
            String line = value.toString();
            // the user agent starts right after the 7th double quote
            int quotePos = getCharacterPosition(line, "\"", 7);
            if (quotePos < 0) {
                return; // skip malformed lines instead of failing the task
            }
            String source = line.substring(quotePos + 1);
            UserAgent agent = userAgentParser.parse(source);
            String browser = agent.getBrowser();
            // defensive: Text cannot wrap null, so an unrecognized browser gets a placeholder key
            context.write(new Text(browser == null ? "unknown" : browser), one);
        }

        @Override
        protected void cleanup(Context context) throws IOException, InterruptedException {
            userAgentParser = null;
        }
    }

    /*
     * Returns the position of the index-th (1-based) occurrence of operator in value,
     * or -1 if operator occurs fewer than index times.
     */
    private static int getCharacterPosition(String value, String operator, int index) {
        // Pattern.quote matches operator literally instead of as a regex
        Matcher slashMatcher = Pattern.compile(Pattern.quote(operator)).matcher(value);
        int mIdx = 0;
        while (slashMatcher.find()) {
            mIdx++;
            if (mIdx == index) {
                return slashMatcher.start();
            }
        }
        return -1;
    }

    /*
     * Reducer: sums the counts emitted for each browser
     */
    public static class MyReducer extends Reducer<Text, LongWritable, Text, LongWritable> {

        @Override
        protected void reduce(Text key, Iterable<LongWritable> values, Context context) throws IOException, InterruptedException {
            // local variable, so the total restarts at 0 for every key
            long sum = 0;
            for (LongWritable value : values) {
                sum += value.get();
            }
            context.write(key, new LongWritable(sum));
        }
    }

    public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException {
        Configuration conf = new Configuration();

        // delete the output directory if it already exists
        Path outputPath = new Path(args[1]);
        FileSystem fileSystem = FileSystem.get(conf);
        if (fileSystem.exists(outputPath)) {
            fileSystem.delete(outputPath, true); // true = recursive
            System.out.println("output file deleted!");
        }

        // create the job
        Job job = Job.getInstance(conf, "BrowserCount");
        // the job jar is located through this class
        job.setJarByClass(BrowserCount.class);

        // input path of the job
        FileInputFormat.setInputPaths(job, new Path(args[0]));

        // map-side settings
        job.setMapperClass(MyMapper.class);
        job.setMapOutputKeyClass(Text.class);
        job.setMapOutputValueClass(LongWritable.class);

        // reduce-side settings
        job.setReducerClass(MyReducer.class);
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);

        // output path of the job
        FileOutputFormat.setOutputPath(job, outputPath);

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
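A single reducer object processes many keys in turn. Its running total must therefore start at zero for each key. To see why, the map, shuffle and reduce steps can be imitated in memory. This is a plain-Java simulation, not Hadoop code. The class and method names are hypothetical, and a `TreeMap` stands in for the framework's sorting and grouping of map output keys. `runWithSharedSum` mimics a total kept in a field, which carries over from one key to the next.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class LocalMapReduceSim {

    // "map": emit (browser, 1) for every record
    static List<Map.Entry<String, Long>> map(List<String> browsers) {
        List<Map.Entry<String, Long>> out = new ArrayList<>();
        for (String b : browsers) {
            out.add(new SimpleEntry<>(b, 1L));
        }
        return out;
    }

    // "shuffle": group values by key; TreeMap keeps keys sorted
    static TreeMap<String, List<Long>> shuffle(List<Map.Entry<String, Long>> pairs) {
        TreeMap<String, List<Long>> grouped = new TreeMap<>();
        for (Map.Entry<String, Long> p : pairs) {
            grouped.computeIfAbsent(p.getKey(), k -> new ArrayList<>()).add(p.getValue());
        }
        return grouped;
    }

    // "reduce": sum is local, so it starts at 0 for every key
    static long reduce(Iterable<Long> values) {
        long sum = 0;
        for (long v : values) {
            sum += v;
        }
        return sum;
    }

    static TreeMap<String, Long> run(List<String> browsers) {
        TreeMap<String, Long> result = new TreeMap<>();
        shuffle(map(browsers)).forEach((k, vs) -> result.put(k, reduce(vs)));
        return result;
    }

    // For contrast: a total kept in a field is never reset between keys
    private static long sharedSum;

    static long reduceWithSharedSum(Iterable<Long> values) {
        for (long v : values) {
            sharedSum += v;
        }
        return sharedSum;
    }

    static TreeMap<String, Long> runWithSharedSum(List<String> browsers) {
        sharedSum = 0; // one fresh "reducer object" per run
        TreeMap<String, Long> result = new TreeMap<>();
        shuffle(map(browsers)).forEach((k, vs) -> result.put(k, reduceWithSharedSum(vs)));
        return result;
    }

    public static void main(String[] args) {
        List<String> in = Arrays.asList("Chrome", "Firefox", "Chrome", "Safari");
        System.out.println(run(in));
        System.out.println(runWithSharedSum(in));
    }
}
```

With the input `Chrome, Firefox, Chrome, Safari`, `run` gives Chrome=2, Firefox=1, Safari=1. `runWithSharedSum` instead reports the cumulative totals 2, 3 and 4, because keys are processed in sorted order.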