图像配准融合(一)——基于互信息的图像配准方法(c++)

1、内容简介

图像配准方法按照其算法原理可以分为:基于灰度信息的配准、基于变换域信息的配准、基于特征信息的配准

(本人实验主要集中在基于灰度信息的配准以及基于特征信息的配准两类方法,对基于变换域信息的配准不熟悉,以下不涉及该方面内容的讨论。本篇主要是基于互信息的图像配准与融合(属于基于灰度信息配准与融合)实验的一些记录;下一篇主要介绍基于特征信息的配准与融合。)

图像配准的互信息基础含义,参照https://blog.csdn.net/WillVictory/article/details/5470401?locationNum=5&fps=1该博客

简单记录如下:1.互信息是信息论里一种有用的信息度量,它是指两个事件集合之间的相关性。

2.A、B两幅图像的互信息I最大时,即相关性最大时,则两幅图像配准。

2.代码部分

基于互信息的图像配准算法常用于医学图像配准中,在github里可以找到大神使用c++实现的该配准方法

附上大神实现链接https://github.com/mariusherzog/ImageRegistration

代码中对两幅图像直接融合、两幅图像先使用基于互信息的方法配准再融合的结果进行了对比。

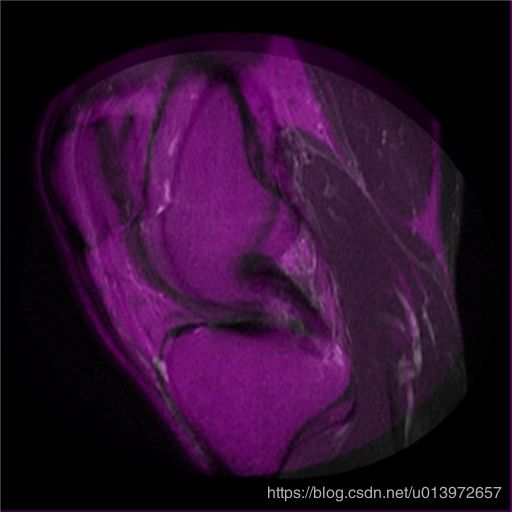

对下面两幅图1、图2(图源来自网络)使用该代码获得融合结果如下:

图1 图2

下面左图是直接融合,右图是先配准再融合效果。(融合图中图1(Base image)保留原色,图2(Float image)或图2的转换图像保留B、R通道呈现紫色)

本人对链接中代码稍作整理,其中基于互信息的配准的函数如下:

cv::Mat register_images(cv::Mat ref, cv::Mat flt)//配准,返回配准图像

{

cv::Size origsize(512, 512);

cv::resize(ref, ref, origsize);

if (is_inverted(ref, flt)) {//判断flt的图像是不是需要转置

cv::bitwise_not(flt, flt);//bitwise_not是对二进制数据进行“非”操作

}

cv::Size ksize(5, 5);

cv::GaussianBlur(ref, ref, ksize, 10, 10);

cv::GaussianBlur(flt, flt, ksize, 10, 10);

auto max_size = ref.size();

cv::resize(flt, flt, max_size);

double tx, ty, a11, a12, a21, a22;

estimate_initial(ref, flt, tx, ty, a11, a12, a21, a22);

std::vector init{ tx, ty, a11, a12, a21, a22 };

std::vector rng{ 80.0, 80.0, 1.0, 1.0, 1.0, 1.0 };

std::pair::iterator, std::vector::iterator> o{ init.begin(), init.end() };

optimize_powell(o, std::pair::iterator, std::vector::iterator>{rng.begin(), rng.end()}, std::bind(cost_function, ref, flt, std::placeholders::_1));

tx = init[0];

ty = init[1];

a11 = init[2];

a12 = init[3];

a21 = init[4];

a22 = init[5];

cv::Mat fin = transform(flt, tx, ty, a11, a12, a21, a22);

double mutual_inf = mutual_information(ref, fin);

std::cout << exp(-mutual_inf) << "*** \n";

return fin.clone();

}

以上涉及is_inverted函数、estimate_initial函数、optimize_powell函数、cost_function函数、transform函数、 mutual_information函数。下面是整理的全部代码(该代码都整理自上边链接中的代码,其中实现细节并没有深究。。):

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

#include

using namespace cv;

using namespace std;

float alpha = 0.5;

//optimize_powell函数

template

T optimize_goldensectionsearch(T init, T rng, F function)

{

T sta = init - 0.382*rng;

T end = init + 0.618*rng;

T c = (end - (end - sta) / 1.618);

T d = (sta + (end - sta) / 1.618);

while (fabs(c - d) > 0.005) {

if (function(c) < function(d)) {

end = d;

}

else {

sta = c;

}

c = (end - (end - sta) / 1.618);

d = (sta + (end - sta) / 1.618);

}

return (end + sta) / 2;

}

/**

* @brief optimize_powell is a strategy to optimize a parameter space for a

* given cost function

* @param init range with the initial values, optimized values are stored in

* there when the function returns

* @param rng range containing the ranges in which each parameter is optimized

* @param cost_function cost function for which the parameters are optimized

*/

template

void optimize_powell(std::pair init,std::pair rng,Cf cost_function)

{

using TPS = typename std::remove_reference::type;

bool converged = false;

const double eps = 0.00005;

double last_mutualinf = 100000.0;

while (!converged) {

converged = true;

for (auto it = init.first; it != init.second; ++it) {

std::size_t pos = it - init.first;

auto curr_param = init.first[pos];

auto curr_rng = rng.first[pos];

auto fn = [pos, init, &cost_function](TPS p)

{

init.first[pos] = p;

return cost_function(init.first);

};

auto param_optimized = optimize_goldensectionsearch(curr_param, curr_rng, fn);

auto curr_mutualinf = cost_function(init.first);

init.first[pos] = curr_param;

if (last_mutualinf - curr_mutualinf > eps) {

*it = param_optimized;

last_mutualinf = curr_mutualinf;

converged = false;

}

else {

*it = curr_param;

}

}

}

}

//transform函数

static cv::Mat transform(cv::Mat image, double tx, double ty, double a11, double a12, double a21, double a22)

{

cv::Mat trans_mat = (cv::Mat_(2, 3) << a11, a12, tx, a21, a22, ty);

cv::Mat out = image.clone();

cv::Scalar borderColor = Scalar(255, 255, 255);

warpAffine(image, out, trans_mat, image.size(), INTER_LINEAR, BORDER_CONSTANT, borderColor);

return out;

}

//is_inverted函数

static bool is_inverted(cv::Mat ref, cv::Mat flt)

{

//灰度直方图

std::vector hist_ref(256, 0);

std::vector hist_flt(256, 0);

for (int i = 0; i(i, j);

hist_ref[val]++;

}

}

for (int i = 0; i(i, j);

hist_flt[val]++;

}

}

//transform将某操作应用于指定范围的每一个元素

std::transform(hist_ref.begin(), hist_ref.end(), hist_ref.begin(), [&ref](int val) { return 1.0*val / (1.0*ref.cols*ref.rows); });

std::transform(hist_flt.begin(), hist_flt.end(), hist_flt.begin(), [&flt](int val) { return 1.0*val / (1.0*flt.cols*flt.rows); });

std::vector distances(256, 0);

std::transform(hist_ref.begin(), hist_ref.end(), hist_flt.begin(), distances.begin(),

[](double ref_val, double flt_val) { return fabs(ref_val - flt_val); });

double distance_flt = std::accumulate(distances.begin(), distances.end(), 0.0);

// invert

std::reverse(hist_flt.begin(), hist_flt.end());//reverse 逆序反转

std::transform(hist_ref.begin(), hist_ref.end(), hist_flt.begin(), distances.begin(),

[](double ref_val, double inv_val) { return fabs(ref_val - inv_val); });

double distance_inv = std::accumulate(distances.begin(), distances.end(), 0.0);

return distance_flt > distance_inv;

}

//estimate_initial函数

static void estimate_initial(cv::Mat ref, cv::Mat flt,double& tx, double& ty,double& a11, double& a12, double& a21, double& a22)//估算初始值

{

cv::Moments im_mom = moments(ref);//计算图像的中心距

cv::Moments pt_mom = moments(flt);

cv::Mat ref_bin = ref.clone();

cv::Mat flt_bin = flt.clone();

cv::threshold(ref, ref_bin, 40, 256, 0);

cv::threshold(flt, flt_bin, 40, 256, 0);

cv::Moments ref_mom_bin = moments(ref_bin);

cv::Moments flt_mom_bin = moments(flt_bin);

double pt_avg_10 = pt_mom.m10 / pt_mom.m00;

double pt_avg_01 = pt_mom.m01 / pt_mom.m00;

double pt_mu_20 = (pt_mom.m20 / pt_mom.m00*1.0) - (pt_avg_10*pt_avg_10);

double pt_mu_02 = (pt_mom.m02 / pt_mom.m00*1.0) - (pt_avg_01*pt_avg_01);

double pt_mu_11 = (pt_mom.m11 / pt_mom.m00*1.0) - (pt_avg_01*pt_avg_10);

double im_avg_10 = im_mom.m10 / im_mom.m00;

double im_avg_01 = im_mom.m01 / im_mom.m00;

double im_mu_20 = (im_mom.m20 / im_mom.m00*1.0) - (im_avg_10*im_avg_10);

double im_mu_02 = (im_mom.m02 / im_mom.m00*1.0) - (im_avg_01*im_avg_01);

double im_mu_11 = (im_mom.m11 / im_mom.m00*1.0) - (im_avg_01*im_avg_10);

tx = im_mom.m10 / im_mom.m00 - pt_mom.m10 / pt_mom.m00;

ty = im_mom.m01 / im_mom.m00 - pt_mom.m01 / pt_mom.m00;

double scale = ref_mom_bin.m00 / flt_mom_bin.m00;

double rho = 0.5f * atan((2.0*pt_mu_11) / (pt_mu_20 - pt_mu_02));

double rho_im = 0.5f * atan((2.0*im_mu_11) / (im_mu_20 - im_mu_02));

const double rho_diff = rho_im - rho;

const double roundness = (pt_mom.m20 / pt_mom.m00) / (pt_mom.m02 / pt_mom.m00);

if (abs(roundness - 1.0) >= 0.3) {

a11 = cos(rho_diff);

a12 = -sin(rho_diff);

a21 = sin(rho_diff);

a22 = cos(rho_diff);

}

else {

a11 = 1.0;

a12 = 0.0;

a21 = 0.0;

a22 = 1.0;

}

}

//mutual_information函数

static double mutual_information(cv::Mat ref, cv::Mat flt)

{

cv::Mat joint_histogram(256, 256, CV_64FC1, cv::Scalar(0));

for (int i = 0; i(j, i);

int flt_intensity = flt.at(j, i);

joint_histogram.at(ref_intensity, flt_intensity) = joint_histogram.at(ref_intensity, flt_intensity) + 1;

double v = joint_histogram.at(ref_intensity, flt_intensity);

}

}

for (int i = 0; i<256; ++i) {

for (int j = 0; j<256; ++j) {

joint_histogram.at(j, i) = joint_histogram.at(j, i) / (1.0*ref.rows*ref.cols);

double v = joint_histogram.at(j, i);

}

}

cv::Size ksize(7, 7);

cv::GaussianBlur(joint_histogram, joint_histogram, ksize, 7, 7);

double entropy = 0.0;

for (int i = 0; i<256; ++i) {

for (int j = 0; j<256; ++j) {

double v = joint_histogram.at(j, i);

if (v > 0.000000000000001) {

entropy += v*log(v) / log(2);

}

}

}

entropy *= -1;

// std::cout << entropy << "###";

std::vector hist_ref(256, 0.0);

for (int i = 0; i(i, j);

}

}

cv::Size ksize2(5, 0);

// cv::GaussianBlur(hist_ref, hist_ref, ksize2, 5);

std::vector hist_flt(256, 0.0);

for (int i = 0; i(j, i);

}

}

// cv::GaussianBlur(hist_flt, hist_flt, ksize2, 5);

double entropy_ref = 0.0;

for (int i = 0; i<256; ++i) {

if (hist_ref[i] > 0.000000000001) {

entropy_ref += hist_ref[i] * log(hist_ref[i]) / log(2);

}

}

entropy_ref *= -1;

//std::cout << entropy_ref << "~~ ";

double entropy_flt = 0.0;

for (int i = 0; i<256; ++i) {

if (hist_flt[i] > 0.000000000001) {

entropy_flt += hist_flt[i] * log(hist_flt[i]) / log(2);

}

}

entropy_flt *= -1;

// std::cout << entropy_flt << "++ ";

double mutual_information = entropy_ref + entropy_flt - entropy;

return mutual_information;

}

//cost_function函数

static double cost_function(cv::Mat ref, cv::Mat flt,

std::vector::iterator affine_params)

{

const double tx = affine_params[0];

const double ty = affine_params[1];

const double a11 = affine_params[2];

const double a12 = affine_params[3];

const double a21 = affine_params[4];

const double a22 = affine_params[5];

return exp(-mutual_information(ref, transform(flt, tx, ty, a11, a12, a21, a22)));

}

//register_images配准图像

cv::Mat register_images(cv::Mat ref, cv::Mat flt)

{

cv::Size origsize(512, 512);

cv::resize(ref, ref, origsize);

if (is_inverted(ref, flt)) {//判断flt的图像是不是需要值进行取反

cv::bitwise_not(flt, flt);//bitwise_not是对二进制数据进行“非”操作

}

cv::Size ksize(5, 5);

cv::GaussianBlur(ref, ref, ksize, 10, 10);

cv::GaussianBlur(flt, flt, ksize, 10, 10);

auto max_size = ref.size();

cv::resize(flt, flt, max_size);

double tx, ty, a11, a12, a21, a22;

estimate_initial(ref, flt, tx, ty, a11, a12, a21, a22);

std::vector init{ tx, ty, a11, a12, a21, a22 };

std::vector rng{ 80.0, 80.0, 1.0, 1.0, 1.0, 1.0 };

std::pair::iterator, std::vector::iterator> o{ init.begin(), init.end() };

optimize_powell(o, std::pair::iterator, std::vector::iterator>{rng.begin(), rng.end()}, std::bind(cost_function, ref, flt, std::placeholders::_1));

tx = init[0];

ty = init[1];

a11 = init[2];

a12 = init[3];

a21 = init[4];

a22 = init[5];

cv::Mat fin = transform(flt, tx, ty, a11, a12, a21, a22);

double mutual_inf = mutual_information(ref, fin);

std::cout << exp(-mutual_inf) << "*** \n";

return fin.clone();

}

//对图像融合的具体过程,将基准图也配有颜色展现,基准图为绿色、转换图为紫色

cv::Mat fuse(cv::Mat ref, cv::Mat flt)

{

//assert(abs(alpha) < 1.0);

cv::Mat color(flt.cols, flt.rows, CV_8UC3);

cv::cvtColor(flt, color, cv::COLOR_GRAY2BGR);

cv::Mat channel[3];

split(color, channel);

//channel[0] = cv::Mat::zeros(flt.rows, flt.cols, CV_8UC1);

channel[1] = cv::Mat::zeros(flt.rows, flt.cols, CV_8UC1);

merge(channel, 3, color);

cv::Mat color1(ref.cols, ref.rows, CV_8UC3);

cv::cvtColor(ref, color1, cv::COLOR_GRAY2BGR);

cv::Mat channel1[3];

split(color1, channel1);

channel1[0] = cv::Mat::zeros(ref.rows, ref.cols, CV_8UC1);

channel1[2] = cv::Mat::zeros(ref.rows, ref.cols, CV_8UC1);

merge(channel1, 3, color1);

const double beta = 1 - alpha;

cv::Mat dst = color1.clone();

cv::addWeighted(color1, alpha, color, beta, 0.0, dst);

return dst;

}

//flt不配准的情况

Mat register_images1(cv::Mat, cv::Mat flt)

{

return flt.clone();

}

//图像融合

Mat fuse_images(Mat ref, Mat flt, string register_strategy)

{

using namespace cv;

Size origsize(512, 512);

resize(ref, ref, origsize);

resize(flt, flt, origsize);

// register to align images

Mat fused;

if (register_strategy == "mutualinformation")

{

Mat fin = register_images(ref, flt);

// now do the fusion

fused = fuse(ref, fin);

}

else

{

Mat fin = register_images1(ref, flt);

fused = fuse(ref, fin);

}

return fused;

}

//程序配准融合的调用入口

Mat perform_fusion_from_files(string path_reference_image, string path_floating_image, string register_strategy)

{

Mat reference_image = imread(path_reference_image, 0);

Mat floating_image = imread(path_floating_image, 0);

return fuse_images(reference_image, floating_image, register_strategy);

}

int main()

{

string refpath = "1.png";

string fltpath = "2.png";

//通过配准融合

Mat fused = perform_fusion_from_files(refpath, fltpath, "mutualinformation");

//不配准直接融合

Mat fused_unregistered = perform_fusion_from_files(refpath, fltpath, "identity");

imwrite("配准融合图像.png", fused);

imwrite("不配准直接融合.png", fused_unregistered);

return 0;

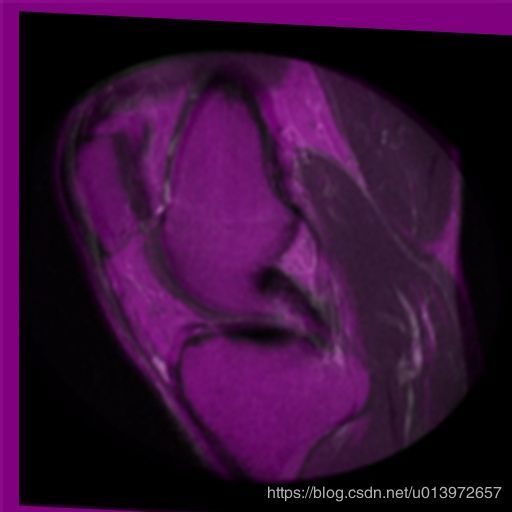

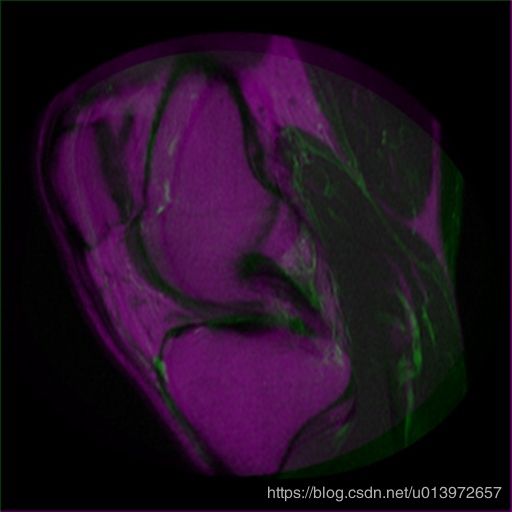

} 在以上的融合中,将基准图也配有颜色展现,基准图为绿色、转换图为紫色,便于观察,下面附上上述代码的结果图

配准融合图像 不配准直接融合图像

文中若有错误以及不妥之处,还望指出,以便共同学习。